Hi, if you're reading this, I hope you're having a good day. Now, onto the actual topic: what happens when you replace a model's CLIP with a different one?

Note 1: Throughout this article, I will be using CLIP and text encoder interchangeably.

Note 2: I am the maker of the OpenSolera model, which I try to make a little better with every update, so most of my testing will revolve around this model. If anyone wants one of the experimental CLIP merges without installing A1111 and Model Toolkit and doing it themselves, tell me in the comments and I'll upload them.

If you're here for the images, click this Google Drive link: https://drive.google.com/drive/folders/1X-f4WnTmsFuTyAbC-hmC15xnDpyFAVgO?usp=sharing

Preamble/Rant

One of the things I noticed during my merges is that base_alpha doesn't really change. I read somewhere that setting it to anything other than 0 or 1 might corrupt the text encoder. So far, OpenSolera (a.k.a. my merge) follows this advice pretty closely, never setting base_alpha to anything but 0 or 1 during MBW merges. However, given the (slightly) lackluster results I had with Alpha 2, I thought about simply using Alpha 1's text encoder for Alpha 2. Before that, though, I wanted to try something a bit weirder: using Alpha 2, but with the CLIP from some of the original models.

Now, I haven't seen anyone do this on here yet (or maybe I just haven't looked around enough), but it's an interesting idea to me. Also, it's really hard to improve my models when:

a) It feels like any other generic SD1.5 anime model on this platform (that's kind of the point, but it should be more versatile than that)

b) I have no idea where to start fixing a model if I don't know why it's broken in the first place

So, I came up with this (frankly stupid) idea to satisfy that curiosity, see where it goes, and actually stress test my model properly for once.
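For context on base_alpha: as far as I understand it, in Merge Block Weighted (MBW) style merges it is the interpolation weight for the weights outside the UNet blocks, which includes the text encoder. Below is a minimal sketch of that weighted sum, assuming a checkpoint is a plain mapping from key names to tensors, that text-encoder keys start with `cond_stage_model.` (the usual SD1.x layout), and the common convention `merged = (1 - alpha) * A + alpha * B`. It shows why 0 and 1 are the "safe" values: they copy one model's CLIP verbatim, while anything in between produces a blended text encoder that neither source model was trained with.

```python
# Assumption: SD1.x LDM-format checkpoints keep the CLIP text encoder under this prefix.
TEXT_ENCODER_PREFIX = "cond_stage_model."


def blend_text_encoder(model_a, model_b, base_alpha, prefix=TEXT_ENCODER_PREFIX):
    """Weighted-sum merge of the text encoder only; every other key comes from model_a.

    base_alpha = 0.0 keeps model_a's CLIP unchanged, 1.0 copies model_b's CLIP.
    Values can be floats or torch tensors, since only * and + are used.
    """
    if not 0.0 <= base_alpha <= 1.0:
        raise ValueError("base_alpha must be between 0 and 1")
    merged = dict(model_a)  # shallow copy so model_a itself is not modified
    for key, value_a in model_a.items():
        if key.startswith(prefix):
            merged[key] = (1.0 - base_alpha) * value_a + base_alpha * model_b[key]
    return merged
```

With toy float "weights", `blend_text_encoder(a, b, 0.5)` averages only the `cond_stage_model.` entries and leaves the UNet entries from `a` untouched.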

Preparation

Models used

OpenSolera - Alpha 2 (base model)

YiffyMix - v37 (CLIP donor)

Hardcore Hentai - v1.3 (CLIP donor)

ElixirProject - v1.6 (CLIP donor)

AuroraONE (CLIP donor)

OpenSolera - Alpha 1 (additional source for comparison)

Parameters

Client: SD.Next [a874b27e], torch2.3.0+cu12.1, diffusers 0.29.1, transformers 4.42.3

Resolution: 512x768, 768x512

Sampler + Scheduler: DPM++ 2M Karras, discard penultimate sigma

Step count: 20

Prompt attention parser: Full parser (SD.Next) (no normalization)

Cross attention: Scaled-Dot-Product + Math attention + Flash attention + Memory attention (this makes most of these generations basically impossible to fully replicate, unfortunately T_T)

CFG Scale: 7

CLIP Skip: 1

Seed: 1111, 2222, 3333, 4444, 5555
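The "Karras" part of the sampler setting and the "discard penultimate sigma" option both describe the noise schedule rather than the sampler itself. As a rough sketch, assuming the k-diffusion formula for the Karras schedule and A1111's behaviour for the discard option (compute the schedule for one extra step, then drop the second-to-last sigma so the step count stays the same), the schedule for these settings looks like this. SD.Next's diffusers backend may implement the option differently, and the sigma_min/sigma_max defaults are approximate SD1.x values, not settings read from SD.Next.

```python
def karras_sigmas(steps, sigma_min=0.0292, sigma_max=14.6146, rho=7.0):
    """Karras et al. noise schedule from sigma_max down to sigma_min, plus a final 0."""
    if steps < 2:
        raise ValueError("need at least 2 steps")
    min_inv = sigma_min ** (1.0 / rho)
    max_inv = sigma_max ** (1.0 / rho)
    sigmas = []
    for i in range(steps):
        t = i / (steps - 1)  # ramp from 0 to 1
        sigmas.append((max_inv + t * (min_inv - max_inv)) ** rho)
    return sigmas + [0.0]


def schedule_with_discard(steps, discard_penultimate=True):
    """Assumed A1111-style 'discard next-to-last sigma' behaviour."""
    if not discard_penultimate:
        return karras_sigmas(steps)
    sigmas = karras_sigmas(steps + 1)  # one extra step...
    return sigmas[:-2] + sigmas[-1:]   # ...then drop the second-to-last sigma


sigmas = schedule_with_discard(20)
print(len(sigmas) - 1)  # number of sampling steps -> 20
```

The practical effect is that the smallest nonzero sigma (sigma_min) is skipped, so the last step jumps from a slightly larger sigma straight to 0.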

Prompts

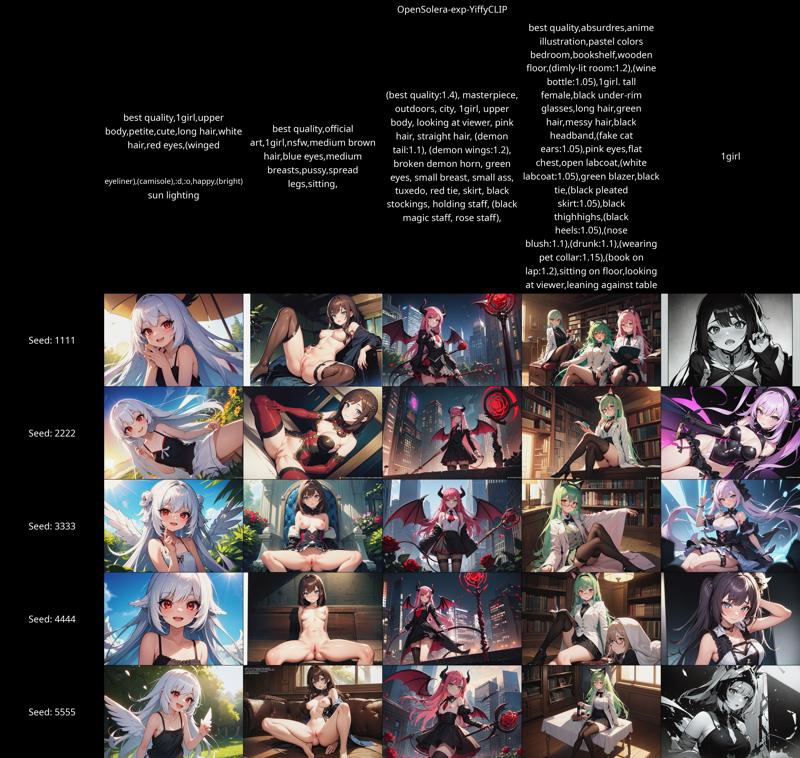

The first two are from a rentry that's now gone, the next two are my personal OC tests, and the 1girl prompt is just there as a sanity check. I also did an additional run testing whether the CLIP-replaced models could still use any of the merged LoRAs (probably not). All of them use the same negative prompt: (worse quality:1.4), (low quality:1.4)

Onto the actual test prompts:

(SFW - base prompt recognition) best quality,1girl,upper body,petite,cute,long hair,white hair,red eyes,(winged eyeliner),(camisole),:d,:o,happy,(bright) sun lighting

(NSFW - outright explicit) best quality,official art,1girl,nsfw,medium brown hair,blue eyes,medium breasts,pussy,spread legs,sitting

(NSFW...sometimes - exposed breasts) (best quality:1.4), masterpiece, outdoors, city, 1girl, upper body, looking at viewer, pink hair, straight hair, (demon tail:1.1), (demon wings:1.2), broken demon horn, green eyes, small breast, small ass, tuxedo, red tie, skirt, black stockings, holding staff, (black magic staff, rose staff),

(Should be SFW - basically a token overload test) best quality,absurdres,anime illustration,pastel colors bedroom,bookshelf,wooden floor,(dimly-lit room:1.2),(wine bottle:1.05),1girl. tall female,black under-rim glasses,long hair,green hair,messy hair,black headband,(fake cat ears:1.05),pink eyes,flat chest,open labcoat,(white labcoat:1.05),green blazer,black tie,(black pleated skirt:1.05),black thighhighs,(black heels:1.05),(nose blush:1.1),(drunk:1.1),(wearing pet collar:1.15),(book on lap:1.2),sitting on floor,looking at viewer,leaning against table

(No idea how it would go) 1girl

The following are used in a different run:

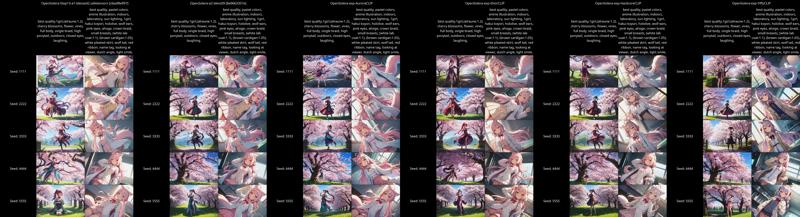

(Additional test - AIOMonsterGirl - Should be SFW, also checking whether I actually merged it in correctly) best quality,1girl, (alraune:1.2), cherry blossoms, flower, vines, full body, single braid, high ponytail, outdoors, closed eyes, laughing,

(Additional test - Hololive - SFW) best quality, pastel colors, anime illustration, indoors, laboratory, sun lighting, 1girl, hakui koyori, hololive, wolf ears, pink eyes, ahoge, crown braid, small breasts, (white lab coat:1.1), (brown cardigan:1.05), white pleated skirt, wolf tail, red ribbon, name tag, looking at viewer, dutch angle, light smile, (yes, it's the same as OpenSolera's example image, and yes, I like Koyori, though I don't think anyone cares)

Also, no embedding or textual inversion is used, which is intentional. See Yuno's "Model basis theory" for more details.

TL;DR: embeddings destroy the intrinsic style of most models that aren't Counterfeit or Counterfeit-based. Yuno only said this explicitly about EasyNegative, but just to be safe, I don't use any.
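Most of the prompts above use A1111-style attention syntax: `(text:1.2)` sets an explicit weight, and bare parentheses such as `(winged eyeliner)` multiply the weight by 1.1. The parsed weights are applied to the text encoder's per-token output, which is one reason a CLIP swap can change how strongly a weighted tag lands. Below is a deliberately simplified parser sketch that only handles single, non-nested groups; the real A1111 and SD.Next parsers also handle nesting, `[text]` de-emphasis, escaped parentheses, and more, none of which this covers.

```python
import re

# Matches "(text:1.2)" or "(text)" with no nested parentheses or colons inside.
_WEIGHTED = re.compile(r"\(([^():]+)(?::([0-9]*\.?[0-9]+))?\)")


def parse_attention(prompt: str) -> list[tuple[str, float]]:
    """Split a prompt into (text, weight) chunks; unweighted text gets 1.0."""
    chunks = []
    pos = 0
    for match in _WEIGHTED.finditer(prompt):
        if match.start() > pos:
            chunks.append((prompt[pos:match.start()], 1.0))
        text, weight = match.group(1), match.group(2)
        # An explicit ":number" wins; bare parentheses mean a 1.1x boost.
        chunks.append((text, float(weight) if weight else 1.1))
        pos = match.end()
    if pos < len(prompt):
        chunks.append((prompt[pos:], 1.0))
    return chunks
```

For example, the negative prompt used here, `(worse quality:1.4), (low quality:1.4)`, splits into two chunks weighted 1.4 separated by an unweighted `", "`.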

Extensions used + How-to

Model Toolkit (to switch CLIPs)

For the most part, this is the only extension you need. The basic steps are as follows:

Click on the "Toolkit" tab

In the "Source" section, choose the model to load into Model Toolkit, then load it

Navigate to the Advanced tab

In the Component section, select CLIP-v1 as the Class

In the Action section, select the model whose CLIP you want, then click Import

Wait a few moments, rename the new model (optional, but highly recommended for clarity), and click Save

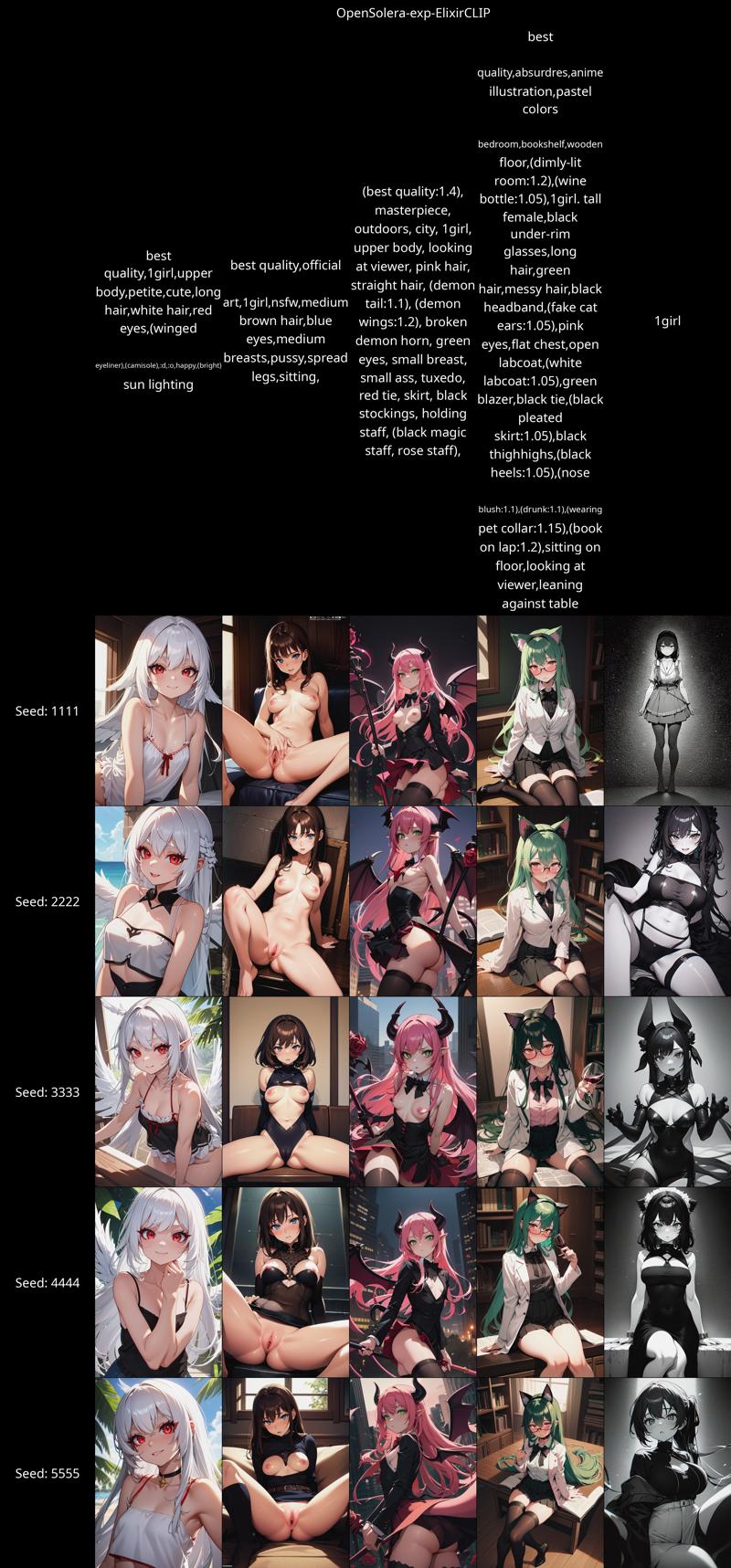

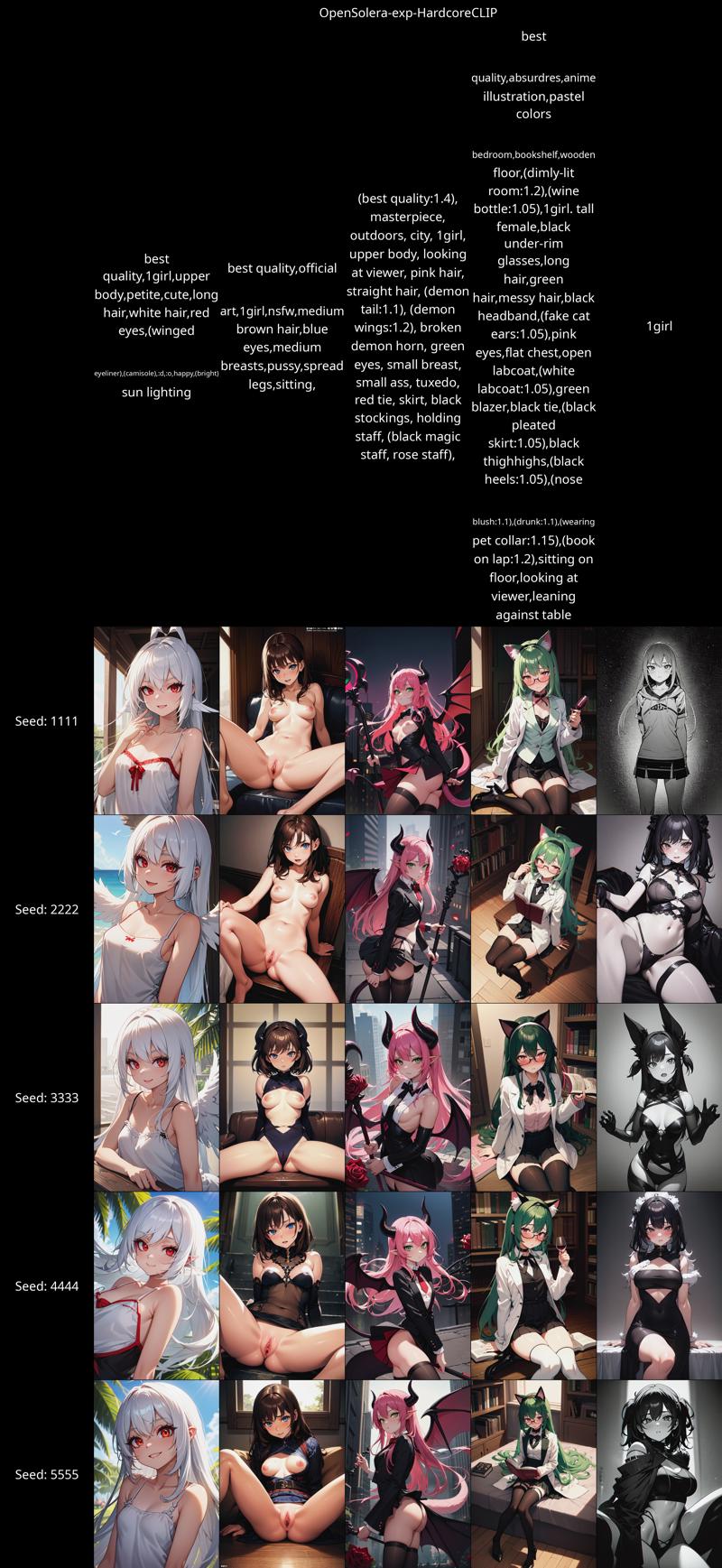

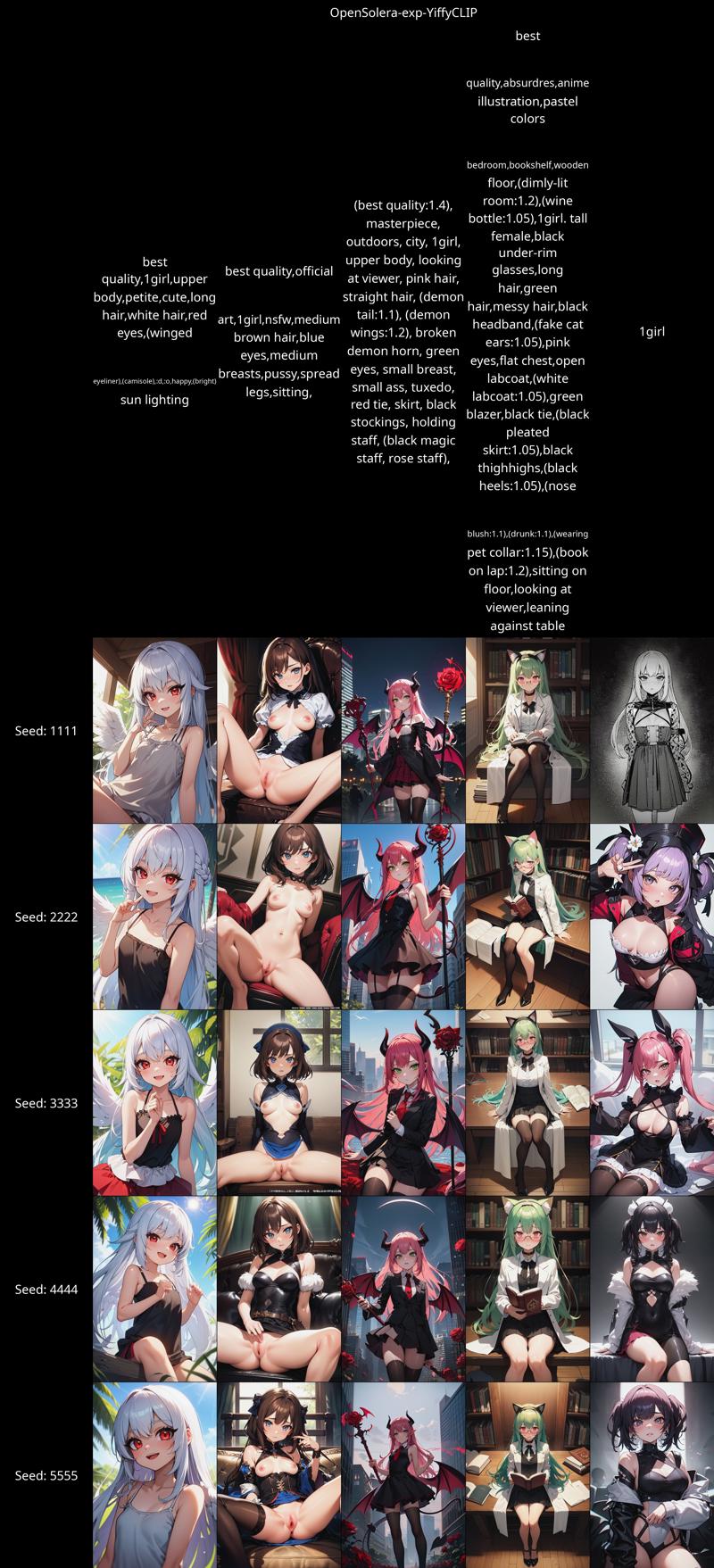

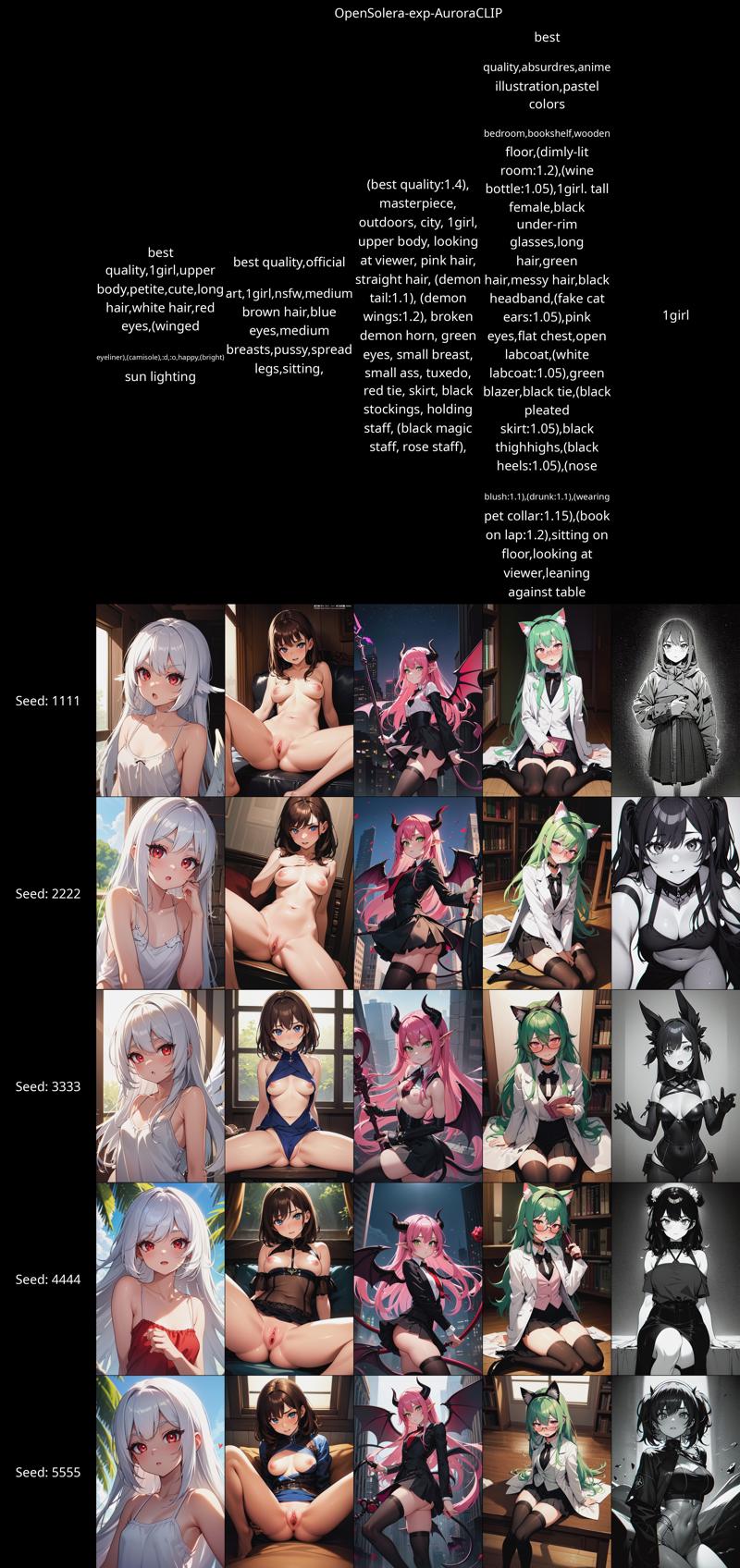

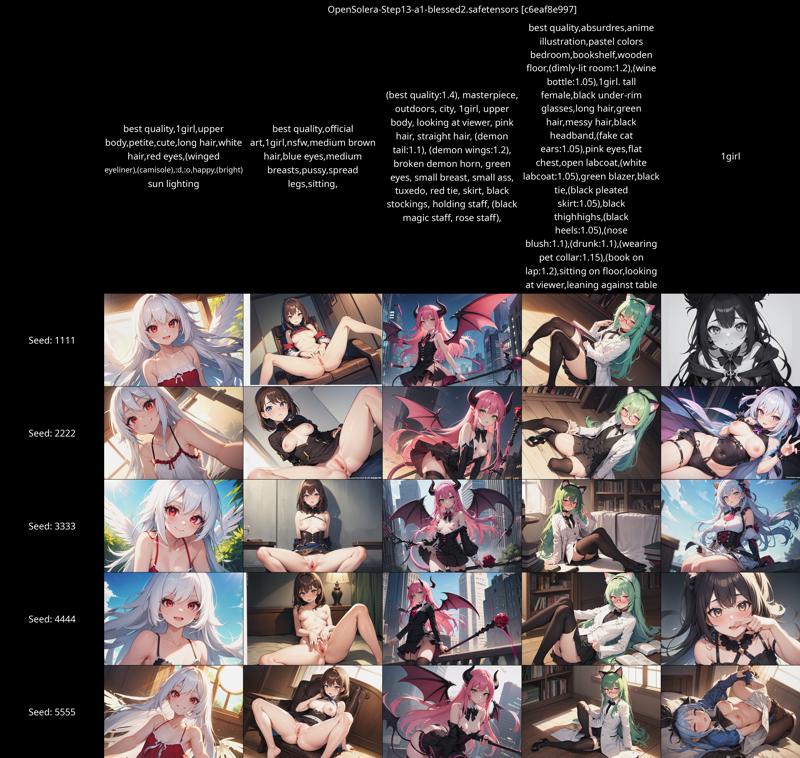

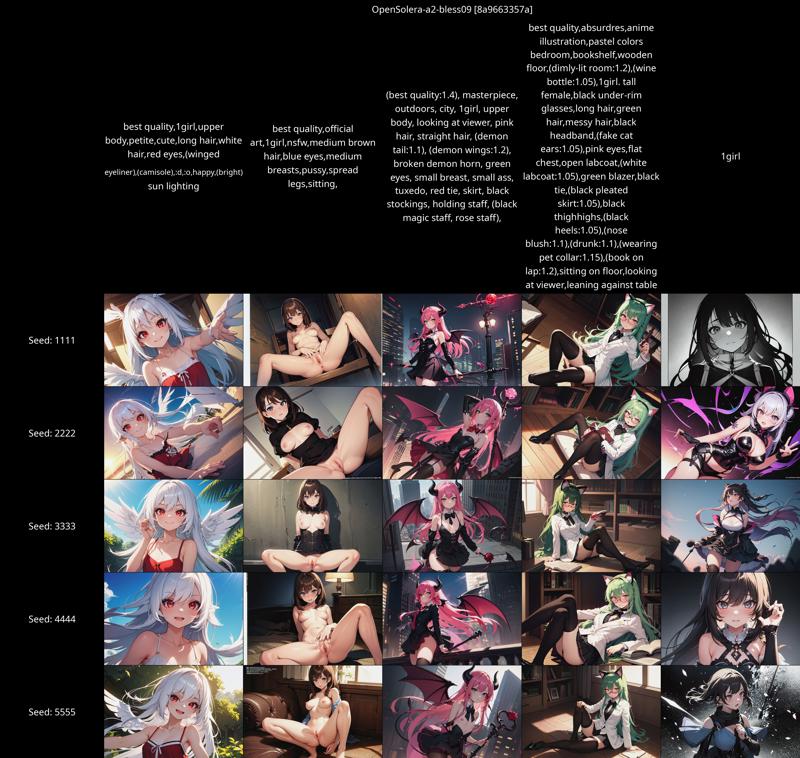

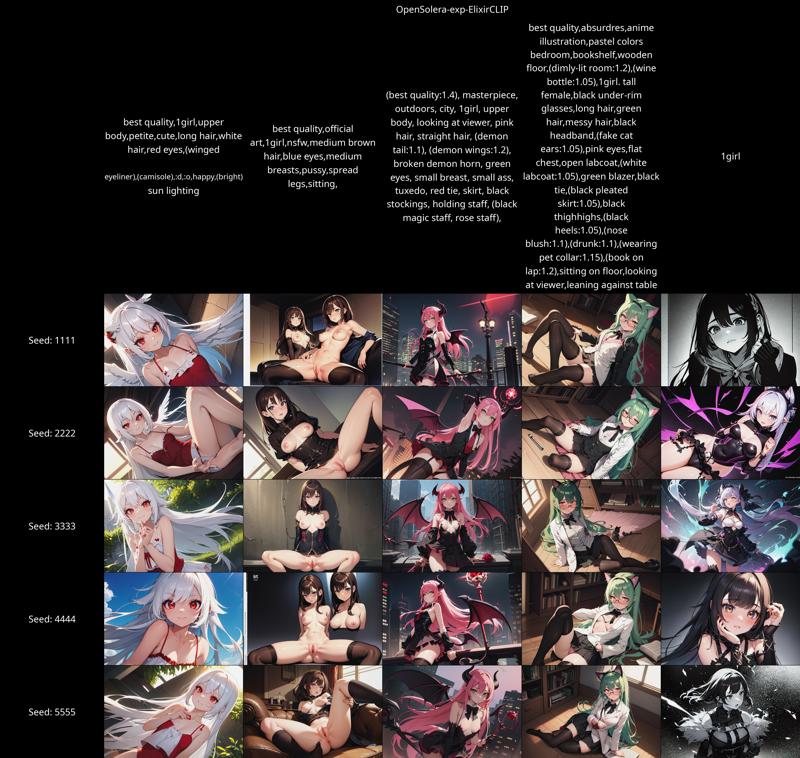

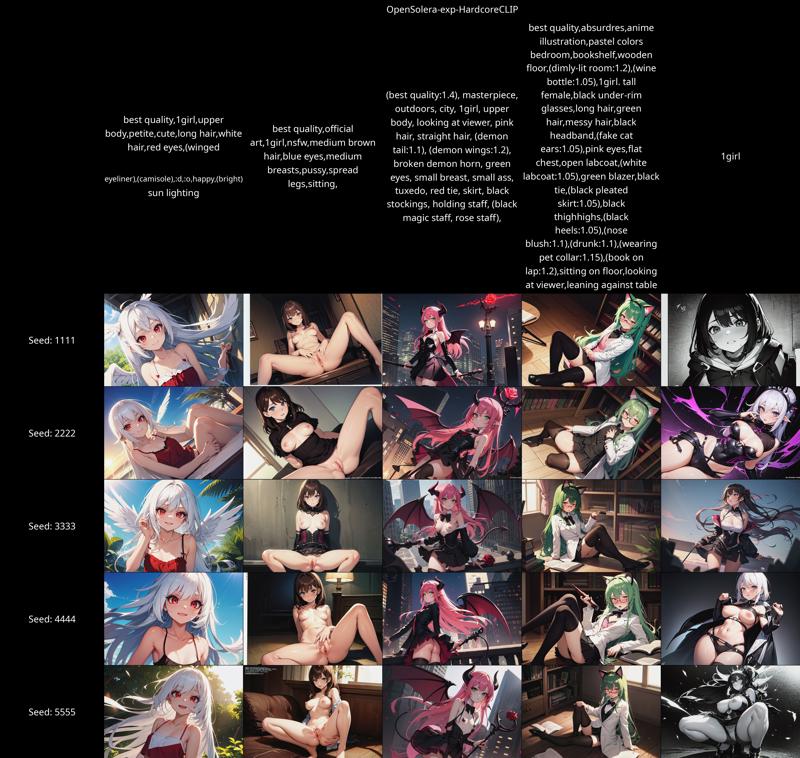

The "OpenSolera-exp" models are Alpha 2 models with their CLIP replaced.
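Conceptually, a CLIP swap like the one above presumably amounts to copying the donor's text-encoder tensors over the base model's while leaving the UNet and VAE alone. The sketch below illustrates that idea on plain dictionaries; it is not Model Toolkit's actual code. It assumes SD1.x LDM-format checkpoints whose text-encoder keys start with `cond_stage_model.`, and it refuses a donor whose text-encoder keys don't line up with the base's (for example, a different text encoder architecture).

```python
# Assumption: SD1.x LDM-format checkpoints keep the CLIP text encoder under this prefix.
TEXT_ENCODER_PREFIX = "cond_stage_model."


def _text_encoder_keys(state, prefix):
    # position_ids is a non-learned buffer that some SD1.x checkpoints omit,
    # so it is ignored when checking whether two text encoders line up.
    return {k for k in state if k.startswith(prefix) and not k.endswith("position_ids")}


def swap_text_encoder(base, donor, prefix=TEXT_ENCODER_PREFIX):
    """Return a new state dict with the base model's weights but the donor's CLIP."""
    donor_te = {k: v for k, v in donor.items() if k.startswith(prefix)}
    if not donor_te:
        raise ValueError(f"donor has no keys starting with {prefix!r}")
    if _text_encoder_keys(base, prefix) != _text_encoder_keys(donor, prefix):
        # Differing keys usually mean a different text encoder architecture.
        raise ValueError("text encoder keys differ between base and donor")
    # Keep the base UNet/VAE (everything outside the prefix), then add the donor CLIP.
    swapped = {k: v for k, v in base.items() if not k.startswith(prefix)}
    swapped.update(donor_te)
    return swapped
```

On real checkpoints, `load_file` and `save_file` from the `safetensors.torch` module can read both files into dictionaries and write the swapped result back out.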

Images

For the sake of visibility, only each model's XYZ grid is shown in the article. If anyone wants the entire pile of images and XYZ grids, I'll upload it to my Google Drive (since its actual size is far, far too big for CivitAI). Regardless, this part is going to be stupid long purely because of the length of the "overload" prompt. Also, the sorting order is basically random, sorry about that.

The CLIP experiment images are here: https://drive.google.com/drive/folders/1X-f4WnTmsFuTyAbC-hmC15xnDpyFAVgO?usp=sharing
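To give a sense of why the full image set is so big, here is the base run expressed as a Cartesian product, assuming every model in the portrait and landscape sets was rendered with all five base prompts and all five seeds. The labels are shorthand for this sketch, not real filenames or SD.Next settings, and the LoRA run is left out.

```python
from itertools import product

# Shorthand labels mirroring the lists in this article (hypothetical, not filenames).
MODELS = [
    "OpenSolera-a1",
    "OpenSolera-a2",
    "a2 + ProjectElixir CLIP",
    "a2 + Hardcore Hentai CLIP",
    "a2 + YiffyMix CLIP",
    "a2 + AuroraONE CLIP",
]
PROMPTS = ["SFW base", "NSFW explicit", "NSFW sometimes", "token overload", "1girl"]
SEEDS = [1111, 2222, 3333, 4444, 5555]
RESOLUTIONS = [(512, 768), (768, 512)]  # (width, height): portrait, then landscape


def build_jobs():
    """One generation job per model/prompt/seed/resolution combination."""
    return [
        {"model": m, "prompt": p, "seed": s, "width": w, "height": h}
        for m, p, s, (w, h) in product(MODELS, PROMPTS, SEEDS, RESOLUTIONS)
    ]


jobs = build_jobs()
print(len(jobs))  # 6 * 5 * 5 * 2 = 300
```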

Portrait - Base Sets

OpenSolera - Alpha 1

OpenSolera - Alpha 2

OpenSolera-a2 with ProjectElixir CLIP

OpenSolera-a2 with Hardcore Hentai CLIP

OpenSolera-a2 with YiffyMix CLIP

OpenSolera-a2 with AuroraONE CLIP

Landscape - Base Sets

OpenSolera-a1

OpenSolera-a2

OpenSolera-a2 with ProjectElixir CLIP

OpenSolera-a2 with Hardcore Hentai CLIP

OpenSolera-a2 with YiffyMix CLIP

OpenSolera-a2 with AuroraONE CLIP

Additional Tests (LoRA)

(Please grab the large version from Google Drive if you want a proper view of these grids)

Results/Comments

I really need to merge the LoRAs again; I kinda fucked up merging both of them into the model. I'll redo that for the next version (Alpha 3).

I genuinely did not expect the landscape images to change that much from just a CLIP replacement. Huh... that's cool. Yiffy's composition is quite good for landscape images, but Aurora stuck to my prompt better.

Portraits are more resilient to CLIP changes than I expected. Looks like I need to do my testing on landscape-oriented images as well. I quite like Aurora's CLIP on portrait pieces, but I think YiffyMix's aligns closer to what I want.

Alpha 1 is brighter, while Alpha 2 has better contrast. In my eyes, the base Alpha 2 CLIP looks like a fusion of Aurora and ProjectElixir.

I now realize I suck at making XYZ Grids...

If anyone reading this has any questions or thoughts, feel free to leave them in the comments. I do random stuff like this from time to time, mostly to test out my own models and (frankly neurotic) ideas. As for why this article is in "Musing" instead of "Comparative Study": it's more me showing off something interesting and gathering some insight from it in hindsight than outright comparing things.