Oh hey. Welcome back.

Did you come from part 1? Awesome! Happy to have you hooked for the sequel.

Usually, the sequel is not as good as the original. Well, let's see what you think after finishing this page-turner!

This is a training diary for:

Wooly Style Flux LoRA

I've uploaded each model as a separate version. There were enough donations to cover the first trainings and more, so I tried a couple of other captioning tools to see how they stack up.

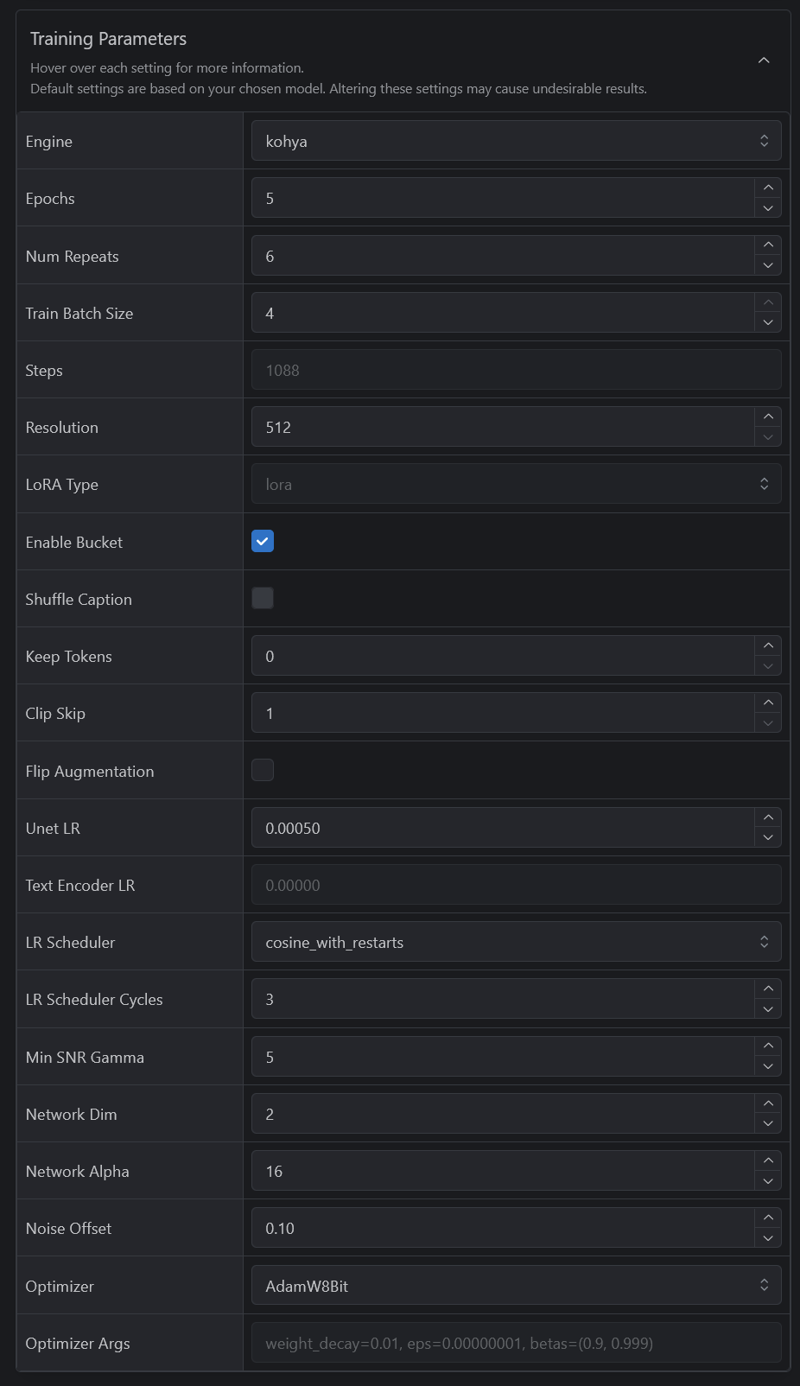

Training Settings

Each model was trained on the same 145 images, and the datasets are shared on the model pages. The differences are in the captions: each dataset has its own caption type. This guide provides one example caption for each of the 4 new types. Part 1 has the example captions for the types covered there.

I went with the recommended training settings from the Documentation.

Specifically, I adjusted the repeats to reach ~1000 training steps.
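To make the ~1000-step target concrete, here is a minimal sketch of how the step count falls out of the settings, assuming steps ≈ ceil(images × repeats × epochs / batch size). With the 145 images, 6 repeats, 5 epochs and batch size 4 from the config, that gives 1088, which matches targetSteps. Kohya batches each resolution bucket separately, so the real count can differ slightly; treat this as an estimate. The function names are made up for illustration.

```python
import math


def estimate_steps(num_images: int, repeats: int, epochs: int, batch_size: int) -> int:
    """Estimated optimizer steps: every image is seen `repeats` times per epoch."""
    samples = num_images * repeats * epochs
    # Round up: a final partial batch still counts as a step.
    return math.ceil(samples / batch_size)


def repeats_for_target(num_images: int, epochs: int, batch_size: int, target: int = 1000) -> int:
    """Smallest repeat count whose estimated step count reaches `target`."""
    repeats = 1
    while estimate_steps(num_images, repeats, epochs, batch_size) < target:
        repeats += 1
    return repeats


if __name__ == "__main__":
    print(estimate_steps(145, 6, 5, 4))   # 1088
    print(repeats_for_target(145, 5, 4))  # 6
```

With 5 repeats the estimate is only 907 steps, so 6 is the first value that clears 1000.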

This time I trained at a resolution of 512; the first 4 were trained at 1024. I don't think it has any impact at all at this level of training.

```json
{
  "engine": "kohya",
  "unetLR": 0.0005,
  "clipSkip": 1,
  "loraType": "lora",
  "keepTokens": 0,
  "networkDim": 2,
  "numRepeats": 6,
  "resolution": 512,
  "lrScheduler": "cosine_with_restarts",
  "minSnrGamma": 5,
  "noiseOffset": 0.1,
  "targetSteps": 1088,
  "enableBucket": true,
  "networkAlpha": 16,
  "optimizerType": "AdamW8Bit",
  "textEncoderLR": 0,
  "maxTrainEpochs": 5,
  "shuffleCaption": false,
  "trainBatchSize": 4,
  "flipAugmentation": false,
  "lrSchedulerNumCycles": 3
}
```

Version 5 - CogVLM

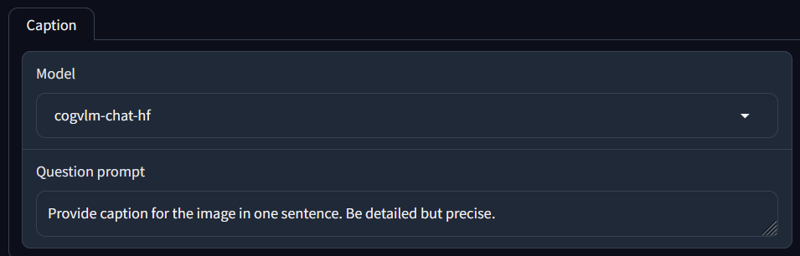

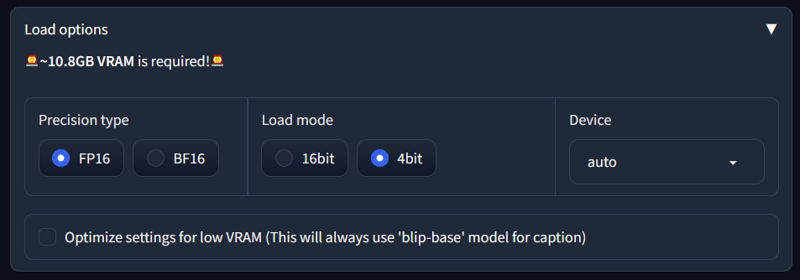

Does not use any trigger word.

This version uses CogVLM from inside the image-interrogator tool. It's fairly easy to set up and has a lot of VLMs you can try out and compare. When installing image-interrogator, follow the instructions carefully. DO NOT CREATE YOUR OWN VENV! Install with their command: install_windows.bat T. Do not follow in my footsteps :)

With this tool, you can set a prompt instruction. I used the default prompt.

Example caption:

A meticulously crafted crochet castle with red turrets stands atop a verdant hill, surrounded by mountains, trees, and a winding pathway made of yarn

I think this is a very high quality caption. It's precise and focuses on the right parts of the image without being bloated. It doesn't cover everything, but I'm not convinced full coverage gives the best result either.

Since the default prompt asks it to condense the information into one sentence, the caption may be a bit too short, but I still think it's very strong like this.

Overall, I'm very happy with the tool and would recommend it if you have 12 GB of VRAM or more.

Version 6 - Florence2

Does not use any trigger word.

Florence 2 is a very good VLM (Vision Language Model) from Microsoft. It's easy to use and very fast. On a 3090 you can run it at batch size 7 at 0.6 seconds per image (1024x1024) on average. If you turn the batch size down to 1, it only requires 6 GB of VRAM, so it runs well even on low-end GPUs. At batch size 1 on a 3090, it takes 1.05 seconds per image on average.

Here's a link to my florence2-caption-batch script / tool.
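This is not that script, just a hypothetical sketch of the batch-captioning pattern it follows: walk a folder (subfolders included), send the images to a VLM in batches (7 by default, matching the 3090 figure above), and write each caption to a .txt file with the same name as its image. `caption_batch` is a placeholder for whatever actually calls the model; for Florence-2 that would typically be Hugging Face transformers loaded with `trust_remote_code=True` and a task prompt such as `<MORE_DETAILED_CAPTION>`, which is left out here.

```python
from pathlib import Path
from typing import Callable, Iterator, List, Sequence

# Extensions treated as training images (an assumption; extend as needed).
IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp"}


def find_images(root: Path) -> List[Path]:
    """All image files under root, subfolders included, in a stable order."""
    return sorted(p for p in root.rglob("*") if p.is_file() and p.suffix.lower() in IMAGE_EXTS)


def batched(items: Sequence[Path], size: int) -> Iterator[Sequence[Path]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def caption_folder(
    root,
    caption_batch: Callable[[Sequence[Path]], List[str]],
    batch_size: int = 7,
    overwrite: bool = False,
) -> int:
    """Caption every image under root and write a same-name .txt next to it.

    `caption_batch` is a hypothetical hook: it receives a batch of image paths
    and must return one caption string per path, in the same order.
    Returns the number of caption files written.
    """
    root = Path(root)
    # Skip images that already have a caption unless overwrite is requested.
    todo = [p for p in find_images(root) if overwrite or not p.with_suffix(".txt").exists()]
    written = 0
    for batch in batched(todo, batch_size):
        captions = caption_batch(batch)
        if len(captions) != len(batch):
            raise ValueError("caption_batch must return one caption per image")
        for image_path, caption in zip(batch, captions):
            image_path.with_suffix(".txt").write_text(caption.strip(), encoding="utf-8")
            written += 1
    return written
```

Skipping already-captioned images makes it safe to rerun after a crash; lowering `batch_size` to 1 is the low-VRAM option mentioned above.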

Example Caption:

The image shows a small toy excavator made of yellow yarn. The excavator has a long arm with a bucket attached to it, which is used to dig and move dirt and debris. The body of the excavator is black and has a small scoop at the end. The toy is placed on a brown knitted blanket, and there are a few small white balls scattered around it. The background is a plain grey color.

The caption is very good. Whether it fits really depends on the model you are training for, but I think this may be well suited for Flux training.

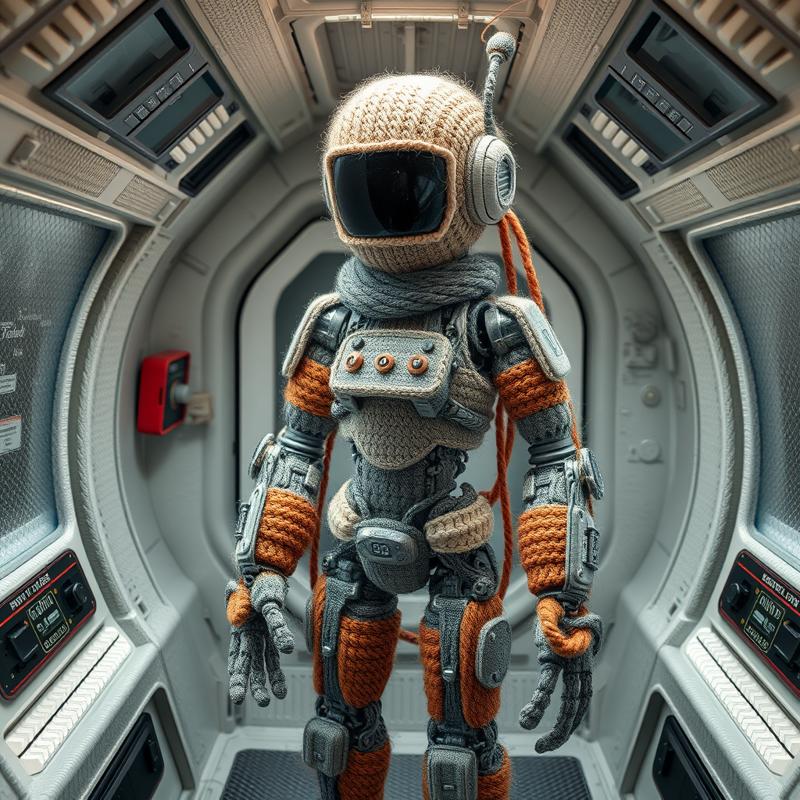

Version 7 - Moondream 2

Does not use any trigger word.

"Uhh, isn't Moondream 2 like, old and stuff?"

Wait wait, hold on, don't leave.

Moondream 2 is a little bit old and weak at this point, yes. But it is very fast!

It's also easy to send it multiple questions about the same image. This means we can ask very specific questions about the topics we care about, get answers to them, and then use this data in various ways.

In this experiment, I used 2 prompts to Moondream:

Wool Figure: Shortly describe the wool figure of this image

Background: Describe the background of this image

I saved the answers to each of these queries to a JSON file, and then combined them into the .txt caption file, starting with the wool figure description.
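The combine step itself is simple. A minimal sketch, assuming one JSON file per image that maps a query key to Moondream's answer; the key names and file layout are hypothetical, not the format of the unreleased tool. Answers are joined in a fixed order, wool figure first, and written next to the JSON as the .txt caption.

```python
import json
from pathlib import Path
from typing import Dict, Sequence

# Hypothetical query keys; their order decides the order in the caption.
QUESTION_ORDER = ("wool_figure", "background")


def combine_answers(answers: Dict[str, str], order: Sequence[str] = QUESTION_ORDER) -> str:
    """Join the per-question answers into one caption, in a fixed order."""
    parts = []
    for key in order:
        text = answers.get(key, "").strip()
        if not text:
            continue  # skip missing or empty answers
        if text[-1] not in ".!?":
            text += "."  # keep sentence boundaries between answers
        parts.append(text)
    return " ".join(parts)


def json_to_caption(json_path) -> Path:
    """Read one image's answers from JSON and write the combined .txt caption beside it."""
    json_path = Path(json_path)
    answers = json.loads(json_path.read_text(encoding="utf-8"))
    txt_path = json_path.with_suffix(".txt")
    txt_path.write_text(combine_answers(answers), encoding="utf-8")
    return txt_path
```

Keeping each answer under its own key is what makes it easy to reorder, drop or re-prompt one part without touching the others.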

Example Caption:

The wool figure is a robot, designed to look like a human. It is standing in a space-like setting, possibly inside a spaceship or a room with a white door. The robot is wearing a hat and appears to be a knitted or crocheted creation, giving it a unique and human-like appearance. The background of the image features a wall with a door and a screen.

I think this works well. It lets you really precisely nail down the outputs you want from the VLM, and lets you configure the prompt for each part individually. You can use a generic captioning prompt if you'd like, but then you're losing out on the strength of this method.

I have a tool / code set up to do this easily, but I haven't cleaned it up enough to release it yet. Feel free to do whatever you do on my GitHub account to get notifications when stuff happens. I will always upload my scripts and training code there, along with guides on how to use them, both there and here in my CivitAI articles space.

More on this method coming up.... NOW!

Version 8 - PaliGemma - Longprompt

Does not use any trigger word.

PaliGemma-3b is a strong VLM from Google. It follows instructions fairly well out of the box.

But more importantly, it's fairly easy to finetune. Huge thanks to Gökay Aydoğan for teaching and helping me with this for hours! ❤️❤️❤️

I haven't released the finetune code and notebook, but I intend to release it as soon as there's time to make the code a little bit prettier and do a write-up of it.

Introducing PaliGemma - Longprompt

I have only done one finetune on it, and the results are okay, but likely too long and complex. I need to do a second pass on this model and train it on captions of a more suitable length that hallucinate less. Even as it is, though, it produces very strong results.

What's special about it is that it combines wd14-style tags with natural language descriptions, and I think this is the strength of the model.

Example Caption:

tank, sky, desert, sand, plant, scenery, day, a colorful and imaginative scene depicts a toy tank sitting on a sandy surface in the middle of a desert-like setting. the tank's design is inspired by world war ii tanks, with a red body and yellow turret. surrounded by plants and rocks, the desert environment adds to the contrast of the bright colors of this image. a yellow color dominates the scene, while the orange and blue colors are present in smaller elements such as the plants or the sky. overall, this picture exudes a playful and carefree atmosphere, evoking feelings of childhood playfulness

I think the caption is fairly strong, but it does tend to hallucinate. The model needs further training and refinement, but it shows promise.

It's not that hard to combine wd14 tags with captions from any natural language model, and this is in fact how I created the training data for this one.
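A minimal sketch of that combination, producing the "tags first, then prose" shape of the example caption above. The function name is hypothetical. The two example captions in this article differ in tag style (spaces in the tank example, underscores like weapon_on_back in the sidequest one), so underscore handling is a switch here rather than a known rule.

```python
from typing import Iterable


def longprompt_caption(tags: Iterable[str], description: str, underscores_to_spaces: bool = True) -> str:
    """Prefix a natural-language description with deduplicated wd14-style tags."""
    seen = set()
    cleaned = []
    for tag in tags:
        tag = tag.strip()
        if underscores_to_spaces:
            tag = tag.replace("_", " ")
        if tag and tag not in seen:  # keep first occurrence, drop repeats
            seen.add(tag)
            cleaned.append(tag)
    desc = description.strip()
    return ", ".join(cleaned + ([desc] if desc else []))
```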

Allow me to go into a little bit of detail.

Sidequest: Creating the data for PaliGemma - Longprompt

Feel free to skip to the next section. It has pretty images, like, 198 of them!

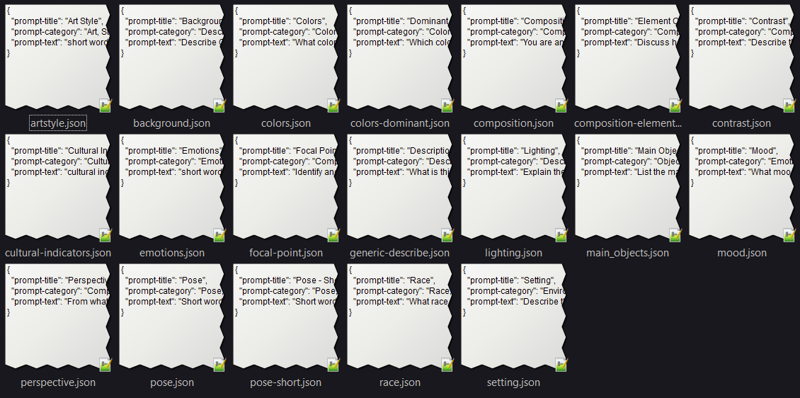

I used Moondream 2 with 19 different questions about various topics:

Each of these queries is sent to the model, and I get a response about this subject only. The prompts have been optimized to produce brief and truthful results with minimal hallucinations.

I then run each image through a wd14-style captioning tool. I use joytag-batch for this to quickly get captions for all images in a folder (including subfolders).
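wd14-style taggers score every tag in their vocabulary, and the caption keeps only the tags above a confidence cutoff. A sketch of that selection step, assuming the tagger hands you a tag-to-score dictionary; 0.35 is a commonly used default cutoff for wd14 taggers, not necessarily what joytag-batch uses.

```python
from typing import Dict, List, Optional


def select_tags(scores: Dict[str, float], threshold: float = 0.35, max_tags: Optional[int] = None) -> List[str]:
    """Keep tags scoring at or above `threshold`, most confident first."""
    kept = [(tag, score) for tag, score in scores.items() if score >= threshold]
    # Sort by descending score; ties fall back to alphabetical order.
    kept.sort(key=lambda item: (-item[1], item[0]))
    if max_tags is not None:
        kept = kept[:max_tags]
    return [tag for tag, _ in kept]
```

Raising the threshold trades recall for fewer wrong tags, which matters here since every tag ends up in the LLM input as a hint.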

For the next step, I send all of this information to an LLM and ask it to combine it into a very long description. I ask it to prioritize and emphasize details that are mentioned several times, and to ignore details that don't seem to fit in.
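A sketch of how the pieces could be packed into one request, assuming an OpenAI-style list of role/content messages; the article doesn't name the LLM or client, so the layout and function name are assumptions. The editing instructions (such as the prompt below) go in as the system message, and the per-topic Moondream answers plus the tag list form the user message.

```python
from typing import Dict, List


def build_llm_messages(instructions: str, answers: Dict[str, str], tags: List[str]) -> List[dict]:
    """Pack the editing instructions, per-topic answers and tags into chat messages."""
    # One line per topic; empty answers are dropped so they can't add noise.
    answer_lines = [f"- {topic}: {text.strip()}" for topic, text in answers.items() if text.strip()]
    user_text = "Image description:\n" + "\n".join(answer_lines) + "\n\nTags: " + ", ".join(tags)
    return [
        {"role": "system", "content": instructions},
        {"role": "user", "content": user_text},
    ]
```

Listing each answer under its topic leaves it to the LLM to notice which details repeat across topics, which is what the prompt asks it to emphasize.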

For anyone interested, here's my LLM prompt for this:

```text
[YOUR ROLE]
You are an expert master professional editor, you have won the nobel prize in literature and several other prestigious writing awards.
[OBJECTIVE AND MISSION]
Please rewrite the following text into one cohesive description without losing details describing the image. The purpose of the result is to train an image AI model with it. Keep this in mind.
There may be redundant information, combine such information.
[DETAILS AND RULES AND INSTRUCTIONS TO FOLLOW]
Contradicting Information - There may be contradictions in the text. Ignore the information with less mentioning of it, or tone it down to make the information less prominent. Example: It may describe an inanimate object as having a pose or being human. In this case, disregard such details.
Do not miss out on important details - Such as character descriptions and poses. It's okay to infer details if insinuated by the text.
Be assertive - Do not use vague terms like 'likely', 'possibly' or 'could depict'.
Ignore unknowns or uncertainties - Remember that this is a descriptive text, so sentences like 'However, without more context, it is challenging to pinpoint' are not helpful. Avoid repetition. Don't use words like 'the image...'. Only describe what's actually known or in the image.
Your output should be at least 800 tokens. Do not summarize.
Write it all in one section paragraph, DO NOT use linebreaks!!!
Start with the most relevant descriptions of the main subject first. And then go down into details. Do not use 'power words' or exaggerations. Example: Avoid things like 'The image presents a visually striking', or 'The exact nature of this significance remains elusive'. Keep the text descriptive.
Only return the result, and only return it in a code block text output please. Remember: one paragraph and no new-lines.
Do not acknowledge the user, only output the image description.
Image description.
Additionally, also consider the following list of tags and incorporate that into the description, where it matches the text description above. This is just FYI. Do not return a list of tags to me.
```

I think this step needs improvement to reduce hallucinations. The outputs are too long and tend to blather on with unimportant details towards the end. It could be that newer and stronger LLMs will produce better results, but the prompt above definitely needs improving.

Here's an example output caption:

weapon, gun, 1boy, outdoors, sky, weapon_on_back, male_focus, from_behind, rifle, holding, cloud, standing, sword, coat, red_scarf, black_hair, sunset, scenery, facing_away, scarf, holding_weapon, mountain, pants, holding_gun, short_hair, rock, gloves, In this digital art piece, a solitary man stands proudly in the center of a vast, desert landscape, grasping a sword in his hand, his brown coat and red scarf culturally indicative of the Middle Ages, suggesting he might be a knight or warrior. He wears black hair, and his facial expression conveys a sense of contemplation and solitude as he stands amidst the desolate, empty desert. His stance is assertive, with his feet firmly planted on the sand, and his gaze directed towards the distance, creating an atmosphere of isolation and introspection. Behind him, the majestic backdrop of mountains stretches across the horizon, where a vibrant sunset unfolds, casting a warm, golden glow across the scene. The predominantly orange hue of the sky and background injects a sense of drama and intensity, while the rocky terrain, depicted in shades of brown, adds depth and texture to the composition. The image features a stark contrast between the man's attire and the arid environment, with his sword adding a sense of adventure and danger to the scenario. The overall mood evoked is one of awe, curiosity, and solitude, inviting the viewer to imagine the narrative behind the man's journey in the desert.

It starts pretty decently, but towards the end it gets a bit philosophical. It can also certainly hallucinate, partly depending on the inputs it received.

At any rate, I'll post more about this model when I release the PaliGemma finetuning tools and guide at some point.

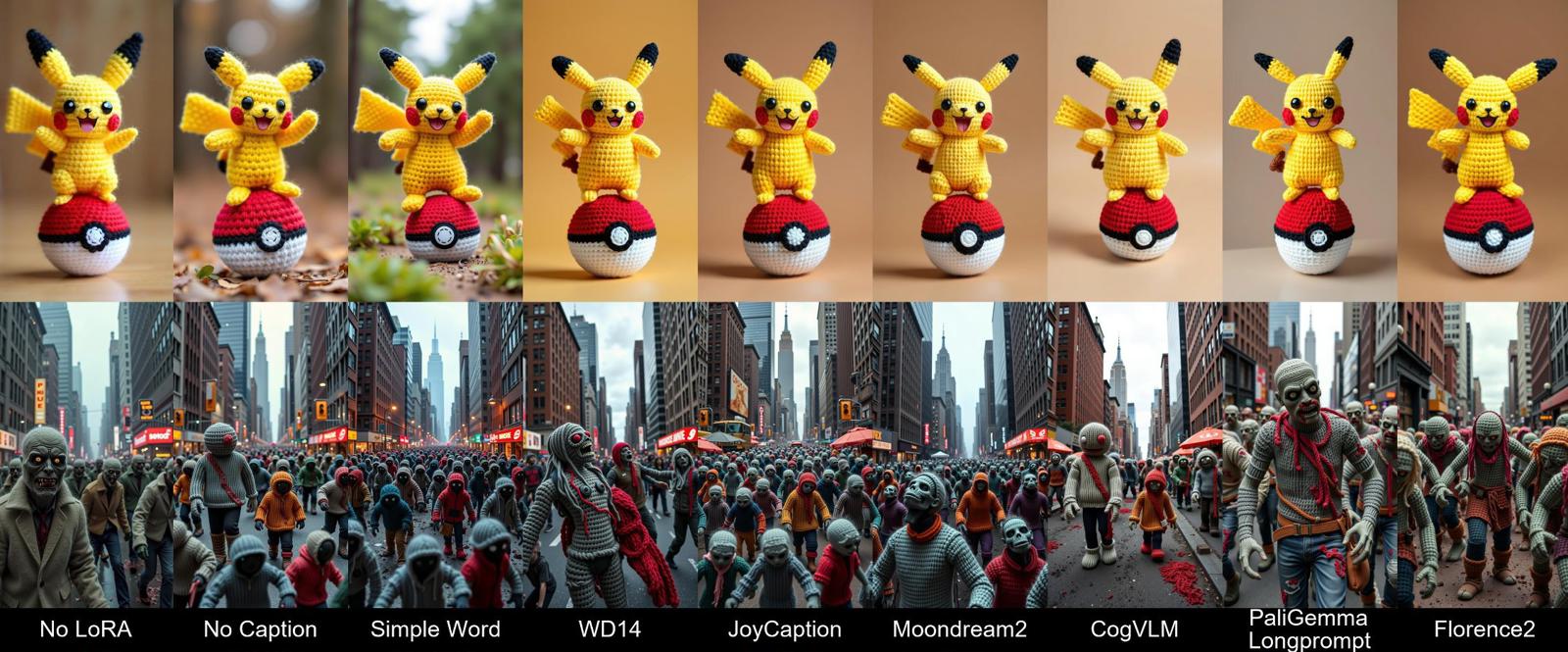

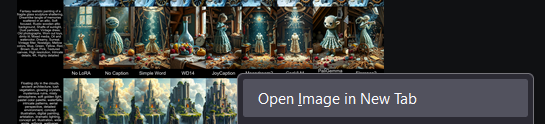

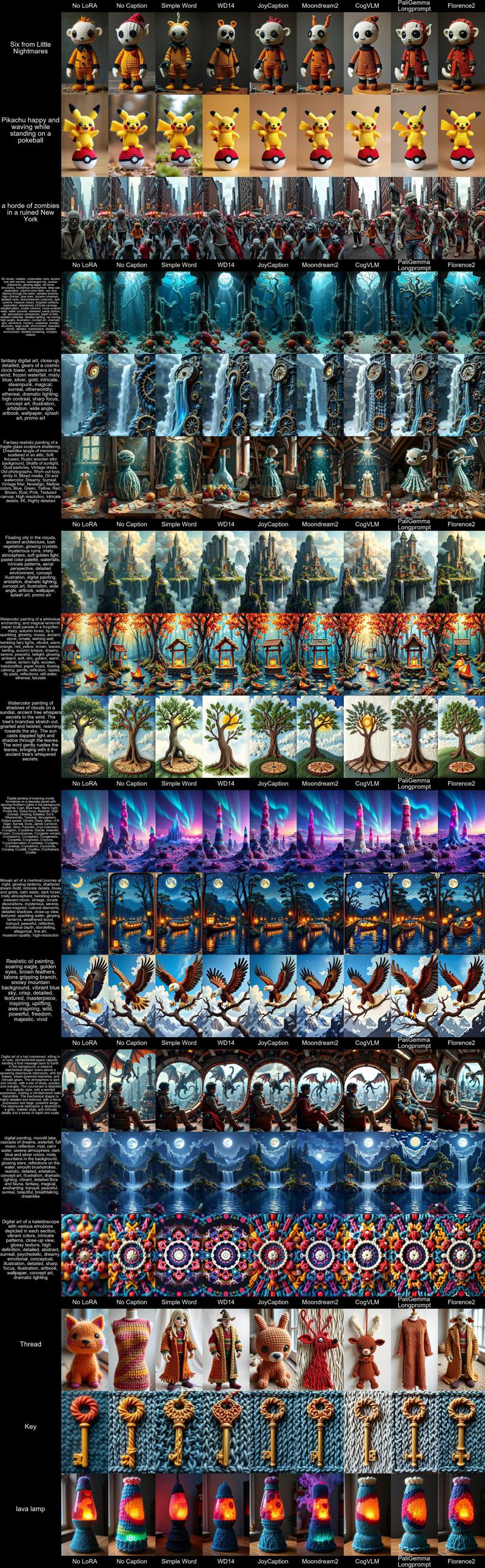

Wool Model Result Comparison

Okay, okay, I get that this is why you actually came here. I created an XY-comparison of all these different captioning methods and let it generate for a bit. Here are the results.

Here's a link to the large full-sized image: Link. On the image page, right-click the image and select "Open Image in New Tab" to see it at full size.

I used a custom XY-generator script to make the comparison sheet. It's also a bit rough, but I'll release it once I have time to make it more user-friendly. It has a nice repeat label feature :)
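The XY script isn't released, so this is not it. It's a hypothetical sketch of the idea behind such a sheet: one row per prompt, one column per model version, the same seed in every cell so the LoRA is the only variable, and the trigger word prepended only for versions trained with one (W00lyW0rld for Simple Word). Actually loading each LoRA and generating is left out.

```python
from itertools import product
from typing import Dict, List, Optional


def xy_jobs(prompts: Dict[str, str], versions: Dict[str, Optional[str]], seed: int = 42) -> List[dict]:
    """One job per (prompt row, model-version column), all sharing one seed."""
    jobs = []
    for (row, prompt), (column, trigger) in product(prompts.items(), versions.items()):
        # Only versions trained with a trigger word get it prepended.
        text = f"{trigger} {prompt}" if trigger else prompt
        jobs.append({"row": row, "column": column, "prompt": text, "seed": seed})
    return jobs
```

Usage, with placeholder prompts: `xy_jobs({"Key": "a key"}, {"No LoRA": None, "Simple Word": "W00lyW0rld"})` yields two jobs, the second with the prompt `W00lyW0rld a key`.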

Final Conclusions

Yeah, I don't really know how much can be concluded. Honestly all versions of the model are strong. Flux is strong. It seems hard to break.

I think it's very strong with "simple" models like this: concepts it has already seen similar examples of in its training set (knitted objects). You can already get decent results without any of these LoRAs. They just enhance the effect and make it more consistent, stronger, and prettier.

I'll go through the overview sheet and write down what conclusions I can think of.

No LoRA

This only works on the simplest of objects. The single-word ones like Key, Lava Lamp and the Pikachu ones stand out as working well here. The tree also has an organic, string-like feel to it, so it does okay. As a reminder, the No LoRA images were given the same visual wool description as all the other ones, without a trigger word.

We can conclude that Flux is strong, but needs help for more complex combinations.

No Caption

Flux dark wizardry. Just give it training data and it'll figure it out without ANY captions. It performed exceptionally well on the Pikachu, Key, the Watercolor Tree, and the Crystal Formations.

The Key stands out as the only key that is fully made out of wool.

It was weaker on long and complex prompts. My guess is that my longer prompts overload it with information, and since it has fewer trained embeddings to tie to the subject, it gets distracted.

There's still use for No Captions. I'll write a bit more about it in the Hybrid section below.

Simple Word

A reminder about this one: it has a trigger word and uses a simple one-word description for the training data, for example the caption W00lyW0rld airplane. This has been a strong method for creating World Morphs since SD1.5, and it works well here.

Noteworthy is that the Pikachu one has the most natural environment of them all. Why? The only one close to it is the No Caption one. My guess is that the lack of detail in the captions lets the model interpret the data more freely and less restrictively, so it shows more creativity.

We can also see it showing the best yarny water in the Clocktower Waterfall.

It performs particularly poorly on the Watercolor Tree and the Crystal Formations, and I can't think of a reason why compared to the rest, especially since No Caption handles both of them exceptionally well.

WD14

The classic. In the first round of tests, WD14 performed strongly.

We see similar results here. It has very strong showings in the Eagle, Lava Lamp and Clocktower Waterfall. It was also the only one to produce a glass bottle in the Glass Sculpture Attic, though the bottle isn't made out of wool.

Overall it's good and produces very clear contrasts in the wool texture, as well as beautiful fuzzy edges.

On the bad side of things (some might say), the zombie horde got nipples and panties... WD14 does what it's best at I suppose.

JoyCaption

A decent showing with strong results in the Watercolor Tree, Crystal Formations and Kaleidoscope.

I don't think it's the strongest in any image. I really like the water-string coming down from the Clocktower Waterfall, and it did a very strong dress in the Glass Sculpture Attic-scene.

Moondream 2

In this one, the Kaleidoscope and the Watercolor Tree stand out to me for their details. I really like the complete stitching of the Watercolor Tree. It's not so wooly, but it looks good and has a 2D feel, which matches the Watercolor part of the prompt.

What stands out to me in this version's images is the thinness of the threads. I don't believe this has anything to do with the model or the captioning, but rather with where the training ended for it.

The Cosmonaut also got a red space suit here for some reason, compared to the rest. Could it be more sensitive to color-bleeding?

CogVLM

This one has a couple of very strong showings. The Eagle has the background in yarn, which is suitable since it's supposed to be a "Realistic oil painting". It was the only one that flattened out this image. And it performed similarly well in flattening out the image in the Riverboat.

In terms of details, it was the only model to add wool treasure chests to the Underwater Ruins.

The Cosmonaut and the Zombies are the worst ones of the bunch. I can't think of any particular reason or connection.

Something that stands out with this model is the glow. It lets the glow bloom out over the image instead of turning it into flat 2D yarn glow.

PaliGemma - Longprompt

I'm not sure why, but this one seems to have the most MVP images of them all, in my opinion. I hope it's not confirmation bias since I trained the VLM. Let's go through the good ones.

The Zombie is showing the most detail, including guts and gore made out of wool. It's not as stylized and doll-like as the others, but I find that more impressive. We see the same result with the Cosmonaut: it manages to make the whole costume very much out of yarn.

The Clocktower Waterfall has strong yarn details that look good.

Every model failed the Floating City except this one. I wonder why. The prompt isn't the most detailed. My gut feeling is that it's a very zoomed-out subject to focus on, while most of the training images show the subject in strong focus and mostly close-up. Since this model was trained on very long captions, could it be better at connecting the details in these zoomed-out images to what it learned?

The Well Paper Boat is very wool-like, but it's completely missing the boat element.

The Riverboat Journey is looking great and it captured the 2D instruction of the "Mosaic Art" parts of this prompt.

The Eagle has the second best knitting feeling, only beaten by WD14 in sharpness and clarity.

The Moonlit Lake is also exceptional compared to the rest. Similar to the Floating City, this one is the strongest for these distant environments.

For some reason, it made a mouse toy in the Glass Sculpture Attic. Not sure where that came from.

I don't know, maybe it was just a lucky training run that ended on a strong note, but I think this model performs exceptionally well.

Florence 2

Another very strong MVP contender here. It has the best Well Paper Boat, and it was the only one that made the boats out of wool as well. Similar to PaliGemma - Longprompt, it performed exceptionally well on the hard environmental ones like the Floating City and Moonlit Lake.

The Cosmonaut has a very strong showing with the human being very wool-made, but also with the dragon in the background.

The Riverboat Journey is also best in class here, with the whole thing being flat and 2D (the prompt mentions "Mosaic Art", so this is suitable). It even managed to make the boat, the boat's driver, and the waves around the boat out of yarn! Very impressive!

I think this model showed exceptional results.

Ranking of these models

I don't think this is a very good metric. This is a sample of ONE model, with only 20 or so prompts. So much could have happened if I used different epochs of each model, or different weights. Just a different seed may have made a huge difference.

For each image, I'll choose one winner, and the number of wins will determine the rank. I'll add a brief comment as to why the winner was chosen.

Six: No Caption (All were bad since Flux didn't know the character; this one was appealing)

Pikachu: Simple Word (Best background, better natural lighting)

Zombies: PaliGemma Longprompt (Amazing closeup and details with strong human morph)

Underwater Ruins: Florence2 (Decent aesthetics and good yarn)

Clockwork Waterfall: PaliGemma Longprompt (Best clockwork details and water of yarn)

Glass Sculpture Attic: PaliGemma Longprompt (Best dress and yarn details, doll character)

Floating City: PaliGemma Longprompt (Best yarn details even at the background)

Well Paper Boat: Florence2 (only one to get boat, and beautiful yarn woven details)

Watercolor Tree: No Caption (Cleanest tree, even though ground was lacking)

Crystal Formations: No Caption (Most crystal-like yarn, the glow and mix with actual crystals)

Riverboat Journey: Florence2 (It yarned everything! Even the water!)

Eagle: WD14 (Super clean details on the knitting of the bird and branch)

Cosmonaut: PaliGemma Longprompt (Great human detail, and the cockpit is great)

Moonlit Lake: PaliGemma Longprompt (Strong ability to get the details right in the background)

Kaleidoscope: No Caption (Slightly more pleasing to the eyes with a bit of shading)

Thread: DISQUALIFIED. This prompt was too vague and the entries were too different for a fair comparison. My favorite would be WD14's old man, so it gets half a point.

Key: No Caption (Best key, only key fully out of wool)

Lava lamp: No Caption (Full subject out of yarn, nice glows combined)
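The scoring is just a count of wins per model, plus half a point for the disqualified Thread prompt. Transcribed into a small Python sketch (the winners are copied from the list above; the dictionary layout is my own), it reproduces the totals:

```python
from collections import Counter
from typing import Dict, Optional

# Winner per prompt, as listed above.
WINNERS = {
    "Six": "No Caption",
    "Pikachu": "Simple Word",
    "Zombies": "PaliGemma Longprompt",
    "Underwater Ruins": "Florence2",
    "Clockwork Waterfall": "PaliGemma Longprompt",
    "Glass Sculpture Attic": "PaliGemma Longprompt",
    "Floating City": "PaliGemma Longprompt",
    "Well Paper Boat": "Florence2",
    "Watercolor Tree": "No Caption",
    "Crystal Formations": "No Caption",
    "Riverboat Journey": "Florence2",
    "Eagle": "WD14",
    "Cosmonaut": "PaliGemma Longprompt",
    "Moonlit Lake": "PaliGemma Longprompt",
    "Kaleidoscope": "No Caption",
    "Key": "No Caption",
    "Lava lamp": "No Caption",
}
# Disqualified prompts where a favorite still got half a point.
HALF_POINTS = {"Thread": "WD14"}


def tally(winners: Dict[str, str], half_points: Optional[Dict[str, str]] = None) -> Counter:
    """One point per outright win, half a point per disqualified-prompt favorite."""
    scores: Counter = Counter()
    for model in winners.values():
        scores[model] += 1
    for model in (half_points or {}).values():
        scores[model] += 0.5
    return scores


if __name__ == "__main__":
    for model, points in tally(WINNERS, HALF_POINTS).most_common():
        print(f"{model}: {points:g}")
```

Models that never won (CogVLM, JoyCaption, Moondream2) simply don't appear in the counter, which reads as 0.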

Results

PaliGemma Longprompt: 6

No Caption: 6

Florence2: 3

WD14: 1.5

Simple Word: 1

CogVLM: 0

JoyCaption: 0

Moondream2: 0

PaliGemma Longprompt vs No Caption. I did not expect these two to share first place. What does that mean? The longest captions are as good as no captions at all? Is it all up to random chance during training? Cosine luck?

How ties were broken

For PaliGemma Longprompt vs No Caption, PaliGemma Longprompt wins for me. It had more second places than the No Caption.

For CogVLM, JoyCaption and Moondream2, I did a separate tiebreaking round with only these three models. CogVLM won the most, and JoyCaption had more good-looking images than Moondream2.
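Written as a sort key, the tie-breaking above is: higher score first, then a secondary count (second places for the top two, tiebreak-round wins for the bottom three). A minimal sketch; the secondary counts aren't listed in the article, so `tiebreak` is an input you'd fill in yourself, and any remaining ties fall back to alphabetical order.

```python
from typing import Dict, List, Optional


def rank(scores: Dict[str, float], tiebreak: Optional[Dict[str, float]] = None) -> List[str]:
    """Order models by score, then by a secondary tiebreak count, then by name."""
    tiebreak = tiebreak or {}
    return sorted(scores, key=lambda m: (-scores[m], -tiebreak.get(m, 0), m))
```

Usage with placeholder names: `rank({"A": 2, "B": 2, "C": 1}, {"B": 3, "A": 1})` puts B ahead of A because of its higher secondary count.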

Conclusive Conclusions?

TBD. This is still just a sample size of 1 training run for each of these, and I picked the last epoch for each of them. It seems the winner here is Flux. But this means that the winner here is all of us, for having access to Flux.

What do you think? Please leave a comment saying which captioning method's results you liked best.

Extra Curriculum - Hybrid Captioning - CFG?

I have a theory that a hybrid training method may be strong. As I understand training for CFG (Classifier-Free Guidance), as used in Stable Diffusion, the caption is randomly dropped for a share of the training samples, so the model learns from the same images both with and without captions. That uncaptioned prediction is what lets guidance work without a separate classifier, hence the "Free" in CFG.

My idea is that if we mix caption types, some very long and detailed, some shorter with just a few words, some a single word, and some with no caption at all, we may get an even stronger understanding.
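A minimal sketch of that mix, assuming each image already has a long, a short and a single-word caption (hypothetical keys `long`, `short`, `word`) and that an empty caption file stands in for "no caption". The weights are placeholders to experiment with, not tested values.

```python
import random
from typing import Dict


def pick_captions(
    variants: Dict[str, Dict[str, str]],
    weights: Dict[str, float],
    seed: int = 0,
) -> Dict[str, str]:
    """Choose one caption style per image by weight; a style the image lacks (e.g. 'none') yields ''."""
    rng = random.Random(seed)  # seeded so a given mix can be reproduced
    styles = list(weights)
    style_weights = [weights[s] for s in styles]
    chosen = {}
    for image in sorted(variants):
        style = rng.choices(styles, weights=style_weights, k=1)[0]
        chosen[image] = variants[image].get(style, "")
    return chosen
```

Usage: `pick_captions(captions, {"long": 0.3, "short": 0.3, "word": 0.2, "none": 0.2})` assigns each image one caption for the whole run. Picking again with a new seed every epoch would come closer to the per-sample caption dropout used in CFG-style training.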

This will likely be my next area of testing. If only... see orange section below.

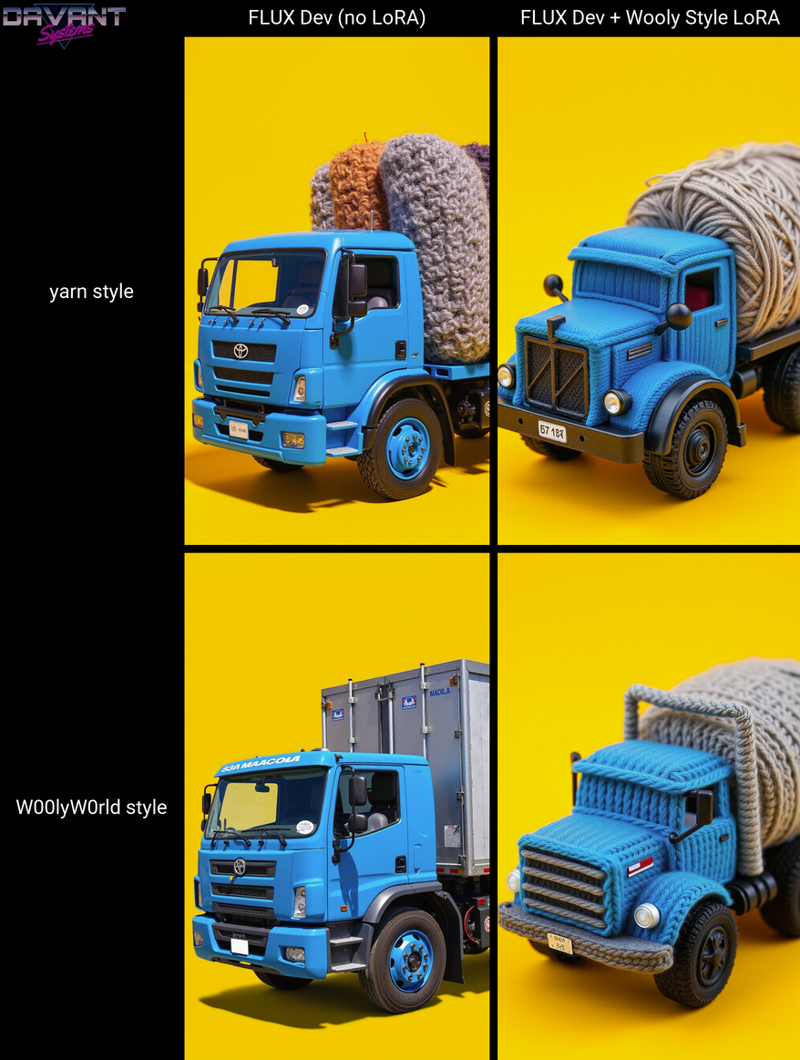

More Extras - Yarn Style

User David_Davant_Systems posted an interesting image.

I believe this image was generated with the SingleWord version of the training. This is the surprising part: the captions for this dataset have no mention of the word "Yarn" anywhere.

To me, this feels like Flux magic of visual concept similarity. It shouldn't come from the words, because the SingleWord dataset uses W00lyW0rld as the trigger word, which is not a real word and should have a poor token representation, so its similarity to yarn should not be close. But maybe it is?

To me it looks like it understands the visuals, which is why we're able to get such good results from the NoCaption version. What are your thoughts on this?

Impressive article! How can I help?

Thanks for your kind words! You're such a nice person for saying that ❤️

If you like what you just read, (may I ask why you are still reading this part if you didn't?), feel free to drop some ⚡⚡⚡ on this article. Or maybe spend some to download some of the models. It's very much appreciated and it lets me do more fun experiments like this.

If you have some specific training test to sponsor, feel free to shoot me a PM.