Flux Sim v1512 2/18/2025

https://civitai.com/models/1136727/v1512-e12-simulacrum-schnell-model-zoo

I should preface this by saying I rushed this version out. I really should have given it a full finetune, so now you all get to watch me fix it after release instead of before.

Flux Sim is a dedistilled hybrid variation of Flux Schnell, with much of the distillation still intact. I didn't burn it; I used what was there, and in the process it dedistilled and trained its later stages along the way. The outcomes have been quite unique, and the only counterpart I've seen with a similar design is Shuttle.

Flux Flex is similar, but not the same kind of model. They are very different, and yet they can still communicate, like distant relatives.

It was trained curriculum style, but in a very specific format: a single LoRA with dim 128 and alpha 128. In this case, I did not derive anything from that single LoRA; I stuck with the exact same LoRA and released it directly.

It was trained on nearly 6.8 million samples in total. The dataset has shifted multiple times, but I've TRIED to preserve the more recent metadata.

The first 5 million: trained with the SimV4 CLIP_L, which is the CLIP_L that came out of FLUX_1D training, and which was also used to TRAIN the SDXL model that produced CLIP_24_OMEGA_L.

After the first 5 million: trained with CLIP_24_OMEGA_L, which is the CLIP_L that came out of the SDXL training.

I actually lost track of this CLIP_L's total sample count; it's somewhere near 40 million.

Flux Sim is NSFW capable and unchained. It uses many poses and requires a final solidification pass for many anatomical features (especially genitals), but it most DEFINITELY performs many outcomes ADMIRABLY better and far more consistently than its SDXL counterpart, where many others are still very inconsistent or simply fail. It's nothing to write home about yet compared to, say, NAI or Pony, but it's definitely starting to show some real prowess beyond its rivals' capabilities while introducing some similar and many more powerful behaviors. I have high hopes.

I've identified some causal factors related to plain English prompting and will be working on remedies in my spare time, but it'll take a while, so just embrace this one as the current version for now. The fixes will roll out over time, hopefully along with the addition of a full liminal sector. I would like it to have full liminal capability, as I think it will teach the model to deviate from the norm better and introduce a structured insanity. Should be fun.

Pose markers hit 8/10 times.

NSFW concepts bleed into the following poses:

leg up

standing on one leg

spread legs

wide spread legs

Hair style is inconsistent

Requires a full hairstyle finetune

Outfits are kind of in shambles

I think the NSFW outfits may have done more damage to the core outfits than I thought. I'll need a full outfit finetune at some point.

Actors and actresses are pretty much ruined, which was kind of the goal.

They will likely be reintroduced when I add a big piece of LAION into the mix for additional context learning, so whatever happens happens.

The age slider has been bumped upward by a considerable amount; the default physical form should be adult, even when attempting some shenanigans.

This damages the data for, and raises the minimum age of, the avatar form across much of core Flux's default learned age tokens and controllers.

Forming a bubble between NSFW and SFW is crucial to having a stable model, and this version of Schnell v1512 is not stable.

I'll need to run some red vs blue drills on this to be sure, but I think it's in an okay state for right this minute - otherwise I would have pulled it instead of typing this.

If you think it's in an unacceptable state I will pull it and reassess using the new information.

Anime, 3d, and realistic styles have diverged and are becoming more difficult to cross-contaminate with each other.

This isn't a good result, as the SDXL model was built specifically to cross-contaminate. I guess we go with what we get, so I'll need to devise a new methodology for this over time.

Anatomy controllers are a bit... too... specific right now.

It's a bit too difficult to control some forms; sometimes you have to define whether a left or right foot even exists, let alone get it to assume a whole body exists. Hopefully this can be resolved with a pass of a few hundred images or fewer.

Quality has improved, but is also suffering still.

I have a few plans to fix it, and in the process I'll run the same images over the SDXL to see what happens there as well.

Text works still

I've been careful training it after all, so text still works.

SimV4 First SFW/NSFW Regularization Train Incoming 11/30/2024

As of writing this, I've noticed a few flaws with SimV4. It's quite stable, but in the process I had to remove a couple of the earlier loras that went into making SimV32 NSFW, while retaining the core concepts of SimV32 safe. This both lobotomized some of its capabilities and restored many of the core FLUX capabilities.

For those who really liked the NSFW version, I'm currently planning a way to make it work properly, BUT I haven't had good results training the same NSFW loras with SimV4 as the base model. They usually cause lots and lots of damage in unintended ways, so I've decided to take a new approach to the entire subject.

So currently, the reinforcement training has fixed a great many problems with Simulacrum, but it's a band-aid. The normalization is wonky and out of scope with what I intended, which invariably causes a cascading problem under further training. So I've decided that what we have here is a perfect opportunity to retain our core structure and introduce a large amount of new information into it.

Over the coming weeks I'll be starting a new project at work, and in my spare time I'll be working on shoring up the pose, gender, and outfit dataset to match the ratio required to fully saturate a proper image regularization system.

In this case, we'll be including poses for multiple characters, as well as single characters.

You can see in the core PONY models and their subsets that pose regularization was clearly used. There was also clearly a substantial amount of training with it, including many cooperative and combative multi-character poses.

New problems crop up because of this, a large one being that FLUX trains slowly. Adding image regularization to already slow training will compound the training time, which is unfortunate but also a requirement for creating a true successor to the Pony-based models.

It NEEDS to have control, at least to some degree. Every time I start shunting large amounts of images into this model, the entire thing destabilizes to a degree that can't be maintained through basic debugging. Even finetuning Flux with those images creates a huge problem, due to the way I trained it on Flux1D2Pro and its current behavior when inferenced on Flux1D, Flux1D Blockwise, and Flux1D-DeDistilled.

Essentially, I'm moving BEYOND simply pointing the beastly sea monster kraken or giving it steroids. We are going full behavioral college for the kraken now. It's going to sit nicely in school, play with its friends, and not eat ships anymore. However, it'll still have the eat-ship command if I'm careful, which means we'll be in full control of this thing given a bit of careful regularization and reinforcement training based on its original UNet differences.

Tagging is paramount to making this work. Correct tagging with SimV4 will either make or break the outcome, so I need to make sure all the tags are uniform with the original system, as well as introduce new details for the new concepts. Like gluing everything together.

Whether the experiment goes well or badly, I'll release the outcome with a burndown and analysis.

INTERACTION TAGGING

These are the TODOs for an individual's interactions with the viewport, and for interactions between two people and the viewport. Each of these will need sourced images from multiple angles and multiple offsets to ensure fidelity, as well as bucketing and resizing before training to ensure multi-resolution capability.

I'm CERTAIN my pose pack has ALL OF THIS, but it needs to be tagged accordingly and fed into more reinforcement training. As of today I do not have an AI to automatically detect these traits, which means I'm doing data prep to create that AI first. Expanding my detection model list is crucial to making this work.

Video + Animation Tagging:

To train this offset, rotation, and size detection AI, I will likely need to tap into my video library. There is a copious amount of 3d rotation footage recorded by other people that I can use. I can train the initial detection AI with those; afterward I can potentially source millions of new images from cinematics, gameplay footage, 3d modeling, and so on, in very little time compared to hand sourcing.

Viewport Offset Tagging:

Each of these require regularization images to ensure solidity. The majority of things are already filled out based on my needs, but if I want regularization based on offsets, we'll need to shore up those numbers.

Currently it doesn't support halves, so let's provide the reinforcement for halves.

offset-left

left half of an image

offset-right

right half of an image

offset-upper

top half of an image

offset-lower

bottom half of an image

It supports corners, but it seems to have tied quarter-frame to them, so let's ensure these work too.

offset-upper-left

upper left corner

offset-lower-left

lower left corner

offset-upper-right

upper right corner

offset-lower-right

lower right corner

In the process, let's go ahead and train these too.

offset-lower-center

offset-upper-center

offset-middle-left

offset-middle-right

Original size offsets.

quarter-frame

25% of the image, often bleeds over or attaches body parts already.

half-frame

50% of the image; same caveat as quarter-frame.

full-frame

75% or more of the image.

Supplementary size offsets

These are meant to conform a bit more reasonably to the positioning offset, but there's no guarantee until the training is done.

quarter-frame upper-left

quarter-frame upper-right

quarter-frame lower-left

quarter-frame lower-right
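As a rough illustration of how the half and corner tags above could be assigned automatically, here is a minimal Python sketch. It assumes each subject comes with a normalized bounding box (0..1, origin top-left), which the detection AI mentioned earlier would have to supply. The function names are hypothetical, treating 25-50% of the frame as quarter-frame and 50-75% as half-frame is one reading of the size list rather than a confirmed rule, and the center/middle tags are left out.

```python
from typing import Optional, Tuple

# Hypothetical box format: (x0, y0, x1, y1), normalized to 0..1, origin top-left.
Box = Tuple[float, float, float, float]


def offset_tag(box: Box) -> Optional[str]:
    """Pick a half or corner offset tag from where the box sits in the frame."""
    x0, y0, x1, y1 = box
    horiz = "left" if x1 <= 0.5 else "right" if x0 >= 0.5 else None
    vert = "upper" if y1 <= 0.5 else "lower" if y0 >= 0.5 else None
    if horiz and vert:
        return f"offset-{vert}-{horiz}"  # fits inside one quadrant: corner tag
    if horiz:
        return f"offset-{horiz}"         # fits inside the left or right half
    if vert:
        return f"offset-{vert}"          # fits inside the upper or lower half
    return None                          # crosses the center on both axes


def size_tag(box: Box) -> Optional[str]:
    """Map the box's share of the frame to a size tag (assumed bands)."""
    x0, y0, x1, y1 = box
    area = max(0.0, x1 - x0) * max(0.0, y1 - y0)
    if area >= 0.75:
        return "full-frame"
    if area >= 0.5:
        return "half-frame"
    if area >= 0.25:
        return "quarter-frame"
    return None                          # too small for any listed size tag
```

A subject filling the left half, `(0.0, 0.0, 0.5, 1.0)`, gets `offset-left` and `half-frame`.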

Interaction Tagging will include regularization images for:

cooperative interaction tagging

relative positioning

<relative_position> from behind of <subject>

<relative_position> from front of <subject>

<relative_position> from side of <subject>

<relative_position> from above of <subject>

<relative_position> from below of <subject>

<person1> <interactive_position_offset> <person2>

from front, from behind, from side, from above, from below

5^5 = 3,125 potential combinations, so roughly 3k captioned regularization images for these. Most are already good but need another quality boost.

relative offset

A relative offset is a screen offset one character will have based on the position of another, like gluing the two characters together in a way.

The tagging should match:

<subject> is <relative_offset> from <relative_position>

<subject> between <relative_offset> and <relative_offset>

a woman between a door offset-left and a dresser offset-right

<person1> is <relative_offset> from <person_rotation> <person2>

a queue of people waiting in line for a meal. the focus is two women, a woman offset left facing to the right is standing behind another woman facing to the right,

The outcome should just be two themed women standing in a line of other people in a cafeteria of sorts. Most of it would be implied by the setting, and Flux determines the rest by default.

handshakes

<person1> shaking hands with <person2>

<person1> handshake <person2>

hugging

<person1> hugging <person2>

<person1> embrace <person2>

kissing

<person1> kissing <person2>

on lap

<person2> on lap of <person1> facing <relative_direction>

sex poses

<yeah just use your imagination, i'll do all of them>

combative interaction tagging

flight and hovering

punching positioning

I very much like fighting games and anime, so I want this.

kicking positioning

auras, blasts, electrical effects, bleed effects (path of exile style), celestial effects, and so on.

sword swinging

parrying with sword or swords

blocking with shields

multi-positional frame offsets

These sorts of things will work when the regularization training cycle is complete, how well they work is another story.

woman <pose> offset left facing left boy <pose> offset right facing left

relative viewport rotational offsets

Same outcome as the multi-positional frame offsets.

facing to the left, facing to the right,
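The caption templates in the interaction list above share one pattern: angle-bracket slots filled with subjects, offsets, or directions. Below is a minimal Python sketch of a template filler, assuming the slot names shown above; `fill_template` is a hypothetical helper, not part of any existing tagger. A slot that repeats, such as `<relative_offset>`, takes a list that is consumed left to right, which reproduces the door/dresser example.

```python
import re
from typing import Dict, List, Union

# Matches slots such as <subject>, <relative_offset>, <person1>.
SLOT = re.compile(r"<([a-z0-9_]+)>")


def fill_template(template: str, **values: Union[str, List[str]]) -> str:
    """Fill <slot> placeholders in a caption template.

    A string value is reused for every occurrence of its slot; a list value
    is consumed left to right, one item per occurrence.
    """
    # Copy list values so the caller's lists are not mutated.
    queues: Dict[str, List[str]] = {
        name: list(v) for name, v in values.items() if not isinstance(v, str)
    }

    def substitute(match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value given for <{name}>")
        value = values[name]
        if isinstance(value, str):
            return value
        if not queues[name]:
            raise ValueError(f"ran out of values for <{name}>")
        return queues[name].pop(0)

    return SLOT.sub(substitute, template)


caption = fill_template(
    "<subject> between <relative_offset> and <relative_offset>",
    subject="a woman",
    relative_offset=["a door offset-left", "a dresser offset-right"],
)
```

Here `caption` comes out as "a woman between a door offset-left and a dresser offset-right".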

SimV4 Lora 1 - Face Shuffle 11/19/2024

The single face is driving me a bit mad, so I've sourced a face pack. There are no names, so I have no idea who any of the women are, but there are 7000 samples of real women. They seem to have had only a minimal impact on anime, so I'll just release it and see how you guys like it.

Comparison images: no LoRA; LoRA at 0.4; LoRA at 0.7.

Some more experiments are in order; I think the LR was a bit too high, so the LoRA might be a bit unstable.

Simulacrum FLUX V4 Assessment 11/17/2024

Some things worked so very, very well. Reinforcing the individual has had a highly profound impact on both the core and the system's response to everything.

The aesthetic scoring system, style tagging, and association reinforcement all affect and trickle throughout the core system like breadcrumbs, instead of the branding burns that damaged it for V38.

V4 Strengths

INDIVIDUAL character making, character control, scene control, intrusive environment control, object control, hair control, eye control: everything has been shored up and is far more responsive than in v392.

In SimV2 and SimV3, responsiveness to character creation was slowly bleeding into other tags. After the reinforcement training, the character-specific and subject-specific attention and focus have shifted back to where they were intended.

Version 4 is both a highly depictive response to requests, and a quality increasing utility with just a few tags.

aesthetic and very aesthetic are quite different, which makes for a very interesting experience when using the two in conjunction.

Simply proc those and you can push your entire image quality up, or use disgusting, displeasing, or very displeasing to push it into a lesser state. The outcome has been beyond expectations in some ways and fallen short in others.

Offset/Size/Depiction tags seem to have a huge and lasting impact with posing.

It's akin to having taught CLIP_L how to associate screen position based on offset better, but it requires more specific angling and relational operators for more detailed associations.

Essentially, I taught CLIP_L that it's an idiot, and to suggest details with less confidence because it's uncertain about more of them. It suggests less at a higher value, and more at a lesser value, due to possibilities and training. It knows more and knows less at the same time, more akin to a Dunning-Kruger response to details after a confidence loss. A lot like being handed a marker when you have only been using crayons.

Increases the potency of the system's various associative tags and the responsiveness of learning.

As a result, the pathways are highly refined for reuse:

1girl, character_name, series_name

character_name, 1girl, series_name

character_name, 1boy, series_name

and so on.

Because CLIP_L has been taught so much detail and so much use of these, responsiveness to LORA training has been shored up, meaning you need considerably LESS detail to integrate these specifics into the core system.

A unique CLIP_L is in the works, with directives and a unique token meant to differentiate and burn those specific character faces into memory, meaning it'll KNOW for certain if those characters exist or not.

This CLIP_L is not ready yet and will require a custom node in ComfyUI to function PROPERLY when complete, but the node will not be strictly required. The model will just be more responsive with it, since the node chops out the unique tokens automatically and uses deepghs systems for identification solidification.

Style control has become much easier and more responsive with the base system, and has a much higher response to tagging phrases with the styles.

A multitude of core styles have been diversified into semi-realistic, flat, and more stylish alternatives due to the additional CLIP_L training. It's considerably easier to proc styles due to this.

Loras have become easier to use and superimpose techniques into.

Style loras are absolute chads with this version. Their destructive layered nature makes for a highly intrusive and beautiful outcome with art.

SafeFixers (15k safebooru expansion) is still booming in the core, making an absolutely fantastic anime display when desired.

Proc vegeta, get vegeta, even though there's way too many types of vegeta currently.

Hands, hands, hands. Hands are better, but not for all styles. There aren't enough HAGRID images yet. I will be converting HAGRID images to anime, 3d, semi-realism, and realistic. I think the HAGRID images are having a negative impact on the styling because they are purely hand posing, so I'll reinforce the styling so it understands the difference properly.

SIMV4 LORA Training Settings

SIMV4 LORAs require almost no steps and considerably less images, while retaining the information at a much higher learn rate.

30 or fewer pictures, all clearly showing the face:

portrait; from front, from side, from behind

9 images < hairstyles + face + eyes

cowboy shot; from front, from side, from behind

9 images < costumes + hair + face

full body; from front, from side, from behind

9 images < costumes + hair + face + shoes + settings

Tests show 0.0012 is a bit too high a learn rate; instead use:

UNET_LR 0.0009

TE_LR 0.000001

clip_l only < many characters need no clip_l

300 samples total (calculate repeats and images)

characters

dim 2 alpha 4 anime character

dim 4 alpha 8 realism character

dim 6 alpha 12 3d character

three tags; name, 1girl/1boy, series

More tags make it learn more, faster.

styles

dim 2 alpha 32 flat style

dim 4 alpha 16 3d style

dim 2 alpha 8 2d style

full captions

These settings produce a quick character LORA that can function in the base Simulacrum V4 system.

Often takes less than 5 minutes to produce a LORA.

3 tag loras aren't always the most responsive, so you should tag eye color, hair color, hair style, and outfits for the best results. This way is reusable without any outfit bindings though, which can be more fun.
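The settings above boil down to a little arithmetic. Below is a minimal Python sketch, assuming the common LoRA convention (used by kohya sd-scripts and Hugging Face PEFT, among others) in which the low-rank update is scaled by alpha / dim, and reading "300 samples total" as images × repeats. `LoraPreset` and `repeats_for` are hypothetical names. Under that convention, every preset here runs at an alpha/dim of 2 or more (16 for the flat style), while the 128/128 Flux Sim LoRA sits at 1.0.

```python
import math
from dataclasses import dataclass

# Learn rates quoted above for SimV4 character/style LoRAs.
UNET_LR = 9e-4  # 0.0009; 0.0012 tested as a bit too high
TE_LR = 1e-6    # 0.000001, CLIP_L only


@dataclass(frozen=True)
class LoraPreset:
    dim: int
    alpha: int

    @property
    def scale(self) -> float:
        # Common LoRA convention: the low-rank update is multiplied by alpha / dim.
        return self.alpha / self.dim


# dim/alpha pairs from the settings list above.
PRESETS = {
    "anime character": LoraPreset(2, 4),
    "realism character": LoraPreset(4, 8),
    "3d character": LoraPreset(6, 12),
    "flat style": LoraPreset(2, 32),
    "3d style": LoraPreset(4, 16),
    "2d style": LoraPreset(2, 8),
}


def repeats_for(num_images: int, target_samples: int = 300) -> int:
    """Smallest repeat count so that num_images * repeats >= target_samples."""
    if num_images <= 0:
        raise ValueError("need at least one image")
    return math.ceil(target_samples / num_images)


# Portrait + cowboy shot + full body at 9 images each = 27 images.
repeats = repeats_for(27)  # 12 repeats, i.e. 324 samples
```

Rounding up overshoots 300 slightly; rounding down (11 repeats, 297 samples) would undershoot instead.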

V4 Weaknesses

TOO MUCH REALISM. As interesting as it is, I don't want to hit it every single time I proc an image with [aesthetic, very aesthetic], but I also don't want it to be impossible to proc. Simulacrum V392's massive failing WAS its inability to produce realism, while this one's pendulum seems to have swung the opposite direction.

It needs more 3d. It doesn't have enough 3d, and doesn't have enough 3d styles for 3d series.

Needs a very simplistic base 3d style, currently it doesn't have a properly defined one, which causes it to be all over the board.

I will be speaking with freelancers and artists about this particular one and see what I can come up with in terms of utility.

The 2d style is essentially NovelAI Anime V3 but it's bled between the default NAI FURRY V3 and NAI ANIME V3 styles, which are quite different in terms of quality and styling.

This requires additional images, additional poses, additional anime stylizing in very specific styles to produce the correct images without burning a full style pathway over what I've already done.

Realism was both hurt and helped; the core woman became one of the 7 women, and not the one with the most images, which makes me a bit confused as to why.

A much, much, larger pack of women faces is necessary. I have a few potentials for this, but I think the best will simply be a sourced face pack, similar to how I used the HAGRID hands to fix many hand poses.

Facial expressions are often completely unresponsive.

This is on the TODO list for version 5: making facial expressions work properly with anime, 3d, and realistic. I now have to worry about sub-styles, which isn't something I expected originally. Hopefully shoring up the linkages will handle that, but hope isn't good enough, so it's on the docket.

As powerful as the newly adopted Depiction/Offset/Size tagging has become, the outcome is uncanny at times and disjointed from the actual poses, which I believe has broken a multitude of trained poses and character-to-character associations.

Next training cycle will include a full style function based entirely on which angle the character's body is, their legs are, their arms are, and their head is facing, based on the depicted angle of a character's face.

I haven't decided on the proc system for this yet, but based on the depiction of having characters only using the "from <angle>" as a specific core trait to the viewport, I can make a few assumptions based on what might and might not work.

depicted-upper-left facing-left,

on the upper left of the image, there is a depicted subject's [viewport] facing to the left.

< implies from side >, to the left, and so on. There are many implications with things like this, and it likely won't work without the correct setup.

depicted-middle-center torso facing-right,

looking left, body facing right,

this implies the depicted subject's [viewport] (head) is facing to the left, and the torso of the person is facing right, which means you SHOULD see their back in the middle center of the screen.

depicted-middle-left right-arm at own side,

you get the idea, head is the core of everything. It will build a body from the head's viewport, treating it as though it's built top-down.

If this goes well, I'll incorporate a way to proc the torso as well, to control the torso instead of the head, and the legs and so on.

This isn't set in stone, and testing with tagging is necessary, so we'll see how it plays out with some small tests and then scale from there.

Multiple learned concepts seem to have been unlearned, or have been set into a different state of overlapping or layered use based on the tagging.

Facial expressions, arm positions, offsets, multi-character poses, and so on were damaged and require re-association.

Clothing sticks to a kind of fabric style based more on the style of image rather than the image itself, meaning it's difficult to produce say cotton on a 3d character, and fabric on a 2d character. It all seems to have a kind of gradient shine that doesn't allow much management.

Base FLUX has a similar problem, when you start asking for shine, grit, and so on. The entire image style affects the fabric, instead of the fabric being affected by the setting.

Most noticeably this is with liquid response to anatomy, such as hair being wet, clothes being modified, eye color and eye styles, hair colors, hair styles, and so on. The entire system is affected by this sort of, topical layered style that seems to superimpose itself over everything.

I'll need to research a bit on this front to come up with a proper way to solve it, but it seems to be based on default layering, and the system of FLUX Realism seems to have solved SOME of the core problems of it, but it doesn't fix anything that I need to have fixed, so I'll see about having a word with that team if possible.

Liquids are broken again, which tells me I need to either burn liquids as multiple concepts into the core, or completely revamp the way I handle liquids in general.

I'm thinking I need to teach CLIP_L more liquids, liquid use, gravity, and other details.

Road to V4 - The Steroids 11/5/2024

I'm currently taking some sick time, so I'll post what I prepared last night.

Don't get too attached to the current NSFW version~!:

The core of simulacrum v4 is going to be simulacrum v3 + safe fixers pack + these two packs trained atop the safe fixers lora directly as individual sequel forms.

I'm beginning a full NSFW retrain using 50,000 high quality, high fidelity, realistic, 3d, and anime images; roughly 15k each category. These two packs are like oil and water, and I need them both mixable without a catalyst.

Alongside I'm sourcing and building a similar 50,000 art style pack for the SFW counterpart to train alongside the NSFW counterpart.

Starting with the final stretch to version 4, all future trains will be tagged specifically using a new tagging style in association with the old style, which includes offset detection for individual tagging.

upper-left, upper-center, upper-right,

middle-left, middle-center, middle-right

lower-left, lower-center, lower-right

These tags were specifically chosen for their avoidance of standard booru concepts, as well as having overlap within the T5 for offset association within the scene.

size tags

full-frame

moderate

minimal

These three tags will be used in conjunction with the offset tags to ensure solidity within the images; some of them overlap with booru tags to intentionally bleed into current training.
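One way to produce the nine offset tags, and per-subject pairs like the "middle-left 1girl, middle-right 1girl" example further down, is to bucket each subject's center into thirds of the frame. Below is a minimal Python sketch under that assumption. The equal-thirds split and the helper names are hypothetical, and the size tags are omitted because no thresholds are given for moderate and minimal.

```python
from typing import Iterable, Tuple


def grid_tag(cx: float, cy: float) -> str:
    """Map a normalized subject center (0..1, origin top-left) to a 3x3 offset tag."""
    col = "left" if cx < 1 / 3 else "center" if cx < 2 / 3 else "right"
    row = "upper" if cy < 1 / 3 else "middle" if cy < 2 / 3 else "lower"
    return f"{row}-{col}"


def subject_tags(subjects: Iterable[Tuple[float, float, str]]) -> str:
    """Prefix each subject tag (e.g. '1girl') with its grid position."""
    return ", ".join(f"{grid_tag(cx, cy)} {tag}" for cx, cy, tag in subjects)


# Two girls, one on each side of the frame at mid height.
pair = subject_tags([(0.2, 0.5, "1girl"), (0.8, 0.5, "1girl")])
```

`pair` evaluates to "middle-left 1girl, middle-right 1girl".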

aesthetic tags

disgusting < 5%

very displeasing < 20%

displeasing < 35%

(no tag) < 50%

aesthetic < 65%

very aesthetic < 85%

So the idea here is, all the best images will simply become core, while all the normal images will also be core. This will diversify the core and incorporate it better into FLUX and Simulacrum, normalizing and bleeding the top aesthetic concepts to the middle, while the others will alter the core behavior.
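The aesthetic bands above can be applied with a simple threshold lookup. Below is a minimal Python sketch, assuming the score is already expressed as a percentage (the source doesn't say which scorer produces it) and reading each line as an exclusive upper bound. Scores of 85% and up get no tag here, on the reading that "the best images will simply become core"; that is an interpretation, not a stated rule.

```python
import bisect
from typing import List, Optional

# Exclusive upper bounds (percent) and the tag for each band, from the list above.
BOUNDS: List[float] = [5, 20, 35, 50, 65, 85]
TAGS: List[Optional[str]] = [
    "disgusting", "very displeasing", "displeasing", None, "aesthetic", "very aesthetic",
]


def aesthetic_tag(score_percent: float) -> Optional[str]:
    """Return the aesthetic tag for a score, or None for untagged (core) bands."""
    band = bisect.bisect_right(BOUNDS, score_percent)
    # band == len(BOUNDS) means >= 85%; assumed to stay untagged and become core.
    return TAGS[band] if band < len(TAGS) else None
```

Using `bisect_right` makes each bound exclusive, so a score of exactly 5 lands in "very displeasing" rather than "disgusting".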

temporary image pruning

too big (long or wide comics)

requires a clipper to snip them into pieces automatically

monochrome

requires an automated detailing agent

greyscale

requires a coloring agent to produce colorized images

invalid images

Completely omitting these until I can produce a process to revalidate and produce these images into something.

disgusting

Will run these through Flux img2img after the next train to improve their fidelity and quality, then train them into the next batch.

removed tags

"tagme", "bad pixiv id", "bad source", "bad id", "bad tag", "bad translation", "untranslated*", "translation*", "larger resolution available", "source request", "*commentary*", "video", "animated", "animated gif", "animated webm", "protected link", "paid reward available", "audible music", "sound", "60+fps", "artist request", "collaboration request", "original", "girl on top", "boy on top"

These are not useful tags. My tagging system has wildcard capability for tag removal, and utilities for including or removing tags.

Automated tag ordering template.

"{rating}", "{core}", "{artist}", "{characters}", "{character_count}", "{gender}", "{species}", "{series}", "{photograph}", "{substitute}", "{general}", "{unknown}", "{metadata}", "{aesthetic}"

This template is based on a complete compounded list of tags from:

safebooru, gelbooru, danbooru, e621, rule34xxx, rule34paheal, rule34us

notably included; artists, species, character names, copyrights, and so on.

with this, the formatted and utility based tag normalizer is galvanized into steel, which nearly allows full automation from download point to inclusion.

All nonmatching aliases are normalized into a singular tag.

Any tags not present in these lists are moved automatically to unknown.

All captions are automatically placed on top of all of these tags.

The size and offset tags are automatically included as secondary tag sets, which means;

middle-left 1girl, middle-right 1girl

this is two girls, which will be differentiated in the system based on utility and purpose.
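Put together, the removal list, the ordering template, alias normalization, the unknown bucket, and the caption-on-top rule could look roughly like the Python sketch below. It is a minimal illustration, not the in-house tagging software described here. It assumes a precomputed tag-to-category map (built from the booru lists) and an alias-to-canonical map, and the names `build_caption`, `is_removed`, `categories`, and `aliases` are hypothetical. Removal uses the standard library's `fnmatch.fnmatchcase`, so `*` behaves like the wildcards in the removed-tags list.

```python
from fnmatch import fnmatchcase
from typing import Dict, Iterable, List

# Removal patterns from the "removed tags" list above; '*' is a wildcard.
REMOVED = [
    "tagme", "bad pixiv id", "bad source", "bad id", "bad tag", "bad translation",
    "untranslated*", "translation*", "larger resolution available", "source request",
    "*commentary*", "video", "animated", "animated gif", "animated webm",
    "protected link", "paid reward available", "audible music", "sound", "60+fps",
    "artist request", "collaboration request", "original", "girl on top", "boy on top",
]

# Category order from the automated tag ordering template above.
ORDER = ["rating", "core", "artist", "characters", "character_count", "gender",
         "species", "series", "photograph", "substitute", "general", "unknown",
         "metadata", "aesthetic"]


def is_removed(tag: str) -> bool:
    return any(fnmatchcase(tag, pattern) for pattern in REMOVED)


def build_caption(tags: Iterable[str], categories: Dict[str, str],
                  aliases: Dict[str, str], caption: str = "") -> str:
    buckets: Dict[str, List[str]] = {name: [] for name in ORDER}
    for raw in tags:
        tag = aliases.get(raw, raw)              # collapse aliases to one canonical tag
        if is_removed(tag):
            continue
        bucket = categories.get(tag, "unknown")  # tags missing from every list -> unknown
        if bucket not in buckets:
            bucket = "unknown"
        if tag not in buckets[bucket]:           # drop duplicates created by aliasing
            buckets[bucket].append(tag)
    ordered = [tag for name in ORDER for tag in buckets[name]]
    # The natural-language caption goes on top of all the tags.
    return ", ".join(([caption] if caption else []) + ordered)
```

For example, with `miku` aliased to `hatsune miku`, the input `smile, tagme, miku, 1girl, safe` and the caption "a girl smiling" come out as "a girl smiling, safe, hatsune miku, 1girl, smile".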

I'll be using the SafeFixers Epoch40 as a base for this new train.

Safe Fixers has shown superior context awareness and control of the system, slow cooked for nearly 2 weeks on 2 4090s, and is more akin to a true progression of the Flux base in the desired direction.

The sex pack has shown the opposite: high destructive capability, bad mixing, bad lora association, and lesser context control. Since the goal is to keep context, the outcome has to shift toward the foundational "SAFE" direction, which means I'll be finetuning the safe version with the NSFW images going forward.

Key differences;

Sex pack was trained to epoch 5 using A100s in a quick cook with 15000 averaged source images, review shows the quality of the images is very hit or miss. There's monochrome that snuck in, greyscale, line drawings, some actual AI poison, long comics, and some defective images that took a while to completely pick out.

Safe fixers was trained to epoch 40 with 15000 high fidelity high score human made (mostly) anime images. The quality has shown superior context awareness and control, which can't be understated when mixing concepts.

Even at epoch 5 the sex pack was already too destructive to continue training, while the safe fixers stayed strong until epoch 40.

Learnings:

Since I worked with these two packs, I've learned a highly important element;

Image sizes cannot always be reliably bucketed on every device.

I've made software to resize images

Prune images that are too tall or too wide

corruption, validity, and sanity checks for sussing out image bombs and corrupt downloads that would otherwise go unnoticed until the training program hits the point of $100 sunk cost.

Tag order is crucial. The system itself understands tags better if they are formatted in a specific order to create a specific scene.

I've customized my in-house tagging software to ensure the tag order fits within a specific paradigm from this point forward.

I've begun tagging everything with aesthetic and quality.

Automated NSFW detections.
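The resize and too-tall/too-wide pruning steps in the learnings above can be sketched as aspect-ratio bucketing around the 1024x1024 target area used for the core. This is a minimal Python sketch of the general area-preserving bucketing idea, not the software described above. The 64-pixel step and the 2.5:1 aspect cutoff are hypothetical values, and real corruption checks (which need an image library to actually decode each file) are left out.

```python
import math
from typing import Optional, Tuple


def bucket_size(width: int, height: int, target_area: int = 1024 * 1024,
                step: int = 64, max_aspect: float = 2.5) -> Optional[Tuple[int, int]]:
    """Return a (w, h) training bucket near target_area, or None to prune the image.

    Images wider or taller than max_aspect:1 are rejected (long comics would
    need to be sliced first). The bucket keeps the source aspect ratio and each
    side is rounded down to a multiple of `step`, so with these defaults it
    stays at or below target_area.
    """
    if width <= 0 or height <= 0:
        return None  # invalid dimensions: treat like a corrupt download
    aspect = width / height
    if aspect > max_aspect or aspect < 1 / max_aspect:
        return None
    ideal_w = math.sqrt(target_area * aspect)
    ideal_h = ideal_w / aspect
    w = max(step, int(ideal_w // step) * step)
    h = max(step, int(ideal_h // step) * step)
    return w, h
```

A 1536x1024 image maps to a 1216x832 bucket, while a 4000x1000 strip is pruned.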

The Simulacrum Core 11/3/2024

The Core

Current Core:

I completely lost track of the trained sample count (1024x1024 target resolution, with bucketing). It's UPWARDS of 2 million.

UNET LR - 0.0001

CLIP_L LR - 0.000001 (mostly)

Trained using Flux1D2pro as the base model, meant to be inferenced on Flux1D and Flux1D-DeDistilled.

Current versions and model merges are available on my page.

Simulacrum V38 is unstable, and very fun to play with, but it still holds together ENOUGH to be solid enough to be useful.

Nearly half of all of the samples are purely synthetic or generated FROM another AI model, specifically oriented to include foundational information for additional detailing and structure.

COUNT: Last estimate is nearly 80,000 unique tags either touched on, trained basics of, or simply taught a connection to within CLIP_L. This has had both positive and negative outcomes, so I'll need to be monitoring this closely to make sure CLIP_L doesn't completely implode.

Some tags were taught excessive details, which will require refinement in the future and additional testing.

TAG LIST: Providing a full tag list is very difficult, as my organization isn't the best for counting everything used together like this, but I'll try to get a FULL TAG LIST and nearly exacting count uploaded today at some point, which includes all the artist names.

If you are an artist and you'd like to have your images removed from the dataset, please contact me directly via DM and I'll exclude your art from additional trainings and refinements. It probably won't cut through though since this is foundation rather than substance, so if I can't prompt it I will assume your artist name requires additional refinement and simply not include additional images.

If I sourced your art automatically and you do not want this, know I'll be making no money from this, and it's only cost me thousands of dollars so far.

CLIP_L - I have touched on or trained some basic examples into the custom CLIP_L, providing entry points for additional training and weight counts for tags that weren't directly ingested or solved during the initial CLIP_L training process. CLIP_L was fed an absolute ton of images in its core training from what I've read, but I'm having trouble finding an exact count.

Some of the notable tags retrained or turned into utility tags:

cowboy shot

on

If you've ever tried base Flux, "on all fours" puts your human on top of a horse, usually outside in a field or something of that nature.

On bed, on face, etc.: everything is based on something overlapping, being structurally linked to, or simply resting atop something else.

Using the term "on" should work more accurately; however, it has also broken some baseline T5 inference, which will likely require a new form of association in the future.

with

If you have something with something else, T5 assumes side by side every time. With booru tags, however, you can have many things with many other things: male with male, male with female, futa with male, and so on. Everything of this nature has been trained into the CLIP_L, and overlapping problems have started to crop up with T5, so that's another problem that will need to be addressed.

from

Associative directional assessments from the camera viewport have been allocated to the subject viewport, as per assessment.

This conflicts with baseline booru tagging, where FROM is often used as from "direction", which is muddy and full of confusing behavior, since it can be camera-related or utility-related with respect to the viewport.

The core itself treats this as the camera viewport, so simply treat it as though you're using the camera viewport. This is how it's been structured and trained, and this is how it will always be treated.

The conflicting associations have started to cut through, meaning you'll probably get a mix of both, and it'll need to be burned into the core again later as a full structural solidifier.

RULE OF THUMB:

Avoid linkers of this nature if possible. I messed up some of the core tags by trying to teach FROM and VIEW, since, judging by the T5 outcomes, the view from something is distinctly different from how it was originally assessed.

I will likely need to teach the T5 the difference using associative linkages, which will cause unknown damage.

T5xxl - Untouched. However, the outputs from T5 are starting to interfere with CLIP_L, so some form of assessment and convergence will more likely than not be needed for V5. Not today, though; we'll see how the conjunction and subject association tags develop over time. It may never need to be touched. CLIP_L most definitely needed to be, though. It had excessive overlapping problems, conjunction problems, linker problems, booru tag usage problems, and so on.

CORE LORAS:

Simulacrum V23 epoch 10:

This is trained using the Simulacrum V17 core, which was a heavy training on a small unet, entirely based on providing a solid foundational piece for additional training. I expanded the dims upward from 8 to 64 and fed it 15000 images specifically selected due to their attention potential for introducing more tags into the core system.
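The post doesn't say how the 8-to-64 dim expansion was done. One common way to grow a LoRA's rank without disturbing what it has already learned is sketched below in plain Python, assuming the standard LoRA form where the weight delta is (alpha / rank) * B @ A. `expand_lora`, the matrix sizes, and the alpha values are hypothetical illustrations, not part of any trainer's API or a description of how V23 was actually built.

```python
import random


def matmul(X, Y):
    """Plain-Python matrix product for list-of-lists matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def lora_delta(A, B, alpha):
    """Weight delta of a standard LoRA: (alpha / rank) * B @ A.

    A is rank x in_features, B is out_features x rank.
    """
    scale = alpha / len(A)
    return [[scale * v for v in row] for row in matmul(B, A)]


def expand_lora(A, B, alpha, new_rank, new_alpha, init_std=1e-3, seed=0):
    """Hypothetical helper: grow a LoRA to new_rank without changing its output."""
    old_rank, in_features = len(A), len(A[0])
    if new_rank < old_rank:
        raise ValueError("new_rank must be >= the current rank")
    rng = random.Random(seed)
    # Rescale B so (alpha / rank) * B @ A is unchanged under the new alpha/rank.
    factor = (alpha / old_rank) / (new_alpha / new_rank)
    # New rows of A: small random values, so the new dims can start learning.
    new_A = [row[:] for row in A] + [
        [rng.gauss(0.0, init_std) for _ in range(in_features)]
        for _ in range(new_rank - old_rank)
    ]
    # New columns of B: zeros, so the extra dims contribute nothing at first.
    pad = [0.0] * (new_rank - old_rank)
    new_B = [[v * factor for v in row] + pad for row in B]
    return new_A, new_B


# Example: a rank-8 LoRA on a tiny 3x4 weight, grown to rank 64.
rng = random.Random(42)
A8 = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(8)]
B8 = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(3)]
A64, B64 = expand_lora(A8, B8, alpha=8, new_rank=64, new_alpha=64)
```

Zero-filling only B is the important design choice: if the new parts of both A and B were zero, the gradients for the new dims would also be zero and they would never train.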

The majority of the tags don't cut through without the correct order yet, which is totally fine; the information is still retained.

The goal here is; the more associative linkages provided, the more pathways will be accessible to the tags that are already trained into the core, until eventually the majority of tag associations will become nebulous and directly meshed into the core flux system.

So far this is showing tremendous progress: with each generation, the new information both unlocks old information and provides additional information.

FemaleFixers v15 epoch 10:

This is an additional 5000 specifically formatted and sourced images of the female form, primarily SOLO focused, so the individual character can be given additional solidity, details, and tag patterns. This fixed a lot of the futanari problems that were cropping up early on, and re-associated the core's attention with the correct gender tags.

FemboyMale Fixers V1 epoch 30:

This was about 500 images specifically sourced to teach the core model the difference between a femboy, a male, and a futanari. It wasn't as successful as I had hoped, but when paired with the female fixers, it definitely provided the necessary structure to get rid of the majority of errant male genitals and traits.

Doggystyle V1 epoch 10 (sex pack 1):

This was about 1000 images intended to specifically teach the model the difference between sitting and SITTING. This relates to a multitude of angles, and it was a fairly successful first interaction detailer for teaching the more complex interactions between two individuals that aren't simply kissing, hand holding, arm holding, and so on.

This pack introduces the "from behind" / rear issues that are currently present, where you can't prevent certain elements from displaying: say "from behind" and you will often SEE the ass rather than the associated location, and this can slice a character into two halves, omit half of them entirely, or force them into a structure.

Sex Pack 2:

This one was about 18000 images specifically sourced to introduce a multitude of sex poses and some side themes to see how the model would respond to a large influx of data of this nature.

The synthetic folder name is misleading, everything in there has tagging.

So there's quite a bit of fun stuff to play with in there.

Safe Fixers v1 - Epoch 30:

Roughly 10000 images for 30 epochs, on 2x 4090s with a batch size of 2 each, so roughly 10k samples per epoch. This one alone maths out to about 300k samples.
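The sample count above can be checked with a few lines of Python. This is a sketch assuming plain data-parallel training (each GPU takes its own batch every step) with no gradient accumulation, which the post doesn't state; the step counts are derived here, not reported by the run.

```python
def training_budget(images, epochs, gpus, batch_per_gpu):
    """Samples seen and optimizer steps for a plain data-parallel run.

    Assumes every image is seen once per epoch, no gradient accumulation,
    and that each GPU processes its own batch each step.
    """
    samples = images * epochs
    effective_batch = gpus * batch_per_gpu
    steps_per_epoch = -(-images // effective_batch)  # ceiling division
    return {
        "samples": samples,
        "effective_batch": effective_batch,
        "steps_per_epoch": steps_per_epoch,
        "total_steps": steps_per_epoch * epochs,
    }


# Safe Fixers v1: ~10k images, 30 epochs, 2x 4090, batch 2 per GPU.
budget = training_budget(images=10_000, epochs=30, gpus=2, batch_per_gpu=2)
print(budget)
```

Under those assumptions this reproduces the ~300k samples and works out to an effective batch of 4, about 2,500 optimizer steps per epoch, and 75,000 steps in total.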

Why did I train this?

The model isn't supposed to be ENTIRELY NSFW, but I had gotten carried away with NSFW. I'm a primal being at my core, and I think we all are at least a little. So, I had to clean up my mess, and it worked very well.

This was information primarily sourced from safebooru: essentially the highest quality images I could find, chosen for art style and utility rather than anything related to sex, pose, or whatnot.

absurdres and high res are regular tags.

Version Roadmap:

Version 1: Core Model proof of concept

Version 2: Core Differentiation divergence

Version 3: Core expansion, growth, and refinement.

Version 4: Core mass expansion

Version 5: associative tag refinement and solidification, additional fixers

Version 6: species, characters, series, copyrights, artists, additional fixers and refinement

Version 7: settings, common themes, styles, stories, processes, additional fixers and refinement

Version 8: world building, complex situations, complex interactions, complex associative connections, finalizing

Version 9: setting the core in solid stone and steel, finalizing for full scale training for detail, quality, score, text displays, screen displays

Version 10: quantization and testing against every common quantization format, for compartmentalization and reuse on weaker devices. Full dataset release, full process writeup, full outcome assessment.

Update 1 - 9/15/2024: The Tagging Process:

I broke through. I found the route. The path to the final goal.

After an absolutely astronomical number of experiments and painful experiences with successful and failed tagging systems, I found a balance that achieves the desired outcome. Way, too, many. Way too many tags. Let me explain.

Our tagging system will be simple. We need a subject or subjects, we need their interaction with the world around them, and we need the effects the world around them is having on the subjects.

Simple enough in concept, and I've devised a simple and concise methodology for tagging images this way. I dub it the mixed triple prompt.

Prompt 1: We identify the core and most important fixated attributes of the image. The person, the apple, the chair, the floor, the wall, or whatever is entirely focused on in the image as the focal point. This can be generic or complex. Simple or highly detailed. You can include your danbooru tags here, or you can include them later. You can tag them INTO your natural language or simply omit them until after.

Prompt 2: We identify the core aspects of the background, the scene, the basic effects the scene has on the subject, and the lighting and emotional state that the image is intended to invoke in an observer. This is where you put everything in the background and everything of lesser, but still real, importance: the walls behind the subjects, the systems that line the walls with wires, the slot machines and their text. Whatever your image has goes here.

Prompt 3: The booru brick wall. We flood it: we give it everything the large tagger identifies, placed after the first two paragraphs, so all of it becomes promptable. You can also write a full third paragraph if you want to identify even more important attributes of the attributes.

This is the prompting. This is where our LLMs, such as LLaVA 1.5, will help us build our world.
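As a sketch of how the three parts could be assembled into one training caption, assuming paragraphs are separated by blank lines and tags are comma-separated (the post doesn't pin down either). `mixed_triple_prompt` is a hypothetical helper; in practice prompts 1 and 2 would come from an LLM such as LLaVA and prompt 3 from a tagger.

```python
def mixed_triple_prompt(subject, scene, booru_tags, extra=None):
    """Build a caption in the mixed triple prompt order.

    subject: prompt 1, the focal subject(s) and their key attributes
    scene: prompt 2, background, scene effects, lighting and mood
    booru_tags: prompt 3, the tagger's "brick wall", flooded in last
    extra: optional free-form paragraph of further attributes
           (placing it before the tag wall is an assumption)
    """
    # De-duplicate tags while keeping the tagger's order.
    tags = list(dict.fromkeys(t.strip() for t in booru_tags if t.strip()))
    paragraphs = [subject.strip(), scene.strip()]
    if extra:
        paragraphs.append(extra.strip())
    paragraphs.append(", ".join(tags))
    return "\n\n".join(paragraphs)


caption = mixed_triple_prompt(
    "A woman in a red coat stands at a slot machine, pulling the lever.",
    "A dim casino floor behind her, walls lined with wires and rows of slot "
    "machines with glowing text, warm neon light and a tense mood.",
    ["1girl", "red coat", "slot machine", "casino", "1girl", "neon lights"],
)
print(caption)
```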

2 million image brute force

Based on both the unsuccessful and the HIGHLY successful small-scale test outcomes, I've carefully and methodically devised an experiment that will be a full-scale test.

The entire process will be documented and stored on Hugging Face to ensure posterity and transparency throughout.

I have been building up to this plan for quite a while. It's a good combination of observed successes and failures.

The philosophy

Subject fixation, object fixation, interaction fixation, task fixation, order fixation, and careful row based organization. I wrote an article based on subject fixation recently here.

Pony and the like entirely fixate on singular human and object subjects. They are often completely inflexible when attempting to prompt anything special or out of their direct line of training data using plain English based on those subjects. SDXL inherently doesn't suffer from this.

Transparent backgrounds, carefully planned out subject ordered sentences, and complex interaction capability with open ended sentences will help solve those problems.

If the system is tagged based on the concepts of subject fixation, subject task, object fixation, and so on, and planned carefully, the entire system will simply meld into Flux rather than burn away all of the important details. Flux knows what a subject is. Flux knows what an object is. Smacking the two lightsabers together is then simply up to the request.

Flux understands layered complexity.

My tests, both small and large, show that by default Flux fixates on large portions of an image when prompted with 3D portions. I'm currently uncertain whether this is a result of manually prepared training data or some sort of emergent behavior; I've yet to find the answer.

"a ceiling of a room with a ceiling fan, a light on the ceiling to the right,"

Essentially, this prompt just sliced the image above into three. The light cannot be on the ceiling to the right, because the slice seemingly allocates it only to the floor. The room is now fixated and superimposed: the wall is the ceiling, and so is the floor, and so is the ceiling.

The more tagging rows you produce, the more image slices you produce, until it hits a threshold; I'm currently uncertain what that threshold really is. I do know I get mixed results after 3, so it's likely the rule of 3. (squints at Nikola's rule of 3)

"a ceiling of a room with a ceiling fan, a woman standing to the left and light on the floor to the right,"

The slices can continue, using commas to slice rooms and add elements in more complex fashions, until we can form a successful 3x3 with just base Flux.

"an empty portion of ceiling, a ceiling of a room with a ceiling fan and glow in the dark stars, an empty portion of ceiling with shadows on it, a woman standing to the left, a bed and a bedroom, a light on the floor to the right, a woman standing to the left is wearing clown shoes, the bed in the middle has a monster under it, the light on the floor to the right is sitting on a table,"

Multi-interaction complexity due to row based tagging.
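The 3x3 prompt above can be read as three rows of three comma-delimited clauses, where the third row attaches details to elements already placed (that reading is an interpretation of the example, not a stated rule). A minimal sketch that flattens rows of clauses into that exact comma-sliced form; `row_sliced_prompt` is hypothetical, and the 3-row warning only mirrors the observation that results get mixed past 3, not a hard limit.

```python
import warnings


def row_sliced_prompt(rows, soft_limit=3):
    """Join region clauses row by row into one comma-sliced prompt.

    rows: list of rows, each a list of clauses. Clauses keep their order,
    are joined with ", ", and the prompt ends with a trailing comma like
    the examples above.
    """
    if len(rows) > soft_limit:
        warnings.warn(f"{len(rows)} rows: mixed results were observed beyond {soft_limit}")
    clauses = [c.strip() for row in rows for c in row if c.strip()]
    return ", ".join(clauses) + ","


prompt = row_sliced_prompt([
    ["an empty portion of ceiling",
     "a ceiling of a room with a ceiling fan and glow in the dark stars",
     "an empty portion of ceiling with shadows on it"],
    ["a woman standing to the left", "a bed and a bedroom",
     "a light on the floor to the right"],
    ["a woman standing to the left is wearing clown shoes",
     "the bed in the middle has a monster under it",
     "the light on the floor to the right is sitting on a table"],
])
print(prompt)
```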

Fixation applies to both inverting and repurposing subjects as shown here.

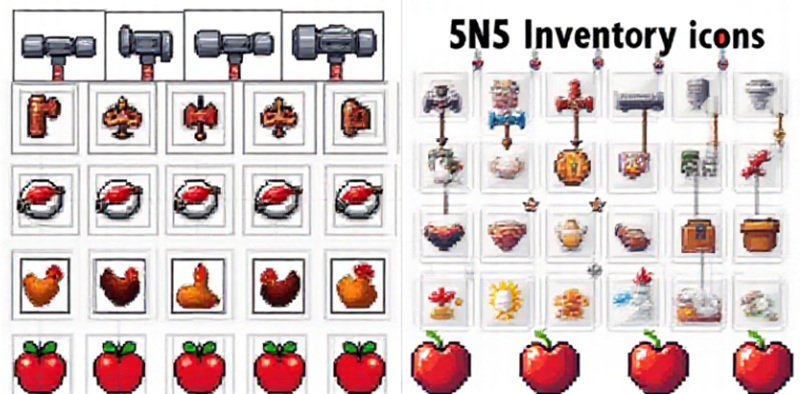

Small-scale tests have shown that Flux, when prompted with area conditioning, responds fairly effectively even without being trained for the task. My small-scale lora training experiments with sprite sheets showed a lackluster response beyond a 3x3 grid without area prompting, and a much improved capability up to 5x5 with area prompting using rows.

The 5x5 without area prompting and no lora:

Instead of staying in rows, the icons start following a circular snail-shell pattern, bleeding into other rows and columns.

After the area conditioning is applied with the correct sequential tags and the purpose of a row, the model responds and produces the row of icons using the conditioned area.

I'm not even using a lora for that. That's just flux and a bit of area prompting.

The outcome is even stronger when using masks. So much so that it doesn't even need a lora, just a proper set of ComfyUI nodes to generate entire sprite sheets with the correct prompts.
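For row-based area prompting, the first step is just computing the pixel boxes. A minimal sketch, assuming a 1024x1024 canvas and cut positions snapped to multiples of 8 (latent sizes are usually multiples of 8). In ComfyUI the boxes could be fed to an area-conditioning node such as Conditioning (Set Area), which takes width, height, x and y; that wiring, `grid_areas`, and the icon prompts are assumptions for illustration, not the workflow used here.

```python
def grid_areas(width, height, rows, cols, snap=8):
    """Split an image into rows x cols rectangles for area conditioning.

    Returns (x, y, w, h) pixel boxes in reading order (top-left first).
    Cut positions are snapped to multiples of `snap`; cells can differ
    slightly in size, but they always tile the full image exactly.
    """
    def cuts(total, n):
        return [round(total * i / n / snap) * snap for i in range(n)] + [total]

    xs, ys = cuts(width, cols), cuts(height, rows)
    return [
        (xs[c], ys[r], xs[c + 1] - xs[c], ys[r + 1] - ys[r])
        for r in range(rows)
        for c in range(cols)
    ]


# Five full-width row strips for a 5x5 sprite sheet, one prompt per row.
row_prompts = [f"a row of five {item} icons" for item in
               ("sword", "shield", "potion", "ring", "scroll")]
for (x, y, w, h), text in zip(grid_areas(1024, 1024, rows=5, cols=1), row_prompts):
    print(f"x={x} y={y} w={w} h={h}: {text}")
```

`grid_areas(1024, 1024, 5, 5)` gives per-cell boxes instead, if individual icons need their own prompts.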

Sequential tasks provide sequential purpose, and Flux understands this along with the connection between those tasks and the overarching image structure.

Preliminary experiments showed that living-subject and object fixation is reversible: objects can be superimposed over a living subject through transformation, and subjects can be manipulated and altered based on parameters.

This includes entire environments like rooms, the placement of things within those rooms, objects within the lines of sight of those placed objects, and even photographs of objects within those lines of sight. There's a bit of a depth limit, but beyond a point it's hard to get creative enough with words to actually make it do what you want.

Suffice it to say, it does a great job at it.

Text responsiveness.

All images with any text can in fact be used in training, but the images and their text must be carefully controlled and documented. Flux and T5 will introduce their own informational emphasis on them based on the requested prompt.

With region and prompt control trained into a core model, full story based comics will be fully possible to inpaint and generate using area conditioning.

Planning

As a more complex training and a more complex solidification of the model's potential, I plan to generate 1 million images using multiple runpod pods and a specifically formatted wildcard setup using Pony Autism + Consistency V4, Zovya Everclear V2, Pony Realism + Pony Realism Inpaint, Yiffy v52, and Ebara.

The tagging methodology:

1,000,000 total synthetic images.

1,000,000 / 5 = 200,000 images per model.

200k / 9 scores = 22,222 images per score.

20k images per core tag.

We have a ratio of 20k tags to play with. Everything must revolve around that 20k tag ratio for our formatted synthetic data.
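The split above, written out as a quota table. This assumes the nine score levels are score_1 through score_9 (the post only says "9 scores"); integer division leaves a handful of images unassigned.

```python
TOTAL_IMAGES = 1_000_000
MODELS = [
    "Pony Autism + Consistency V4",
    "Zovya Everclear V2",
    "Pony Realism + Pony Realism Inpaint",
    "Yiffy v52",
    "Ebara",
]
SCORE_LEVELS = [f"score_{n}" for n in range(1, 10)]  # assumed score_1..score_9

per_model = TOTAL_IMAGES // len(MODELS)        # images per generator model
per_score = per_model // len(SCORE_LEVELS)     # images per model per score
leftover = per_model - per_score * len(SCORE_LEVELS)

# One generation quota per (model, score) cell.
plan = {(m, s): per_score for m in MODELS for s in SCORE_LEVELS}

print(per_model, per_score, leftover, sum(plan.values()))
```

That gives 200,000 per model and 22,222 per score, with 2 images per model (10 overall) left over; the ~20k per-core-tag budget looks like the 22,222 figure rounded down.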

I require additional research on regularization capabilities and their impact on large-scale training before I can provide an exact count of the required regularization images and tags.

Each chosen model and their purpose:

Pony Autism + Consistency V4:

The purpose of this model will be to generate poses with high complexity interactions. It will be the crux and the core of the entire engine's pose system.

Everclear v2 + Pony Realism Inpaint:

This generates high-fidelity realism with a very distinctive style. The model is similar to the base Flux model in a lot of aspects, so its outputs will be very useful for clear and concise clothing, colorations, and any number of other deviations based on high quality realism.

Yiffy:

The entire purpose of this one will be to introduce the more difficult to prompt and yet important things to make sure a model has some variety. Cat girls, anthro, vampires, skin colors, nail types, tongue types, and so on. Essentially this will be the "odd" details section.

Ebara:

Ebara's job is to produce and solidify the stylistic intent of anime, with the more stylish backgrounds and interesting elements associated with it. Its images will represent score 5-9 2D anime with backgrounds.

Each model will produce an equal number of images using its own score gradients. Yiffy, however, does not have a score system; it's manual. I'll need to devise a score system to prompt Yiffy properly, as it's a bit finicky, but Yiffy 52 should produce quality comparable to the others with a little tweaking.

I have yet to find a good candidate for full 3d capability.

On a single 4090 I can generate batches of 4 1024x1024 images per minute with background removal, triple loopback for context and complexity, segmentation, and ADetailer fixes.

A single 4090 should be capable of generating roughly between 7k and 11k images per 24 hours, depending on hiccups and problems.

4090s are about 70 cents an hour on runpod, which is about 16 dollars a day, give or take. The total inference to generate a million images should be around $2200, give or take. I'm going to estimate over $4000 just to be on the safe side, to cover failures, mishaps, and problems.
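The compute estimate can be bracketed from the post's own figures (7k to 11k images per 4090 per day, about $0.70 an hour). A sketch assuming each pod is one GPU billed around the clock; the 10-pod count is a hypothetical example.

```python
def generation_cost(total_images, images_per_day, usd_per_hour, pods=1):
    """GPU-days, wall-clock days and rental cost for a generation run.

    Assumes each pod is one GPU billed around the clock and that pods
    run in parallel with the same throughput.
    """
    gpu_days = total_images / images_per_day
    cost = gpu_days * 24 * usd_per_hour
    return gpu_days, gpu_days / pods, cost


# Figures from the post: 7k-11k images per 4090 per day at ~$0.70/hour.
for per_day in (7_000, 11_000):
    gpu_days, wall_days, cost = generation_cost(1_000_000, per_day, 0.70, pods=10)
    print(f"{per_day:,}/day: {gpu_days:.0f} GPU-days, "
          f"~{wall_days:.0f} days on 10 pods, ${cost:,.0f}")
```

Under these assumptions the raw compute for a million images lands between roughly $1,500 and $2,400, which brackets the ~$2200 estimate; adding pods shortens the run but doesn't change the total cost.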

Once an automated setup using my headless ComfyUI workflows has been tested and implemented, it should be fully capable of running on any number of pods.

Each pod will be assigned a slice of the master tag generation list, based on the currently running pods and their current generation goals.

A master database will contain the information needed to allocate the majority of the tag sequence, based on the last generated image stored and the images currently allocated to the other pods.

When the pods finish executing they will give the all clear sign to the master pod which will delegate their roles or terminate the pod depending on need.
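The allocation loop described above can be sketched as a small in-memory queue. Everything here is hypothetical: `MasterQueue` stands in for the master database, slices are index ranges into the master tag list, and a `None` reply is the master's signal to terminate the pod.

```python
from dataclasses import dataclass, field


@dataclass
class MasterQueue:
    """Hypothetical in-memory stand-in for the master database.

    Hands out contiguous slices of the master tag-generation list and
    tracks which pod holds which slice, so no two pods generate the same
    entries and unfinished work can be reassigned.
    """
    total_jobs: int
    chunk_size: int
    next_index: int = 0
    assigned: dict = field(default_factory=dict)  # pod_id -> (start, stop)
    finished: list = field(default_factory=list)  # completed (start, stop)
    returned: list = field(default_factory=list)  # slices released by lost pods

    def request(self, pod_id):
        """Give a pod its next slice, or None if the pod should terminate."""
        if self.returned:
            span = self.returned.pop()
        elif self.next_index < self.total_jobs:
            stop = min(self.next_index + self.chunk_size, self.total_jobs)
            span = (self.next_index, stop)
            self.next_index = stop
        else:
            return None
        self.assigned[pod_id] = span
        return span

    def all_clear(self, pod_id):
        """Pod reports its slice is done; returns its next slice or None."""
        self.finished.append(self.assigned.pop(pod_id))
        return self.request(pod_id)

    def lost(self, pod_id):
        """Pod died mid-slice: put its slice back in the queue."""
        self.returned.append(self.assigned.pop(pod_id))


queue = MasterQueue(total_jobs=25, chunk_size=10)
print(queue.request("pod-a"))    # (0, 10)
print(queue.request("pod-b"))    # (10, 20)
queue.lost("pod-b")              # pod-b crashes; its slice goes back
print(queue.all_clear("pod-a"))  # (10, 20): pod-a picks up pod-b's slice
```

A real deployment would keep this state in the shared database rather than in one process, so the master can recover it after a restart.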

I'll be carefully testing the two core released Flux models for a candidate. FLUX Dev has shown inflexibility in a lot of situations, so I need to know whether I can get better results from its sister before I begin this.

ADetailer will be run on hands, face, and clothing.

Segmentation will be used to remove backgrounds, leaving them transparent, for a percentage of all of the images.

WD14 tagging + the base wildcard tagging will be applied.

The additional 1 million images will be sourced from the highest end data in the gelbooru, danbooru, and rule34 publicly available datasets accordingly.

ALL TAGS will be fragmented, wildcarded, and transformed into sentence fragments using an LLM finetuned for this particular task. Score tags will be applied after generation, calculated using one of the many image aesthetic detection AIs around.

Tag order will follow the regions of an image from top to bottom. The first iteration will be a two-sentence pass, where each image is sliced in half into two comma-delimited rows of complete sentences placed above the clump of baseline tags.

This is a sentence here., This is also a sentence here.
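A minimal sketch of that caption layout, assuming one sentence per horizontal slice listed top to bottom and the baseline tags on their own line below (the post only says "above the clump of baseline tags"). `region_caption` and the example scene are hypothetical; the first iteration would pass two sentences, later ones could pass more.

```python
def region_caption(row_sentences, tags):
    """Caption with one sentence per horizontal slice, top to bottom.

    Each sentence is forced to end with a period and the rows are joined
    with ", ", reproducing the "This is a sentence here., ..." pattern.
    The baseline tags follow on their own line (an assumption).
    """
    rows = [s.strip().rstrip(".") + "." for s in row_sentences]
    return ", ".join(rows) + "\n" + ", ".join(tags)


caption = region_caption(
    ["A night sky full of stars above a city skyline",
     "A girl sits on a rooftop ledge with her legs dangling"],
    ["1girl", "sitting", "rooftop", "night sky", "city"],
)
print(caption)
```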

All images will be classified with an NSFW rating of 1-10 from the standard NSFW detection software.

rating_safe is between NSFW 0-5

rating_questionable is between NSFW 6-8

rating_explicit is between NSFW 9-10

All images will be issued a score tag based on their user rating value. The higher the rating, the more important their score tag will be.
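Both tagging rules can be sketched in a few lines. The rating buckets follow the thresholds listed above, assuming integer NSFW scores. For score tags the post doesn't say how user ratings become score levels; rank-based (quantile) buckets are one assumption among several, and fixed rating thresholds would work too. `rating_tag` and `score_tags` are hypothetical helpers.

```python
def rating_tag(nsfw_score):
    """Map an NSFW score on the 0-10 scale above to a rating tag.

    Integer scores are assumed; non-integers fall into the next bucket up
    (e.g. 5.5 -> rating_questionable).
    """
    if not 0 <= nsfw_score <= 10:
        raise ValueError(f"NSFW score out of range: {nsfw_score}")
    if nsfw_score <= 5:
        return "rating_safe"
    if nsfw_score <= 8:
        return "rating_questionable"
    return "rating_explicit"


def score_tags(user_ratings, levels=9):
    """Give each image a score tag from its rank by user rating.

    Images are sorted by rating and split into `levels` roughly equal
    buckets, so the highest-rated images get the highest score tag.
    Ties keep their input order (Python's sort is stable).
    """
    n = len(user_ratings)
    order = sorted(range(n), key=lambda i: user_ratings[i])
    tags = [""] * n
    for rank, i in enumerate(order):
        tags[i] = f"score_{rank * levels // n + 1}"
    return tags


print([rating_tag(s) for s in (0, 5, 6, 8, 9, 10)])
print(score_tags([12, 340, 57, 3, 1200, 88, 15, 640, 2], levels=9))
```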

I'll manually prune the top 10,000 or so to be sure it's not an absolute diaper load of a mess. After that it should sort itself out.

This will essentially be its own divergent form of FLUX that I'm codenaming "FLUX BURNED" for now.

The training will be an iterative burn, starting from a high learning rate on the lower score images and decreasing the learning rate for every finetuning phase after.
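The post doesn't give the learning rates or the decay rule, so this is only one possible shape for such a schedule: phases ordered from the lowest score level upward, with geometric decay between phases. `burn_schedule` is hypothetical, and base_lr=1e-4 and decay=0.7 are placeholder values, not recommendations.

```python
def burn_schedule(score_levels, base_lr, decay):
    """Hypothetical phase schedule for the iterative burn described above.

    Phases run from the lowest score level upward; each later phase uses
    the previous learning rate multiplied by `decay` (0 < decay < 1).
    """
    if not 0 < decay < 1:
        raise ValueError("decay must be between 0 and 1 for a decreasing schedule")
    return [(level, base_lr * decay ** phase)
            for phase, level in enumerate(sorted(score_levels))]


for level, lr in burn_schedule(range(1, 10), base_lr=1e-4, decay=0.7):
    print(f"score_{level}: lr={lr:.2e}")
```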

I'll definitely run smaller scale tests to ensure this isn't a huge waste of time and money, so smaller 50k tests will be run first as feelers before a full bulk training. See how Flux responds, then iteratively build up from there.

I'll adjust the learning rates based on the results for the first 5 score values, to determine whether the model is sufficiently prepared for scores 6-10.

The important and divergent lessons learned along the way will be carefully logged and shared throughout the process.

This model will have a special emphasis on screen control. The entire model is going to be based on grid and section control, allowing for the most complex interactions possible in the most complex scenes.

Training this with baseline images will likely be substantially less reliable for realistic people and substantially more reliable for anime and 3d based characters.

Prompting will be easier and more similar to Pony, while still allowing a full LLM-integrated Q&A section.

This requires additional research, testing, and training of a few other models before this can begin so it'll be a while. I'll be working on it though.