Following on from my last post where I went into my training settings, I wanted to delve deeper into captioning images and how it affects the results in your Lora.

Flux is still in its early days (how is it less than a month since we were given this gift?!) and getting to grips with training a new model is a learning process for everyone. What worked in SD1.5 and XL might not work in Flux, and some things might work better. One topic with a lot of discussion and confusion around it is how people should caption their datasets.

Captioning data

Generally there are four different approaches people use for this:

No captions

Just train on the raw images without any caption file describing what's in the image.

Tags

Also known as "booru" tags, after those found on the Danbooru image sharing site. These worked perfectly for SD1.5, Pony, and to a lesser extent SDXL, but because newer models are trained on more natural language descriptions, they don't tend to work so well in models like Flux, SD3, or PixArt Sigma. An example of tags would be "1girl, long hair, purple hair, blue eyes, mischievous smile, bangs, summer dress, grassy field, sky".

Basic captions

A very simple description of the image. This might be something like "a man" or "two people sitting".

Natural language

These are highly descriptive captions about everything in the image. You write them as a full sentence (or multiple sentences). So for the image below, I might write something like:

split_panel_style showing four different anime women. In the top-left is a woman with red hair in a ponytail and red eyes, wearing a light-brown jacket over a black shirt. In the top-right is a woman with blonde hair and yellow eyes, wearing a black and purple top over a white shirt. In the bottom-left is a woman with long brown hair with braided bangs and blue eyes, wearing a loose black v-neck top. In the bottom-right is a woman with short black hair and yellow eyes, laughing, wearing a sleeveless black top with yellow trim in the centre.

In most cases you'll also use a "trigger word" to activate the Lora and bring your subject or style into your image. Some style Loras don't use a trigger word and activate automatically when you apply them, but a trigger word for a style can help reinforce the style in the image. It also helps when testing your Lora, so you can check how much your training has affected the base model.

Notice above that you still include the trigger word for your Lora even in natural language captions. In the example above I might be training a Lora to create images in four panels, and I want to use the trigger word "split_panel_style" to activate it.
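Because the trigger word needs to appear in every caption (even in an uncaptioned style run, where the caption is just the trigger word), it can be worth checking the dataset before training. Below is a minimal Python sketch, assuming the common convention that each image has a same-named `.txt` caption file beside it (e.g. `001.png` and `001.txt`); check your trainer's documentation for how it actually reads captions. `with_trigger` and `fix_dataset` are hypothetical helpers, not part of any training tool.

```python
import re
from pathlib import Path

IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp"}

def with_trigger(caption: str, trigger: str) -> str:
    """Return the caption with the trigger word guaranteed to be present.

    If the trigger already appears as a whole word it is left alone;
    otherwise it is prepended. An empty caption becomes just the trigger.
    """
    caption = caption.strip()
    if re.search(rf"\b{re.escape(trigger)}\b", caption):
        return caption
    return f"{trigger}, {caption}" if caption else trigger

def fix_dataset(dataset_dir: str, trigger: str) -> list[str]:
    """Apply with_trigger to each image's sidecar .txt caption (assumed layout).

    Missing caption files are created containing only the trigger word.
    Returns the names of the caption files that were written.
    """
    written = []
    for image in sorted(Path(dataset_dir).iterdir()):
        if image.suffix.lower() not in IMAGE_EXTS:
            continue
        caption_file = image.with_suffix(".txt")
        old = caption_file.read_text(encoding="utf-8") if caption_file.exists() else ""
        new = with_trigger(old, trigger)
        if new != old.strip():
            caption_file.write_text(new, encoding="utf-8")
            written.append(caption_file.name)
    return written
```

The whole-word check means a caption like "split_panel_style showing..." passes as-is, while a near miss such as "fma_style2" still gets the real trigger prepended.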

What does a caption even do?

When training a model or Lora, captions tell the training run what's in your images. This helps the training code understand what it's looking at and which parts of the image are the important things it should be learning about. Generally speaking, you caption the things you don't want the Lora to learn. So if I have a screenshot of a character from a show with objects around them and in the background, I probably don't want it learning about those other things. In that case I'd describe them in the caption, and use a trigger word to identify the character I want the training to learn about.

For example:

aerith is shown in a side profile with a contemplative expression, her head slightly tilted downward. The background features a colorful mural with flowers and abstract shapes, adding a lively yet contrasting element to her thoughtful demeanor.

I have my trigger word ("aerith"), and then I describe that she's in side profile view (I don't want all my generated images to be shot that way), her expression (I don't want her to always be frowny), and what is in the background (I don't want her to always be standing in front of a mural). What I don't put in the caption is anything about her hair or outfit, as I want it to learn those things. Those are the things that will be associated with the trigger word.
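One way to keep this discipline across a whole dataset is to note what's in each image and only write down the attributes that should stay changeable. A minimal Python sketch of that idea; the attribute keys, the `LEARNED` set and `caption_for` are all hypothetical, and the example values are illustrative:

```python
# Attributes we want the Lora to tie to the trigger word, so they are
# deliberately left out of every caption (a hypothetical choice for a character).
LEARNED = frozenset({"hair", "outfit"})

def caption_for(trigger: str, observations: dict[str, str],
                learned: frozenset[str] = LEARNED) -> str:
    """Build a caption that describes only what should stay variable.

    Anything in `learned` is omitted, leaving the trigger word as the
    only thing in the caption the model can attribute it to.
    """
    parts = [text for key, text in observations.items() if key not in learned]
    return (f"{trigger} " + ", ".join(parts)) if parts else trigger

# Illustrative observations for one screenshot.
observations = {
    "pose": "is shown in a side profile",
    "expression": "with a contemplative expression",
    "hair": "braided brown hair",
    "outfit": "pink dress",
    "background": "in front of a colorful mural",
}
print(caption_for("aerith", observations))
# aerith is shown in a side profile, with a contemplative expression, in front of a colorful mural
```

Keeping the learned list explicit makes the trade-off visible: removing "outfit" from it would mean describing the outfit in captions, so it would no longer be baked into the trigger word.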

Style, Character, or Object

How you caption your data also depends on the type of Lora you want to make. I'll try and follow up with another in-depth post covering this, but if you're training on a character or an object, you almost definitely want to be captioning your files in some way for the reasons I went into above. You want it to learn the character, but not everything else in the picture.

What I want to focus on here is just style Loras. These are a little more nuanced, as learning a style is kind of like learning everything about an image and then applying it in new situations. It's also the one I've seen the most disagreement around - should you caption images for a style Lora or not?

My general experience so far has been that, no, you don't need to caption images when training a style for Flux (although I do use a trigger word). Flux seems remarkably flexible when trained on raw images in a consistent style. As long as you have a diverse set of images in that style, I've been getting good results.

But I wanted to do some concrete testing on this and see exactly what difference it makes. Maybe my assumptions are incorrect. The only way to tell is with science!

Example dataset - Fullmetal Alchemist Style

I wanted an example style which would be recognisable, so that it would be easier to tell which training settings were working better. For this I chose Fullmetal Alchemist (the original series, not Brotherhood). I gathered 20 screenshots of different characters and settings - you can find all the training images attached to this post.

Example captions for the image above:

No captions

fma_style

Basic captions

fma_style, boy, headshot

Tags

fma_style, 1boy, headshot, blonde hair, yellow eyes, determined, grinning, black collar, red coat

Natural language

fma_style, A boy with blonde hair in bangs and tied back in a braid gives a smug expression. He has yellow eyes, and is wearing a red jacket over a black shirt with high collar and white trim. There is a beige wall in the background.

I created similar captions for each image, and then did a training run for each of them using the same settings (full config attached). I've set it to run to 4000 steps, but I expect it to converge on the style at about 2500-3000 steps. I'm pushing it a little beyond that just in case. I settled on a learning rate of 1.7e-4 after some initial testing, as 1.5e-4 wasn't converging quickly enough for my liking.

Learning rate: 1.7e-4

Steps: 4000

Rank: 16

Alpha: 16

Scheduler: flowmatch

Optimiser: adamw8bit

Batch size: 1

And so begins a couple of days of training. Each Lora took about 3.5 hours to train, plus a little extra to generate the samples. Annoyingly, I can only set a single training run going overnight and queue up another the next morning. I want to put together an end-to-end automated process (dataset collection, captioning, config preparation, training run and output Lora file), so watch this space and follow me to get notified, as I plan to write another article on that later.
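The settings above also pin down a few numbers that help when reading the results. A quick worked sketch in Python; the dictionary keys are illustrative rather than any trainer's actual config schema, and the `alpha / rank` scaling is the standard LoRA convention, not something stated in this post:

```python
# Settings from the comparison runs (key names are illustrative, not a real config schema).
settings = {
    "learning_rate": 1.7e-4,
    "steps": 4000,
    "rank": 16,
    "alpha": 16,
    "scheduler": "flowmatch",
    "optimizer": "adamw8bit",
    "batch_size": 1,
}
NUM_IMAGES = 20       # screenshots in the FMA dataset
HOURS_PER_RUN = 3.5   # approximate training time per Lora, excluding sample generation

def derived(cfg: dict, num_images: int, hours: float) -> dict:
    """Back-of-the-envelope numbers implied by a training config."""
    return {
        # Each step consumes batch_size images, so total image views / dataset size = epochs.
        "epochs": cfg["steps"] * cfg["batch_size"] / num_images,
        # Standard LoRA scales the low-rank update by alpha / rank.
        "lora_scale": cfg["alpha"] / cfg["rank"],
        "seconds_per_step": hours * 3600 / cfg["steps"],
    }

def epochs_at(step: int, cfg: dict, num_images: int) -> float:
    """How many times each image has been seen by a given step."""
    return step * cfg["batch_size"] / num_images

print(derived(settings, NUM_IMAGES, HOURS_PER_RUN))
# {'epochs': 200.0, 'lora_scale': 1.0, 'seconds_per_step': 3.15}
print(epochs_at(2500, settings, NUM_IMAGES))  # 125.0
```

So by the 2500-3000 steps where the style was expected to settle, each screenshot had been seen roughly 125-150 times.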

Results

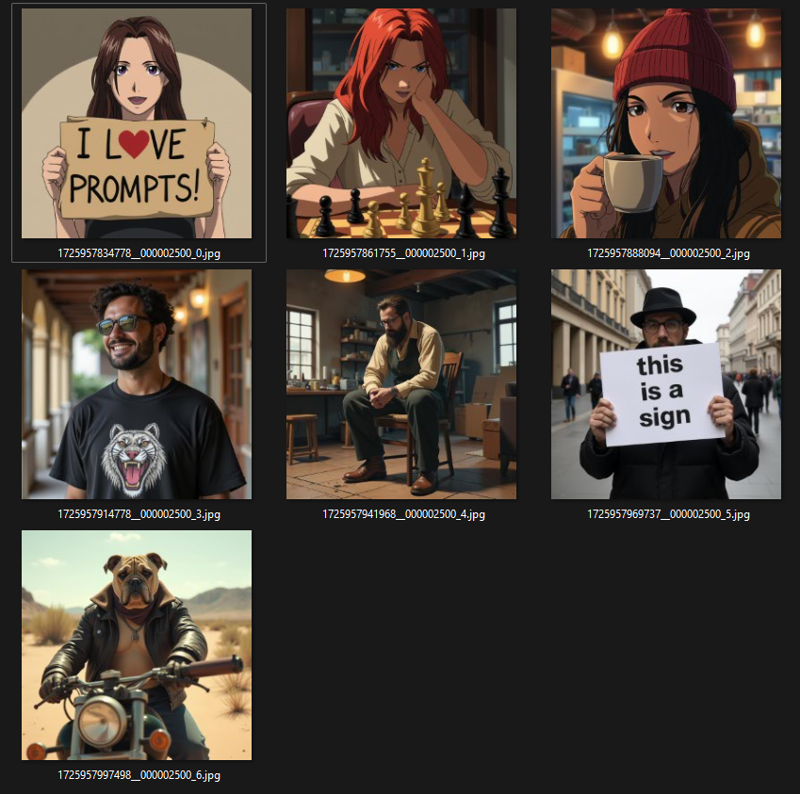

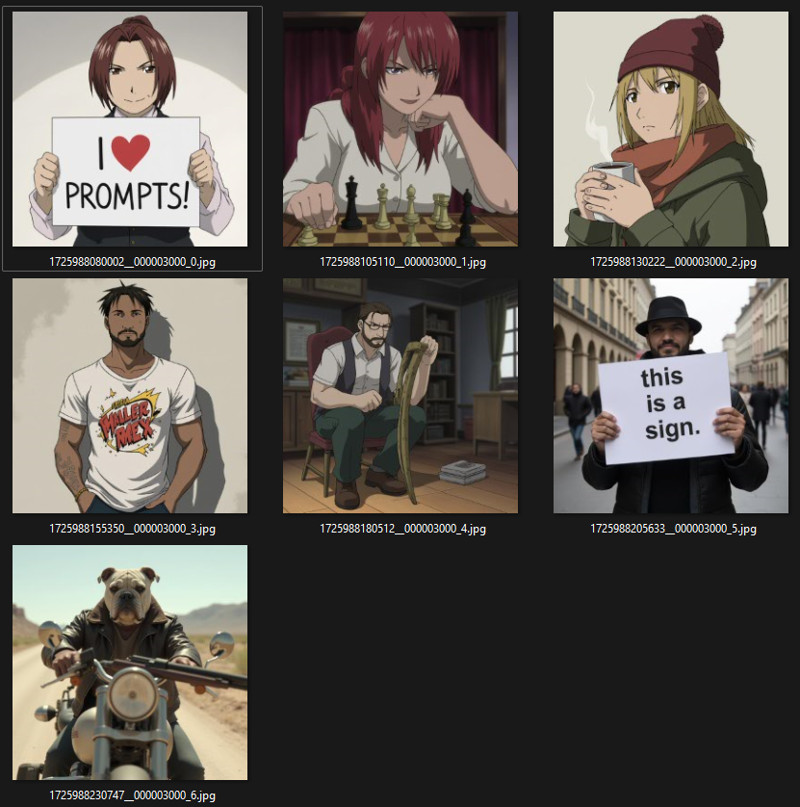

I used the same sample prompts from my previous post to gauge how well the styles were coming along.

- "fma_style, woman holding a sign that says 'I LOVE PROMPTS!'"

- "fma_style, woman with red hair, playing chess"

- "fma_style, a woman holding a coffee cup, in a beanie"

- "fma_style, a man showing off his cool new t shirt "

- "fma_style, a hipster man with a beard, building a chair"

- "a man holding a sign that says, 'this is a sign'"

- "a bulldog, in a post apocalyptic world, with a shotgun, in a leather jacket, in a desert, with a motorcycle"

This checks a few things: mostly text comprehension and style bleeding when not using the trigger word. The "showing off a cool t-shirt" prompt in particular is tricky to get right; if that falls into place, we're usually good to go. Note that the last two images should not be in the style I'm training. They're there to test whether the style is bleeding into the base model when it shouldn't be.
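Since the last two prompts deliberately leave out the trigger word, the prompt list doubles as a bleed test. A small Python sketch that separates the two groups so the control images can be checked for unwanted style; `split_prompts` is a hypothetical helper:

```python
import re

TRIGGER = "fma_style"

SAMPLE_PROMPTS = [
    "fma_style, woman holding a sign that says 'I LOVE PROMPTS!'",
    "fma_style, woman with red hair, playing chess",
    "fma_style, a woman holding a coffee cup, in a beanie",
    "fma_style, a man showing off his cool new t shirt",
    "fma_style, a hipster man with a beard, building a chair",
    "a man holding a sign that says, 'this is a sign'",
    "a bulldog, in a post apocalyptic world, with a shotgun, in a leather jacket, in a desert, with a motorcycle",
]

def split_prompts(prompts: list[str], trigger: str) -> tuple[list[str], list[str]]:
    """Split prompts into (styled, control).

    Styled prompts contain the trigger word and should show the trained style;
    control prompts omit it, so any trained style appearing there indicates bleed.
    """
    pattern = re.compile(rf"\b{re.escape(trigger)}\b")
    styled = [p for p in prompts if pattern.search(p)]
    control = [p for p in prompts if not pattern.search(p)]
    return styled, control

styled, control = split_prompts(SAMPLE_PROMPTS, TRIGGER)
print(len(styled), len(control))  # 5 2
```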

No captions:

This training run stabilised around 2500 steps. After that it started reverting to a more photorealistic style before returning to the trained style by the end at 4000 steps. I was having issues with male subjects, which might be due to the training data being more heavily weighted towards women. The style itself on the female subjects was looking good, though. If I were doing this in my normal process I'd probably try upping the learning rate to about 2e-4 and re-running it to get a better activation rate.

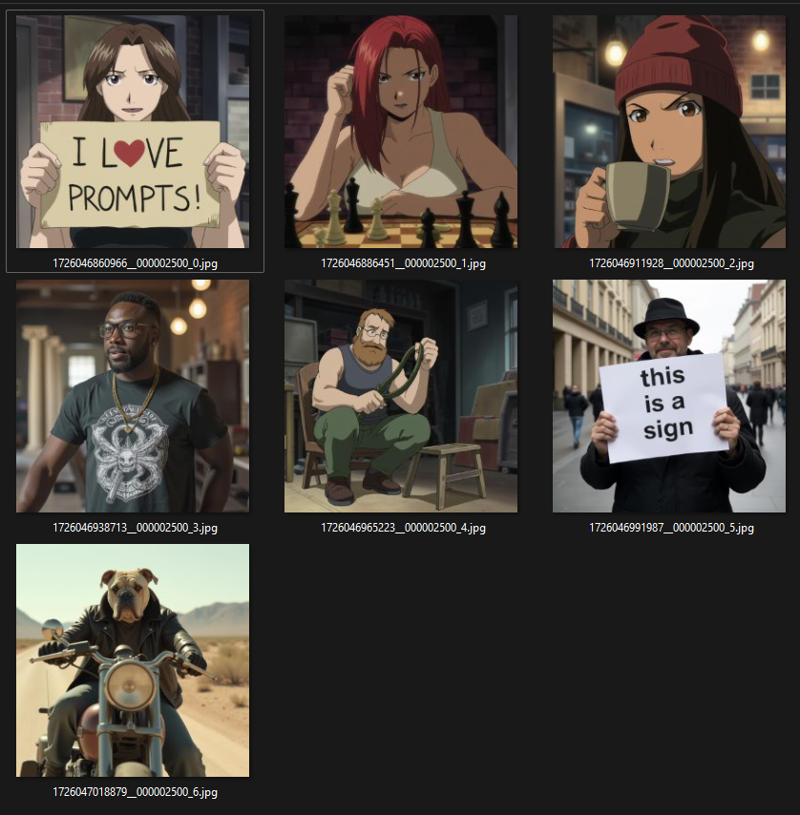

Basic captions:

Slightly better handling of one of the male subjects and a more consistent style. It still had issues activating on all images, as before, and it's definitely got a bias towards brick walls in the background, which it's picking up from the training data. Maybe captioning the subject has focused its attention more on the background?

Tags:

About the same style consistency as before, but it's still struggling to activate across all the images it should. It also has more issues with anatomy (especially hands) than the previous runs. It has lost the brick wall backgrounds though, which is a good indicator of flexibility in the model.

Poor t-shirt guy, he just can't catch a break.

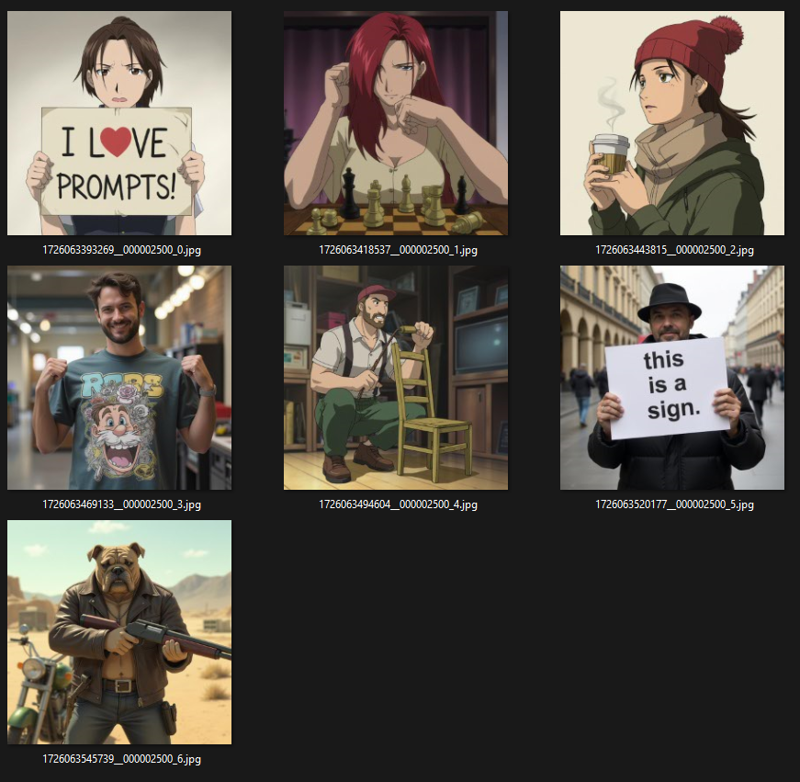

Natural language captions:

Much quicker to start showing the style: even at 500 steps it was already moving towards an anime style across all the relevant images, though it had issues with deformed hands early on. It was slightly quicker to stabilise (2000 steps), and the results activated more consistently on male subjects too. The likeness at 3500 steps was slightly better, but at that point it started having issues with hands and legs and overfitting the training data. I am impressed, though, that the test image of the dog on the motorcycle kept its realistic style over the whole training run. Usually this gets some style bleed into it.

Summary

You can find the final Lora here, which was trained on natural language captions.

This was a productive exercise. You can definitely get a good style without worrying about captions (something I'd already seen in previous training attempts). You may need some trial and error with the learning rate (again, I'd already experienced this on different training runs). So it's good to confirm all of this. If you don't want to worry about captions and just fire and forget a style, then this is definitely a viable option.

However, there is a clear improvement when captioning data in natural language. Not so much in how closely the style matches your expected results, but definitely in how well it can be applied to things outside the training data. You still need to have a diverse dataset with a good range of image subjects, angles, etc., but where my uncaptioned run struggled more with male subjects, the natural language captioned run coped much better.

I picked up on this last time when talking about my Flat Colour Anime Lora - the dataset is leaning heavily towards waifus, which is why captioning the data gave me better consistency on subjects outside of that. I didn't fully understand why at the time, but having gone through this process it's become clearer.

So I'm adding an amendment to my recommendations from my previous post.

It's still true you can get good results without captioning - I've seen this many times on training runs I've done. But if you can caption the data well in natural language, then it will help with consistency and with subjects outside your dataset. Captioning images can take a lot of time to do well (and you should do it well - bad captions can tank a training run fast), but if you take the time to do it properly then you can increase the quality of your output.

How much it improves your output is going to depend heavily on the style you're training, and you'll need to judge whether it's worth it to you. But from now on I'm going to start captioning all my datasets in natural language, even if it takes a little more time.

What can I say - I have a lot of time to kill while waiting for training runs to complete 😆

Author's note

I don't paywall any of my content, but if you found this useful and you feel like supporting me then any donations are very much appreciated 😊 I'm currently working towards building a dedicated ComfyUI training/render rig. Everything goes back into helping me learn and produce more.

https://ko-fi.com/todayisagooddaytoai

❤️

CrasH