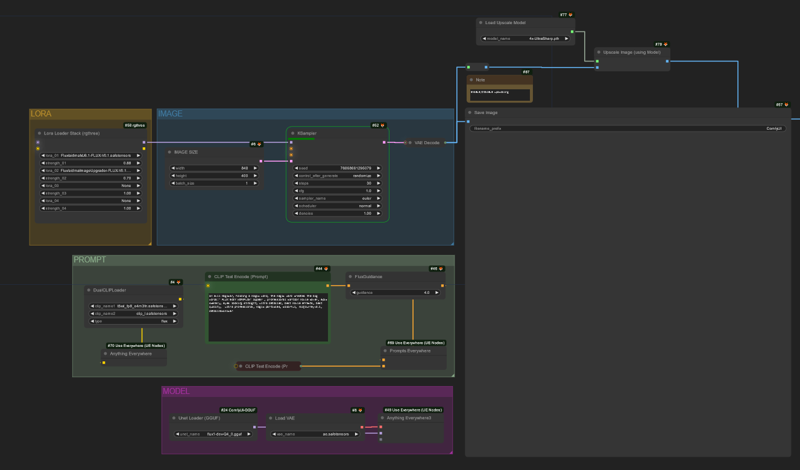

Effective Workflow for GGUF Variants with LoRA and Upscaling

Flux was released some time ago, but a working workflow for the GGUF variants is still hard to find. I’m sharing this simple yet effective workflow, which supports both LoRA and upscaling.

For the FLUX-schnell model, ensure that the FluxGuidance Node is disabled.

Installation:

Use ComfyUI-Manager to install missing nodes: ComfyUI-Manager

Models used in workflow:

FLUX GGUF: flux-gguf

-> Place in: /ComfyUI/models/unet

FLUX Text Encoders: flux_text_encoders

-> Download

-> Place in: /ComfyUI/models/clip

Upscaling Model: 4x-Ultrasharp

-> Place in: /ComfyUI/models/upscale_models/

LoRA Models:

-> Place in: /ComfyUI/models/loras/
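If the workflow loads but a loader node shows no models, it can help to check that the files landed in the folders listed above. The following is a minimal sketch, not part of the workflow itself: the function name `find_model_files` and the `"ComfyUI"` root path are assumptions made for illustration, so point it at your own install folder.

```python
from pathlib import Path

# Subfolders named in the list above, relative to the ComfyUI root.
MODEL_DIRS = {
    "FLUX GGUF": "models/unet",
    "FLUX text encoders": "models/clip",
    "Upscaling model": "models/upscale_models",
    "LoRA models": "models/loras",
}


def find_model_files(comfy_root):
    """Return {label: sorted list of file names} for each model folder.

    A missing folder maps to None so it can be told apart from an empty one.
    """
    root = Path(comfy_root)
    report = {}
    for label, rel in MODEL_DIRS.items():
        folder = root / rel
        if not folder.is_dir():
            report[label] = None
            continue
        report[label] = sorted(p.name for p in folder.iterdir() if p.is_file())
    return report


if __name__ == "__main__":
    # "ComfyUI" is an assumed install location; replace it with your own path.
    for label, files in find_model_files("ComfyUI").items():
        if files is None:
            status = "folder missing"
        elif not files:
            status = "folder empty"
        else:
            status = ", ".join(files)
        print(f"{label}: {status}")
```

The script only lists whatever files are present in each folder. It does not verify that a file is the right model or that it downloaded completely.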

![FLUX EASY WORKFLOW [LOWVRAM] [GGUF]](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/c2c254a8-62ba-4b32-bb45-e626a113bfa5/width=1320/ComfyUI_01824_.jpeg)