Not too long ago, I created a prompt generator "chatbot/co-pilot", but it lacked some functionality and often produced prompt sets in the wrong order or format. So, here's an improved version of what it can do. I highly recommend using the Gemma2 model, as it’s much better at recognizing tags and formatting them into proper prompt sets.

Also, if you're looking for a tag fetcher/generator tool, check out the one I made here: https://civitai.com/articles/8021/my-danbooru-tag-fetcher-v08

(NEW BETA TOOL V1.0.0 - Updated 12/9/2024): https://civitai.com/articles/9606/beta-danbooru-tag-fetcher-v100

A note on Gemma2: unfortunately, it doesn't handle specific tags or requests for NSFW content very well. For that, you should use an uncensored model instead. I primarily use Dolphin, which works great for NSFW content. However, more models may be released in the future that could be faster or better, so be sure to check the Ollama library for new options.

I tested other models too, but they didn't perform as well because they required extensive configuration. With the Gemma2 model, you can stick with the default settings. Here's the full guide. If you don't have Open Web UI, check out:

https://civitai.com/articles/7457/chatbot-framework-and-prompt-command

and watch the accompanying video for a step-by-step installation guide.

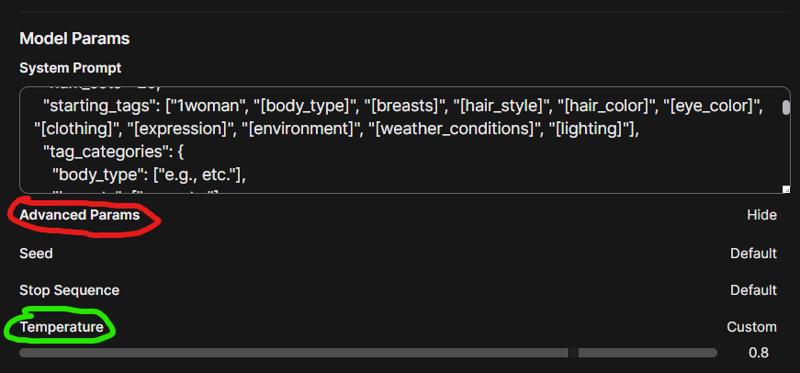

Notice: With this new "chatbot/co-pilot", everything will be generated randomly. Unlike the previous version, this one allows for more specificity. You will need to configure some of the prompt suggestions based on what you want it to create. Additionally, you should adjust the temperature setting:

A higher temperature will result in more randomness in the output, while a lower temperature will produce less random results. Be sure to test it out before making any final adjustments.
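To make the effect of temperature a bit more concrete, here is a minimal sketch of how temperature reshapes a set of probabilities before one option is picked. The tag names and scores are made up for illustration, and this shows the general softmax-with-temperature idea rather than what Open Web UI or Ollama does internally.

```python
import math


def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    # Subtract the max for numerical stability before exponentiating.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate tags (illustrative values only).
tags = ["blonde", "silver", "purple"]
scores = [2.0, 1.0, 0.5]

low = softmax_with_temperature(scores, 0.5)
high = softmax_with_temperature(scores, 1.5)

for tag, p_low, p_high in zip(tags, low, high):
    print(f"{tag}: temp 0.5 -> {p_low:.2f}, temp 1.5 -> {p_high:.2f}")
```

At the lower temperature the top-scoring tag takes most of the probability, while at the higher temperature the less likely tags get a bigger share, which is why the output feels more random.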

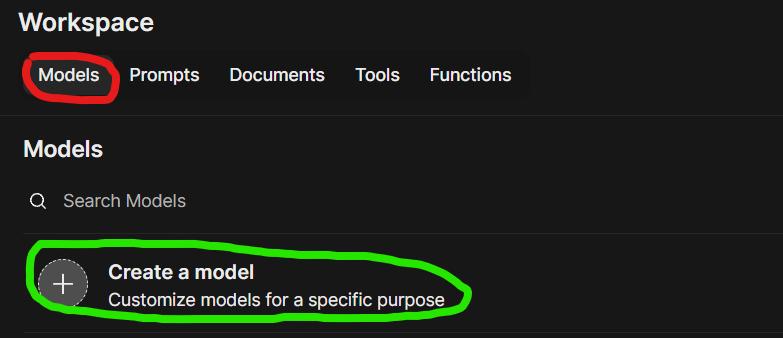

Once you have everything installed and have selected a model, go to "Workspace/Models" and click the "Create Model" button before you start.

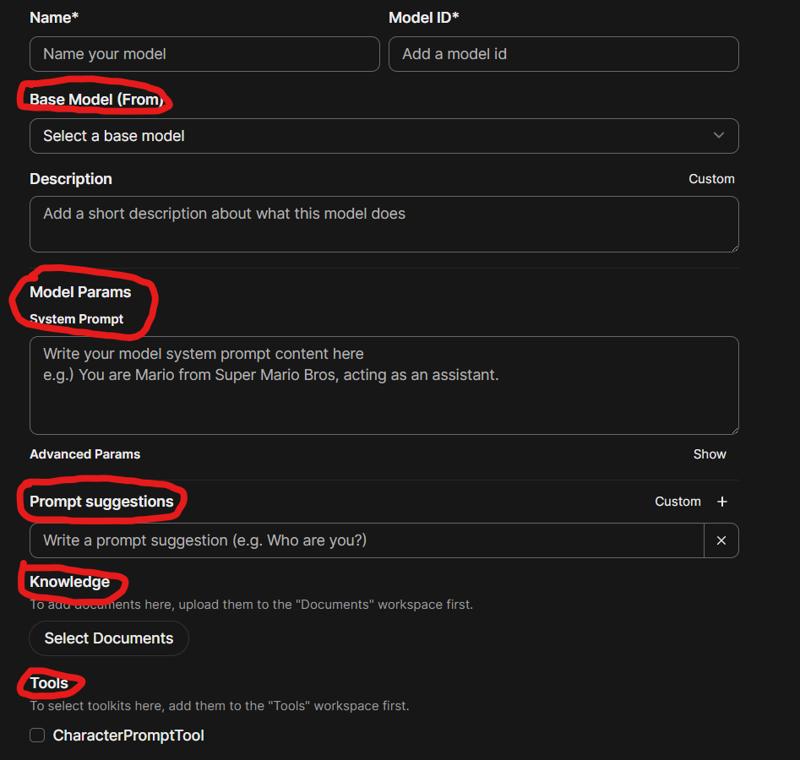

This will allow you to create your own "chatbot/co-pilot." The key areas to focus on are:

Base Model (From)

Model Params - System Prompt

Prompt Suggestions

Knowledge

Tools

For the Base Model, use Gemma2. It's currently one of the best options available. While it may run a bit slowly depending on your system, in my testing it generally outperformed the other models at this task.

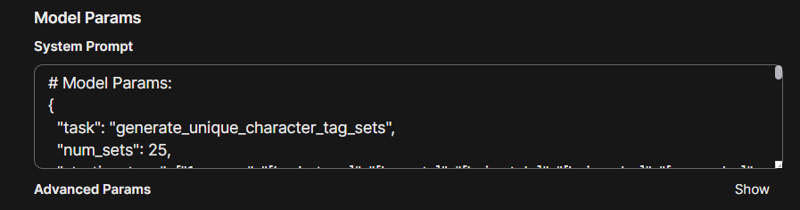

Now, for the Model Params - System Prompt, you'll need to use the following script:

# Model Params:

{
  "task": "generate_unique_character_tag_sets",
  "num_sets": 25,
  "starting_tags": ["1woman", "[body_type]", "[breasts]", "[hair_style]", "[hair_color]", "[eye_color]", "[clothing]", "[expression]", "[environment]", "[weather_conditions]", "[lighting]"],
  "tag_categories": {
    "body_type": ["e.g., etc."],
    "breasts": ["e.g., etc."],
    "hair_style": ["e.g., etc."],
    "hair_color": ["e.g., etc."],
    "eye_color": ["e.g., etc."],
    "clothing": ["e.g., etc."],
    "expression": ["e.g., etc."],
    "environment": ["e.g., etc."],
    "weather_conditions": ["e.g., etc."],
    "lighting": ["e.g., etc."]
  },
  "output_format": {
    "combine_tags_into_sentence": true,
    "remove_underscores": true,
    "capitalize_tags": false
  },
  "duplication_rules": {
    "no_duplicate_tags_per_set": true,
    "consistent_starting_tags": true
  }
}

This script is designed to guide the "chatbot/co-pilot" on how to properly generate tags and turn them into prompt sets. I tested several simple and basic scripts, but overall, this one works better since it generates random tags and formats them into prompt sets more effectively.
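If you edit the script by hand, it can help to check it before pasting it in. The sketch below is only an illustration: it uses a trimmed-down copy of the script with made-up category values, confirms that it parses as JSON (strict parsers reject things like a stray trailing comma), checks that every bracketed placeholder in "starting_tags" has a matching entry in "tag_categories", and shows one possible reading of the "output_format" switches. The helper names and the comma-joined "sentence" are my assumptions, not something the model is guaranteed to do.

```python
import json
import re

# A trimmed-down copy of the system prompt; the category values are
# hypothetical examples standing in for the "e.g., etc." placeholders.
config_text = """
{
  "starting_tags": ["1woman", "[hair_color]", "[eye_color]"],
  "tag_categories": {
    "hair_color": ["silver", "dark_blue"],
    "eye_color": ["green", "hazel"]
  },
  "output_format": {
    "combine_tags_into_sentence": true,
    "remove_underscores": true,
    "capitalize_tags": false
  }
}
"""


def missing_categories(config):
    """Return placeholders in starting_tags that have no matching category."""
    placeholders = []
    for tag in config["starting_tags"]:
        match = re.fullmatch(r"\[(\w+)\]", tag)
        if match:
            placeholders.append(match.group(1))
    return [name for name in placeholders if name not in config["tag_categories"]]


def format_tags(tags, output_format):
    """Apply the output_format switches to a list of chosen tags (assumed meaning)."""
    if output_format.get("remove_underscores"):
        tags = [t.replace("_", " ") for t in tags]
    if output_format.get("capitalize_tags"):
        tags = [t.capitalize() for t in tags]
    if output_format.get("combine_tags_into_sentence"):
        return ", ".join(tags)
    return tags


config = json.loads(config_text)  # raises json.JSONDecodeError on invalid JSON
print(missing_categories(config))
print(format_tags(["1woman", "dark_blue", "hazel"], config["output_format"]))
```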

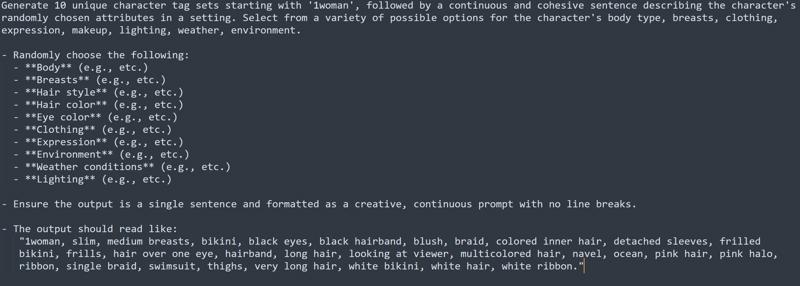

Moving on to Prompt Suggestions: depending on what you ask, I suggest writing your request like this:

"Generate 25 unique (random) character tag sets starting with '1woman.'"

If you want to be more specific, you can phrase it like this:

"Generate 25 unique (elf) character tag sets starting with '1woman, elf.'"

For even more precise control, you can use the prompt system I explained in:

https://civitai.com/articles/7430/simple-command-prompts-for-chatgpt-and-more

You’ll find a Command Prompt Example written in blue and the final version in green to show how you can structure your requests.
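If you reuse these requests often, a tiny helper can keep the wording consistent. This is just a convenience sketch: build_request and its parameters are hypothetical names, and the output simply mirrors the two example requests.

```python
def build_request(count=25, theme="random", extra_tags=()):
    """Build a request string in the style of the example requests."""
    starting = ", ".join(["1woman", *extra_tags])
    return (
        f"Generate {count} unique ({theme}) character tag sets "
        f"starting with '{starting}.'"
    )


print(build_request())
print(build_request(theme="elf", extra_tags=["elf"]))
```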

Now for the final two steps: "Knowledge" and "Tools." For the Knowledge file, you only need one, and that’s the default template.

The file "DefaultTemplate.txt" teaches the "chatbot/co-pilot" how to format and output the prompt sets. It doesn’t contain anything beyond this instruction, but it’s essential.

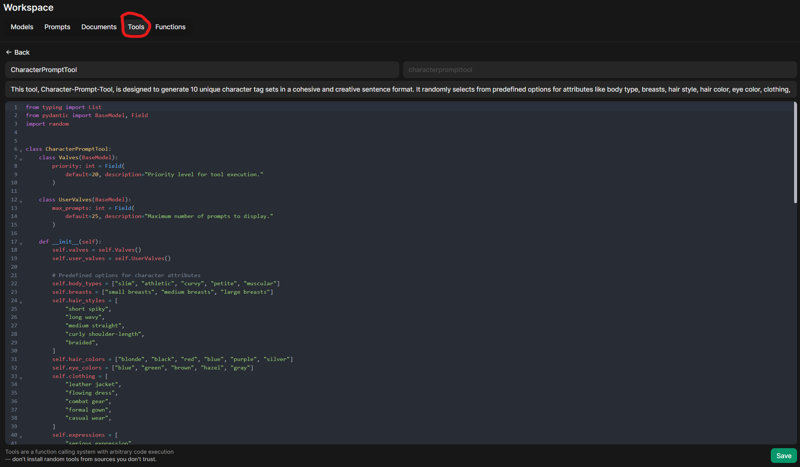

As for Tools, you can add them by going to "Workspace/Tools" and creating a new tool, similar to how you created the model. You can also include this script:

from typing import List
from pydantic import BaseModel, Field
import random


class CharacterPromptTool:
    class Valves(BaseModel):
        priority: int = Field(
            default=20, description="Priority level for tool execution."
        )

    class UserValves(BaseModel):
        max_prompts: int = Field(
            default=25, description="Maximum number of prompts to display."
        )

    def __init__(self):
        self.valves = self.Valves()
        self.user_valves = self.UserValves()

        # Predefined options for character attributes
        self.body_types = ["slim", "athletic", "curvy", "petite", "muscular"]
        self.breasts = ["small breasts", "medium breasts", "large breasts"]
        self.hair_styles = [
            "short spiky",
            "long wavy",
            "medium straight",
            "curly shoulder-length",
            "braided",
        ]
        self.hair_colors = ["blonde", "black", "red", "blue", "purple", "silver"]
        self.eye_colors = ["blue", "green", "brown", "hazel", "gray"]
        self.clothing = [
            "leather jacket",
            "flowing dress",
            "combat gear",
            "formal gown",
            "casual wear",
        ]
        self.expressions = [
            "serious expression",
            "smiling",
            "angry",
            "thoughtful",
            "focused",
        ]
        self.environments = [
            "snowy mountain range",
            "desert under the blazing sun",
            "lush green forest",
            "city skyline at night",
            "beach at sunset",
        ]
        self.weather_conditions = [
            "heavy snow falling",
            "light rain",
            "clear sky",
            "thick fog",
            "strong winds",
        ]
        self.lighting_conditions = [
            "soft glow illuminating her features",
            "bright sunlight casting sharp shadows",
            "dim moonlight barely lighting the scene",
            "harsh neon lights flickering",
            "warm golden hour light",
        ]

    def generate_prompt(self) -> str:
        """
        Generate a random character tag set following the given pattern
        """
        # Pick one random option from each attribute list
        body = random.choice(self.body_types)
        breasts = random.choice(self.breasts)
        hair = random.choice(self.hair_styles)
        hair_color = random.choice(self.hair_colors)
        eye_color = random.choice(self.eye_colors)
        clothing = random.choice(self.clothing)
        expression = random.choice(self.expressions)
        environment = random.choice(self.environments)
        weather = random.choice(self.weather_conditions)
        lighting = random.choice(self.lighting_conditions)

        # Constructing the prompt sentence
        prompt = (
            f"1woman, {body}, {breasts}, {hair} {hair_color} hair, "
            f"{eye_color} eyes, wearing {clothing}, "
            f"{expression}, standing in {environment}, {weather}, "
            f"{lighting}."
        )
        return prompt

    async def run(self, user: dict = {}, __event_emitter__=None) -> str:
        """
        This tool generates and returns a list of unique character tag sets.
        It supports configurable priority and max number of prompts via valves.

        :param user: Dictionary containing user details
        :param __event_emitter__: Event emitter to send status or messages to the chat
        :return: String containing the list of character prompts
        """
        if __event_emitter__:
            await __event_emitter__(
                {
                    "type": "status",
                    "data": {
                        "description": "Generating character prompts...",
                        "done": False,
                    },
                }
            )

        # Generate as many prompts as the user valve allows
        max_prompts = self.user_valves.max_prompts
        prompt_list = [self.generate_prompt() for _ in range(max_prompts)]

        if __event_emitter__:
            await __event_emitter__(
                {
                    "type": "status",
                    "data": {
                        "description": "Character prompts generated.",
                        "done": True,
                    },
                }
            )

        # One prompt per line under a short header
        result = "Character prompts:\n" + "\n".join(prompt_list)

        if __event_emitter__:
            await __event_emitter__({"type": "message", "data": {"content": result}})

        return result


class Tools:
    character_prompt_list = CharacterPromptTool()

This script provides strict instructions on how to generate tags into prompt sets. This acts as an extra measure to ensure it follows the specified guidelines.
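One thing to keep in mind: because the script picks each attribute independently with random.choice, two of the generated prompts can occasionally come out identical. If that matters to you, one option is to keep track of what has already been produced. Here is a minimal sketch of that idea, assuming shortened, made-up option lists and a hypothetical helper name (generate_unique_prompts) that is not part of the tool itself:

```python
import random

# Hypothetical, shortened option lists for illustration only.
OPTIONS = {
    "hair_color": ["blonde", "black", "silver"],
    "eye_color": ["blue", "green", "hazel"],
    "clothing": ["leather jacket", "flowing dress"],
}


def generate_unique_prompts(count, seed=None):
    """Return `count` distinct prompt strings, or raise if that is impossible."""
    total = 1
    for values in OPTIONS.values():
        total *= len(values)
    if count > total:
        raise ValueError(f"only {total} unique combinations available")

    rng = random.Random(seed)
    seen = set()
    prompts = []
    while len(prompts) < count:
        hair = rng.choice(OPTIONS["hair_color"])
        eyes = rng.choice(OPTIONS["eye_color"])
        clothing = rng.choice(OPTIONS["clothing"])
        prompt = f"1woman, {hair} hair, {eyes} eyes, wearing {clothing}"
        if prompt not in seen:  # skip repeats so every set is distinct
            seen.add(prompt)
            prompts.append(prompt)
    return prompts


prompts = generate_unique_prompts(5, seed=42)
print("\n".join(prompts))
```

The up-front count check is there so the loop cannot run forever when you ask for more prompts than the lists can actually produce.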

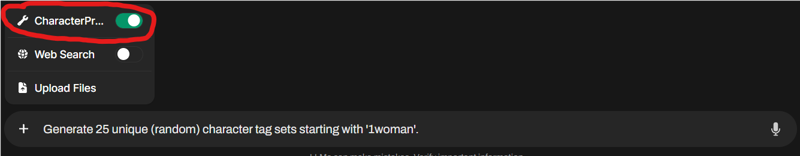

Once you’ve set it up, make sure to enable it before inputting your written text. Here’s an example:

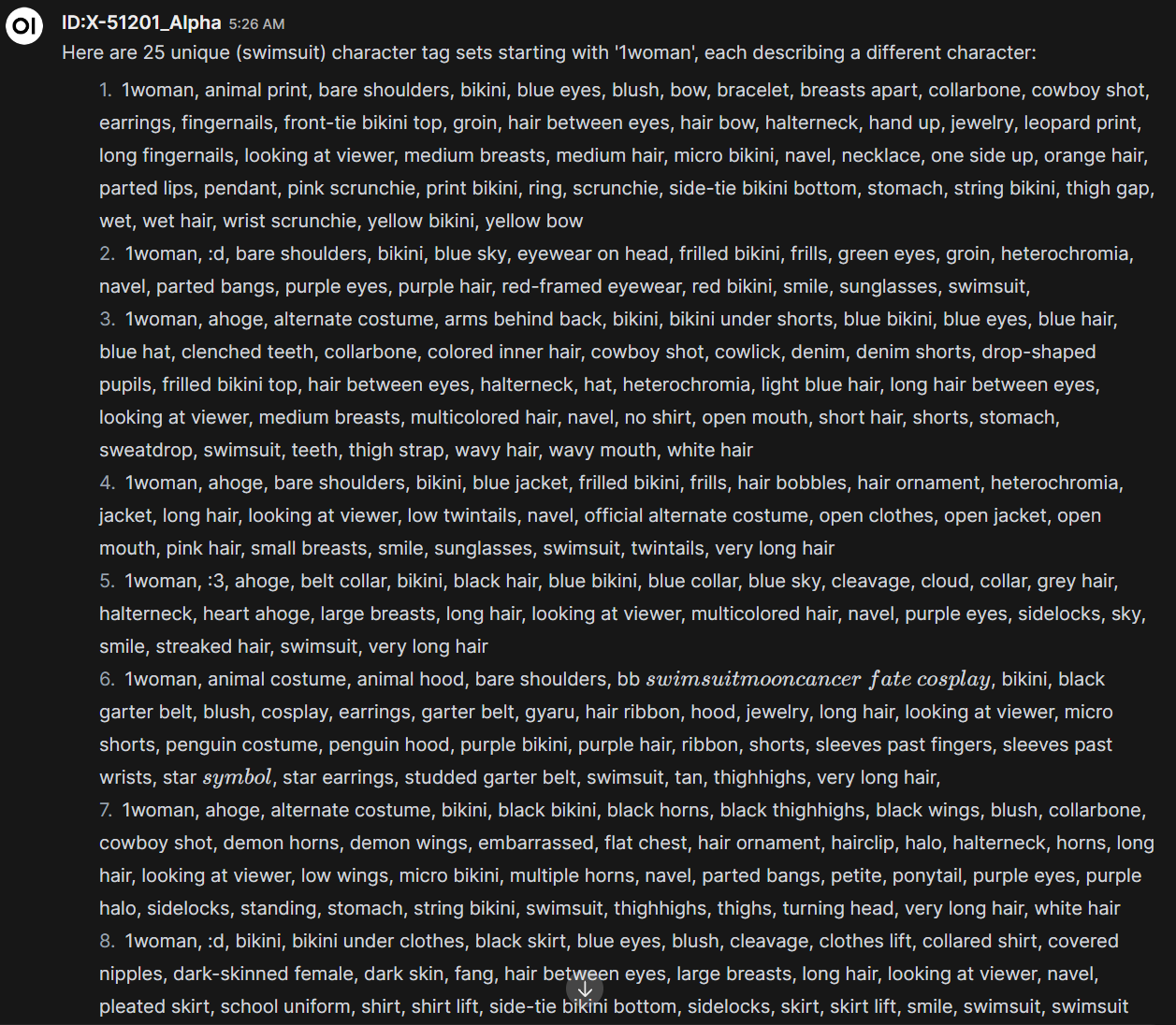

So that's about it! Once you're done, give it a test. If you need to configure the model settings, do so now. Here's an example image generated from a prompt set that the chatbot/co-pilot built from simple tags. Depending on the model you're using, the results may vary.

I'm using the "VXP XL (Hyper) - Model" made by https://civitai.com/user/VXP, and it's been a solid model so far: