About

This is going to be a series where I post my results and experiments regarding creating OC LoRAs using a single base image. My fundamental goal is to find a method to create a consistent character with minimum effort. If you're willing to spend time, you can probably create a consistent character using techniques from here and here.

This article discusses the creation of the OC Isabella Norn from the link below.

https://civitai.com/models/100044/single-base-image-oc-lora-tests

This method isn't exactly new, since it's based on the "Dreambooth LoRA" method found in the LoRA reentry article.

General Scope

Since character designs can be pretty complex, I am of the opinion that using only one base image has its limits, and that turnarounds or more images will be needed for better consistency. For these experiments, I am avoiding generative fill and training loopbacks, since I don't want to create any more base images at this time. I will be adding close-ups cropped from the same image, since that's a simple process that doesn't involve cherry-picking images. Determining the smallest workable dataset will probably be a future topic, as I do expect to hit a wall with this method.

Disclaimer

As a general disclaimer, I am new to training LoRAs, so there's a good chance that I'm wrong or just got lucky. I do hope this can serve as a basis for those who are more knowledgeable about training, or for those who want to refine the technique for this particular subject. I apologize in advance if I have gotten something wrong.

At the present stage, a single-image LoRA is workable for an inpainting workflow, since it's serviceable enough to capture the basic details. Your mileage may vary, as I haven't thoroughly tested every setting due to my lack of training knowledge and to model overfitting.

You can see that there are color mismatch issues, but I do have the flexibility to rotate the character without extra weighting. I am not going to focus too much on color fidelity, since I've noticed that a lot of character LoRAs also struggle in that area.

Character Design

I didn't do anything too complex for this character. I just wanted a few complex details to make the character somewhat unique. The only other thing to note here is that while this is a full-body view, it isn't a full frontal view. This image was generated at 512x512, upscaled by 2x, given another 2x pass with MultiDiffusion, and finally downscaled to 512x512. From my tests, the higher base resolution did help the LoRA learn the shape of the hair more quickly, but I used the 512x512 image so that it wouldn't overfit.
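Here is the resolution chain for the base image, spelled out. It's trivial arithmetic, but it makes one thing clear: the image peaked at 2048x2048 before it was downscaled 4x to the training resolution. The function name is just for illustration.

```python
# Resolution chain for the base image: 512x512 base, a 2x upscale,
# another 2x pass with MultiDiffusion, then a downscale back to 512.

def resolution_chain(base, upscale_factors, final):
    """Return the side length (in pixels) after each stage of the pipeline."""
    sizes = [base]
    for factor in upscale_factors:
        sizes.append(sizes[-1] * factor)
    sizes.append(final)  # final downscale to the training resolution
    return sizes


if __name__ == "__main__":
    chain = resolution_chain(512, [2, 2], 512)
    print(" -> ".join(f"{s}x{s}" for s in chain))
    # The peak resolution divided by the final one gives the downscale factor.
    print("downscale factor:", max(chain) // chain[-1])
```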

This character has these particular characteristics:

Blue Hair

Diamond Shape Earring

Green Eyes

Long Hair

White Hat with green bow

Flat-ish curve for the eyelashes

Ribbon

Corset

White Capelet with Two Green Stripe Pattern

White Skirt with Two Green Stripes with frills at end

Asymmetrical hair line with roots visible on the right side.

These are the characteristics I was looking for when testing the LoRA. I am using a full-body view since I wanted to capture as many details as possible; a guide for making a LoRA with just the upper body already exists. (Expanding an upper-body image into a full-body image using outpainting could be another topic I write about in the future.)

Dataset

The dataset consists of two images:

Full-body view from above

Close-up of the face, cropped from the full-body image

Without the face close-up, the LoRA struggles to capture the hairline accurately. It can still occasionally capture it without the close-up, but less consistently. I could have included additional close-ups of the clothing details for more precision, but I wanted to do the bare minimum in this first attempt.
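If you want the face close-up to be reproducible, you can compute the crop box rather than eyeballing it each time. This is a minimal sketch, and the helper name, face center, and crop size are all hypothetical placeholders you would pick by eye. The returned `(left, top, right, bottom)` tuple follows the box convention used by Pillow's `Image.crop`. After cropping, you would resize the result to the training resolution.

```python
# Hypothetical helper: a square crop box around the face, kept inside the image,
# so the close-up always comes from the same full-body image.

def square_crop_box(image_size, center, crop_size):
    """Return (left, top, right, bottom) for a square crop of side crop_size,
    centered on `center` and shifted as needed to stay inside the image."""
    width, height = image_size
    if crop_size > min(width, height):
        raise ValueError("crop_size is larger than the image")
    cx, cy = center
    half = crop_size // 2
    # Clamp the top-left corner so the whole box fits inside the image.
    left = min(max(cx - half, 0), width - crop_size)
    top = min(max(cy - half, 0), height - crop_size)
    return (left, top, left + crop_size, top + crop_size)


if __name__ == "__main__":
    # Placeholder numbers: a 512x512 full-body image with the face near the top.
    print(square_crop_box((512, 512), center=(260, 120), crop_size=192))
    # -> (164, 24, 356, 216)
```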

Captioning

I tried multiple captioning techniques, and the best version I found was to tag as much as possible, including things like facial expression, outfit color, art style, and camera position, while pruning character-specific details such as hair color, eye color, chest, and hair length. Using bare-bones captions made the original outfit incredibly overfit, which made it hard to change outfits.

I set the trigger word to isabellaNorn,1girl
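The pruning approach above can be sketched as a small caption builder. It takes the full tag list for an image, drops the character-defining tags so they get absorbed into the trigger word, removes duplicates, and prepends the trigger. The exact tag spellings in `PRUNE` are hypothetical, because the article only names the categories (hair color, eye color, chest, hair length). The `.txt` caption extension is also an assumption; it has to match whatever caption extension your Kohya setup is configured to read.

```python
from pathlib import Path

TRIGGER = ["isabellaNorn", "1girl"]

# Hypothetical tag spellings for the pruned categories: hair color,
# eye color, chest, and hair length.
PRUNE = {"blue_hair", "green_eyes", "long_hair", "medium_breasts", "large_breasts"}


def build_caption(tags, prune=PRUNE, trigger=TRIGGER):
    """Trigger words first, then every remaining tag once, in original order."""
    seen = set(trigger)
    kept = []
    for tag in tags:
        tag = tag.strip()
        if not tag or tag in prune or tag in seen:
            continue  # skip empty, pruned, and duplicate tags
        seen.add(tag)
        kept.append(tag)
    return ",".join(list(trigger) + kept)


def write_caption(image_path, caption, extension=".txt"):
    """Write the caption next to the image, e.g. face.png -> face.txt."""
    path = Path(image_path).with_suffix(extension)
    path.write_text(caption + "\n", encoding="utf-8")
    return path


if __name__ == "__main__":
    # Illustrative raw tags before pruning.
    raw = ["full_body", "blue_hair", "green_eyes", "long_hair",
           "white_background", "hat", "hat_bow", "frown"]
    print(build_caption(raw))
    # -> isabellaNorn,1girl,full_body,white_background,hat,hat_bow,frown
```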

Close-up caption file

isabellaNorn,1girl,close_up,portrait,hat,hat_bow,earring,bow,white_background,solo,closed_mouth,frown,white_shirt,anime_screencap,anime_color,sad,bow,capelet,turtleneck,sweater,jewerly,collar,neckcollar,looking_at_viewer

Full-body caption file

isabellaNorn,1girl,full_body,white_background,looking_at_viewer, solo, standing,closed_mouth,frown,hat,hat_bow,earring,white_capelet,ribbon,green_bow,white_dress,skirt,black_pantyhose,black_boots,cuffs,long_sleeves,corset,anime_screencap,anime_coloring,frill_skirt

Class Name and Repeats

I only had one folder here. I will need to try experimenting with weighting repeats in the future.

Repeats: 10

Class_name: isabella

I'm not sure whether I chose the correct class name. I have seen in some discussions that you either need to choose a unique class name or pick a class name of the same type as the subject you are training. In my case, I believe it was supposed to be 1girl, with the folder named 10_1girl instead of 10_isabella. I tested this later and didn't find any noticeable difference. When the word "isabella" was used in a prompt, it had some enhancing effect on the LoRA trained with that class_name. Personally, I don't have a good eye for this, so I wrote it off as hard to tell.
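The folder names follow Kohya's `<repeats>_<class name>` convention. This sketch parses such a name and works out how many image samples one epoch sees. With 2 images in 10_isabella, that comes to 20 samples per epoch. Over 15 epochs at a hypothetical batch size of 1, it would be 300 steps, before regularization is taken into account. The helper names are illustrative.

```python
import re


def dataset_dir_name(repeats, class_name):
    """Build a Kohya-style training folder name, e.g. 10_isabella."""
    return f"{repeats}_{class_name}"


def parse_dataset_dir(name):
    """Split a <repeats>_<class name> folder name into (repeats, class_name)."""
    match = re.fullmatch(r"(\d+)_(.+)", name)
    if match is None:
        raise ValueError(f"not a <repeats>_<name> folder: {name!r}")
    return int(match.group(1)), match.group(2)


def images_per_epoch(num_images, dir_name):
    """Each image in the folder is seen `repeats` times per epoch."""
    repeats, _ = parse_dataset_dir(dir_name)
    return num_images * repeats


if __name__ == "__main__":
    per_epoch = images_per_epoch(2, "10_isabella")
    batch_size = 1  # hypothetical; check your own settings
    print(per_epoch, "samples per epoch,", per_epoch * 15 // batch_size, "steps over 15 epochs")
```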

Regularization Images

I randomly generated 60 images (512x512 with 2x hi-res fix) of one girl in different poses, using the same art style as my character (not sure if that has any impact). I used the wildcards from this video, but only the expression and orientation wildcards. Afterwards, I removed images with complex designs such as cleavage cutouts and back cutouts, as well as anything that did not include a person, which left 56 images. Finally, I used the WD1.4 autotagger to tag the images automatically and placed them in a folder with:

Class_name: girl

Repeats: 1

The folder name looked like

1_girl

KohyaSS gave a warning that not all images were used, so I'm not sure if I did something incorrectly. I didn't have a particular reason for using 1 repeat. Using regularization images did have a noticeable impact on changing the pose and outfit and on reducing overfitting problems with the LoRA. I did encounter another problem, where the LoRA would try to add a long side strand more often than usual.
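One possible explanation for that warning involves how Kohya's sd-scripts balances regularization images. I haven't verified this, and it's worth checking against the sd-scripts source for your version. My understanding is that it uses only as many regularization samples (counting repeats) as there are training samples, and warns when you supply more than that. If so, the warning is expected: 2 images × 10 repeats gives 20 training samples per epoch, while 56 × 1 gives 56 regularization samples, so 36 would go unused. This would also explain why step counts roughly double once regularization is enabled. The sketch below encodes that assumed behavior.

```python
def balance_regularization(train_images, train_repeats, reg_images, reg_repeats=1):
    """Approximate per-epoch sample counts under the ASSUMED behavior that
    regularization samples are capped at (or padded up to) the number of
    training samples. Not Kohya's actual code."""
    train_samples = train_images * train_repeats
    reg_samples = reg_images * reg_repeats
    reg_used = train_samples if reg_samples > 0 else 0
    return {
        "train_samples": train_samples,
        "reg_samples_used": reg_used,
        "reg_samples_unused": max(0, reg_samples - train_samples),
        "samples_per_epoch": train_samples + reg_used,
    }


if __name__ == "__main__":
    # 2 training images at 10 repeats, 56 regularization images at 1 repeat.
    print(balance_regularization(2, 10, 56, 1))
```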

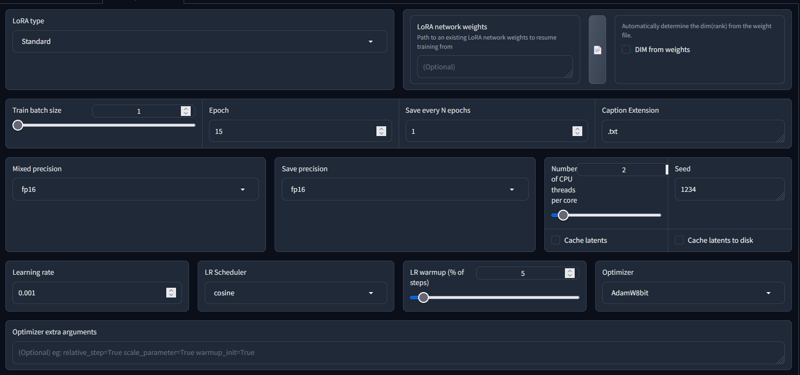

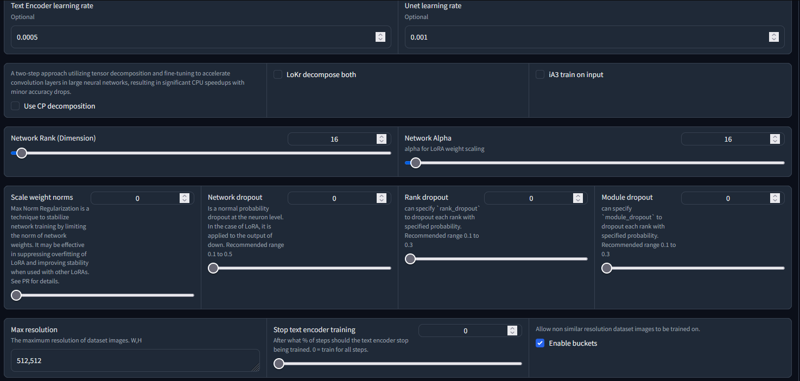

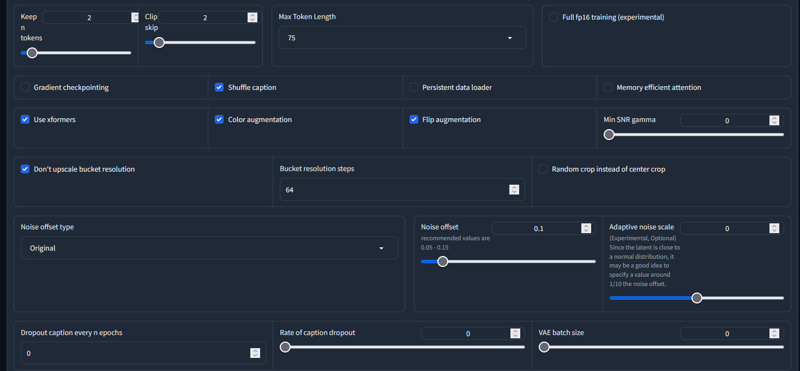

Training Parameters

These are the settings I used for KohyaSS. I can't really explain why they work due to my lack of knowledge; I just went with the approach that it's better not to train too much, to avoid overfitting.

Model

I trained my LoRA on the NovelAI model, based on research suggesting that training on the oldest ancestor model gives better compatibility with other models.

Settings:

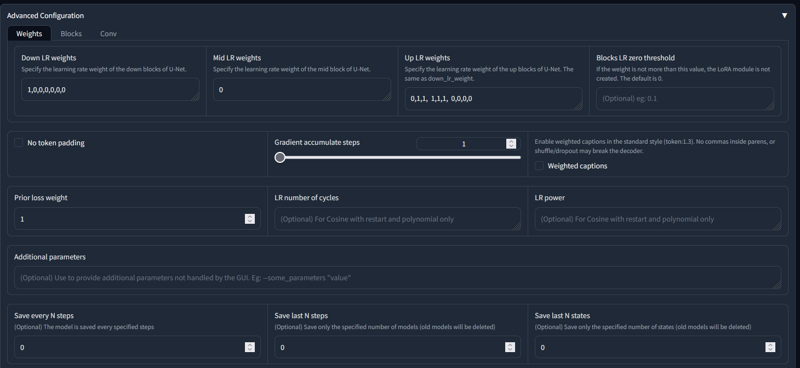

Weights

I tried to mirror the OUTD preset for the weights, but got a warning from KohyaSS saying that it filled the missing weights with 1(?). I chose to mirror OUTD because I had the best results with this preset for preventing style bleed with other LoRAs.

I am posting the values here just in case something changes with KohyaSS. For my exact settings, look at the weights in the image below.

Down LR weights: [1.0, 0, 0, 0, 0, 0, 0, 1.0, 1.0, 1.0, 1.0, 1.0]

Up LR weights: [0, 1.0, 1.0, 1.0, 1.0, 1.0, 0, 0, 0, 0, 1.0, 1.0]
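You might run Kohya's sd-scripts from the command line instead of the GUI. My understanding is that block-wise learning rates are then passed through `--network_args` as `down_lr_weight` and `up_lr_weight`, each with 12 comma-separated values. The middle block has its own `mid_lr_weight` with a single value. Treat those argument names as something to double-check in the sd-scripts README for your version. The sketch only formats the lists above into those strings. The article doesn't list a mid weight, so the sketch adds one only if you pass a value.

```python
import shlex

# Block weights from the lists above: 12 values each for the down and up blocks.
DOWN_LR_WEIGHT = [1.0, 0, 0, 0, 0, 0, 0, 1.0, 1.0, 1.0, 1.0, 1.0]
UP_LR_WEIGHT = [0, 1.0, 1.0, 1.0, 1.0, 1.0, 0, 0, 0, 0, 1.0, 1.0]


def weight_arg(name, weights):
    """Format one block-weight list as name=v1,v2,...,v12."""
    if len(weights) != 12:
        raise ValueError(f"{name} needs 12 values, got {len(weights)}")
    return f"{name}=" + ",".join(f"{w:g}" for w in weights)


def network_args(down, up, mid=None):
    """Build the values that would follow --network_args (assumed names)."""
    args = [weight_arg("down_lr_weight", down), weight_arg("up_lr_weight", up)]
    if mid is not None:
        args.append(f"mid_lr_weight={mid:g}")
    return args


if __name__ == "__main__":
    print(shlex.join(["--network_args", *network_args(DOWN_LR_WEIGHT, UP_LR_WEIGHT)]))
```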

Color Augmentation and Flip Augmentation

These settings supposedly add more variation to your training set, which helps reduce color burning and overfitting.
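This toy sketch shows what the two augmentations do conceptually. It is not Kohya's implementation. Flipping mirrors the image left to right, and color augmentation shifts hues. One thing to keep in mind, though I haven't confirmed it's the cause: a flip also mirrors an asymmetrical hairline, so the model sees the roots on both sides. That may be related to the flipped hairstyle listed under the overall issues below.

```python
import colorsys


def flip_horizontal(image):
    """Mirror an image given as a list of rows (each row a list of pixels)."""
    return [list(reversed(row)) for row in image]


def shift_hue(rgb, degrees):
    """Rotate the hue of one RGB pixel (channels in 0..1) by `degrees`."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    return colorsys.hsv_to_rgb((h + degrees / 360.0) % 1.0, s, v)


if __name__ == "__main__":
    # Toy 2x3 "image": 1 marks where the hair roots are visible (right side).
    hairline = [[0, 0, 1],
                [0, 1, 1]]
    print(flip_horizontal(hairline))  # the roots now appear on the left side
    print(shift_hue((1.0, 0.0, 0.0), 120))  # pure red rotated toward green
```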

Learning Rates

I saw a lot of recommendations to use a high learning rate, but that led to the LoRA being overtrained quickly or to NaN errors. So I just used the same learning rates as other LoRA guides.

LR: 0.001

LR Scheduler: Cosine

LR Warmup: 5

Text Encoder LR: 0.0005

Unet LR: 0.001
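This minimal sketch shows what a cosine schedule with linear warmup does to the two learning rates. It assumes the warmup value of 5 means 5% of total steps, which is how I read the GUI field, and it uses a hypothetical total of 300 steps. The formula is the common cosine-with-warmup shape (linear ramp, then half a cosine down to zero). It is not necessarily the exact code path Kohya uses.

```python
import math


def cosine_with_warmup(step, total_steps, base_lr, warmup_steps):
    """Learning rate at `step`: linear warmup, then cosine decay to 0."""
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    total = 300                   # hypothetical total step count
    warmup = round(total * 0.05)  # assumes "LR Warmup: 5" means 5% of steps
    for name, base in [("unet", 0.001), ("text_encoder", 0.0005)]:
        checkpoints = (0, warmup, total // 2, total)
        lrs = [cosine_with_warmup(s, total, base, warmup) for s in checkpoints]
        print(name, [f"{lr:.6f}" for lr in lrs])
```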

Epoch

I ended up using the 15th epoch, although I think I could have gone lower. I tried to pick a high epoch that did not have a problem with overfitting.

Overall Settings

Overfit Testing and Picking an Epoch

This is the most time-consuming part and requires a rather careful eye. Ideally, you want to choose an epoch that isn't overfit but still captures a good amount of character detail. I personally recommend just picking one that looks good enough for your preferences, since your understanding of the LoRA can change as you stress test it. Overfitting can also be a feature, so this is very subjective.

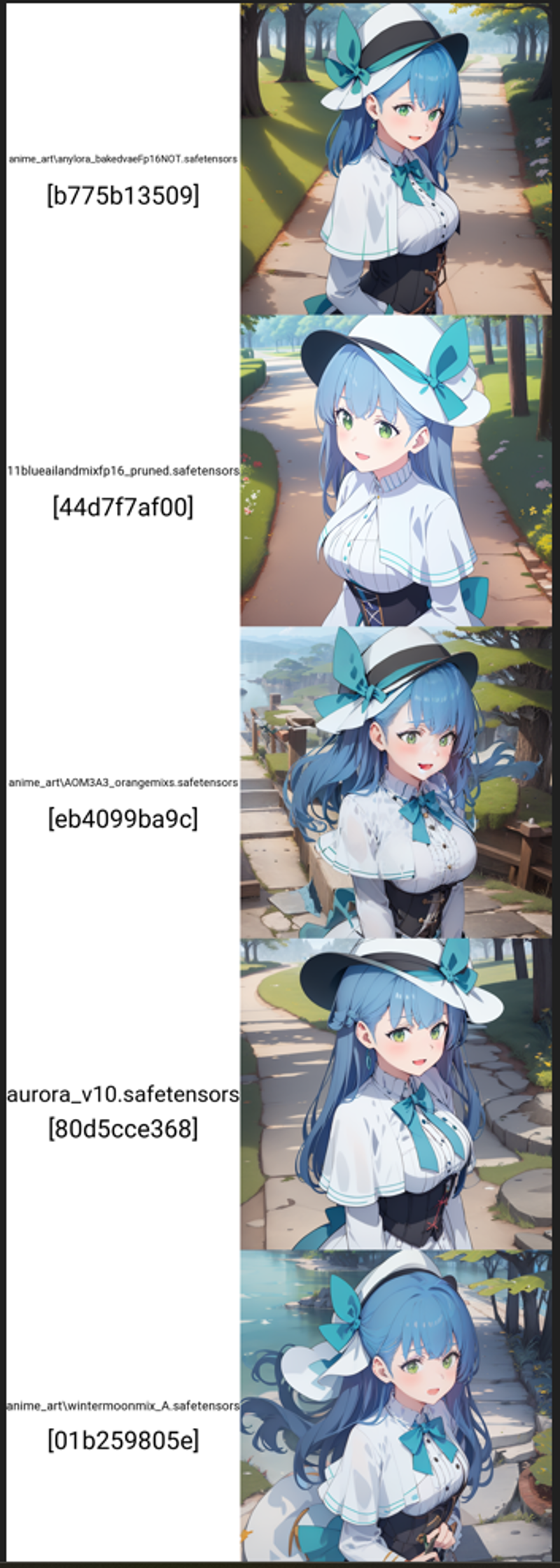

My approach was to create an XYZ chart across multiple epochs and several checkpoints of varying types, such as pastel, pseudorealistic, screencap, and illustrative models. Specifically, I used wintermoonmix, aurora, AbyssOrangeMix3, AnyLora, and BlueAilandMix (my own personal mix). Ideally, you should test multiple models at once, since every model behaves differently.
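Typing the epoch axis by hand gets tedious, so this sketch generates it. It assumes Kohya's usual naming for intermediate epochs, `<output_name>-<6-digit epoch>` (e.g. `isabellaNorn-000005`). The output name and the epoch list are hypothetical. The output is meant for the X/Y/Z plot's Prompt S/R axis, where the first entry is the text searched for in the prompt and each later entry replaces it. The checkpoint list goes on the other axis, and those names must match the checkpoint filenames you actually have installed.

```python
def epoch_lora_names(output_name, epochs):
    """LoRA file names for each saved epoch (assumed -000005 style suffix)."""
    return [f"{output_name}-{epoch:06d}" for epoch in epochs]


def prompt_sr_values(names):
    """Comma-separated Prompt S/R values; the first one must appear in the prompt."""
    return ", ".join(names)


def grid_cells(epochs, checkpoints):
    """Every (epoch, checkpoint) pair the chart will render per seed."""
    return [(e, c) for e in epochs for c in checkpoints]


if __name__ == "__main__":
    epochs = [5, 10, 15, 20]  # hypothetical epochs to compare
    checkpoints = ["wintermoonmix", "aurora", "AbyssOrangeMix3", "AnyLora", "BlueAilandMix"]
    names = epoch_lora_names("isabellaNorn", epochs)
    print("Prompt: <lora:" + names[0] + ":1>, isabellaNorn, 1girl, ...")
    print("X (Prompt S/R):", prompt_sr_values(names))
    print("Y (Checkpoint name):", ", ".join(checkpoints))
    print("images per seed:", len(grid_cells(epochs, checkpoints)))
```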

These images are not from the LoRA that was uploaded to Civitai; they are from the in-between versions made during testing. Regrettably, I did not keep good track of which change fixed which issue, so this section is more a list of things to watch out for.

Generally speaking, you should not aim for a LoRA whose output looks overly similar to the starting image. You can also see that the image is messy, indicating that the LoRA is overtrained. Ideally, the pose should be slightly different, without the messy artifacts on the hat and hands. A good rule of thumb is to check whether you can change the background without adding prompt weighting or lowering the LoRA weight. If that doesn't work, the LoRA is overtrained.

However, even if you are able to change the background, the LoRA can still have adverse effects. In the image below, I specified forest as the setting, but the white background bled into the image and created a snowy forest or added negative space. This confirms my old theory that poorly trained LoRAs can negatively affect the image.

Example of the forest prompt working at a high LoRA weight without extra emphasis (after adding regularization images and adjusting the block weights).

AOM3 has a distorted mouth but that's not really a problem.

Changing Outfits

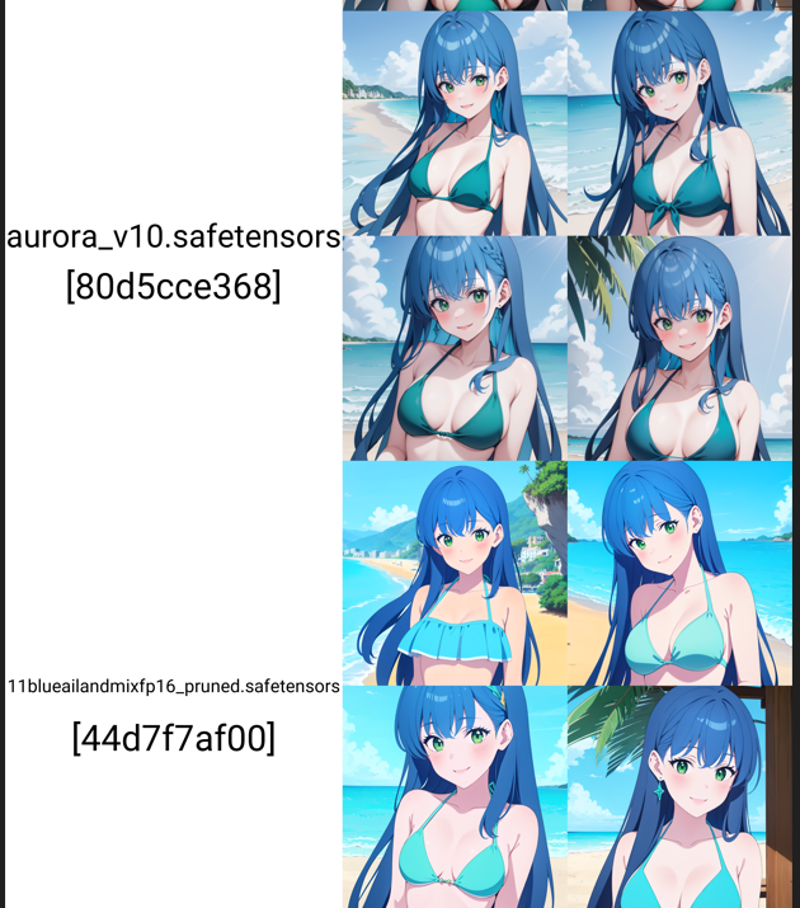

For my outfit-change test, I chose swimsuits, since they make it very easy to tell whether the LoRA is overfit: the original outfit will persist. Here, the capelet, ribbon, and hat still remain, along with a white background. This indicates that the base clothing will be hard to remove. I have noticed that this is more of a captioning issue, so you need to be very precise with your captions if you want to be able to swap outfits.

As with the forest, there can be weird concept bleeds. In the example below, the double-striped pattern had a strong bias toward adding stripes to the bikini top. However, this can be an example of overfitting that you can ignore.

It's also fairly important to check this across multiple models, since it can show up differently: Aurora and AnyLora created stripes, but AOM3 recreated the hat.

In addition, don't rely on a single fixed seed for testing; some seeds just work better with some models. Also keep in mind that since the LoRA is based on only one image, it might not guess the proportions correctly. Below, BlueAilandMix made the face look too young on the first seed but made her look older on the others.
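Putting the background and outfit tests together with several models and seeds gives a small test matrix. In this sketch, every prompt keeps the LoRA at full weight and applies no `(tag:1.2)`-style emphasis to the test tag. If the background or outfit still refuses to change, the problem points at the LoRA rather than the prompt. The `<lora:name:weight>` tag is the AUTOMATIC1111 web UI syntax. The LoRA name, base tags, and seed values are hypothetical.

```python
from itertools import product


def stress_test_matrix(lora_name, base_tags, test_tags, checkpoints, seeds, lora_weight=1.0):
    """Yield (checkpoint, seed, prompt) for every combination.

    The LoRA stays at full weight and the test tag gets no extra emphasis,
    so a failed test reflects the LoRA, not prompt tricks."""
    lora = f"<lora:{lora_name}:{lora_weight:g}>"
    for checkpoint, seed, test in product(checkpoints, seeds, test_tags):
        yield checkpoint, seed, ", ".join([lora, *base_tags, test])


if __name__ == "__main__":
    rows = list(stress_test_matrix(
        "isabellaNorn",
        ["isabellaNorn", "1girl"],
        ["forest", "swimsuit"],  # background test and outfit test
        ["wintermoonmix", "aurora", "AbyssOrangeMix3", "AnyLora", "BlueAilandMix"],
        [1, 2, 3],  # hypothetical seeds; use several, not one
    ))
    print(len(rows), "images to check")
    print(rows[0])
```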

Things that can rescue LoRA overfitting issues (at the cost of more work)

Color Burning

If you see color burning on the mouth, you can use the ADetailer extension to automatically inpaint the face, which will usually fix the issue. This can unexpectedly allow you to use higher epochs.

LoRA Block Weight Extension

Even though the weights were already turned off according to OUTD during training, you can still apply that preset with the extension and it will change the output. This very likely applies to other block weight configurations as well.
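For A/B testing, the LoRA Block Weight extension takes the preset as an extra argument inside the LoRA tag. As far as I know, older versions accept `<lora:name:1:OUTD>` and newer ones `<lora:name:1:lbw=OUTD>`. Check the extension's README for the syntax your version expects. The LoRA name and the helper function are hypothetical.

```python
def lbw_tag(lora_name, weight, preset=None, keyword=True):
    """Build a LoRA prompt tag, optionally with a block-weight preset.

    keyword=True uses the lbw=PRESET form; keyword=False uses the bare
    preset form. Both syntaxes are assumptions to check against the
    extension's documentation."""
    tag = f"<lora:{lora_name}:{weight:g}"
    if preset is not None:
        tag += f":lbw={preset}" if keyword else f":{preset}"
    return tag + ">"


if __name__ == "__main__":
    # Same prompt with and without the preset, to compare the outputs.
    for tag in (lbw_tag("isabellaNorn", 1.0), lbw_tag("isabellaNorn", 1.0, "OUTD")):
        print(tag + ", isabellaNorn, 1girl, forest")
```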

Underfitting Issues

I am not entirely sure how to fix these issues but I feel that it's proper to explain the weaknesses of this method. Due to the lack of images, this LoRA struggles with custom outfits for anything that isn't a frontal view.

In this example, you can see that character details such as hair length, hairline, and hair color have completely collapsed, and it now looks like a different character.

You can still get the pose to work but it requires trial and error.

Overall Issues that I found:

Overfitting

Eye position is overfit

The LoRA will always try to place two eyes in side and back views

Eyebrow is overfit

Frowning facial expression

Underfit

Character detail collapses with poses in custom outfits

Long hair (? This could make it easier if I wanted to change up the hairstyle)

Does not work well with short prompts. Requires detailed and very specific prompts to work well.

Lack of color precision

Doesn't seem to capture the asymmetrical hairline well; it appears to flip the hairstyle instead.

Conclusion

It's not perfect but it's an interesting start.

Thanks for reading and maybe this helped you out!