A heavy finetune of BeatriXL, run for nearly 500k samples on a strictly smaller liminal dataset, to refine it into an artistic and tasteful liminal image generating monster.

This tool goes far beyond simply generating depictions of interesting areas or sectors of an image; you have advanced control of your world in many shapes and forms. Albeit stubborn at times, this beast can be tamed: it's compatible with most loras from most SDXL models if you set the lora to a low strength.

You won't just get regurgitated art from a list with this one. It's generating unique cross-entropic contaminated sectors more often than not; and the outcomes reflect high complexity differences - beautiful differences if you want them to be.

Euler or Euler A usually works, but I advise using the RES4LYF sampler pack to really make this model's showcase powerful.

https://huggingface.co/AbstractPhil/Liminal-Full/tree/main/Beatrix-LORA

https://huggingface.co/AbstractPhil/Liminal-Full/tree/main/Beatrix-LORA-V2

You can pick the mix out yourself if you want. It's a few loras: one was merged into the core, and the final lora epochs were trained from that.

`liminal, no humans, stuff`

The model was trained with a multitude of timesteps for a long time on multiple different datasets. Careful though, BeatriXL is still fairly unstable - the refinement helps a whole lot, but if you aren't careful you'll see things you don't want to see. However, this version is a lot more SFW by default than the others, meaning it's more likely to default to a SFW topic than a NSFW topic, but there are no guarantees.

If you use humans you're probably going to see something you don't want to see, so be careful with those.

The dataset is roughly 5k different images, so not many. However, those 5k images were issued a series of different captions, from plain English to full description.

The captions came from Joycaption 2 + siglip, GPT4o, LLAMA2 LLAVA B-OMEGA + Siglip500, CLIP_L interrogation, CLIP_G interrogation, and pure raw tags from the WD14 systems.

The curriculum training went really well.
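As a rough illustration of how several captions per image might be consumed during training, the sketch below picks one caption variant at random each time an image is sampled. This is only an assumption for illustration; the actual caption scheduling used for this model isn't described here. The source labels and the `pick_caption` helper are hypothetical names.

```python
import random

# Labels for the caption sources listed above.
# The key names are made up for this sketch.
CAPTION_SOURCES = ("joycaption2", "gpt4o", "llava", "clip_l", "clip_g", "wd14")


def pick_caption(captions, rng):
    """Return (source, text) for one randomly chosen caption of an image.

    `captions` maps a source label to that source's caption for the image.
    Sources with a missing or empty caption are skipped.
    """
    available = [name for name in CAPTION_SOURCES if captions.get(name)]
    if not available:
        raise ValueError("image has no captions")
    source = rng.choice(available)
    return source, captions[source]


if __name__ == "__main__":
    rng = random.Random(0)
    example = {  # hypothetical captions, not taken from the real dataset
        "gpt4o": "An empty hallway lit by fluorescent lights.",
        "wd14": "no humans, indoors, hallway, scenery",
    }
    for _ in range(3):
        print(pick_caption(example, rng))
```

With around 5k images and nearly 500k samples, each image is seen on the order of a hundred times, so rotating caption styles like this would expose the model to the same picture under many different descriptions.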

For the fp8_e4m3fn version: if your ComfyUI gives you any major errors, place these in your gpu launch bat:

`--fp8_e4m3fn-unet --fp8_e4m3fn-text-enc --fp32-vae`

It's not a true fp8 utilization, as ComfyUI did not autocast to fp8, but instead cast to bf16 manually for me.

`model weight dtype torch.float8_e4m3fn, manual cast: torch.float16`

Yet I definitely launched with float8_e4m3fn as the unet. I really don't know what to say about that, other than it's likely not supported by a 4090 so it'll only run on higher-end cards.

The text encoders run just fine on fp8.
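If you'd rather not edit the bat file, the same launch flags can be passed from a small Python launcher. This is a minimal sketch, assuming ComfyUI is checked out in a folder named `ComfyUI` with its usual `main.py` entry point; the folder name and the `build_comfyui_command` helper are hypothetical.

```python
import sys
from pathlib import Path

# Flags quoted from the text above; they are ComfyUI command-line options.
FP8_FLAGS = ["--fp8_e4m3fn-unet", "--fp8_e4m3fn-text-enc", "--fp32-vae"]


def build_comfyui_command(comfy_dir, extra_flags=()):
    """Return the argv list for launching ComfyUI with the fp8 flags.

    `comfy_dir` is an assumed path to a ComfyUI checkout containing main.py.
    """
    main_py = Path(comfy_dir) / "main.py"
    return [sys.executable, str(main_py), *FP8_FLAGS, *extra_flags]


if __name__ == "__main__":
    cmd = build_comfyui_command("ComfyUI")  # assumed install location
    print(" ".join(cmd))
    # To actually start the server, pass `cmd` to subprocess.run(cmd, check=True).
```

Building the command as a list keeps each flag a separate argument, so nothing needs shell quoting.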

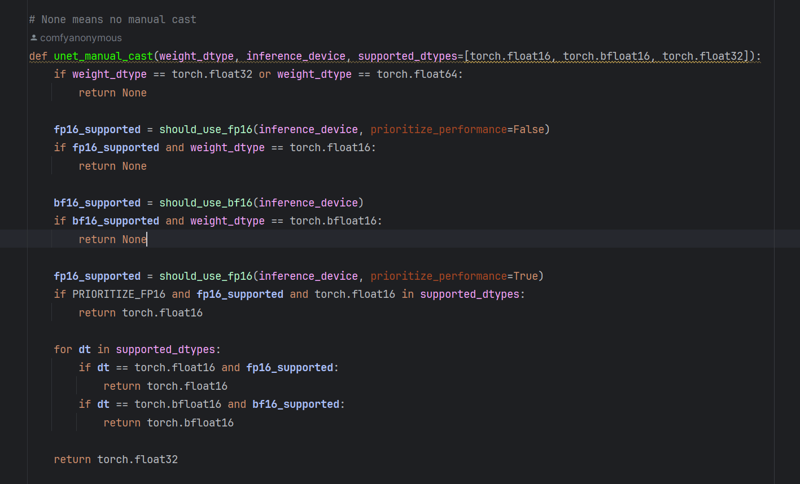

The cause is likely in this code here, as it's running "should_use_bf16", which probably forces it into bf16 mode. Until I have a proper workaround it'll have to be upcast to bf16, but at least the size is tiny.
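To put a rough number on "tiny", here is a back-of-the-envelope sketch of weight storage per dtype. The bytes-per-weight values are fixed by the dtypes themselves. The parameter count is an assumption (about 2.6 billion, a commonly quoted figure for the SDXL unet), not something measured from this checkpoint.

```python
# Bytes used to store one weight in each dtype.
BYTES_PER_PARAM = {"float8_e4m3fn": 1, "float16": 2, "bfloat16": 2, "float32": 4}


def weight_size_gib(num_params, dtype):
    """Size in GiB of `num_params` weights stored in `dtype`."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


if __name__ == "__main__":
    # ASSUMPTION: roughly 2.6 billion unet parameters; not measured from this file.
    assumed_unet_params = 2_600_000_000
    for dtype in BYTES_PER_PARAM:
        size = weight_size_gib(assumed_unet_params, dtype)
        print(f"{dtype:>14}: {size:.2f} GiB")
```

Whatever the exact parameter count, the fp8 file is half the size of a bf16 or fp16 copy of the same weights, even when the math itself ends up running in a 16-bit dtype.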
