Updated: Dec 8, 2025

Cover images are raw output from the pretrained model, at 1MP resolution. No upscale, no hand/face inpainting fixes, not even a negative prompt.

(10/21/2025): Time to look forward...

This model will not be actively updated. SDXL is just too ... old, it was released two years ago (June 2023).

Why not try newer models? They have newer architectures, better performance, and can be much more efficient. For example: Lumina 2. If you have only just heard about Lumina 2, here is some quick info:

Released in Jan 2025. Open source. Apache License 2.0.

DiT architecture (the most popular arch nowadays, same arch as Flux.1 etc.).

Small and efficient. Only 2B parameters. The full (fp16) model is 5GB and the Q8 model is only 2.5GB, which means you can even load and run it on a GTX 1050 without losing quality.

Uses the same 16ch VAE as Flux: 4x the latent channels of the SDXL 4ch VAE, so mathematically 4x more values per latent pixel.

Uses Google Gemma 2 2B as the text encoder (yes, it is also a fully functional chat LLM). 10x better than the good old CLIPs in SDXL, which can only understand tags. As a chat LLM, it understands almost everything... English, Chinese, Japanese, typos, slang, poetry... E.g. you can do this just by prompting it: (Note: this is one image, not four; see the full image and prompt here, idea comes from here.)

I've trained an "Enhancement bundle" LoRA. The name changed to be less misleading, but the dataset is the same.


Also, I don't think Civitai will support Lumina 2, so I've also uploaded the model to TensorArt. You can find me here, and try Lumina 2 models online if you don't have a local setup.

Useful links:

Models:

Neta Lumina: Base model. An anime-style finetune trained on the danbooru and e621 datasets.

NetaYume Lumina: Base model. Further finetuned on the newest dataset.

Optimizations:

TeaCache: https://github.com/spawner1145/CUI-Lumina2-TeaCache

Lightning LoRA, 2x faster (experimental): https://civitai.com/models/2115586

Scaled fp8 models: https://civitai.com/models/2023440

Stabilizer

What is it?

A mid-scale finetuned model trained on 7k images.

Many specialized sub-datasets, such as close-up clothing, hands, complex ambient lighting, traditional arts ...

Only natural textures, lighting, and the finest details. No plastic, glossy AI style, because there are no AI images in the dataset. I handpicked every single image. I'm not a fan of training on AI images. It's like playing the "telephone game": training AI with AI images only causes the images to lose more information, making them look worse (plastic, glossy).

Better prompt comprehension. Trained with natural language captions.

Focus on creativity rather than a fixed style. The dataset is very diverse, so this model does not have a default style (bias) that limits its creativity.

(v-pred) Better and more balanced lighting, no overflow or oversaturation. Want pure black (0) and pure white (255) in the same image, even in the same place? No problem.

Why no default style?

What is a "default style"? If a model has a default style (bias), it means that no matter what you prompt, the model will generate the same things (faces, backgrounds, feelings) that make up the default style.

Pros: It is easy to use, you won't have to prompt style anymore.

Cons: You cannot override it either. If you prompt something that does not fit the default style, the model will just ignore it. If you stack more styles, the default style will always overlap/pollute/limit the other styles.

"No default style" means no bias: you need to specify the style you want, by tags or LoRAs, to guide the model. But there will be no style overlapping/pollution from this model. You get the styles you stacked exactly as they should be.

Effects:

Now the model can accurately generate the style you prompted, rather than an oversimplified anime image. No style overlapping/shifting, no AI faces, only better details. See the comparison:

https://civitai.com/images/84145167 (general styles)

https://civitai.com/images/84256995 (artist styles, notice the face)

If you want to know what "style shifting" and "AI faces" look like, see:

this model: https://civitai.com/images/107381516

other model: https://civitai.com/images/107647042 (female face and terrible glossy background).

See more xy plots in the cover images. An xy plot is worth a thousand words.

Why is this "finetuned base model" a LoRA?

I'm not a gigachad and don't have millions of training images. Finetuning the whole base model is not necessary; a LoRA is enough.

I only have to upload, and you only need to download, a tiny 40MiB file instead of a big fat 7GiB checkpoint, which saves 99.4% of the data and storage.

So I can spam updates. This LoRA may seem small, but it is still powerful, because it uses a new architecture from Nvidia called DoRA, which is more efficient than traditional LoRA.
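The 99.4% figure follows directly from the two file sizes quoted above. A quick check, using only those two numbers:

```python
# File sizes quoted above, in MiB (1 GiB = 1024 MiB).
lora_mib = 40
checkpoint_mib = 7 * 1024

# Fraction of download/storage saved by shipping the LoRA instead of a checkpoint.
saved_fraction = 1 - lora_mib / checkpoint_mib
print(f"{saved_fraction:.1%}")  # prints 99.4%
```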

Then how do I get this "finetuned base model"?

Load this LoRA on the pretrained base model at full strength. The pretrained base model then becomes the finetuned base model. See "How to use" below.

Sharing merges that use this model is prohibited. FYI, there are hidden trigger words that print an invisible watermark. I coded the watermark and the detector myself. I don't want to use it, but I can.

This model is only published on Civitai and TensorArt. If you see "me" and this sentence on other platforms, those are all fake and the platform you are using is a pirate platform.

Please leave feedback in the comment section so everyone can see it. Don't write feedback in the Civitai review system; it is so poorly designed that literally nobody can find and see the reviews.

How to use

Versions:

nbvp10 (for NoobAI v-pred v1.0).

Accurate colors and sharp details.

nbep10 (for NoobAI eps v1.0).

Less saturation and contrast compared to v-pred models. Standard epsilon (eps) prediction limits the model from reaching a broader color range. That's why v-pred came later.

illus01 (trained on Illustrious v0.1, but I still recommend NoobAI eps v1.0).

If you are using this model on top of another finetuned base model, beware that most (90%) anime base models labeled as "illustrious" nowadays are actually (or mainly) NoobAI. It is recommended to try both versions (illus01 and nbep10) to see which one works better.
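For readers wondering why eps and v-pred versions behave differently on color range, here is a minimal sketch of the two training targets. It uses the standard v-prediction definition from the diffusion literature (v = alpha * eps - sigma * x0); the toy arrays and the `targets` helper are illustrative assumptions, not this model's training code. One common explanation, which this page does not spell out, is that at the noisiest timestep the eps target says nothing about the image, while the v target still does.

```python
import numpy as np

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=4)   # clean image values (toy stand-in for latents)
eps = rng.standard_normal(4)          # Gaussian noise

def targets(alpha, sigma):
    """Return the noisy sample and both training targets at one timestep."""
    x_t = alpha * x0 + sigma * eps
    eps_target = eps                      # epsilon prediction: learn the noise
    v_target = alpha * eps - sigma * x0   # v prediction: mix of noise and image
    return x_t, eps_target, v_target

# Noisiest step of a zero-terminal-SNR schedule: alpha = 0, sigma = 1.
x_t, eps_target, v_target = targets(alpha=0.0, sigma=1.0)

# The eps target is just the input again (nothing to learn about the image),
# while the v target is the negated clean image, including its brightness.
print(np.allclose(x_t, eps_target), np.allclose(v_target, -x0))
```

In this toy setup both checks hold, which is the sense in which v-pred keeps a training signal for overall brightness and color at the first sampling step.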

Load this LoRA first in your LoRA stack.

This LoRA uses a new architecture from Nvidia called DoRA, which is more efficient than traditional LoRA. However, unlike traditional LoRA, which has a static patch weight, the patch weight from DoRA is calculated dynamically from the currently loaded base model weights (which change as you load LoRAs). To minimize unexpected changes, load this LoRA first.
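The load-order point can be illustrated with a small numerical sketch. This is not this LoRA's actual loader: the tensor names and shapes are hypothetical, and normalizing each output row is an assumption about the implementation. It only shows that a classic LoRA patch is the same whatever the base weight is, while a DoRA-style patch changes once another LoRA has already modified the base.

```python
import numpy as np

rng = np.random.default_rng(0)
out_dim, in_dim, rank = 6, 8, 2

# Hypothetical adapter tensors (illustrative names, not real checkpoint keys).
B = rng.standard_normal((out_dim, rank)) * 0.1
A = rng.standard_normal((rank, in_dim)) * 0.1
magnitude = rng.uniform(0.5, 1.5, size=(out_dim, 1))  # DoRA-style learned magnitude

def lora_patch(base):
    """Classic LoRA: the patch is B @ A and ignores the base weight."""
    return B @ A

def dora_patch(base):
    """DoRA-style: rescale the normalized (base + B @ A) by the magnitude."""
    directed = base + B @ A
    # Assumption: one norm per output row; implementations may pick another axis.
    norm = np.linalg.norm(directed, axis=1, keepdims=True)
    return magnitude * directed / norm - base

base_clean = rng.standard_normal((out_dim, in_dim))
other_lora = rng.standard_normal((out_dim, in_dim)) * 0.05  # stand-in for another LoRA
base_patched = base_clean + other_lora

lora_same = np.allclose(lora_patch(base_clean), lora_patch(base_patched))
dora_same = np.allclose(dora_patch(base_clean), dora_patch(base_patched))
print(lora_same, dora_same)  # prints: True False
```

Loading this LoRA first means its patch is computed against the clean base weight, which is the case it was trained for.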

Two ways to use this model:

1). Use it as a finetuned base model (Recommended):

If you want the finest, most natural details and want to build your own style combination with full control.

Apply the LoRA on top of a pretrained base model. Note: pretrained model means the original model, with no finetuning. E.g. NoobAI v-pred v1.0, NoobAI eps v1.0.

2). Use it as a LoRA on another finetuned base model.

It's a LoRA after all.

But beware:

This is not a style LoRA; you are literally going to merge two base models. The outcome is not always what you want.

It does not work on a hyper-merged, AI-style-polluted, 1girl-overfitted, 50-versions-of-Nova-furry-3D-anime-WAI or whatever. This model can't fix that glossy, shiny, plastic AI style. Use a pretrained base model if you want to get rid of the AI style.

This is what Craft Lawrence (from Spice and Wolf) should look like, if you've seen the anime: https://civitai.com/images/107381516

This is what those AI-style-polluted, 1girl-overfitted models generate: https://civitai.com/images/107647042

FAQ:

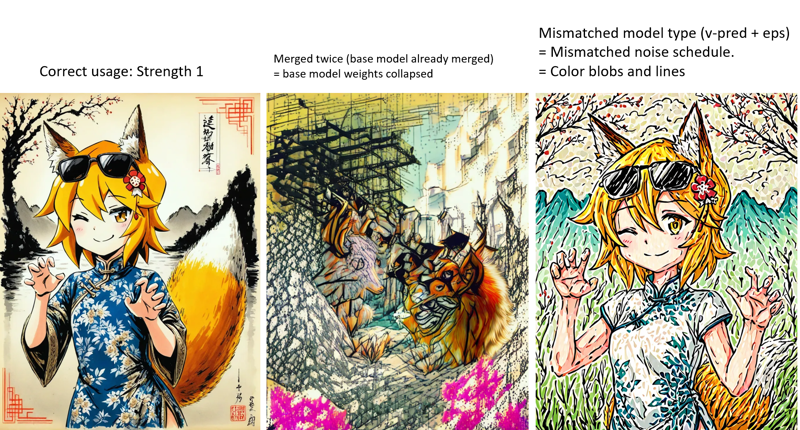

Cover images are raw output from the pretrained model, at 1MP resolution. There is no magic plugin, no upscale, no hand/face inpainting fixes, not even a negative prompt. Some users said they can't reproduce them; that's a skill issue (mismatched base model, or too many added "optimizations").

If it destroyed your base model even at low strength (e.g. <0.5), that's your base model's problem. Your base model has already merged this LoRA (so you merged it twice), and the model weights got multiplied and collapsed. Beware of fake base model creators, a.k.a. thieves. Some "creators" never do any training; they only grab other people's models, merge them, wipe all metadata and credits, and sell the result as their own base model.

Other tools

Some ideas that were going to be, or used to be, part of the Stabilizer. They are now separate LoRAs, for better flexibility. Collection link: https://civitai.com/collections/8274233.

Dark: A LoRA that is biased toward darker environments. Useful for fixing the high-brightness bias in some base models. Trained on low-brightness images. No style bias, so no style pollution.

Contrast Controller: A handcrafted LoRA. Controls the contrast like the slider on your monitor. Unlike other trained "contrast enhancers", the effect of this LoRA is stable, mathematically linear, and has zero side effects on style.

Useful when your base model has an oversaturation issue, or when you want something really colorful.

Example:

Style Strength Controller: Or overfitting effect reducer. Can mathematically reduce all kinds of overfitting effects (bias on objects, brightness, etc.). Or amplify them, if you want.

Differences from Stabilizer:


Stabilizer was trained on real-world data. It can only "reduce" overfitting effects on textures, details and backgrounds, by adding them back.

Style Controller does not come from training. It is more like "undoing" the training of the base model, so that the model becomes less overfitted. It can mathematically reduce all overfitting effects, like bias on brightness or objects.

Old versions:

You can find more info in "Update log". Beware that old versions may have very different effects.

Main timeline:

Now ~: Natural details and textures, stable prompt understanding and more creativity. Not limited to pure 2D anime style anymore.

illus01 v1.23 / nbep11 0.138 ~: Better anime style with vivid colors.

illus01 v1.3 / nbep11 0.58 ~: Better anime style.

Update log

(10/21/2025): NoobAI v-pred v0.280a

Special version; "a" means anime. There is a default 2D anime style, so it should be easier to use if you don't want to prompt a style. The dataset also changed a lot, so the effect may be quite different from previous versions.

(8/31/2025) NoobAI ep10 v0.273

This version is trained from the start on NoobAI eps v1.0.

Compared to the previous illus01 v1.198:

Better and more balanced brightness in extreme conditions. (Same as nbvp v0.271.)

Better textures and details. It has more training steps on high-SNR timesteps. (illus01 versions skipped those timesteps for better compatibility. Since all base models are now NoobAI, there is no need to skip them.)

(8/24/2025) NoobAI v-pred v0.271:

Compared to the previous v0.264:

Better and more balanced lighting in extreme conditions, less bias.

High contrast, pure black (0) and pure white (255) in the same image, even in the same place, with no overflow or oversaturation. Now you can have all of them at once.

(The old v0.264 tries to cap the image between 10~250 to avoid overflow, and still has a noticeable bias issue; the overall image may be too dark or too bright.)

Same as v0.264, prefer high or full strength (0.9~1).

(8/17/2025) NoobAI v-pred v0.264:

First version trained on NoobAI v-pred.

It gives you better lighting and less overflow.

Note: prefer high or full strength (0.9~1).

(7/28/2025) illus01 v1.198

Mainly compared to v1.185c:

End of the "c" versions. Although "visually striking" is good, it has compatibility issues, e.g. when your base model already has a similar contrast enhancement. Stacking two contrast enhancements is really bad. So, no more crazy post-effects (high contrast and saturation, etc.).

Instead, more textures and details. Cinematic-level lighting. Better compatibility.

This version changed lots of things, including a dataset overhaul, so the effect will be quite different from previous versions.

For those who want the crazy v1.185c effects back: you can find pure and dedicated art styles in this page. If the dataset is big enough for a LoRA, I may train one.

(6/21/2025) illus01 v1.185c:

Compared to v1.165c:

+100% clearness and sharpness.

-30% images that are too chaotic (cannot be described properly). So you may find that this version can't give you a crazy high contrast level anymore, but it should be more stable in normal use cases.

(6/10/2025): illus01 v1.165c

This is a special version. This is not an improvement of v1.164. "c" stands for "colorful", "creative", sometimes "chaotic".

The dataset contains images that are very visually striking but sometimes hard to describe, e.g.: very colorful, high contrast, complex lighting conditions, objects and complex patterns everywhere.

So you will get "visually striking", but at the cost of "natural". May affect styles that have soft colors, etc. E.g. this version cannot generate a "pencil art" texture as perfectly as v1.164.

(6/4/2025): illus01 v1.164

Better prompt understanding. Now each image has 3 natural captions, from different perspectives. Danbooru tags are checked by an LLM; only the important tags are picked out and fused into the natural caption.

Anti-overexposure. Added a bias to prevent the model output from reaching the #ffffff pure white level. Most of the time #ffffff == overexposed, which loses many details.

Changed some training settings to make it more compatible with NoobAI, both eps-pred and v-pred.

(5/19/2025): illus01 v1.152

Continued to improve lighting, textures and details.

5K more images and more training steps; as a result, a stronger effect.

(5/9/2025): nbep11 v0.205:

A quick fix for the brightness and color issues in v0.198. It should no longer change brightness and colors so dramatically, like a real photograph would. v0.198 isn't bad, just creative, but too creative.

(5/7/2025): nbep11 v0.198:

Added more dark images. Fewer deformed bodies and backgrounds in dark environments.

Removed color and contrast enhancement, because it's not needed anymore. Use Contrast Controller instead.

(4/25/2025): nbep11 v0.172.

Same new things as in illus01 v1.93 ~ v1.121. Summary: new photograph dataset "Touching Grass". Better natural textures, backgrounds and lighting. Weaker character effects for better compatibility.

Better color accuracy and stability. (Compared to nbep11 v0.160.)

(4/17/2025): illus01 v1.121.

Rolled back to Illustrious v0.1. Illustrious v1.0 and newer versions were deliberately trained with AI images (maybe 30% of the dataset), which is not ideal for LoRA training. I didn't notice until I read its paper.

Lower character style effect, back to the v1.23 level. Characters will get fewer details from this LoRA, but it should have better compatibility. This is a trade-off.

Everything else is the same as below (v1.113).

(4/10/2025): illus11 v1.113 ❌.

Update: use this version only if you know your base model is based on Illustrious v1.1. Otherwise, use illus01 v1.121.

Trained on Illustrious v1.1.

New dataset "Touching Grass" added. Better natural textures, lighting and depth-of-field effect. Better structural stability in backgrounds. Fewer deformed backgrounds, like deformed rooms and buildings.

Full natural language captions from LLM.

(3/30/2025): illus01 v1.93.

v1.72 was trained too hard, so I reduced its overall strength. It should have better compatibility.

(3/22/2025): nbep11 v0.160.

Same changes as in illus01 v1.72.

(3/15/2025): illus01 v1.72

Same new texture and lighting dataset as mentioned in ani40z v0.4 below. More natural lighting and natural textures.

Added a small dataset (~100 images) for hand enhancement, focusing on hands performing different tasks, like holding a glass or cup.

Removed all "simple background" images from dataset. -200 images.

Switched training tool from kohya to onetrainer. Changed LoRA architecture to DoRA.

(3/4/2025) ani40z v0.4

Trained on Animagine XL 4.0 ani40zero.

Added a dataset of ~1k images focusing on natural dynamic lighting and real-world textures.

More natural lighting and natural textures.

ani04 v0.1

Initial version for Animagine XL 4.0. Mainly to fix Animagine 4.0's brightness issues. Better and higher contrast.

illus01 v1.23

nbep11 v0.138

Added some furry/non-human/other images to balance the dataset.

nbep11 v0.129

Bad version; the effect is too weak. Just ignore it.

nbep11 v0.114

Implemented "Full range colors". It automatically balances things towards "normal and good looking". Think of it as the "one-click photo auto enhance" button in most photo editing tools. One downside of this optimization: it prevents high bias, for example when you want 95% of the image to be black and 5% bright, instead of 50/50.

Added a little bit of realistic data. More vivid details and lighting, fewer flat colors.

illus01 v1.7

nbep11 v0.96

More training images.

Then finetuned again on a small "wallpaper" dataset (Real game wallpapers, the highest quality I could find. ~100 images). More improvements in details (noticeable in skin, hair) and contrast.

nbep11 v0.58

More images. Changed the training parameters to be as close as possible to those of the NoobAI base model.

illus01 v1.3

nbep11 v0.30

More images.

nbep11 v0.11: Trained on NoobAI epsilon pred v1.1.

Improved dataset tags. Improved LoRA structure and weight distribution. Should be more stable and have less impact on image composition.

illus01 v1.1

Trained on illustriousXL v0.1.

nbep10 v0.10

Trained on NoobAI epsilon pred v1.0.