Sharing Training Data on CivitAI

I always love seeing other people's training data, so I figured, let's start sharing my own, and maybe other people will be interested in what I used to train my models. Be the change you want to see etc.

There is actually a category to upload training data on Civit. I wish it was a bit more exposed and explicit. If you agree, you can always thumb up the Feature Request.

If you upload your own training data, I would love to see it! Please post a link below and I will link to it in the article as a resource for all training data on Civit.

How to add your own training data to CivitAI

Would you also like to share training data? Do it!

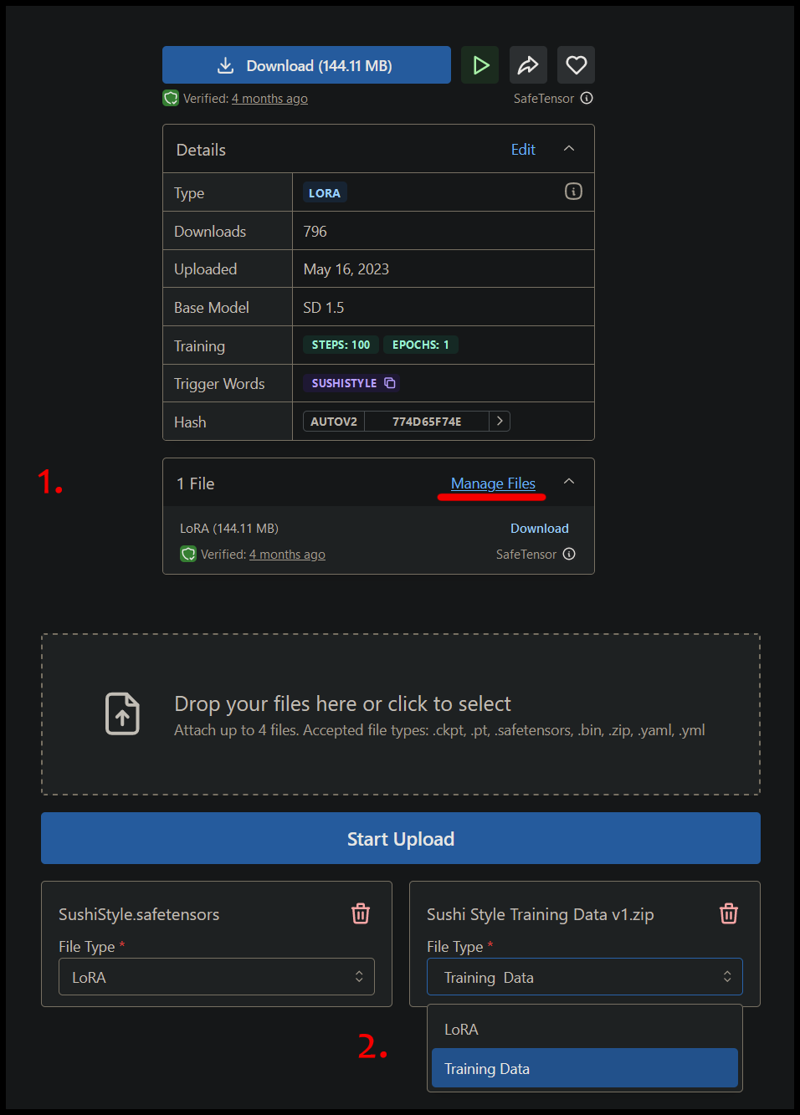

Click on Manage Files on your model

Upload your training data in a zip-file and choose "Training Data" as the type
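If your dataset is a folder of images and caption files, packing it into a zip can be scripted. This is just a minimal sketch using Python's standard library; the function name and the folder/zip paths are my own placeholders, not anything CivitAI requires.

```python
import zipfile
from pathlib import Path


def zip_dataset(dataset_dir: str, zip_path: str) -> list:
    """Pack every file under dataset_dir into zip_path and return the archived names."""
    root = Path(dataset_dir)
    target = Path(zip_path).resolve()
    names = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(root.rglob("*")):
            # Skip folders, and skip the zip itself if it lives inside the dataset folder.
            if path.is_file() and path.resolve() != target:
                arcname = path.relative_to(root).as_posix()
                archive.write(path, arcname)
                names.append(arcname)
    return names


# Hypothetical usage:
# zip_dataset("datasets/cake_style", "cake_style_training_data.zip")
```

The resulting zip is what you upload under Manage Files with the "Training Data" type.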

Links to my dataset gathering articles

ChatGPT Plus Dataset Generation

ChatGPT API Dataset Generation

ComfyUI "One Click" Dataset Generation

Links to my training data and lessons learned

In the Attachments section of this article, you'll find my current Kohya_ss LoRA training data config (kohya_ss Example Config - CakeStyle.json). Remember to change the name, file paths, settings and sample info before using it. This is not a LoRA training guide.

Disclaimer:

My learnings below are just my own theories and understanding. This is not a technical guide, and this just documents my process of making models. If you follow the steps of my earliest models, you'll get worse results. Also, so far I've mostly made styles. My musings below are not useful for things like characters, art styles and other concepts.

Sushi Style

This was my first model. I had no idea what I was getting into, and how addictive it would be. I just wanted to see if it could be done. On my first few days with SD, I found Konyconi's style LoRAs and was blown away by what you could do. So my first idea was to sushify something. I read Konyconi's style creation guide and the video guide from Olivio Sarikas.

Easy, right? Well, my initial results weren't great. I used way too few images, and they were too low quality. I assumed that the trainer would pick out the good parts of the images I provided. This is not how it works. It picks up all parts. Including stuff I didn't want in my sushi. Like grass.

Anyway, like 12 versions later, we have the v1 model I uploaded. If I were to re-train it, I would get much better and many more images from Bing. This is currently the best way I know to train models. I also have better settings now.

Gelato Style

This model took 4 versions to get to this result. A few images were removed where I didn't like their influence on the style.

The whole model works, but is still inflexible. If you try to make a "tree", you WILL get the tree that's part of the training data, unless you prompt it more and specify in detail what you want it to look like. I later learned that this is called memorization, meaning that my model has memorized my very specific image rather than learned and understood the concept of a gelato tree. It means I need more variations of the concept to show it, and I need fewer repeats of my data in the training. So all in all, I need a lot more images for my training data.

Later on, I also learned that if you don't add environment or locations to your training data, the trainer will figure out the most logical location for your data, and place generations there if the generation prompt doesn't specify it. This means that your images will end up at an ice cream shop with this model if you don't prompt the location.

Chocolate Wet Style

With this one I started experimenting with shapes. I figured, if I give it geometric shapes like a cube, a sphere and a pyramid, maybe it can extract a lot of useful shape information from this, and therefore learn it pretty well. I'm still not sure about it, but I tend to include it in all my styles anyway. It's not everything you need but I think it helps.

I also started adding more copies of each item here. You can see there are several tree images, to get less memorization and more variety of that subject. You can see from the removed images that I removed all dog images I had. This was because all animals started to look like that and I didn't like it. It does animals fine without any animal training data anyway, but I'm thinking of incorporating them in my training again, with more varied training images.

Whipped Cream On Top Style

The purpose of this one was to combine it with my other food LoRAs, and to see if rather than transforming the subjects into a type of food, it would instead add something on top. I think it kind of works, but it gets boring pretty quickly.

The images are a mix of Leonardo.ai and Bing, with some real images mixed in too.

I decided to make an adult version too, which almost works like a "whipped cream censor" model funnily enough. The attached training data is for the SFW version.

Peach Fuzz

With this model I wanted to see if you could force more visible vellus hair (the name for peach fuzz).

I used images of blonde female vellus hair as it's more subtle than hairy male bodies for example.

The dataset is from stock photo sites and some googling. No generated images are used.

I have no rights to the images used, so this LoRA is not commercially usable.

Pink Skin

I had the idea to find a way to control the phenomenon of red elbows and knees. It's usually seen in colored manga images, to get some color variation in the skin tones I think. You will also see it if you walk on your knees and elbows for a bit, probably from irritating the blood vessels in there or something. It looks a little bit like a sunburn, which could be useful too.

The images are a mix of stock photos, and some generated images. I painted on the effect on all body parts in Photoshop. In the first attempt I made everything way too red, and I ended up with something like this for a more subtle effect. I also used WD14 captioning, for no particular reason, I was just testing around.

I have no rights to most of the images used, so this LoRA is not commercially usable.

Cake Style

This dataset was created using mostly googled images, and a few generated ones from Bing and Leonardo.

I have no rights to most of the images used, so this LoRA is not commercially usable.

Semla Style

This was the first model where I trained using Bing images exclusively. I was still doing random subjects without any plan here. I was using some geometric shapes, and a lot of animals for this set. I do not have the prompt I used, but it was pretty much something like:

A [parrot] made out of semla

So pretty straight-forward and to the point. Sometimes you don't need much more than that.

Croissant Style

Most of the images from this one were generated by Bing. A few came from some other sources. If I were to tweak it, I would like to figure out how to make it not convert the skin of humans to crispy croissants. That grosses me out so much. I should probably remove the examples of faces made out of croissants from the data.

There were a few images removed (included in dataset sharing). They contributed too much of a "cute face" to early versions of the model. So I removed them from the dataset.

Waffle Style

This dataset was generated mostly using Bing image generation with various random subjects for the prompt. It's less cohesive and more random than my more recent datasets, and I think the model suffers for it.

It has learned animals in a poor way, since there is not enough good variation in there for it to understand the connections, so instead the model overfits on the images of animals that are included.

Abstract Pattern Style

This dataset is important and pivotal for my progress in image model creation.

My early versions of this style were trained only on the images in the root of the image folder. It's only 26 images, and this didn't train well at all.

I reached out and asked for some help from @navimixu (https://civitai.com/user/navimixu/) and they taught me a lot, and we collaborated on the rest of the model.

We both generated half of the rest of the dataset, and as such this model is a bit varied in its results. Sometimes you can see the training come through from the prompts I used (a bit more colorful), and sometimes from the ones Navi created.

I also added a couple of images that were good from my test generations using my original training run.

Whitebox Style

This dataset was generated using Bing image creator.

I set out to generate simple environmental sketches in a whitebox / ambient occlusion style, to simulate early game development pieces.

The idea was to be able to help come up with interesting shapes from blockouts, and add a few simple details on top of it.

The results are alright. I think the dataset needs to be a bit more varied and slightly less blocky, to be a little bit more useful for things outside of environments.

I also included images that I removed from the training, due to them being too colorful. I could probably just have desaturated them :)

Fluffy Style

This dataset was created using the Bing training method. It was captioned with one or more colors for each concept, to make it more responsive to color prompting during generations.

It's quite a small dataset, but it seems to be enough for the concept.
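Color captions like these are easy to write out with a small script. The sketch below is a guess at the workflow, not my exact tooling: it assumes a comma-separated caption with the colors first and the subject last, and it assumes the trainer reads a .txt file with the same base name as the image. The function names and the example subject are made up for illustration.

```python
from pathlib import Path


def color_caption(colors: list, subject: str) -> str:
    """Build a caption such as 'pink, white, teddy bear' (colors first, subject last)."""
    return ", ".join([*colors, subject])


def write_caption(image_path: str, caption: str) -> Path:
    """Write the caption to a .txt file next to the image (assumed sidecar convention)."""
    caption_path = Path(image_path).with_suffix(".txt")
    caption_path.write_text(caption + "\n", encoding="utf-8")
    return caption_path


# Hypothetical usage:
# write_caption("dataset/fluffy_001.png", color_caption(["pink", "white"], "teddy bear"))
```

Because each color shows up as its own word in the caption, the model has a chance to tie that word to the color rather than baking one color into the style.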

Melted Cheese On Top Style

This dataset was also created using the Bing training method. It was captioned with one or more colors for each concept, to make it more responsive to color prompting during generations.

I had to rename the captions and retrain the model to avoid the dreaded furry word from CivitAI's auto-NSFW policies :)

Barbiecore

This dataset was generated using the Bing image generator. There is not a single image of an actual Barbie in the dataset, nor any actual toys. The synthetic data used is inspired by the plastic toy aspects and the pink/white color scheme prompted for.

I decided to use the word "Barbie" in the model trigger word to unlock already existing knowledge of Barbie and include that in my model. This is why we are getting blonde girls often when using the model.

Carnage Style (NSFW, gore / violence)

The training data is SFW though. It's mostly just tendrils, teeth and glowing. No real photos or gore.

I made the style with Bing Image Creator. Here's the prompt I ended up using for a lot of it:

styled after marvel's Carnage, photorealistic [tree in a forest], made out of glowing red spirals, swirls, long sharp teeth fangs and claws, white glowing eyes, tendrils, red slimy skin, brooding horror and darkness, evil energies, slimy guts

In this case, I would mostly swap out "tree in a forest", to the next concept.

For some of them, you have to adjust it. Things like the environment, or water, where I didn't want to have as many faces and mouths/teeth in general.
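Swapping the bracketed part for each new concept can be sketched in a few lines of Python. This is only an illustration of the idea, not a tool I used for this model: the helper name, the shortened template and the concept list are all placeholders.

```python
import re

# Matches one [bracketed] slot in a prompt template.
SLOT = re.compile(r"\[[^\[\]]*\]")


def fill_slots(template: str, *values: str) -> str:
    """Replace the [bracketed] slots in a prompt, left to right, with new values."""
    slots = SLOT.findall(template)
    if len(slots) != len(values):
        raise ValueError(f"template has {len(slots)} slot(s), got {len(values)} value(s)")
    queue = iter(values)
    return SLOT.sub(lambda match: next(queue), template)


# Shortened version of the prompt above, and a hypothetical concept list.
template = "styled after marvel's Carnage, photorealistic [tree in a forest], made out of glowing red spirals"
concepts = ["castle on a hill", "sports car on a city street"]
prompts = [fill_slots(template, concept) for concept in concepts]
```

Concepts that need a different treatment (environments, water and so on) would still get a hand-adjusted template instead of the default one.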

If you don't specify a location, it's likely to just be a black background. If you train only on white / black backgrounds, it will be absorbed by the training data and will generate without an environment unless you prompt for it. So I try to always have some kind of background in most descriptions.

I went with 40 concepts for this model. I'm including a few new concepts for micro, macro and "ethereal" types of connections in the model. Such as [macro, cells, microscope], light rays, magical energy, mystic runes, grass, forest, water, planet.

Galactic Empire Style

The same as I did for the Carnage Style, I tagged everything as "white", in hope of increasing color control. I'm gonna make a new version without this and compare. I'll update this once I have some results.

My prompt for Bing Image Creator was:

studio photography in a style and spirit and sleek design of star Wars imperial regime, a Photorealistic white plastic [airplane]. The Empire from Star Wars. sleek plastic retro futuristic sci Fi look, black secondary details, [soaring the blue skies among the clouds]

I often put the environment description last. To make sure to get the style for my SUBJECT, rather than the ENVIRONMENT. Unless the subject I'm going for is an environment, or the environment is a key part of it.

NES-Style

It's possible that the training data is a bit too abstract in its shapes. I feel like often I'm not getting what I'm hoping for, but sometimes I very much do. If I were to re-train it, I think I would get new data for zoomed out images. Hopefully with more of a plastic feeling.

taintedcoil2 trained a version of it that got slightly different results. I'd be interested in seeing more people attempting to re-train this one and sharing their version.

My prompt for Bing Image Creator was:

A photorealistic RAW photo of a [toaster] in the style of vintage video games, NES, Nintendo Entertainment System, with sharp geometry and edges, gray plastic. With red, white and dark gray details, [in a kitchen]. Controller. Game system.

You have to switch parts of it around, and remove some parts for different prompts of course.

Minion-Style

This dataset is generated with different colors in the prompting and captions, which makes it color-changeable.

The Bing prompt for this one was quite hard to deal with. It had to be changed a lot depending on the results I got. Very often I got the Minion characters, instead of the thing I was prompting for.

a photorealistic, [silver castle], styled inspired the Minions from Illumination, big white eyes with metal goggles, anthropromorphic hybrid creature, 3D CG Render, movie, cute, with yellow details, [on the countryside]

photorealistic, [pink magical energy], pixar style, 3D CG Render, movie, with yellow details

photorealistic, black [macro, cells, celldivision, microscope view], pixar style, 3D CG Render, movie, big white eyes with goggles from The Minions

Keep in mind when developing a look that you are essentially art-directing, but you have to imagine what the results will look like after the model is trained. You're not gonna get an exact replica of your input as output.

For this one I always had to consider how many yellow details to include alongside the other colors. I ended up having yellow "minion"-skin on some of the images, but not all. I'm not sure how much it has affected the training.

Spy World 1950s

This model set out to make a style that integrated hidden cameras and microphones into everyday things. The prompting was pretty straight-forward when generating training data with Bing:

1950s spy themed and espionage flavored Photorealistic [toaster in a kitchen]. with the feeling of intelligence Gathering, unknown situations and a sense of dread, retro scifi gadgetry with lens, spy camera and hidden wires

If I were to gather data for another version of this set, I think I would tone it down to be much more subtle with the cameras. To make them really hard to detect. But I would worry that the training may not be able to pick up on it.

Science DNA Style

This model ran on a mix of mostly 2 Bing prompts:

a phororealistic [toaster] made out of genetic sequences and building blocks of life. proteins, enzymes, cells, chromosomes, Hi-Tech, DNA molecule, glowing science discovery

Inspired by science, a phororealistic [car] made out of genetic sequences, proteins, enzymes, cells, chromosomes, Hi-Tech, DNA molecule, science discovery, scientific diagram, DNA

I had this idea of a very transparent style that would almost work like an X-Ray on objects, but showing exaggerated versions of the "building blocks" that make up the object.

In the end the result is more tangible, but still fairly ethereal.

Expedition Style

The images for this dataset are very pretty. I'm not super happy with the result of the model. I feel like it didn't pick up enough of the style compared to the images.

I may try to figure out a way to capture more of the style. Not quite sure how.

The Bing prompt was for the most part:

In a style of adventurous explorers and archeologists, a Photorealistic [toaster in a kitchen]. Giving the feeling and sense of adventure, exploring the unexplored, mysterious tombs and temples, archeological digs and traps. the spirit of ancient knowledge in dusty tomes, with some magical elements

Cyberpunk Style / World

This dataset is a bit generic. The data is generated from Bing with this prompt:

a cyberpunk inspired, netrunner styled motorbike, in neo tokyo, Inspired by cyberpunk art, Augmented reality. High tech. Low life. Cybernetics. Crime rate.

I then decided to train on it twice. They are both trained from the same data. The only difference is the trigger keyword. One uses the actual word of "Cyberpunk Style", so it "inherits" more information about this subject from the base model you're using. The other one uses a made-up word which should make the training more pure and it should inherit less knowledge about what cyberpunk means, outside of the training data. In theory at least.

From my test generations so far, I feel like the "Style" one is generally more creative and unique feeling. This was the one with the weird activation trigger (C7b3rp0nkStyle).

Halloween Glowing Style

This model was a part of the Halloween Collaboration between myself, navimixu, DonMischo and taintedcoil2. Our goal was to each train a Halloween model, and also train a model with the training data from all our datasets.

We wanted to see what the results would be when multiple "related" art styles were merged into one model.

This is my contribution to that model.

This model was trained during the transition from Dalle2 to Dalle3. So the training data may be a little bit inconsistent, as the original prompting for the model stopped working for Dalle3.

The dataset is pretty good, but it ended up with too many pumpkins. I wanted more sinister glows and eyes to be the focus, but it was nearly impossible to get that without also getting pumpkined to hell.

It's the largest style dataset I have gathered so far: 751 images, as I wanted to cover all the concepts that the other collaborators were using. It is certainly overkill, but it also produces very stable results. For the merged model, I feel like the glowing eyes were very prominent, as the number of images from this set was larger than from the other ones.

Halloween Collaboration Model

This model was a part of the Halloween Collaboration between myself, navimixu, DonMischo and taintedcoil2. Our goal was to each train a Halloween model, and also train a model with the training data from all our datasets.

We wanted to see what the results would be when multiple "related" art styles were merged into one model.

This is the resulting model of everything combined!

A total of 1738 images, in 5 different styles, all trained at the same time.

The result is actually a fairly consistent, yet flexible model that actually takes from all styles.

It is a little bit desaturated, but it definitely works well!

Swedish Desserts

A simple object LoRA. It was trained with images from Google, on 14 different subjects (different desserts) at the same time. The number of images per subject varied between 7 and 19. Collaboration between myself and @kvacky.

While it's trained on specific subjects with keywords, it still picked up the "feeling" of Swedish cakes and desserts, so it can also be used as a dessert/cake enhancing model which adds some Swedish flavors to it.

Cinnamon Bun Style

This was the second model I created entirely with the use of ChatGPT and DallE-3.

The image prompt is something like this:

Photo of a delicious-looking cinnamon-bun [SUBJECT]. The entire aircraft is constructed from fresh, golden-brown cinnamon bun material, with swirls of cinnamon and icing visible.

I use a handcrafted prompt to automate the generation of the dataset images.

Piano Style

The training data from this model was gathered mostly from ChatGPT. I used some kind of starting prompt, but ChatGPT changes the prompt a bit with each one. I used prompts like:

Photo of a shiny luxurious sleek and elegant coffee machine in a kitchen, styled after the aesthetics of a piano.

and

Photo of a sleek coffee machine in a kitchen, exuding the aesthetics of a piano. The machine captures the reflective quality of a piano's surface

BatmanCore

The images from this set were generated with DallE3. Most of them were generated fully automatically with my DallE Image Generator script: (https://github.com/MNeMoNiCuZ/DallE-Image-Generator). A guide on how to use it should be up on the github, as well as in a CivitAI Article (https://civitai.com/user/mnemic/articles).

The prompt I used was mostly:

Envision a [SUBJECT], with an ultra-modern aesthetic, characterized by a sleek silhouette and aggressive geometry. Inspired by the dark knight. The design should incorporate a monochromatic palette, dominated by a deep, matte black and accented with elements that suggest cutting-edge technology. With textures reminiscent of kevlar or carbon fiber. Subtle bat motifs or insignia should be integrated into the design, suggesting a connection to a dark and mature bat-themed super hero.

Overall I think the dataset is fine, but the results are often not too interesting. It keeps on producing way too many armored men if I use the word "Batman" as part of the triggering keyword, and if I train without it, I don't feel like it picks up the style well enough. There's probably something that can be done to improve it, but I'm not quite sure what.

Transformers Style

The dataset was generated with a mix of Bing and ChatGPT (DallE-3 for both).

The prompt used was something like:

A photorealistic RAW photo, a semi-realistic artstyle, photo manipulation of a red and blue spaceship in outer space, in the style of by Michael Bay's Transformers, metallic-looking sharp design, shiny two toned, dual colors scheme, metallic parts transforming it, high-class luxury item

The new experiment here was generating images with mixed colors, and captioning them as such. I think the results are quite positive. If prompted with multiple colors, it will usually split the design up in a reasonable way. Example captions:

blue, white, red, airplane

red, gold, camera

Christmas Postcard Style

The style was developed with Bing with DallE 2.5. But I didn't get around to generating the images then. They were instead generated with ChatGPT and DallE3. I ended up with a prompt like this for DallE3:

In a style and spirit of christmas, a Photorealistic [cup of coffee in a kitchen] making you feel a sense of joy and happiness from the celebratory holiday season. sparkling effects, winter atmosphere and Christmas feeling, snow covered, with sparkling lights and a lot of red feeling

The goal was to get MAXXIMUM CHRIXXMAS, very much over-the-top joy and merriment. I'm not loving the visuals of the result, but I can't argue with the learned style, it very much is exaggerated Christmas. I ended up naming it Christmas Postcard since all the decorations and framing make it useless for most other things.

Christmas Winter Style

This dataset was created as a bit of an experiment. I used 38 different concepts, but only one image of each concept. This means that the model is a bit poorly trained, and you don't get a lot of variety within each concept unless you specify your details. For example, all images of cities are very similar.

As I did use the words "Christmas" and "Wintery" in the model name, it did of course maintain a lot of knowledge about these subjects. Which is perfectly fine in this case.

The dataset was generated using my DallE Batch Image Generator: https://civitai.com/models/195318/

It generated the dataset in 10 minutes, all pre-captioned (except for 2 images that I took from developing the prompt in Bing).

Neon Christmas Style

This is a merge of three models from MNeMiC and DonMischo.

The images that come from the Christmas Postcard Style are quite overwhelming. This is likely because there are more of them (4 for each concept), so they are more consistent.

The Christmas Wintery style also merges quite well with it, so even though there's only 1 image for each concept, it comes through quite a lot.

The Neon Christmas style comes through less automatically, but if you use words like "neon", the trained data will show up quite clearly.

ComfyUI One Click Generator

From this point on, I will mostly be using the ComfyUI One Click LoRA method as outlined by this walkthrough guide on civit. Mirror.

Davy Jones Locker Style

This model started as a DallE 2.5 style, and ended up in a ComfyUI learning experience.

It uses the IPAdapter functionality to extract the style from a source image, and apply it to the generated image. And with combinatorial prompts, I can quickly batch through prompts designed for the concepts I use for training. This is also the first time I've used SDXL to generate training images.
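To give an idea of what batching through combinatorial prompts means, here is a small Python sketch. It is not the ComfyUI workflow itself, just the underlying idea: every subject is paired with every location and the style text is appended. The function name and the example lists are hypothetical.

```python
from itertools import product


def combinatorial_prompts(style: str, subjects: list, locations: list) -> list:
    """Pair every subject with every location and append the shared style text."""
    return [
        f"{subject} {location}, {style}"
        for subject, location in product(subjects, locations)
    ]


# Hypothetical concept lists; the style text is a placeholder.
batch = combinatorial_prompts(
    "covered in barnacles",
    ["toaster", "bookshelf"],
    ["in a kitchen", "on a beach"],
)
# 2 subjects x 2 locations gives 4 prompts, starting with
# "toaster in a kitchen, covered in barnacles".
```

The number of prompts grows as subjects times locations, so a short list of each is usually plenty for a training set.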

The ComfyUI workflow is shared along with the training data, as well as the Style Image used.

The prompt I used to pull out the style from the source was:

found at the bottom of the sea, covered in barnacles, tentacles, moist, dark, damp, wet, dirty, filthy, untouched for centuries

Deadpool-Style

The dataset for the Deadpool style was generated in ComfyUI using my Style Generator workflow (still to be shared). It came from one source image from DallE3, and I then generated the dataset using the following style prompt with SDXL in ComfyUI:

(red:1.4) colored, black and white details secondary color, modern sharp design, made from luxurious materials, leatherwork, stitches, deadpool-style

NES Voxel Style

The dataset for this style was generated in ComfyUI using my Style Generator workflow (still to be shared). It came from one source image from DallE3, and I then generated the dataset using the following style prompt with SDXL in ComfyUI:

a colored, photorealistic (3D:1.2) (Voxel:1.3) hi-tech [SUBJECT], 3D-pixel, (wireframe:1.3), In the style and spirit of Nintendo NES pixel art, AR, 3D, thick outline, wireframe

Wrong Hole Generator

To generate the dataset for this model I used the IPAdapter workflow for ComfyUI. I just added an image of a suitable hole to use as a reference, and gave it a list of wildcards to generate holes onto different things.

Hornify Style

Used the ComfyUI One Click LoRA method as outlined by this walkthrough guide on civit.

I forgot to remove the goats/deer that I wasn't happy with from the first generated dataset. So I did a second version shortly after to fix the model. Both datasets are uploaded on their respective model.

Jedi Style

Used the ComfyUI One Click LoRA method as outlined by this walkthrough guide on civit.

The prompt for generating the set was:

inspired by the Jedi, blue light, Glow, soft edges, hi-tech, sci-fi, [SUBJECT], sci-fi, tech, soft, beige, white design

Cardboard Style

The images for this dataset were generated using Bing and ChatGPT, both with DallE-3.

The prompt was usually something like:

A realistic, life-sized,(SUBJECT GOES HERE), it is entirely made out of cardboard. It should have a detailed, cardboard construction, appearing like a large-scale model. Its texture and appearance should clearly show that it is made of cardboard, with visible corrugations and seams, large chunks of cardboard, oversized, detailed background

Dark Charcoal Style

This dataset was generated with the Bing dataset generation method.

My prompt was usually something like this:

a dark charcoal painting of a bookshelf in a living room. Professional master of charcoal, dark strokes, rough lines, full screen

Semla Style v2

A new dataset generated with the Bing dataset generation method. I realized the old version wasn't up to par when I tried to re-use it for SDXL training. The old model was also a bit unreliable.

Gaelic Pattern Style

An initial image was generated using Bing using this prompt:

a creative fantastic creative depiction of a detailed and Lá Fhéile Pádraig-themed, "industrial boiler in a warehouse". Inspirid by celtic culture, iconographic patterns

And a resulting image was then used with the Comfy Workflow and a similar prompt to create a synthetic dataset resulting in the model.

Element Earth

This dataset was created using the Bing dataset method. The dataset is fine, but I feel like the model didn't perform as well. It's not as creative and dirt-like as I would have hoped. I think it's because I kept the word "earth" in the name. It's too mixed of a concept, considering it means both the dirt as well as our planet.

Element Fire

This dataset was created using the bing dataset method. It's a little bit generic, but overall it plays nicely. It can create quite impressive objects engulfed in flames. It handles people especially well.

Element Wind

This dataset was created using the bing dataset method. I find the outputs of this model stunning and lovely. I would have liked a little bit less desaturation and brightness of the model, so that's something I may incorporate in a future version. A more natural-color behaving version.

One thing to notice from the dataset is that I changed the prompt a bit mid-way through to incorporate more color. You can see the shift between "grass" and "industrial boiler" (with effects and explosion as special cases added after).

The results of this model quite literally blow me away :)

Element Water

This dataset was created using the Bing dataset method. The effects of the model are quite fine, but a bit generic. Since it's a common concept (water), there's already a lot of knowledge in there which gets blended with my added data. It can definitely deliver some splashy images, but for a future version I would definitely train it on multiple colors.

Element Mix

A mix of the 4 other elemental models. I trained 2 versions of this. The first version came out very tame and not very interesting. For this version I just used the same trigger word for everything, like this: Elementsmix airplane. The results were just boring. A shame I already trained 3 versions of this :)

For version 2, I added the individual elements to each image for each element. Meaning I have captions like Elementsmix fire airplane and Elementsmix earth spaceship. This way I got much more interesting results.
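The difference between the two caption schemes is small enough to show in code. This is a minimal sketch of how such captions could be assembled; the helper name is made up, and only the Elementsmix trigger word and the example captions come from the model itself.

```python
from typing import Optional


def element_caption(subject: str, element: Optional[str] = None,
                    trigger: str = "Elementsmix") -> str:
    """Build 'Elementsmix airplane' (v1) or 'Elementsmix fire airplane' (v2)."""
    parts = [trigger, element, subject]
    # Drop the element when it is not given, which reproduces the version 1 scheme.
    return " ".join(part for part in parts if part)


v1 = element_caption("airplane")                # same trigger for everything
v2 = element_caption("airplane", "fire")        # trigger plus the element name
v2_other = element_caption("spaceship", "earth")
```

With the element written into the caption, each element gets its own word to attach to during training, which is probably why version 2 responds so much better to prompting for individual elements.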

The colorfulness of this model's outputs is off the charts. It's quite vivid and expressive. I very much like the result, and when I use it I often randomly allocate the elements with different weights, to get different results. A very fun model to play around with!

Sackboy Character Style

This dataset was created for a bounty using this model (https://civitai.com/models/221798/sackboy-maker-concept). It's an alright dataset, but not great. With the SDXL model, a new dataset would produce much better results.

It was captioned automatically using WD14 tags, with 3 different body types (sackboy, sackgirl, sackbaby).

Split Heart Necklace

Inspired by the marketing material for the Deadpool and Wolverine movie, I generated this dataset with the Bing image creator, prompting for different materials and subjects for the two halves. The prompt used was something like this:

A split broken heart metal necklace heart, cloth strap chain, closeup, the left side is themed around [cat design]. Split in the middle. On the OTHER SIDE, the right side heart is themed around [bird design], on a table

Pareidolia Concept

This dataset was trained on images found on google and other websites. As such I have no rights to the data and neither do you :)

This model and dataset is shared only for research purposes.

Tiny Planet

This dataset was generated using Bing Image Creator.

I did not follow any existing template, instead I just prompted for different location types, environments, and then for some specific subjects, like animals and people doing things. It was a really fun dataset to craft and the results of the model were satisfying.

The prompt used was:

a sphere 360 panorama tinyplanet image, fisheye, depicting a [castle on the countryside, mountains]

Game-Icons.net Style

This dataset is downloaded with a CC BY 3.0 licence from https://game-icons.net/.

I strongly recommend this site for your icon needs for prototyping games.

The images were captioned based on the file names as the amount of icons was so huge.
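A minimal sketch of file-name based captioning, assuming the icons are PNG files named with hyphens or underscores between words, and that captions live in a .txt file next to each image. The optional prefix parameter is hypothetical, in case you want a trigger word in front.

```python
from pathlib import Path


def caption_from_filename(path, prefix=""):
    """Turn 'crossed-swords.png' into 'crossed swords', optionally prefixed."""
    words = Path(path).stem.replace("-", " ").replace("_", " ").split()
    caption = " ".join(words)
    return f"{prefix} {caption}".strip()


def write_caption_files(image_dir, prefix=""):
    """Write a .txt caption next to every .png in image_dir; return the count."""
    count = 0
    for image in sorted(Path(image_dir).glob("*.png")):
        caption = caption_from_filename(image, prefix)
        image.with_suffix(".txt").write_text(caption, encoding="utf-8")
        count += 1
    return count


print(caption_from_filename("icons/crossed-swords.png"))
```

With thousands of icons this is far quicker than captioning by hand, at the cost of captions that are only as good as the file names.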

The training took a few tries, as the results weren't good when there was transparency in the images, and a few other factors.

Semi Soft Style

This dataset was generated using a mix of Dreamshaper XL Lightning and Envy Starlight XL Lightning Nova.

The first pass was generated using Dreamshaper, and then I switched to Nova for the highres fix to get the soft touch I wanted for this style.

The captions were created using a hybrid technique: WD14 captions, mixed with ~20 Moondream captions per image using different questions, and then an LLM to combine and condense this information into a complex and information-dense prompt.

Glimmerkin Style

A simple character generated by Bing Image Creator.

Painterly Fantasia Style - Flux - SDXL - PDXL

This dataset was generated with MidJourney, and provided by user:

https://civitai.com/user/wildcake

dAIversity Detailer - SDXL - Flux - Flux Checkpoint

The images for the dataset were generated with Dreamshaper XL Lightning using some random prompt wildcards (https://civitai.com/models/1095990/200k-random-prompts-wildcard-flux-sdxl).

The captions were created in a long multi-step process.

Example image:

I used Moondream v1 with 21 different prompts per image to capture details about specific aspects of the image. These 21 query outputs were then combined into one long description, which a language model (I think Llama 3.0) compressed down to the essentials of the image, for a long caption.

Example of the outputs of these queries:

{"Art Style": "The image is a digital art piece featuring a white chihuahua dog dressed in a knight's armor, complete with a cape and a shiny golden shield. The dog is posed in a way that makes it appear as if it is a character from a video game or a work of art.", "Background": "A dog is depicted in the image, wearing a golden armor and a red cape. The background features a wall with a pillar, and the dog is situated on a floor.", "Dominant Color": "The most dominant color in this image is white, as it appears in the dog's fur, the armor, and the background. The white color is also present in the dog's eyes, which adds to the overall visual effect of the scene.", "Colors": "The image features a white dog dressed in a golden armor, with a red cape. The dog is wearing a golden shield, and the armor is adorned with gold designs. The colors used in the image are predominantly white for the dog's coat and golden for the armor and shield, with red accents on the cape.", "Element Composition": "In the image, a white dog is dressed in a golden armor, resembling a knight. The dog is sitting on a stone floor, which serves as the background. The dog is positioned in the center of the image, with its eyes and ears facing forward. The armor covers the dog's body, emphasizing its regal appearance. The stone floor provides a solid and stable surface for the dog to sit on, while the background adds context to the scene.", "Composition": "The image is a white dog dressed in a golden armor, sitting on a floor and looking at the camera with a regal pose.", "Contrast": "In the image, there is a contrast between the dog's attire and the surrounding environment. The dog is dressed in a golden armor, which is a combination of gold and red colors, and it is sitting on a stone floor. The stone floor and the dog's outfit create a visually striking contrast against each other. 
Additionally, the dog's white fur stands out against the golden armor, further emphasizing the contrast between the dog and its surroundings.", "Cultural Indicators": "In the image, the small white dog is dressed in a golden armor, which could be a representation of a medieval or fantasy-inspired theme. The armor suggests that the image might be inspired by a specific culture or historical period. Additionally, the dog is wearing a red cape, which could be another cultural indicator or a decorative element to enhance the visual appeal of the scene. The presence of a fire in the background might also be a cultural element, such as a fireplace or a symbol of warmth and comfort in the depicted setting. Overall, the combination of the dog's attire and the fire in the background contribute to the cultural context of the image.", "Emotions": "The dog appears to be feeling proud and confident, as it is dressed in a golden armor and standing tall with its head held high.", "Focal Point": "The focal point of the image is a white dog dressed in a knight's armor, sitting on a stone floor.", "Description": "This image features a white dog dressed in a knight's armor, complete with a shiny suit and a cape. The dog is sitting on a stone floor, looking regal and majestic. The armor is made of a shiny material that reflects light, giving the dog an impressive appearance. The dog's attire and pose make it seem like a character from a story or a work of art.", "Lighting": "The image features a white dog dressed in a golden armor, sitting on a stone floor. The light source is positioned behind the dog, shining on it. The light creates a spotlight effect, focusing the light on the dog and highlighting its golden armor. 
This creates a visually striking and dramatic scene, drawing attention to the dog's attire and making it the focal point of the image.", "Main Objects": "The main objects present in this image are a dog and a dog suit.", "Mood": "The image of a small white chihuahua dressed in a knight's armor and cape conveys a whimsical, playful, and imaginative atmosphere. The dog is dressed in a regal and majestic costume, which is not typical for dogs, and it is sitting on a fire pit, adding a touch of humor and creativity to the scene. This image can evoke feelings of joy, amusement, and a sense of wonder, as it combines elements of a beloved pet with a fantastical or fairy tale theme.", "Perspective": "The image is taken from a close-up perspective, focusing on the dog's face and outfit.", "Pose - Short": "A dog in a golden armor posing for a picture.", "Pose": "A dog is wearing a golden armor and a red cape.", "Race": "The character in the image is a white chihuahua.", "Setting": "In the image, a white dog is dressed in a knight's armor and cape, standing on a floor with a fire behind it. The dog appears to be in a castle-like setting, with the armor and cape giving it a regal appearance. The fire behind the dog adds to the ambiance of the scene, creating a dramatic and majestic atmosphere.", "Time of Day": "In the image, a small white dog is dressed in a knight's armor and standing on a stone floor. The dog is wearing a cape and a shiny golden shield, giving it a regal appearance. Although it is difficult to determine the exact time of day from the image alone, the presence of the cape and the knight's attire suggest that the photo might have been taken during a time when people dressed in medieval-style clothing were more likely to be out and about, such as during a festive occasion or a historical reenactment. 
However, without additional context or visible elements in the image, it is not possible to pinpoint the exact time of day.", "Time Era": "The image is set in a medieval-like era, as evident by the dog's golden armor and the overall appearance of the dog dressed as a knight."}

This was then combined with some of the original WD14 tags for compatibility, creating a tag + caption based approach.

Example output:

armor, looking_at_viewer, shoulder_armor, red_cape, pauldrons, animal, cape, blurry_background, no_humans, blurry, sitting, solo, furry, indoors, animal_focus, breastplate, dog, white_fur, brown_eyes, A small white chihuahua dog is dressed in a knight's armor, complete with a golden shield, standing regally on a stone floor. The background is dark and blurry, with a hint of a fireplace in the distance, suggesting a medieval-like setting. The dog's armor is adorned with gold designs, contrasting with its white fur and creating a striking visual against the dark background. Its red cape flows behind it, adding a touch of color to the image. The dog looks directly at the viewer with a confident expression, as if it's ready to embark on a heroic quest at any moment.

This is what was used to train the dAIversity Detailer LoRA for SDXL (https://civitai.com/models/477442) and Flux (https://civitai.com/models/700837), which was also merged into the Flux dAIversity Checkpoint (https://civitai.com/models/711900).
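The tag + caption format above can be sketched roughly like this. It is a minimal illustration, not my actual pipeline: the function name is made up, and dropping duplicate tags is an assumption I added.

```python
def merge_tags_and_caption(tags, caption):
    """Join de-duplicated tags and a prose caption into one training caption."""
    seen = set()
    unique_tags = []
    for tag in tags:
        tag = tag.strip()
        if tag and tag not in seen:
            seen.add(tag)
            unique_tags.append(tag)
    parts = unique_tags + [caption.strip()]
    return ", ".join(part for part in parts if part)


# Shortened example inputs based on the output shown above.
wd14_tags = ["armor", "red_cape", "dog", "armor"]
caption = "A small white chihuahua dog is dressed in a knight's armor."
print(merge_tags_and_caption(wd14_tags, caption))
```

Putting the tags first and the prose last keeps the result usable with both tag-style and natural-language prompting.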

I also used the same dataset to create the paligemma-longprompt vision model (https://github.com/MNeMoNiCuZ/paligemma-longprompt), which then generates captions in this style from scratch.

Prompts

I learned a few tricks to manipulate the outputs of Moondream along the way.

Use simple descriptions of what you want

No need for correct grammar, the model is pretty daft

Starting with the term "short words" makes it output cleaner shorter results without babbling on about other details

Examples:

{

"prompt-title": "Pose - Short",

"prompt-category": "Pose, Character",

"prompt-text": "Short words describe the pose of this"

}

{

"prompt-title": "Lighting",

"prompt-category": "Description, Atmosphere",

"prompt-text": "Explain the lighting in the image. Where is the light source, and what effects does it create?"

}

{

"prompt-title": "Focal Point",

"prompt-category": "Composition, Detail",

"prompt-text": "Identify and describe the focal point of the image."

}

{

"prompt-title": "Main Objects",

"prompt-category": "Objects, Detail",

"prompt-text": "List the main objects present in this image."

}

{

"prompt-title": "Race",

"prompt-category": "Race, Character",

"prompt-text": "What race or species for this character, summary only"

}

Link to article - https://civitai.com/articles/14046/the-caption-process-of-daiversity-detailer
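Prompt definitions like the ones above could be run against an image with a small loop. This is only a sketch: fake_ask is a placeholder standing in for a real vision-model call (Moondream in my case), and the function names and image file name are hypothetical.

```python
import json

# Three of the prompt definitions from above, copied as-is.
PROMPTS = [
    {"prompt-title": "Pose - Short", "prompt-category": "Pose, Character",
     "prompt-text": "Short words describe the pose of this"},
    {"prompt-title": "Focal Point", "prompt-category": "Composition, Detail",
     "prompt-text": "Identify and describe the focal point of the image."},
    {"prompt-title": "Main Objects", "prompt-category": "Objects, Detail",
     "prompt-text": "List the main objects present in this image."},
]


def query_image(image_path, prompts, ask):
    """Ask every prompt about one image; return {prompt-title: answer}."""
    return {p["prompt-title"]: ask(image_path, p["prompt-text"]) for p in prompts}


def fake_ask(image_path, question):
    """Placeholder for a real vision-model call; it just echoes its input."""
    return f"[answer to '{question}' for {image_path}]"


answers = query_image("dog_knight.png", PROMPTS, fake_ask)
print(json.dumps(answers, indent=2))
```

The result has the same shape as the example output shown earlier: one answer per prompt title, ready to be handed to a language model for condensing.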

Many Many

This dataset was synthetically created with DallE3 and Gemini Image Creator.

A couple of items needed cleaning up. The dataset is more versatile than the model's outputs suggest. The model is heavily biased toward adding characters with arms, even though the intent was to teach the concept of having many of something. The original idea was to create a Deva character generator.

Slice of Life

This synthetic dataset was generated with the gpt-image-1 model from sora.com.

The prompts used were variations of something like this:

A realistic illustration depiction of a sliced mechanical ww2 tank, side view, sliced in thin chunks on the length side, Showing the insides of the object, The parts are sliced and separated, so you see each part by itself. Exploded view

A bunch of clean-up was needed, and I think the dataset needs much more diversity. The model overfits on these few examples unless you use a weight of around 0.5.

Abstract MS Paint Style

Essentially I generated the dataset with a language model. I made a Python script that the language model could make "tool calls" to, so that it requested shapes and such, and the resulting output is the dataset. I strongly recommend checking out the training article.
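To give a rough idea of what "tool calls" for shapes can look like, here is a minimal sketch. It is not the script I actually used: the tool names, the argument names, and rendering to SVG are all assumptions made for illustration, and the model output is hand-written.

```python
import json


def render_svg(tool_calls, width=256, height=256):
    """Turn a list of shape tool calls into an SVG document string."""
    shapes = []
    for call in tool_calls:
        args = call["args"]
        if call["tool"] == "rectangle":
            shapes.append(
                '<rect x="{x}" y="{y}" width="{w}" height="{h}" fill="{color}"/>'.format(**args)
            )
        elif call["tool"] == "circle":
            shapes.append(
                '<circle cx="{x}" cy="{y}" r="{r}" fill="{color}"/>'.format(**args)
            )
        else:
            raise ValueError(f"unknown tool: {call['tool']}")
    body = "\n  ".join(shapes)
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" height="{height}">'
        f"\n  {body}\n</svg>"
    )


# Hand-written stand-in for what a language model might return.
model_output = (
    '[{"tool": "rectangle", "args": {"x": 10, "y": 20, "w": 100, "h": 50, "color": "red"}},'
    ' {"tool": "circle", "args": {"x": 128, "y": 128, "r": 40, "color": "blue"}}]'
)
svg = render_svg(json.loads(model_output))
print(svg)
```

The point of the pattern is that the language model only decides what to draw, while plain code does the drawing.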

Noctichrome

I created this dataset using DallE through Bing Image Creator.

The prompt used was usually something like:

a creative colorful dark illustration of a [SUBJECT] in [LOCATION] dark atmosphere, warped, lineart

With the occasional tweak and adjustment. A bunch of subjects didn't work with this, so I ignored them and trained anyway.
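Filling a bracket template like this one is easy to script if you want a batch of prompts to paste in. A minimal sketch, where the subject and location lists are purely illustrative and not the ones used for the dataset:

```python
from itertools import product

# The prompt template from above, with its two placeholders.
TEMPLATE = (
    "a creative colorful dark illustration of a [SUBJECT] in [LOCATION] "
    "dark atmosphere, warped, lineart"
)


def fill_template(template, subject, location):
    """Replace the [SUBJECT] and [LOCATION] placeholders in the template."""
    return template.replace("[SUBJECT]", subject).replace("[LOCATION]", location)


# Illustrative values only.
subjects = ["fox", "lighthouse"]
locations = ["a forest", "a harbor"]

prompts = [fill_template(TEMPLATE, s, loc) for s, loc in product(subjects, locations)]
for prompt in prompts:
    print(prompt)
```

Any subject that doesn't work well with the template can simply be dropped from the list before generating.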

Color Ink Splat

This dataset was generated using my ComfyUI-One-Click-Dataset-Generator.

https://civitai.com/articles/3406

https://openart.ai/workflows/serval_quirky_69/one-click-dataset/QoOqXTelqSjMwZ0fvxQ9

The intent was to have a watercolor style similar to Sumi-e, with a clean background, and no lettering or stamp symbols on it.

Additionally, the idea was to have the watercolors wet and dripping down, to get some fun details, like color splats of the relevant colors used for the subject. The dataset didn't quite come out this way, so the style is not fully what I intended, but it is still quite nice looking.

Art Deco / Nouveau Style

This model was for a bounty (https://civitai.com/bounties/2935/art-deco-for-pony-andor-pony-based-models). I wanted to go in a colorful and creative direction for it since I've been enjoying the results of colorful models more recently.

I once again went with a Bing-created dataset since the process is pretty smooth and delivers high quality. The Bing prompt I used was something like this:

a creative fantastic creative depiction of a art deco inspired [wooden chair, decorative, simple background]. Inspirid the Decorative Arts in a style of visual arts. Arcs, shapes, architecture, and product design, and bold shapes

Others' Training Data

demoran - LoRA Guide - List of Character Models with training data

tvange365 - Lots of female character training data