The experimental verification of the v1.4 automated training process is nearing completion. This article therefore summarizes the practical results of the v1.4 process and gives an overview of the upcoming v1.5 process.

BTW: For pivotal tuning (the LoRA+pt training method I'm using), there is a separate article with experiments, check it out: https://civitai.com/articles/2494 .

Data Analysis of v1.4 Process

For technical details regarding the v1.4 automated training process, you can refer to this article. The first model successfully trained with the v1.4 process was the character Chtholly Nota Seniorious from the anime Sukasuka; it completed training on August 29, 2023, and was uploaded to the Civitai platform.

From then until the final v1.4 run (including its derivative version v1.4.1), we trained a total of 1540 LoRA models. This section analyzes how those models actually turned out.

Web Images vs. Anime Screenshots

In this large-scale training, we trained on two different types of anime characters:

Web-based Training, using images scraped from image websites (such as danbooru, sankaku, pixiv, zerochan, etc.) for automated training.

Anime-based Training, where characters were extracted from anime videos using object detection algorithms and sorted automatically based on character data features for training.

To further improve the training quality of facial details, we employed a 3-stage cropping method that captures the full body, upper body, and head of each character for training.

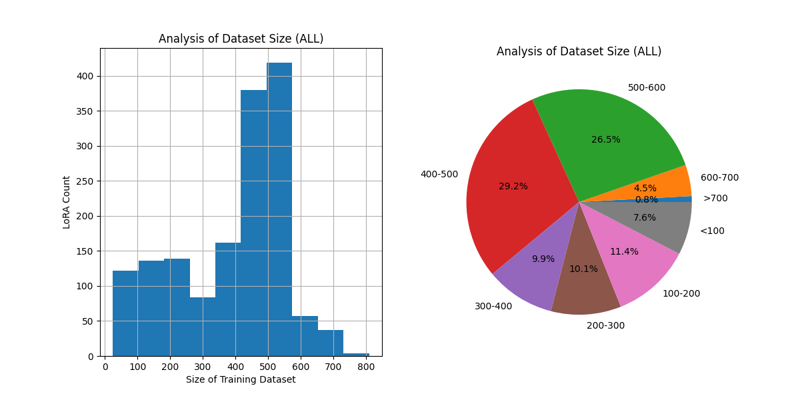

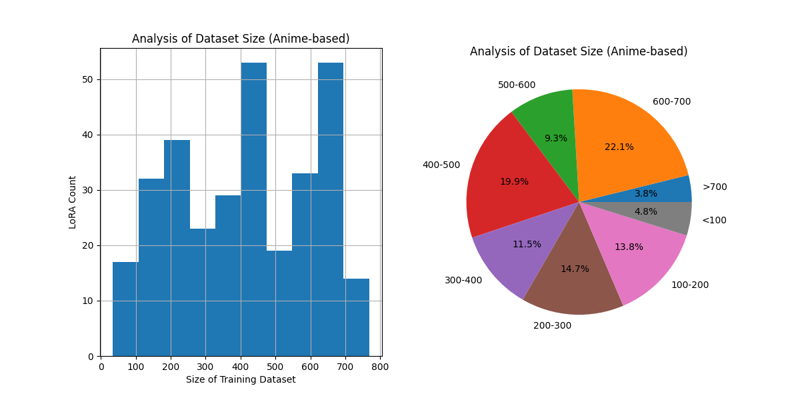

Here is the distribution of the entire training set:

Below are the dataset size distributions for Web-based Training and Anime-based Training:

In summary, several facts can be observed:

In the v1.4 training process, the majority of the training used massive datasets.

However, there is still demand for training with relatively few images (fewer than 200), which is particularly evident in anime-based training. This is an area to improve in future iterations.

How is the Fidelity of the Training?

The paramount metric for training anime characters is fidelity, defined as "how faithfully the model reproduces the original character's image."

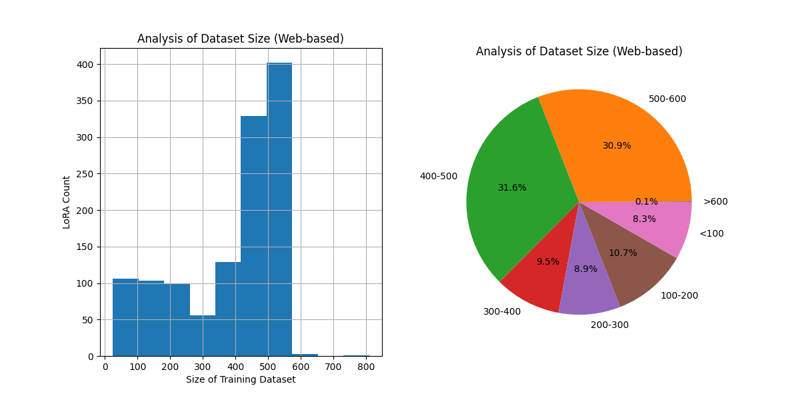

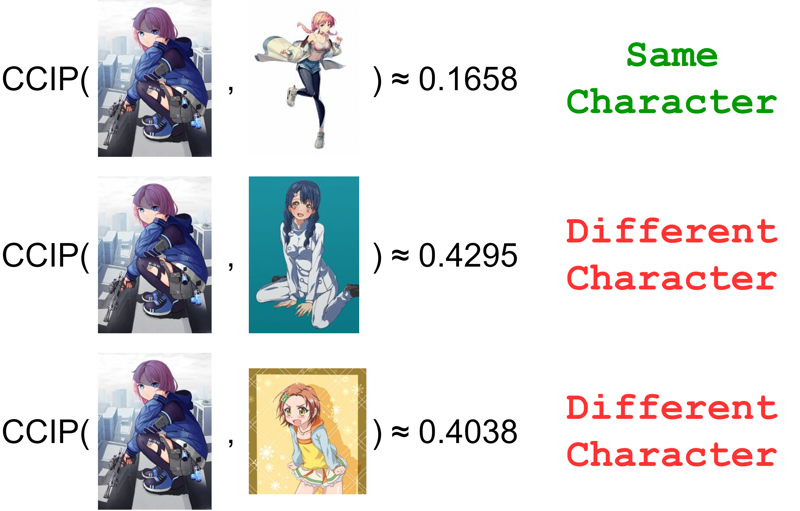

In the v1.4 version of training, we employed a contrastive learning model called CCIP for this evaluation. CCIP is an image-to-image contrastive learning model that takes two individual images as input and outputs the visual difference in characters between the two images. When this difference falls below a specific threshold, it indicates that the characters in the two images are the same.

The image below visually demonstrates the capability of CCIP (you can also try it out yourself with the online demo):

To train CCIP, we utilized a dataset comprising 260k images from 4000+ different characters, which is available here. CCIP has already demonstrated reliable performance in various applications, including evaluating the fidelity of characters generated by the LoRA model. The specific approach involves using the character model to generate images, calculating the CCIP difference between the generated and dataset images, and then calculating the proportion of samples below a certain threshold. We refer to this metric as the Recognition Score, or RecScore, illustrated in the image below:

In simple terms, a higher RecScore indicates that the generated images are more likely to be recognized by CCIP as the same character as the images in the dataset, representing a higher fidelity to the original character.
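As a minimal sketch of the RecScore calculation described above: the function below takes CCIP differences that have already been computed and returns the proportion below a threshold. The function name, the sample values, and the threshold of 0.2 are illustrative assumptions, not CCIP's actual API or default, and how generated images are paired with dataset images is left out.

```python
from typing import Sequence


def rec_score(differences: Sequence[float], threshold: float) -> float:
    """Proportion of CCIP differences that fall below the threshold."""
    if not differences:
        raise ValueError("need at least one difference value")
    recognized = sum(1 for d in differences if d < threshold)
    return recognized / len(differences)


# Made-up difference values; real ones would come from CCIP.
diffs = [0.05, 0.12, 0.30, 0.08, 0.41]
print(f"RecScore = {rec_score(diffs, threshold=0.2):.2f}")  # RecScore = 0.60
```

Three of the five sample differences are below the threshold, so the sketch reports a RecScore of 0.60.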

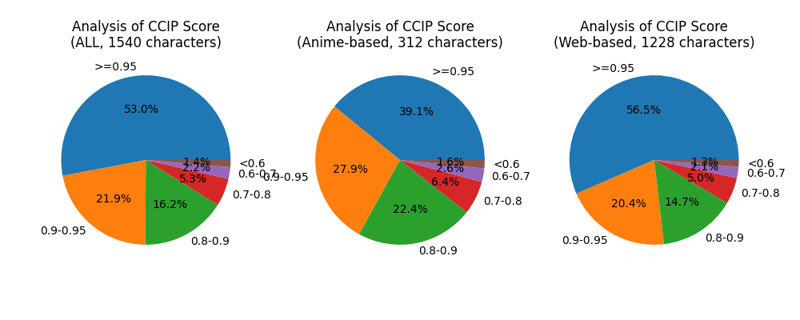

Based on this metric, we conducted a statistical analysis of the RecScores for the v1.4 version models at the optimal steps (where they achieved the highest RecScore). The three pie charts below display the RecScore statistics for all characters, Anime-based characters, and Web-based characters:

Generally, a RecScore of at least 0.7 can be considered good fidelity. It is therefore satisfying to note that the models in this batch reproduce character images faithfully. We investigated the few cases with exceptionally low RecScores in detail; most were caused by inherently disorderly datasets, which interfered with CCIP recognition.

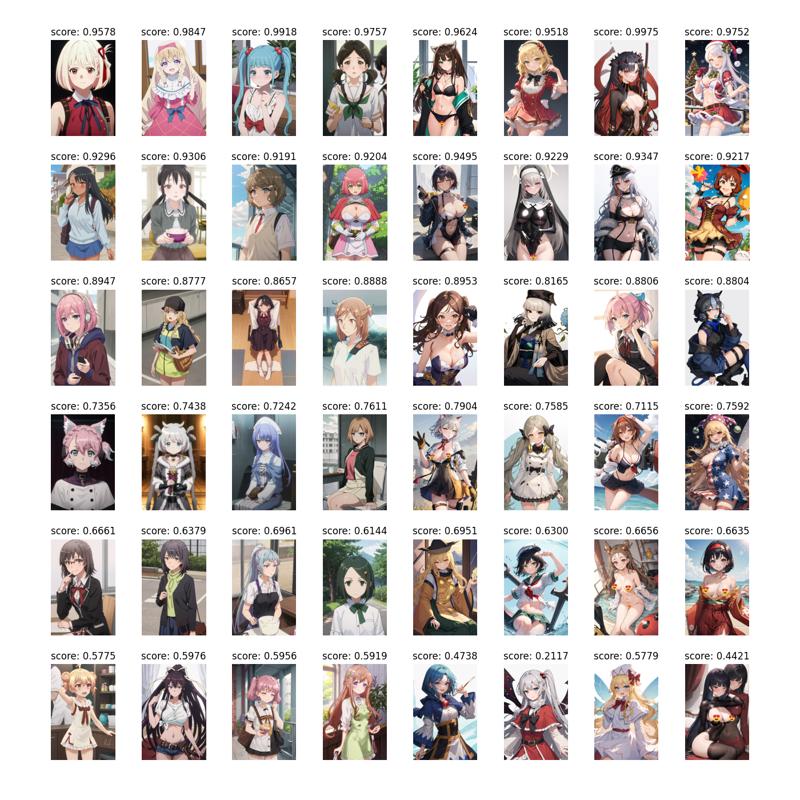

To visually display samples at different RecScore levels, we presented previews of models at varying proficiency levels at the optimal steps in the form of free.png images:

How is Overfitting Addressed in the Training?

Once we confirm the fidelity of the model in replicating the character's image, we aim for a well-balanced training where overfitting does not occur. To put it more specifically, we certainly wouldn't want the model to persist in showing characters still dressed when prompted with porn words.😈

We considered this issue, but v1.4 initially lacked a comparably sound metric for it. Later in the training process, we found that the prompt "beach + starry sky + bikini" is highly sensitive to overfitting in a character LoRA. If the character has become coupled with its clothing, the generated images fail to show the requested attire, which is easy to spot; and if overtraining has damaged the artistic style, that shows up in the starry sky. In contrast, prompts like "kimono", "suit", "maid" and "nude" have broader coverage and are less affected, unless the model suffers from severe overfitting.

To address this, we defined a metric called the bikini score to roughly measure the degree of overfitting. The calculation involves using the weights of the "bikini" label obtained using the wd14v2 image tagging model. A higher value signifies a more prominent presence of bikini elements in the images, implying a lower degree of overfitting for that character.
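A rough sketch of how such a score could be aggregated, assuming the tagger output for each generated image is available as a mapping from tag name to weight. That data format, the function name, and the use of a plain mean are assumptions made for illustration; they are not the wd14v2 interface.

```python
from statistics import mean
from typing import Mapping, Sequence


def bikini_score(tag_weights_per_image: Sequence[Mapping[str, float]],
                 tag: str = "bikini") -> float:
    """Mean weight of `tag` across images; a missing tag counts as 0."""
    if not tag_weights_per_image:
        raise ValueError("need at least one tagged image")
    return mean(weights.get(tag, 0.0) for weights in tag_weights_per_image)


# Made-up tagger outputs for three generated images.
tagged = [
    {"bikini": 0.9, "beach": 0.8},
    {"bikini": 0.1, "dress": 0.8},
    {"dress": 0.95},
]
print(f"bikini score = {bikini_score(tagged):.2f}")
```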

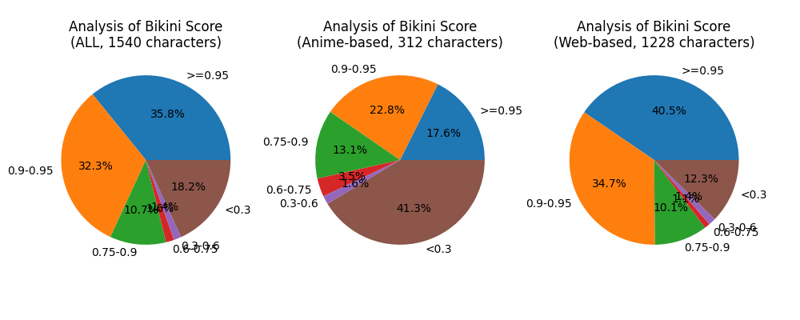

Below is the statistical overview of the bikini score:

The results are surprising: a considerable proportion of character LoRAs have a bikini score below 0.3, including an astonishing 41.3% of Anime-based characters.

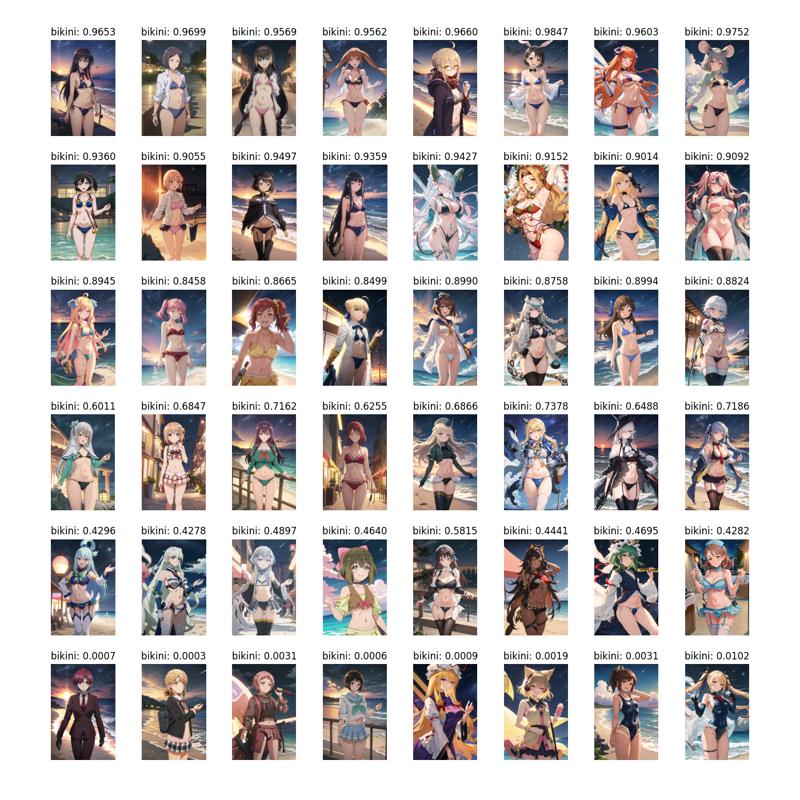

The visualization below presents different levels of the bikini score:

It's evident that for scores below 0.3, when using a bikini prompt, the characters in the images tend to retain attire similar to that in the training dataset, rather than wearing a bikini. The lower the bikini score, the more irrelevant elements appear on the characters or in the background.

Upon investigating models facing the aforementioned issue, we discovered the following facts:

For web-based characters, overfitting tends to occur when characters in the web images predominantly wear similar clothing.

The same holds for anime-based characters, with a higher occurrence due to the highly consistent attire of anime characters compared to game characters.

Therefore, we believe that overfitting related to character attire and background elements is a pressing issue that needs to be addressed and is a focal point for future work.

Moreover, it's worth noting that the bikini score is not an entirely scientific method for detecting overfitting. We believe that a more reasonable approach involves using models like CLIP to measure the alignment between the content in the images and the prompt used, which aligns with the notion of controllability mentioned in related papers. This approach would allow us to capture issues related to elements such as the background and starry sky.
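A minimal sketch of that idea, assuming the image and prompt embeddings have already been produced by a CLIP-style model. CLIP-style alignment is commonly measured as the cosine similarity between the two embeddings. The vectors below are made-up placeholders, and `alignment` is a hypothetical helper name.

```python
import math
from typing import Sequence


def alignment(image_emb: Sequence[float], text_emb: Sequence[float]) -> float:
    """Cosine similarity between an image embedding and a prompt embedding."""
    if len(image_emb) != len(text_emb):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(a * b for a, b in zip(image_emb, text_emb))
    norm_i = math.sqrt(sum(a * a for a in image_emb))
    norm_t = math.sqrt(sum(b * b for b in text_emb))
    if norm_i == 0 or norm_t == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_i * norm_t)


# Placeholder embeddings; real ones would come from the model.
print(alignment([0.2, 0.7, 0.1], [0.1, 0.8, 0.3]))
```

Averaging this value over all images generated for one prompt would give a per-prompt alignment score, which could then be compared across checkpoints.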

Training Volume and Efficiency

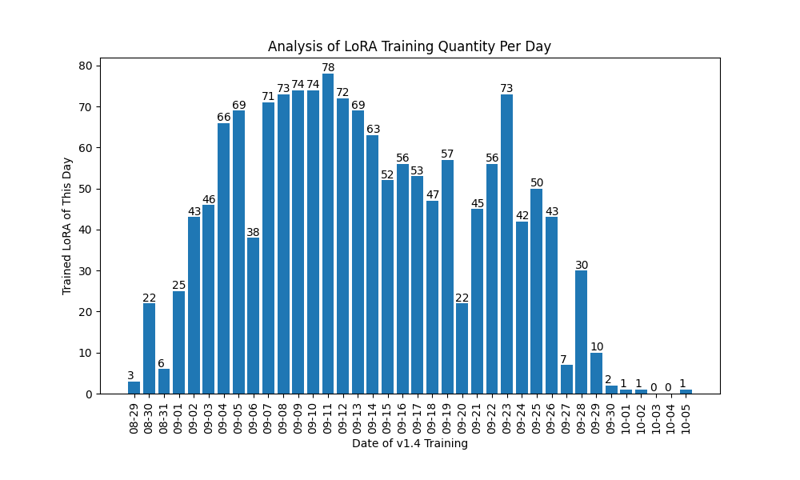

Given the fully automated nature of training, training volume and efficiency are crucial indicators that deserve significant attention. We conducted a daily-based statistical analysis of the time each model was submitted to Hugging Face, as shown below:

In summary, our v1.4 version pipeline took 37 days from start to finish, producing a maximum of 78 models in a single day. Our computational power in use included one 80GB A100 GPU and two 40GB A100 GPUs. Due to certain technical limitations within the cluster, these three GPUs couldn't be used for multi-GPU training. Instead, we had to use three separate containers on Kubernetes (k8s), along with an additional scheduler container for task distribution, to execute distributed training.

To be frank, this is not a very satisfactory outcome. We were using only around 7% of the available GPU power (we are a research team, and only a limited share of computational resources can go to interests like this), but the result still implies that, even on a high-performance GPU such as the A100, the v1.4 training method yields only about 25 models per day per GPU.
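For reference, the throughput figures can be worked out from the numbers quoted in this article (1540 models, 37 days, three GPUs, a peak of 78 models in one day). This is only our reading of the arithmetic, and it assumes all 1540 models were produced within those 37 days.

```python
total_models = 1540   # v1.4 and v1.4.1 models, from the article
days = 37             # pipeline duration, from the article
gpus = 3              # one 80GB A100 plus two 40GB A100s
peak_per_day = 78     # best single day, from the article

avg_per_day = total_models / days       # about 41.6 models per day
avg_per_gpu_day = avg_per_day / gpus    # about 13.9 models per GPU per day
peak_per_gpu_day = peak_per_day / gpus  # 26 models per GPU on the best day

print(f"average: {avg_per_day:.1f}/day, {avg_per_gpu_day:.1f}/GPU/day")
print(f"peak:    {peak_per_gpu_day:.0f}/GPU/day")
```

Under these assumptions, the per-GPU figure of about 25 models per day is close to the peak-day rate, while the long-run average per GPU is roughly half of that.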

In reality, what we aspire to achieve is the ability to use the computational power of 6 A100 GPUs and attain a monthly model training volume that matches the total upload count of the top 50 on the leaderboard (excluding narugo1992) in terms of all-time uploads. Clearly, we have a long way to go to reach such a goal.

Dataset Crawling Efficiency

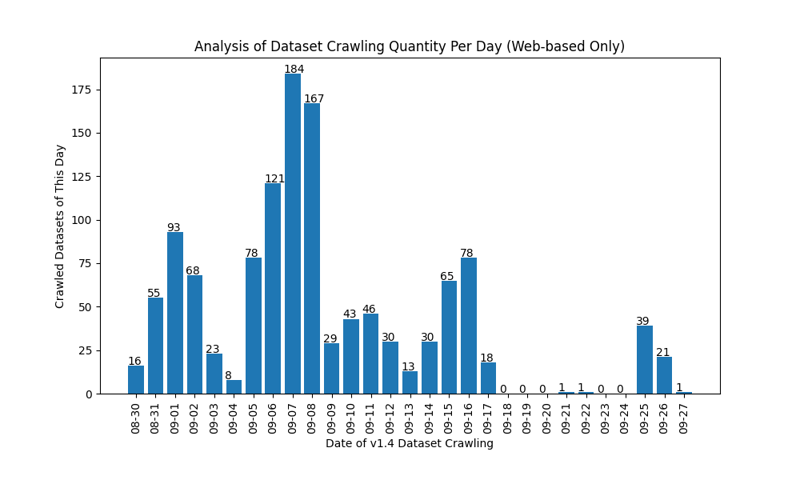

Similar to the previous section, this is another area of significant concern. We gathered data regarding the time it took for all web-based character datasets to be uploaded to Hugging Face, as depicted in the table below:

This indeed demonstrates fairly reasonable efficiency, with more than 150 datasets crawled on the busiest day. Notably, we used only four 2-core 6GB k8s containers for the task, with no GPUs. We have also implemented a scheduler that distributes tasks across the crawler containers. Since CPU and memory are not scarce resources, we can scale the number of crawler containers almost freely if needed, potentially to 100-200 containers. This would enable us to crawl datasets for thousands of characters every day.

However, this also underscores a fact: for web-based character training, the current bottleneck in LoRA production is still the model training phase. This holds for both output efficiency and scalability, since the dataset crawling phase significantly outperforms training on both counts.
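A back-of-the-envelope projection of that scaling claim, assuming throughput grows linearly with the number of containers and that source websites do not become the limiting factor. Both are assumptions, and 150 is used as a lower bound for the peak day.

```python
current_containers = 4
peak_datasets_per_day = 150  # lower bound: the peak day exceeded 150


def projected_daily_crawls(containers: int) -> float:
    """Linear extrapolation from the observed per-container peak rate."""
    per_container = peak_datasets_per_day / current_containers  # 37.5
    return per_container * containers


for n in (100, 200):
    print(f"{n} containers -> about {projected_daily_crawls(n):.0f} datasets/day")
```

At the peak rate this gives roughly 3750 datasets per day for 100 containers and 7500 for 200, consistent with "thousands of characters every day".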

What Are the Best Training Steps?

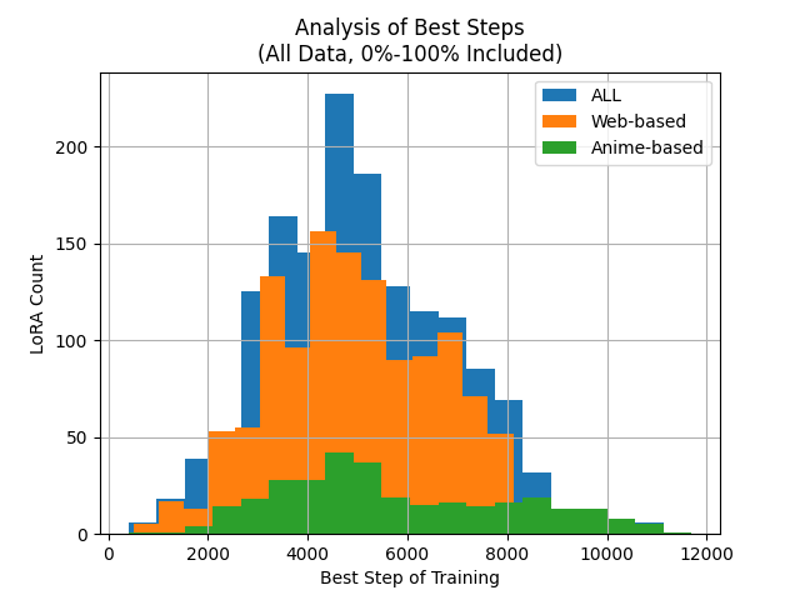

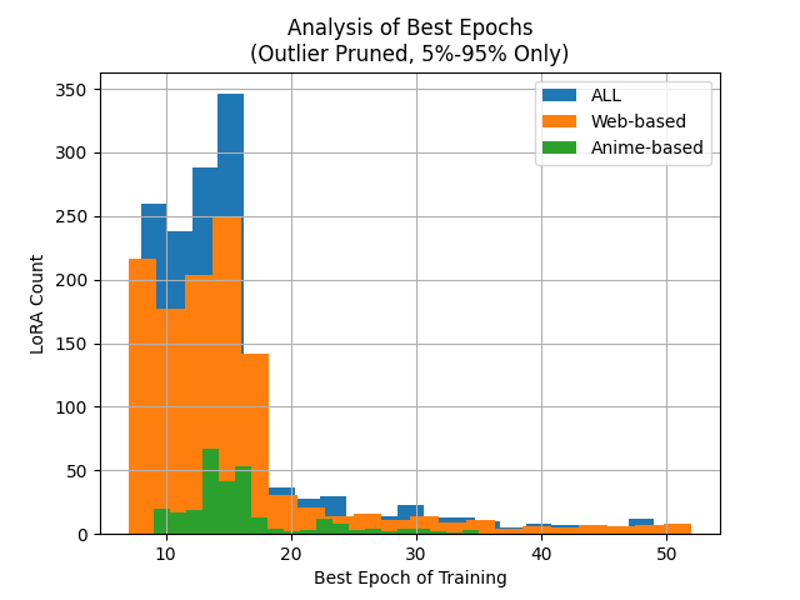

Based on the defined character fidelity metric (generalization was not included in the v1.4 process), we attempted to answer this question. For this, we tracked the steps and epochs at which each model achieved the highest fidelity score during training.

The distribution of steps achieving the highest fidelity score is shown below:

The distribution of epochs achieving the highest fidelity score is shown below:

Conclusions can be drawn as follows:

Evidently, the optimal step counts cluster much more tightly, while the best epochs diverge significantly (outliers have been excluded from the chart, since the distribution would otherwise be almost impossible to display). Hence, setting the training steps rather than epochs is indeed the more reasonable approach.

However, it's worth noting that there is still no specific value for the optimal steps; a wide range of steps can be considered optimal (this is particularly noticeable in Anime-based training). Therefore, during the training process, after setting an appropriate maximum number of steps, a reasonable evaluation metric will be crucial. Directly using the maximum number of steps will likely lead to overfitting or underfitting (as seen in the v1.0 and v1.3 processes previously).
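The selection of the optimal step can be sketched as follows, assuming each saved checkpoint has already been given a fidelity score such as RecScore. The function name and the sample scores are hypothetical, and breaking ties in favor of the earlier step is our own choice for the sketch, not something the pipeline is confirmed to do.

```python
from typing import Mapping, Tuple


def best_checkpoint(scores_by_step: Mapping[int, float]) -> Tuple[int, float]:
    """Return (step, score) with the highest score; ties go to the earlier step."""
    if not scores_by_step:
        raise ValueError("no checkpoints were evaluated")
    step = min(scores_by_step, key=lambda s: (-scores_by_step[s], s))
    return step, scores_by_step[step]


# Made-up evaluation results for checkpoints saved every 500 steps.
scores = {500: 0.62, 1000: 0.81, 1500: 0.88, 2000: 0.88, 2500: 0.79}
print(best_checkpoint(scores))  # (1500, 0.88)
```

Preferring the earlier step on a tie keeps training shorter, which seems a sensible default when overfitting is a concern.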

Additionally, it's worth mentioning that the academic term for the training method we used, involving pt+LoRA, is pivotal tuning. This method has been shown in several papers to perform better than mainstream LoRA. However, according to our experimental results, the optimal training approach differs significantly between mainstream LoRA and pivotal tuning. In the v1.0 process, we followed the training approach of mainstream LoRA, which resulted in widespread underfitting. Hence, our training experience is not directly applicable to the training of mainstream LoRA.

Other Interesting Discoveries

We also conducted some simple analysis on the dataset's conditions. As mentioned in previous articles, we applied 3-stage-cropping to the original images. This means that the dataset used for training would include full-body images, half-body images, and close-up shots of the head.

We defined the following two concepts:

Original Dataset, representing the dataset without 3-stage-cropping

3-stage Dataset, representing the dataset after 3-stage-cropping

We hypothesized that the proportion of close-up shots and similar images could reflect the quality of the character dataset to some extent. This is because high-quality datasets tend to have higher resolution, which means we could obtain more large-sized close-up shots (we filtered the size of close-up shots and half-body images during data preprocessing). To further describe the related indicators, we defined the following three concepts:

Head Ratio, representing the ratio of close-up shots in the 3-stage dataset to the total number of images in the original dataset

Halfbody Ratio, representing the ratio of half-body images in the 3-stage dataset to the total number of images in the original dataset

Dataset Ratio, representing the ratio of the total number of images in the 3-stage dataset to the total number of images in the original dataset
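The three ratios above can be computed as in the sketch below. It assumes the 3-stage dataset consists of exactly the full-body, half-body, and head images; the class name, field names, and counts are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class CropCounts:
    original: int   # images in the original dataset
    full: int       # full-body images in the 3-stage dataset
    halfbody: int   # half-body images in the 3-stage dataset
    head: int       # close-up head shots in the 3-stage dataset


def dataset_ratios(c: CropCounts) -> Dict[str, float]:
    """Head, half-body, and dataset ratios relative to the original dataset."""
    if c.original <= 0:
        raise ValueError("original dataset must not be empty")
    total_3stage = c.full + c.halfbody + c.head
    return {
        "head_ratio": c.head / c.original,
        "halfbody_ratio": c.halfbody / c.original,
        "dataset_ratio": total_3stage / c.original,
    }


# Made-up counts: 100 originals, some crops removed by the size filter.
print(dataset_ratios(CropCounts(original=100, full=100, halfbody=80, head=60)))
```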

Furthermore, since there is no highly accurate metric for dataset quality, we relied on a common-sense assumption: more popular characters tend to attract more fanart, and higher-quality fanart, from skilled artists. We therefore used the number of character images on major websites as a rough measure of dataset quality.

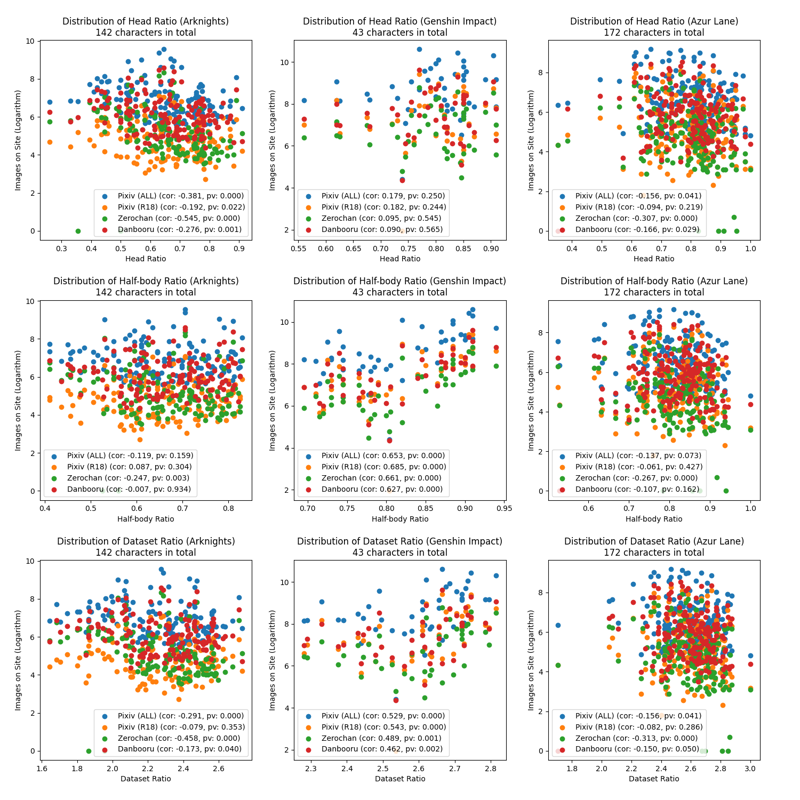

We analyzed the correlation between the three ratios mentioned above and dataset quality. We expected to find a positive correlation. For this, we chose three popular mobile games—Arknights, Genshin Impact, and Azur Lane—as our experimental subjects. We used the Spearman correlation coefficient to describe the correlation, and for ease of presenting the distribution trend on the scatter plot, we logarithmically transformed the number of character images on the websites.
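For readers unfamiliar with it, the Spearman coefficient is the Pearson correlation of the ranks of the two variables. The sketch below is a small standard-library implementation with made-up data, not the code used for the analysis. Because the coefficient depends only on ranks, the logarithmic transform affects the scatter plot but not the coefficient itself.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation: Pearson correlation computed on ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(vx * vy)


# Made-up data: image counts per character and the matching head ratios.
image_counts = [120, 950, 4300, 18000, 52000]
head_ratio = [0.9, 0.7, 0.65, 0.5, 0.3]
log_counts = [math.log10(c) for c in image_counts]
print(spearman(image_counts, head_ratio), spearman(log_counts, head_ratio))
```

In this made-up example the head ratio falls strictly as the image count rises, so both calls return -1.0.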

Here are the distribution plots we obtained:

It must be said that this is a highly unexpected finding: the head ratio, half-body ratio, and dataset ratio are all negatively correlated with character popularity. In particular, the image counts on Pixiv, Zerochan, and Danbooru generally show a high-confidence negative correlation. Zerochan is the main source of our data crawling, hence it exhibits the strongest negative correlation.

We're not sure of the reasons behind this.

Summary of v1.4 Version

In summary, here is our overall assessment of the v1.4 version's automated training process:

The fidelity of character representation has reached an excellent level, with a very high success rate in producing high-fidelity images.

Overfitting is a common issue in the character models, particularly noticeable in the anime-based models. This issue needs urgent attention and resolution.

The training speed of the automated training process is reasonable and sufficient to meet technological iteration needs, but there is room for improvement.

The automated character image crawling speed meets current requirements and possesses high scalability and flexibility.

There are still challenges in training with a small number of images (70 or fewer images in the original dataset), and this case is not well supported at present. In such scenarios, the model success rate is unsatisfactory.

Furthermore, we have uploaded all the raw data used for the aforementioned data analyses as attachments.

v1.5 Technical Outlook

After a detailed analysis of v1.4, we have identified several issues. We will therefore soon launch a brand new automated training process, v1.5. It will keep a workflow similar to the previous one and achieve the same level of automation.

Here is an overview of the new features that will be introduced in v1.5. Some features are still under research, so the actual implementation may vary:

Introduction of Regularization Datasets in Training

Use generic regularization datasets for web-based training and randomly sampled screenshots from anime for anime-based training.

Expected to address the prevalent overfitting issue and improve model training quality when dealing with fewer images.

Utilization of More Scientific Measurement Metrics

A research paper is already available: https://arxiv.org/abs/2309.14859

This paper extensively studies the selection of metrics for model training (including anime characters) and provides ample experimental data, along with openly accessible source code. It covers various crucial metrics such as fidelity, diversity, controllability, etc., offering comprehensive coverage.

We can integrate this into the pipeline to achieve a more comprehensive evaluation of model performance and select more reasonable training steps.

Training Efficiency Optimization

Increase the training learning rate and reduce the number of training steps.

Expected to further boost training speed and generate a larger quantity of models more rapidly.

Faster training speed implies that we can conduct large-scale experiments like the one described in this article more quickly, thus enhancing technological iteration efficiency.

Optimization of Training Buckets

The original bucket used to cluster images based on aspect ratios and resize them to a uniform area size (e.g., 512x512). This led to a problem where, in training scenarios with many small-sized images, the actual images used for training were forcibly enlarged, causing the resulting model to exhibit noticeable blurring.

We will use buckets that include multiple aspect ratios as alternatives to accommodate various image sizes without resizing, thereby eliminating the blurring issue.
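One possible reading of this, sketched below: for each image, pick the bucket with the closest aspect ratio among the buckets that the image can fill without being enlarged. The bucket list, the function name, and the rule of skipping images too small for any bucket are all assumptions for illustration; the actual v1.5 bucket design may differ.

```python
import math
from typing import List, Optional, Tuple

Size = Tuple[int, int]  # (width, height)

# Hypothetical bucket resolutions covering several aspect ratios and sizes.
BUCKETS: List[Size] = [
    (256, 256), (256, 384), (384, 256),
    (512, 512), (512, 768), (768, 512),
]


def pick_bucket(image: Size, buckets: List[Size] = BUCKETS) -> Optional[Size]:
    """Closest-aspect bucket that needs no upscaling; None if none fits."""
    w, h = image
    fitting = [b for b in buckets if b[0] <= w and b[1] <= h]
    if not fitting:
        return None
    log_ratio = math.log(w / h)
    # Nearest aspect ratio first; on ties prefer the larger bucket.
    return min(
        fitting,
        key=lambda b: (abs(log_ratio - math.log(b[0] / b[1])), -(b[0] * b[1])),
    )


for size in [(300, 300), (1024, 1536), (100, 100)]:
    print(size, "->", pick_bucket(size))
```

With this rule a 300x300 image lands in the 256x256 bucket instead of being enlarged to 512x512, which is the blurring scenario described above.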

Optimization of Tags during Dataset Construction

Removal of overlapping tags: for example, when long_hair, very_long_hair, and extreme_long_hair appear together, only the first label should be retained, avoiding the feature dilution caused by the subsequent tags.

[Optional] Pruning of core tags, i.e., removing tags that represent core character features (e.g., hair color, eye color, skin tone, hairstyle, animal ears, etc.).

Benefits of this approach include concentrating all character features on the trigger words to avoid dilution, and improving the user experience by reducing the need for a plethora of core feature tags.

However, this approach also carries risks, such as severe feature mixing when a character has multiple distinct appearances (e.g., different hairstyles and hair accessories), so it remains optional.
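The two tag operations above can be sketched as follows. The overlap group comes from the example in the text; the core tag set, the function names, and the rule of keeping the first tag in each group's own order are assumptions for illustration.

```python
from typing import Iterable, List, Sequence, Set

# Each group is ordered; only its first present member is kept.
OVERLAP_GROUPS: List[List[str]] = [
    ["long_hair", "very_long_hair", "extreme_long_hair"],
]

# Hypothetical core tags for one character.
CORE_TAGS: Set[str] = {"blue_hair", "blue_eyes"}


def drop_overlapping_tags(tags: Sequence[str],
                          groups: Iterable[List[str]] = OVERLAP_GROUPS) -> List[str]:
    """Within each overlap group, keep only the first member that is present."""
    present = set(tags)
    to_drop: Set[str] = set()
    for group in groups:
        members = [t for t in group if t in present]
        to_drop.update(members[1:])
    return [t for t in tags if t not in to_drop]


def prune_core_tags(tags: Sequence[str], core: Set[str] = CORE_TAGS) -> List[str]:
    """Optional step: remove tags describing core character features."""
    return [t for t in tags if t not in core]


tags = ["1girl", "very_long_hair", "long_hair", "blue_eyes", "extreme_long_hair"]
deduped = drop_overlapping_tags(tags)
print(deduped)                   # ['1girl', 'long_hair', 'blue_eyes']
print(prune_core_tags(deduped))  # ['1girl', 'long_hair']
```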

Technical Upgrade and Subsequent Update Plans

As mentioned above, we are about to upgrade to the fully automated training pipeline v1.5. To facilitate this, we have made the following arrangements:

Since we have already completed the replacement of all v1.0 version models on this account (i.e., uploaded v1.4 version models for the same characters), we ceased the v1.4 version pipeline on October 7, 2023.

Therefore, until the comprehensive technical upgrade is completed:

Apart from some experiments, new dataset crawling and model training will be temporarily suspended.

A small number of inventory models will be uploaded daily on this account to ensure updates continue.

We aim to expedite the experiments and technical maintenance, and anticipate the full rollout by the end of October. Please refer to the actual launch time for the most accurate information.

Once the v1.5 pipeline is fully launched:

Unlike v1.4, we will not replace existing old version models comprehensively.

Instead, we will focus on uploading v1.5 versions for models in v1.4 that have been confirmed to have significant issues.

These are the upcoming plans for technical development and updates. We look forward to your continued interest in our future work.

![[2023-10-7] Survey of v1.4 Training Automation and Planning of version v1.5](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/012e061e-9626-4493-8765-5feea30849ae/width=1320/012e061e-9626-4493-8765-5feea30849ae.jpeg)