Objective

Testing the "ReActor" face-swap plug-in

Environment

For this test I will use:

Stable Diffusion with Automatic1111 (https://github.com/AUTOMATIC1111/stable-diffusion-webui)

To install Stable Diffusion, check my article: https://civitai.com/articles/3725/stable-diffusion-with-automatic-a1111-how-to-install-and-run-on-your-computer

ReActor sd-webui-reactor (https://github.com/Gourieff/sd-webui-reactor.git)

Checkpoint

The Truality Engine (https://civitai.com/models/158621/the-truality-engine)

Model

First, I will generate some faces with Stable Diffusion.

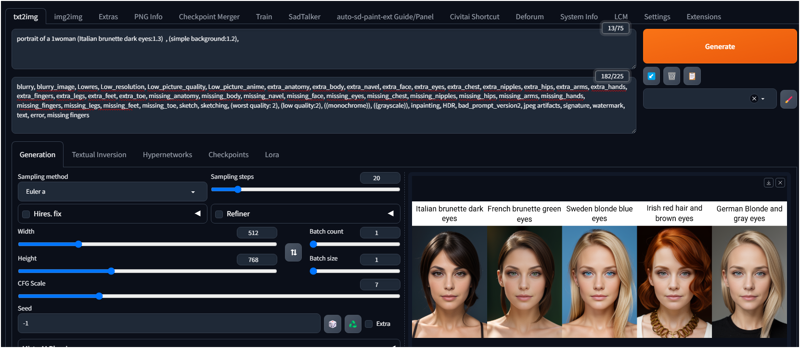

Prompt: portrait of a 1woman (Italian brunette dark eyes:1.3) , (simple background:1.2),

Negative: blurry, blurry_image, Lowres, Low_resolution, Low_picture_quality, Low_picture_anime, extra_anatomy, extra_body, extra_navel, extra_face, extra_eyes, extra_chest, extra_nipples, extra_hips, extra_arms, extra_hands, extra_fingers, extra_legs, extra_feet, extra_toe, missing_anatomy, missing_body, missing_navel, missing_face, missing_eyes, missing_chest, missing_nipples, missing_hips, missing_arms, missing_hands, missing_fingers, missing_legs, missing_feet, missing_toe, sketch, sketching, (worst quality: 2), (low quality:2), ((monochrome)), ((grayscale)), inpainting, HDR, bad_prompt_version2, jpeg artifacts, signature, watermark, text, error, missing fingers

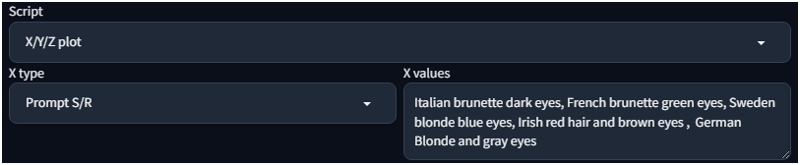

I use the same prompt with the X/Y plot script to get 5 different faces.

I used Prompt S/R: Italian brunette dark eyes, French brunette green eyes, Sweden blonde blue eyes, Irish red hair and brown eyes, German Blonde and gray eyes
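To make the Prompt S/R step concrete, here is a minimal Python sketch of the search-and-replace idea: the first entry in the list is the text to search for in the prompt, and every entry (including the first) produces one variant. The function name `prompt_search_replace` is hypothetical and only illustrates the behavior; it is not code from the web UI.

```python
from __future__ import annotations


def prompt_search_replace(base_prompt: str, values: list[str]) -> list[str]:
    """Return one prompt per value; the first value is the search term."""
    search = values[0]
    if search not in base_prompt:
        raise ValueError(f"search term {search!r} not found in the prompt")
    # The first variant is the unchanged prompt, the others swap in each value.
    return [base_prompt.replace(search, value) for value in values]


base = "portrait of a 1woman (Italian brunette dark eyes:1.3) , (simple background:1.2),"
values = [
    "Italian brunette dark eyes",
    "French brunette green eyes",
    "Sweden blonde blue eyes",
    "Irish red hair and brown eyes",
    "German Blonde and gray eyes",
]

prompts = prompt_search_replace(base, values)
for prompt in prompts:
    print(prompt)
```

Each printed line is the prompt for one of the 5 faces, with only the description inside the first parentheses changed.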

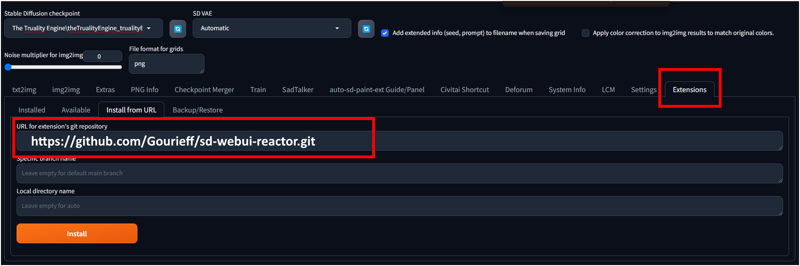

Installing Reactor

Go to the Extensions tab and select "Install from URL".

Paste https://github.com/Gourieff/sd-webui-reactor.git, click Install, and then restart Stable Diffusion.

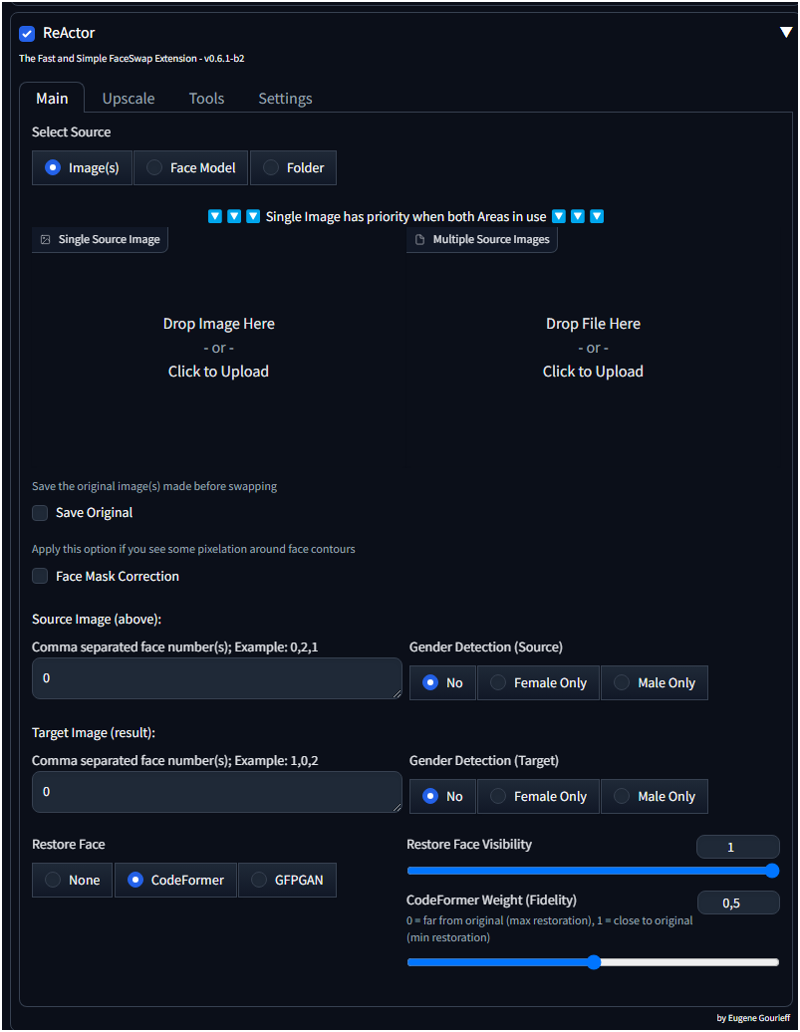

After the restart, you will find a new ReActor section on the main page.

TEST

My test is to generate a video and use the "Image" source with the 5 different faces that I have generated, to see what the results look like.

Prompt:

1woman walking near the lake wearing a (bikini green:1.3), sandals , the day is sunny and the atmosphere is nice. (looking at the camera:1.3) (face focus:1.5) (zoom face:2)

Negative Prompt:

blurry, blurry_image, Lowres, Low_resolution, Low_picture_quality, Low_picture_anime, extra_anatomy, extra_body, extra_navel, extra_face, extra_eyes, extra_chest, extra_nipples, extra_hips, extra_arms, extra_hands, extra_fingers, extra_legs, extra_feet, extra_toe, missing_anatomy, missing_body, missing_navel, missing_face, missing_eyes, missing_chest, missing_nipples, missing_hips, missing_arms, missing_hands, missing_fingers, missing_legs, missing_feet, missing_toe, sketch, sketching, (worst quality: 2), (low quality:2), ((monochrome)), ((grayscale)), inpainting, HDR, bad_prompt_version2, jpeg artifacts, signature, watermark, text, error, missing fingers

Other

Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 1125437345, Face restoration: GFPGAN, Size: 512x512, Model hash: e73d775ff9, Model: theTrualityEngine_trualityENGINEPRO
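If you want to reuse these settings in a script, the line above can be split into key/value pairs. This is a simplified sketch that assumes no value contains ", " (true for this line, but not for every settings line); the function name `parse_generation_settings` is hypothetical.

```python
from __future__ import annotations


def parse_generation_settings(line: str) -> dict[str, str]:
    """Split a 'Key: value, Key: value' settings line into a dictionary."""
    settings: dict[str, str] = {}
    for item in line.split(", "):
        key, separator, value = item.partition(": ")
        if separator:  # skip fragments that are not 'Key: value'
            settings[key.strip()] = value.strip()
    return settings


line = (
    "Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 1125437345, "
    "Face restoration: GFPGAN, Size: 512x512, Model hash: e73d775ff9, "
    "Model: theTrualityEngine_trualityENGINEPRO"
)

settings = parse_generation_settings(line)
width, height = (int(n) for n in settings["Size"].split("x"))

print(settings["Seed"], settings["Sampler"], width, height)
```

Keeping the seed together with the other values is what makes the later tests comparable: only the face changes between runs.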

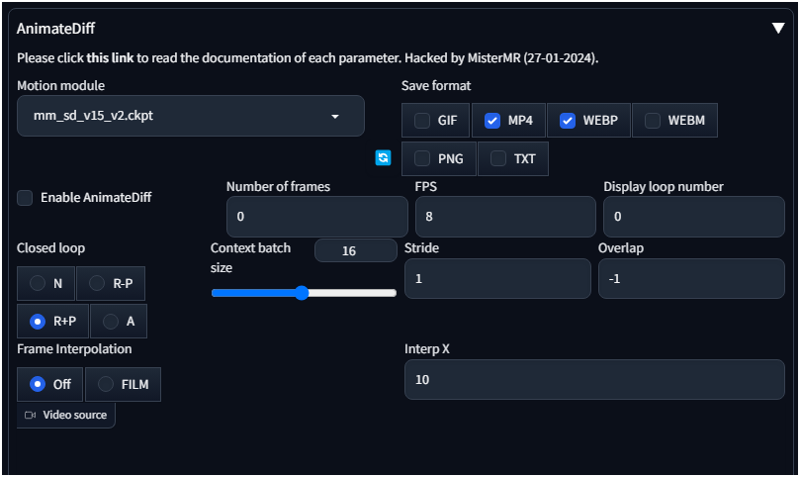

Animation - AnimateDiff

Now I set up AnimateDiff with the options below.

(check this article for more information about AnimateDiff: https://civitai.com/articles/3736/stable-diffusion-extension-animatediff-to-generate-videos)

The default settings generate a video of 16 frames at 8 frames per second, which means 2 seconds of animation.

I set WEBP and MP4 as output formats (WEBP because I can embed it here in the article, MP4 because it has better quality).
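The duration is simply the number of frames divided by the frames per second. A small sketch of that arithmetic (the function name `animation_duration_seconds` is hypothetical) makes it easy to try other values:

```python
def animation_duration_seconds(frame_count: int, fps: int) -> float:
    """Return the animation length in seconds for a frame count and frame rate."""
    if fps <= 0:
        raise ValueError("fps must be a positive number")
    return frame_count / fps


duration = animation_duration_seconds(frame_count=16, fps=8)
print(duration)  # 2.0
```

For example, doubling the frame count to 32 at the same 8 frames per second would give 4 seconds.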

Here is the result:

1° TEST

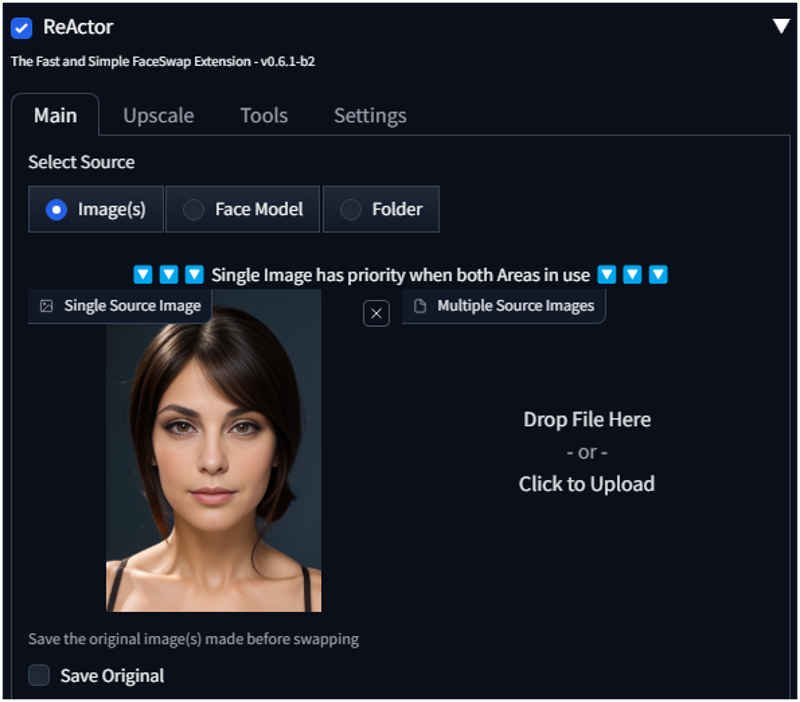

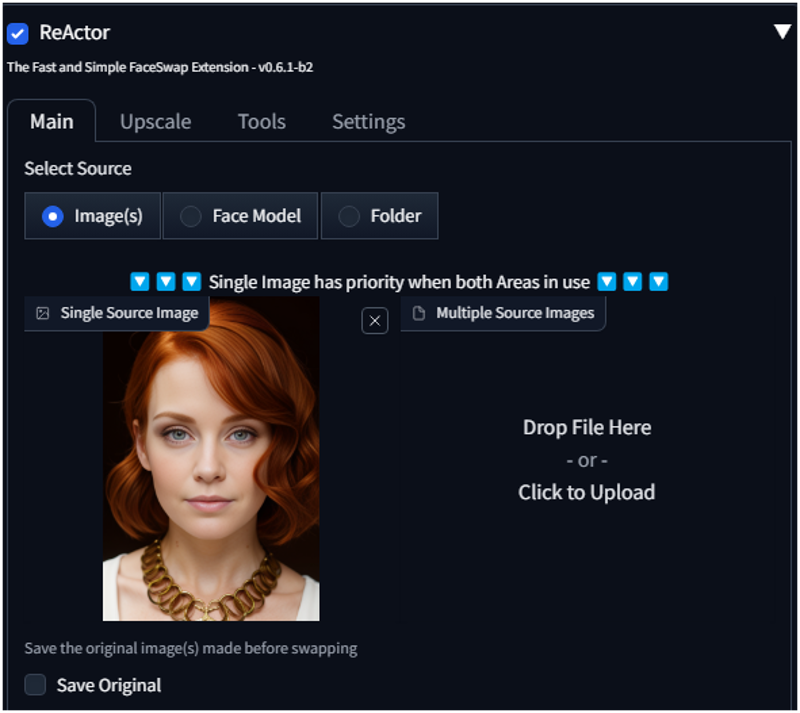

In this test I keep the default settings, enable ReActor, and select the first face.

I don't change anything else.

Because I didn't change the seed, the same video is generated with the new face.

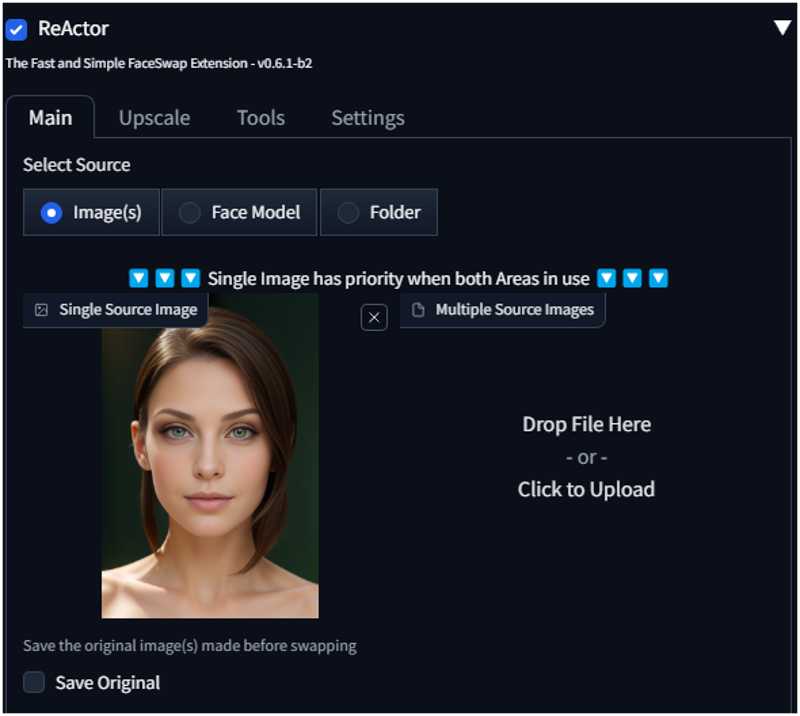

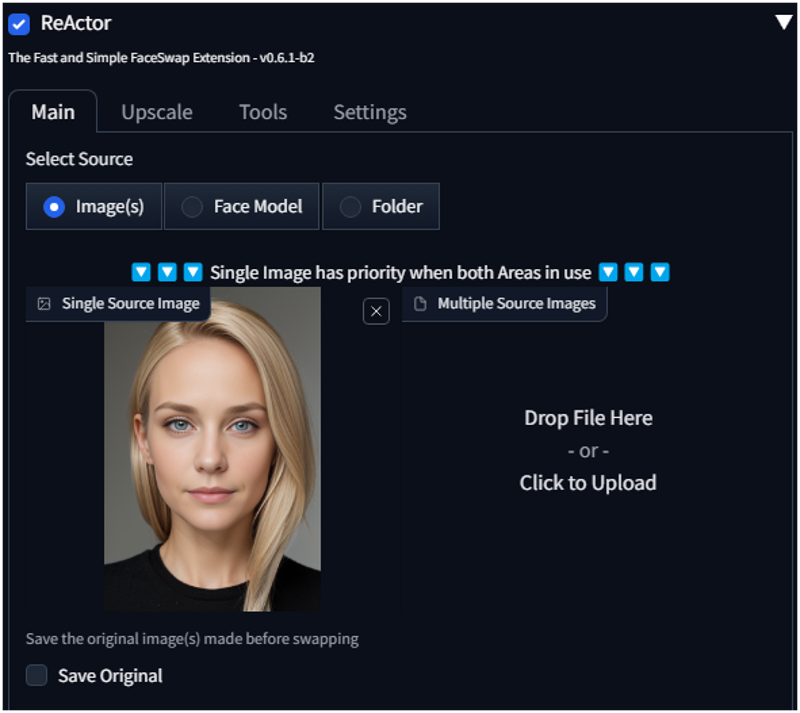

2° TEST

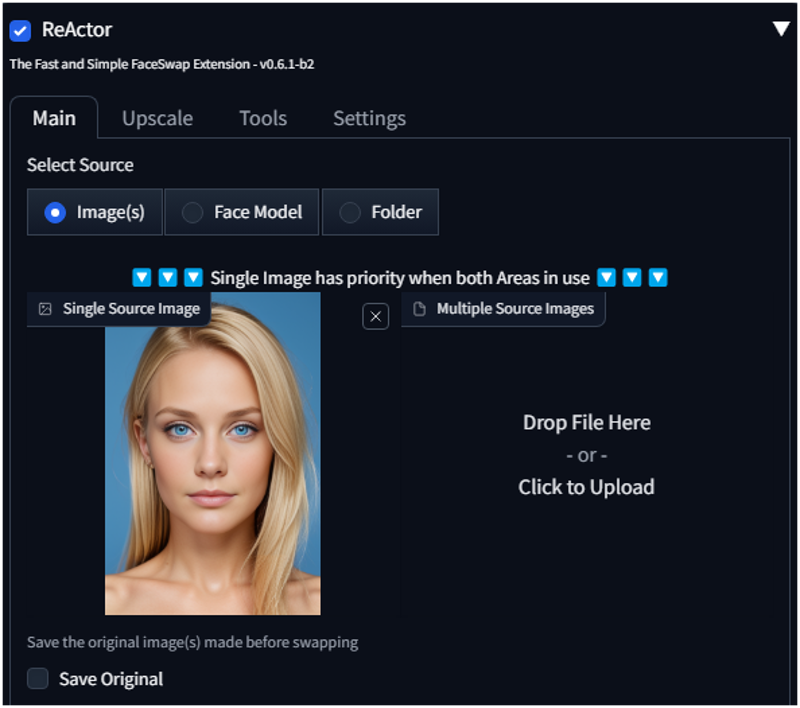

3° TEST

(the eyes do not look good)

4° TEST

5° TEST

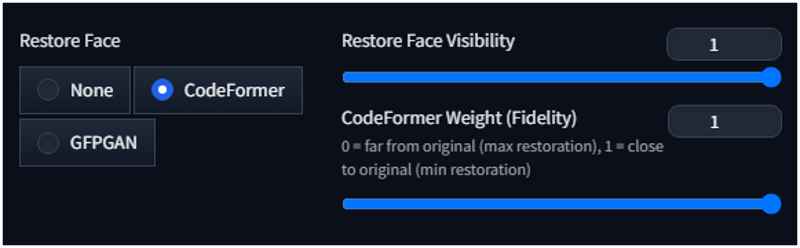

The eyes didn't convince me, so I changed a couple of settings:

6° TEST

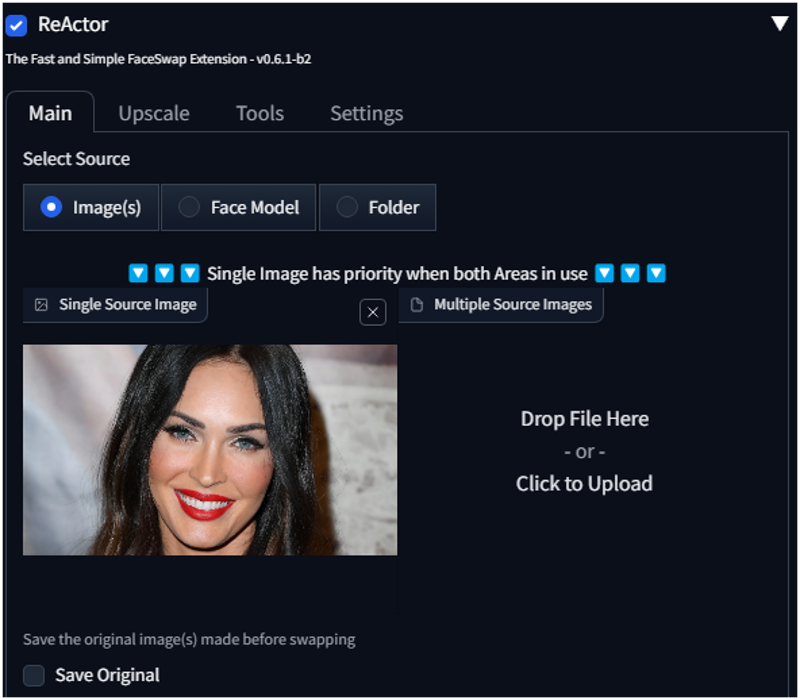

I also did a test with a picture of Megan Fox (I love her beauty).

Results:

The component is very good and does a good job of replacing the faces. Sometimes the eye color is not perfect, but using it is faster than creating an embedding.

Thanks for reading my article. If you have any questions, write a comment.