This FAQ covers questions commonly asked by new users.

Q: What's a LoRA? How do I use one?

A: A LoRA is a secondary type of model that pulls the base model in a particular direction. This could be a particular character, piece of clothing, scene, pose, art style, or any other category you can think of. To use a LoRA, do the following:

1. Download the LoRA and put it in your \stable-diffusion-webui\models\Lora folder. If you don't have this folder yet, install the AUTOMATIC1111 webui first.

2. Put the LoRA in the prompt box: <lora:fingerwave_hair_v1:1>

3. Set the "weight" for the LoRA. This is what comes after the second colon, set to 1 in the example. Follow the instructions on the model page for suggestions on what weight to use, but 0.6-0.8 is often a good starting range. If you're using multiple LoRAs, use a higher weight on the LoRA you want to be featured more prominently.

4. Use the trigger words from the LoRA. Sometimes the LoRA will still have an effect without the trigger words, but you'll usually get the best results by following the instructions from the creator. Consider the fingerwave hair model, which has the trigger word "fingerwave hair":

Comparison of using the LoRA alone, with partial trigger word, and with full trigger word.

You'll know for sure that you've triggered the LoRA because the file hash will show up in the generation details.
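The steps above can be sketched as a small prompt-building helper. This is only an illustration: the function name `build_lora_prompt` and the base prompt are made up for this example, and the default weight of 0.7 is simply picked from the 0.6-0.8 starting range mentioned above.

```python
def build_lora_prompt(base_prompt, lora_name, weight=0.7, trigger_words=()):
    """Return a prompt with trigger words and a <lora:name:weight> tag appended."""
    parts = [base_prompt]
    # Trigger words come from the LoRA's model page
    parts.extend(trigger_words)
    # The ":g" format keeps the weight compact (1 rather than 1.0)
    parts.append(f"<lora:{lora_name}:{weight:g}>")
    return ", ".join(parts)


prompt = build_lora_prompt(
    "photograph of a woman",          # hypothetical base prompt
    "fingerwave_hair_v1",             # LoRA file name without extension
    weight=0.7,
    trigger_words=["fingerwave hair"],
)
print(prompt)
```

The resulting string is what you would paste into the prompt box.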

Q: Why doesn't my model do the pose that I type? Am I doing something wrong? I've seen other people pose their models!

A: Consider using an openpose controlnet. This poses the model by using an image instead of words. You can read the tutorial here.

Q: Why do different LoRAs have different file sizes? Does it have to do with how many images were used to train them?

A: The number of images used to train a LoRA does not affect the file size! The dimensions/alpha setting will affect the size of the LoRA the most. Larger dimensions result in a larger file size.
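To see why the dimension drives file size, here is a rough back-of-the-envelope sketch. It assumes each adapted layer stores two small matrices, one of shape (dimension x inputs) and one of shape (outputs x dimension), saved at 2 bytes per value. The layer shapes are hypothetical and not taken from any real model, so treat the numbers as relative rather than exact.

```python
def lora_pair_params(in_features, out_features, rank):
    """Values stored for one adapted layer: a down matrix plus an up matrix."""
    return rank * (in_features + out_features)


def approx_size_mb(layer_shapes, rank, bytes_per_param=2):
    """Approximate file size in MiB, assuming 2 bytes (fp16) per stored value."""
    total = sum(lora_pair_params(i, o, rank) for i, o in layer_shapes)
    return total * bytes_per_param / (1024 ** 2)


# Hypothetical layer shapes, for illustration only
layers = [(768, 768)] * 100

for rank in (8, 32, 128):
    print(f"dimension {rank}: ~{approx_size_mb(layers, rank):.1f} MiB")
```

Note that the number of training images never appears in the calculation, while doubling the dimension doubles the estimate.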

Q: I keep seeing stuff about xformers? What is it, do I need it?

A: If you have an AMD card or are on a Mac, xformers is not available for you - sorry. Consider instead purchasing an Nvidia GPU with CUDA support to enjoy the full array of popular ML tools. Using xformers with the Automatic webui is optional.

Nvidia GPU owners, read on:

Before pytorch 2.0 was released, xformers was an important piece of software to use in order to speed up generations. Starting with torch 2.0, xformers is no longer necessary and has been superseded by the sdp accelerators.

Here's a sample command to install torch 2.1.2 with CUDA 11.8 support and related packages:

pip install torch==2.1.2+cu118 torchvision --force-reinstall --extra-index-url https://download.pytorch.org/whl/cu118

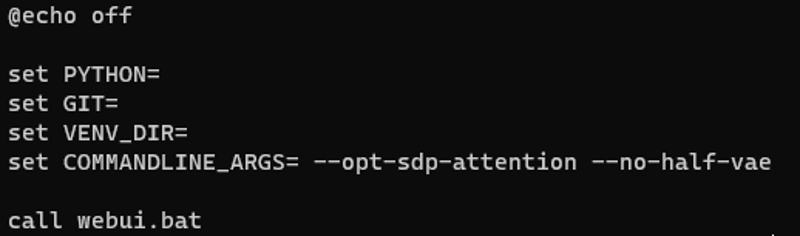

Set the --opt-sdp-attention flag in your webui-user.bat to use the sdp accelerators.
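If you're not sure whether your installed torch is new enough for the sdp accelerators, a quick check is to compare the version string against 2.0. The helper name `supports_sdp` is made up for this sketch; in a real environment you would pass it `torch.__version__` instead of the hard-coded strings used here.

```python
import re


def supports_sdp(torch_version):
    """Return True if a torch version string is 2.0 or newer."""
    match = re.match(r"(\d+)\.(\d+)", torch_version)
    if match is None:
        raise ValueError(f"unrecognized version string: {torch_version!r}")
    major, minor = int(match.group(1)), int(match.group(2))
    return (major, minor) >= (2, 0)


# Example version strings; replace with torch.__version__ on your machine
print(supports_sdp("2.1.2+cu118"))
print(supports_sdp("1.13.1"))
```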

It's important to note that both --xformers and --opt-sdp-attention cause your generations to be "non-deterministic", meaning there will be a small amount of variation even when using an identical prompt and seed. The variation tends to appear in areas like edges and backgrounds, and you have to look fairly closely to notice it.

My webui-user.bat looks like this:

TLDR: No, you don't need xformers. Use the --opt-sdp-attention flag at startup instead.

Update 10/19/2023: It is possible to further increase speed by optimizing individual checkpoints and LoRAs with TensorRT.

Q: My dictionary doesn't have any of these terms. What is everybody talking about?

Checkpoint: The largest file, which determines what you can possibly generate. For example, some models generate more anime-style images and some generate more photorealistic ones.

Textual Inversion: A textual inversion is a file placed in stable-diffusion-webui\embeddings which changes the meaning of a token to have different weights. Simply put, a "token" is a word or group of words, and the "weights" are what shapes are generated by that token.

Negative prompt: A secondary prompt window that tells the model what to avoid. Often, people put ugly things here, or aspects of the image they don't want. If you keep randomly generating characters in hats even though it's not in the prompt, put hat in the negative prompt.

LoRA: Short for Low-Rank Adaptation. A small add-on model that holds learned data, commonly for characters and people. Note that the checkpoint a LoRA is trained against can have a significant impact on how compatible it will be with other checkpoints. Many users recommend training against SD1.5 (at the time of this writing, SDXL was not widely available) to ensure compatibility with the widest range of checkpoints.

Hypernetwork: Without getting too deep into the technical details, this is similar to an early version of LoRA. Hypernetworks aren't very popular; most people find LoRA and LyCORIS easier to use.

Aesthetic Gradient: A technique that nudges a prompt toward the look of a set of reference images, without training a full model.

LyCORIS: Similar to LoRA, but may require installing an additional software extension, depending on your interface. Its training parameters are different from LoRA's, and it can work better for some stylistic choices.

Controlnet: See my guides on using canny controlnets, the most famous controlnet used with img2img. Another popular controlnet is openpose, which allows you to manipulate a skeleton to pose your generated image.

Upscaler: Enlarges an image while keeping it high quality. I recommend the 4x foolhardy upscaler (place in stable-diffusion-webui\models\ESRGAN). The following settings are a good starting point:

Sampler: DPM++ 2M SDE Karras

Steps: 40

Dimension: 512x768

Restore faces, highres fix (turn off restore faces for anime)

25 high res steps

0.4 denoising. Increase this for more detail, or decrease this if there are too many errors
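If you drive the webui through its API instead of the browser, the settings above could be collected into a request body roughly like the one below. This is a sketch under assumptions: the field names are my best understanding of the AUTOMATIC1111 txt2img API and should be checked against the /docs page of your own webui (started with --api), the upscaler name is a placeholder for whatever your install lists in its dropdown, and newer webui versions may expect the sampler and scheduler as separate fields.

```python
def build_txt2img_payload(prompt, negative_prompt="", anime=False):
    """Collect the suggested starting settings into one request body (assumed field names)."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "sampler_name": "DPM++ 2M SDE Karras",
        "steps": 40,
        "width": 512,
        "height": 768,
        # Restore faces is suggested for realistic images, off for anime
        "restore_faces": not anime,
        "enable_hr": True,
        # Placeholder: use the exact name shown in your upscaler dropdown
        "hr_upscaler": "4x_foolhardy",
        "hr_second_pass_steps": 25,
        # Raise for more detail, lower if there are too many errors
        "denoising_strength": 0.4,
    }


payload = build_txt2img_payload("photograph of a woman", negative_prompt="hat")
print(payload)
```

The function only builds the dictionary. Sending it to a running webui is left out on purpose.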

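VAE: The component that converts the model's internal (latent) output into the final image. In practice, swapping the VAE mostly changes color and fine detail. Usually vae-ft-mse-840000-ema-pruned unless the model recommends otherwise.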

Poses: Adjust the posture of the created character with a controlnet. The guide is here: https://civitai.com/articles/157/openpose-controlnets-v11-using-poses-and-generating-new-ones

Wildcard: First select Prompt matrix in the Script dropdown. Separate multiple tokens using the | character, and the system will produce an image for every combination of them. For example, if you use photograph a woman with long hair|curly hair|dyed hair as the prompt, four images are generated:

photograph a woman with long hair

photograph a woman with long hair, curly hair

photograph a woman with long hair, dyed hair

photograph a woman with long hair, curly hair, dyed hair
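The combination behavior described above can be mimicked in a few lines. This is a sketch of the idea rather than the webui's own script: the function name `prompt_matrix` is made up, and it assumes the first segment is always kept while every segment after a | is optional.

```python
from itertools import combinations


def prompt_matrix(prompt):
    """Expand 'base|opt1|opt2' into every combination of the optional parts."""
    base, *options = [part.strip() for part in prompt.split("|")]
    results = []
    # Take 0 options, then 1, then 2, ... up to all of them
    for size in range(len(options) + 1):
        for combo in combinations(options, size):
            results.append(", ".join([base, *combo]))
    return results


for line in prompt_matrix("photograph a woman with long hair|curly hair|dyed hair"):
    print(line)
```

With two optional parts there are 2 x 2 = 4 prompts. Adding a third would double that to 8, so the image count grows quickly.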

Bookmark this page for updates. If you want more tips, read more articles here