The FLUX.1 [dev] Model is licensed by Black Forest Labs, Inc. under the FLUX.1 [dev] Non-Commercial License. Copyright Black Forest Labs, Inc.

IN NO EVENT SHALL BLACK FOREST LABS, INC. BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH USE OF THIS MODEL.

Video tba

New in V5 - /Loki-Multiperson/

Loki-Faceswap-Multi-2 (for 2 persons)

Loki-Faceswap-Multi-3 (for 3 persons)

Live Portrait with replaced Static & Video Backgrounds.

New in V4 - /Loki-combineLayers/

Loki-LivePortrait-StaticBG

Loki-LivePortrait-videoBG

(Note: this was built for the insightface implementation, which is under a research/non-commercial license. There is now a new open-source implementation, which future versions will take advantage of.)

LOKI - FASTEST FACE SWAP for ComfyUI

UPDATE V27 - added MimicMotion support to the LOKI pack.

UPDATE V12 - added LivePortrait support to the LOKI pack.

RELEASE V8 - initial release Fastest Face Swap (all-in-one) workflow.

As an example, you might use LOKI Faceswap to create a face model for your character, then use Trio-Tpose to generate characters from those face models. You can then use LivePortrait for lip-sync speech animation or MimicMotion for character pose animation.

Mimic Motion Instructions:

Using the driving video, the workflow animates the head / face in the image and saves a video that matches the FPS of the source and includes its audio.

- Use a video of a speaking face (a driver video is included in the pack).

- Use an image of your character to be animated (a tpose image is included in the pack).

- Run the workflow to generate the video.

- (Optional) Toggle the interpolation types.

- Follow the notes on matching FPS when interpolating.
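The FPS notes themselves are in the workflow. As a rough sketch of the arithmetic behind them: if the interpolation step multiplies the number of frames by a whole-number factor, the output FPS has to go up by the same factor, otherwise the video gets longer and drifts out of sync with the audio. The function names below are hypothetical and not part of the LOKI pack, and the exact frame count produced by a given interpolation node may differ slightly.

```python
def interpolated_fps(source_fps: float, multiplier: int) -> float:
    """Return the FPS that keeps the clip duration unchanged after interpolation.

    Assumes the interpolation step multiplies the frame count by `multiplier`.
    """
    if source_fps <= 0:
        raise ValueError("source_fps must be positive")
    if multiplier < 1:
        raise ValueError("multiplier must be at least 1")
    return source_fps * multiplier


def duration_seconds(frame_count: int, fps: float) -> float:
    """Length of a clip in seconds for a given frame count and frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return frame_count / fps
```

For example, a 25 FPS driving video interpolated 2x would be saved at 50 FPS, so 100 source frames and 200 interpolated frames both play for the same 4 seconds.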

Models required:

Nodes: (use manager)

https://github.com/kijai/ComfyUI-MimicMotionWrapper

Models:

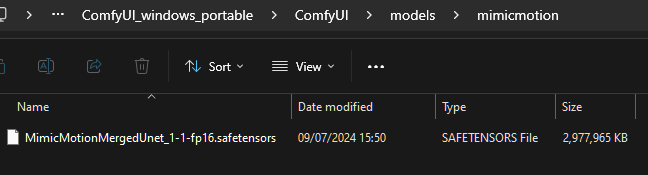

https://huggingface.co/Kijai/MimicMotion_pruned/tree/main

- place all inside /models/mimicmotion/

Also requires Diffusers SVD XT:

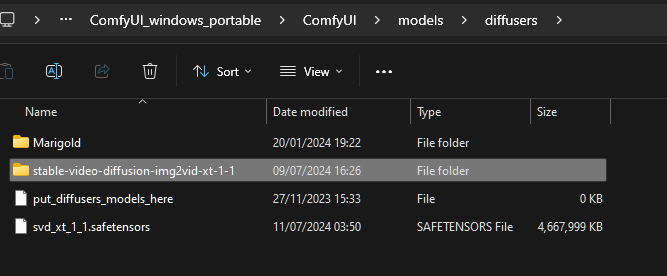

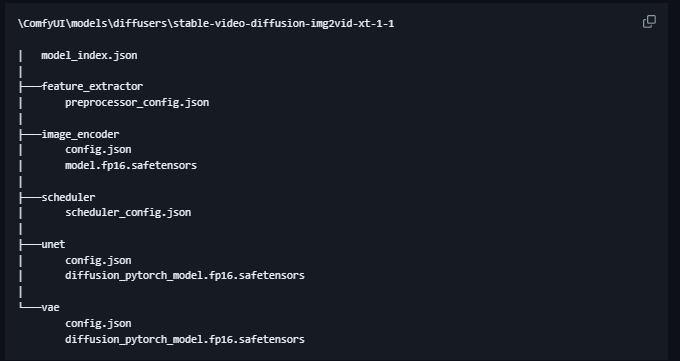

https://huggingface.co/stabilityai/stable-video-diffusion-img2vid-xt-1-1/tree/main

- place inside /models/diffusers/stable-video-diffusion-img2vid-xt-1-1/

- move the "svd_xt_1_1.safetensors" to /models/diffusers/
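If the workflow cannot find its models, a quick check of the folder layout can help. This is a minimal sketch that assumes the paths listed above are relative to your ComfyUI install folder. The function name and the `ComfyUI` root in the usage note are placeholders, so adjust them to your setup.

```python
from pathlib import Path

# Relative paths taken from the instructions above.
# Assumption: they sit under the ComfyUI install folder.
REQUIRED_PATHS = [
    "models/mimicmotion",
    "models/diffusers/stable-video-diffusion-img2vid-xt-1-1",
    "models/diffusers/svd_xt_1_1.safetensors",
]


def missing_model_paths(comfy_root):
    """Return the required paths that do not exist under comfy_root."""
    root = Path(comfy_root)
    return [rel for rel in REQUIRED_PATHS if not (root / rel).exists()]
```

Calling `missing_model_paths("ComfyUI")` returns an empty list when everything is in place. It only checks that the folders and the moved file exist, not that the downloads inside them are complete.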

all is explained in the video :)


Live Portrait Instructions:

We can now use LOKI-LivePortrait to animate our characters using a character image and video-chat footage that you can record on any phone, webcam or other device.

Using the driving video, the workflow animates the head / face in the image and saves a video that matches the FPS of the source and includes its audio.

- Use a video of a speaking face (a driver video is included in the pack).

- Use an image of your character to be animated (a tpose image is included in the pack).

- Run the workflow to generate the video.

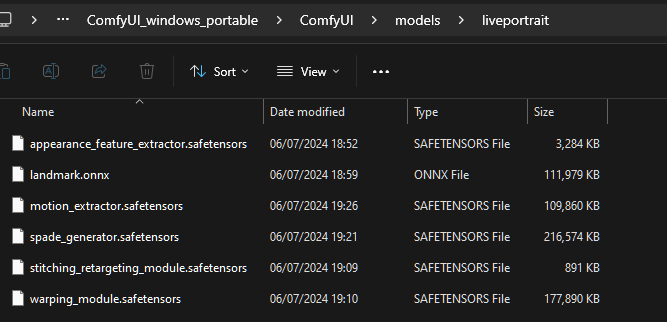

Models required: https://huggingface.co/Kijai/LivePortrait_safetensors/tree/main


Download all the models from the link and place them in /models/liveportrait as shown above. A full explanation and demonstration is in the Loki LivePortrait video.
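If you would rather script the download than click through the file list, something like the following may work. It is only a sketch: it assumes the `huggingface_hub` Python package is installed, that /models/liveportrait sits under your ComfyUI install folder, and that keeping the repository's own folder structure matches the layout shown above. The helper names are hypothetical.

```python
from pathlib import Path

# Repository named in the link above.
LIVEPORTRAIT_REPO = "Kijai/LivePortrait_safetensors"


def liveportrait_dir(comfy_root):
    """Target folder for the LivePortrait models (assumed to be under the ComfyUI root)."""
    return Path(comfy_root) / "models" / "liveportrait"


def download_liveportrait(comfy_root):
    """Download every file in the repository into models/liveportrait."""
    # Imported here so liveportrait_dir() works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    target = liveportrait_dir(comfy_root)
    target.mkdir(parents=True, exist_ok=True)
    # snapshot_download keeps the repository's folder structure inside local_dir.
    snapshot_download(repo_id=LIVEPORTRAIT_REPO, local_dir=str(target))
    return target
```

Calling `download_liveportrait("ComfyUI")` would fetch the whole repository, so compare the result against the screenshot before running the workflow.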

All above: V12-LivePortrait workflow

All below: V8-FaceSwap workflow

All required nodes and models are available from the Launcher, or can be found in the description of the video (for example, those needed to set up the SUPIR upscaler for the first time).

Jump to the article: https://civitai.com/articles/5915

Note: SUPIR is not used to upscale the Batch modes because it would take forever! You can experiment with upscaling the video after assembly in the usual ways.