First of all, this was originally a guide I created at the request of a Discord channel (it has changed quite a bit since). It is also posted on rentry (that one is out of date). This guide is relatively low granularity and is mostly focused on character LORA/LyCORIS creation. It is also geared towards training at 512x512 on SD 1.5, as my video card is too crappy for SDXL training. Ask in the comments if any part needs clarification; I will try to respond (if I know the answer :P) and add it to the guide. LyCORIS creation follows all the same steps as LORA creation but differs in the choice of training parameters, so the differences are covered in the baking section.

Edit (20250512): Updated versions of some of the scripts I use during dataset preparation can be found here: https://civitai.com/models/82080/powershell-scripts-misc-scripts-for-dataset-operations

They are mostly scripts to remove borders, make images square, convert to PNG, turn pseudo-grayscale into true grayscale, remove the alpha channel and convert to true monochrome (mostly for lineart that is a bit faded). There is also a poor man's duplicate image finder using tags, and a couple of scripts to rename png/txt pairs to numeric names.

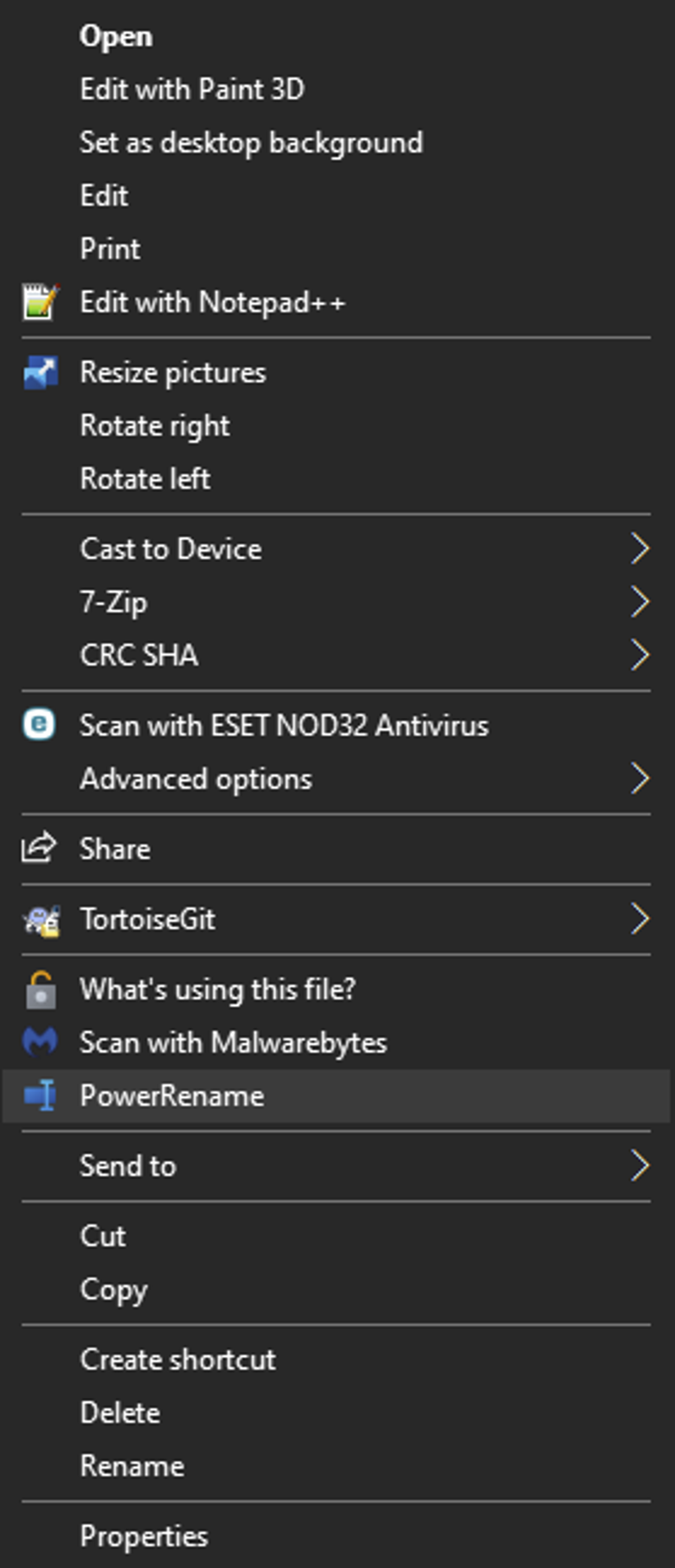

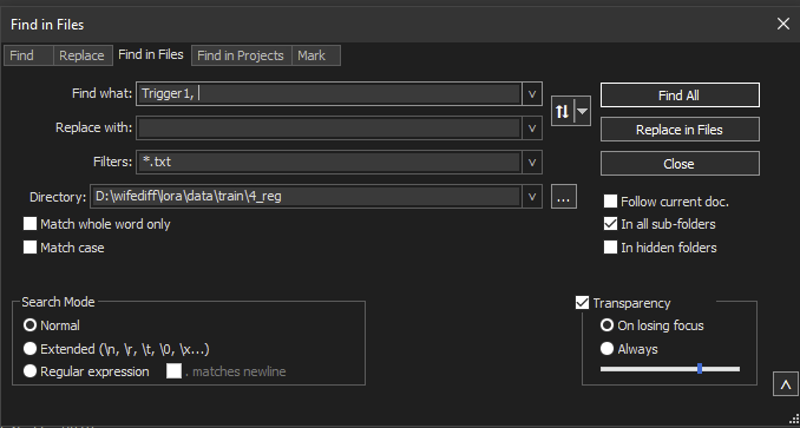

Edit (20250428): I was fixing a V1 LORA which turned into a V3, so I decided to put to paper the methodology I follow to fix triggers. The addition is in the Troubleshooting and FAQ section.

Making a Lora is like baking a cake.

Lots of preparation and then letting it bake. If you didn't properly make the preparations, it will probably be inedible.

I tried to organize the guide in the same order as the steps required to train a LORA, but if you are confused by the order, try the checklist at the bottom and then look up the details in the relevant sections as required.

This guide is for offline (local) creation. You can also use Google Colab, but I have no experience with it.

Introduction

A good way to visualize training is as the set of sliders used when creating a character in a game: if we move a slider, we may increase the size of the nose or the roundness of the eyes. The real ones are of course much more complex. For example, we can suppose that "round eyes" means sliders a=5, b=10 and c=15, and then maybe "closed eyes" means a=2, b=7, c=1 and d=8. We can consider a model as just a huge collection of these sliders.

So what is a LORA? When we ask for something "new", the model just goes "What? I have no sliders for that!" That is where a LORA comes in: we tape some new sliders onto the model and tweak some of the preexisting ones to values we prefer.
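Less figuratively, the original LoRA paper freezes each base weight matrix W and trains two thin matrices, down (A) and up (B), whose product is added on top: W' = W + (alpha / rank) * B·A. The rank and alpha are the network dim and alpha you pick at baking time. The pure-Python sketch below uses toy 2x2 numbers only to show that arithmetic; it is an illustration, not code from any training script.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def apply_lora(w, down, up, alpha):
    """Return W + (alpha / rank) * (up @ down); the frozen W itself is not modified.

    w:    d_out x d_in base weights (the model's existing "sliders")
    down: rank x d_in matrix (A in the LoRA paper)
    up:   d_out x rank matrix (B in the LoRA paper)
    """
    rank = len(down)
    scale = alpha / rank
    delta = matmul(up, down)
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def lora_param_count(d_out, d_in, rank):
    """(values trained by the LoRA pair, values in the full matrix)."""
    return rank * (d_in + d_out), d_out * d_in


if __name__ == "__main__":
    w = [[1, 0], [0, 1]]
    print(apply_lora(w, down=[[1, 1]], up=[[1], [2]], alpha=1))  # [[2.0, 1.0], [2.0, 3.0]]
    print(lora_param_count(768, 768, 16))  # (24576, 589824)
```

With dim 16 on a 768x768 weight matrix, the pair holds 24,576 trainable values instead of 589,824, which is why a LORA file is so much smaller than a full model.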

When we train a LORA, we are basically generating all those sliders and their values, and what our captioning does is divide the resulting sliders between the tags. The model does this by several means:

Previous experience: If a tag is, for example, a type of clothing worn on the lower part of the body, such as "skirt", the model will check what it knows about skirts and assign the corresponding set of sliders to it. This includes colors, shapes and relative locations.

Word similarity: If the tag is, for example, skirt_suit, the model will check the individual concepts and try to interpolate. In my opinion, this is the main cause of bleedover (concept overlap).

Comparison: If two images are largely the same, one has a "blue object" and the other doesn't, and the image with the "blue object" has an extra tag, the extra sliders will be assigned to that tag. So now the new tag = "blue object".

Location: For example the pattern of a dress occupies the same physical space in the image as the dress concept.

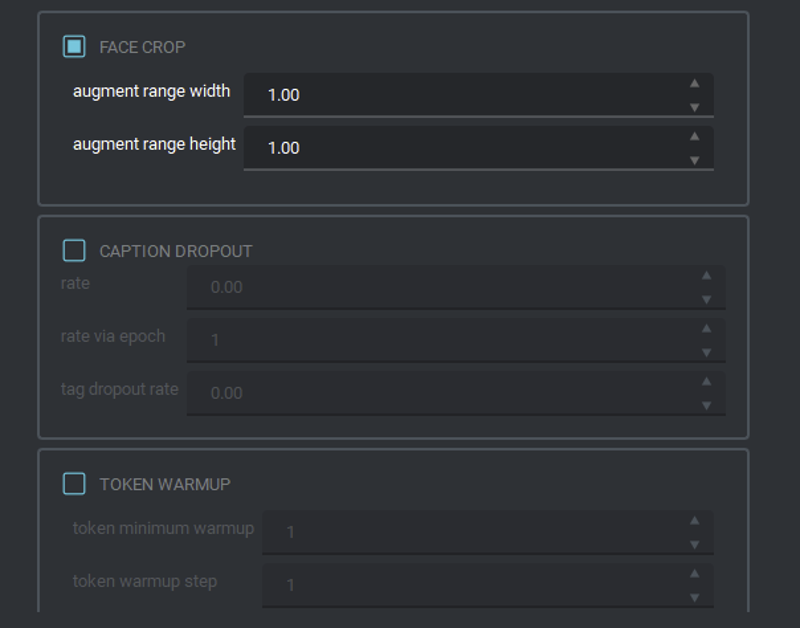

Order: The first tags, from left to right, get sliders first. This is what the caption ordering options act on (keep tokens, shuffle captions, drop captions and caption warmup).

Remainder: Whatever sliders are not taken up by other tags will be evenly distributed among whatever tags remain (I suspect this is done in increasing order of each tag's current amount of sliders [which would be zero for new triggers] and then by location), hopefully our new triggers. So remember to always try to put your triggers at the beginning of the captions.

Magic: The above are just the ones I have discerned; it could use many other ways.
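The ordering options can be pictured with a small Python sketch: the first keep_tokens tags stay put (your trigger), the rest are shuffled and may be dropped. It assumes comma-separated captions and only illustrates the idea; it is not kohya's implementation, and augment_caption is a hypothetical helper name.

```python
import random


def augment_caption(caption, keep_tokens=1, shuffle=True, tag_dropout=0.0, rng=None):
    """Rough illustration of shuffle captions + keep tokens + per-tag dropout.

    The first `keep_tokens` comma-separated tags never move and are never dropped
    (that is where your trigger goes). The remaining tags are shuffled if `shuffle`
    is set, and each is dropped with probability `tag_dropout`.
    """
    rng = rng or random.Random()
    tags = [t.strip() for t in caption.split(",") if t.strip()]
    fixed, rest = tags[:keep_tokens], tags[keep_tokens:]
    if shuffle:
        rng.shuffle(rest)
    if tag_dropout > 0:
        rest = [t for t in rest if rng.random() >= tag_dropout]
    return ", ".join(fixed + rest)


if __name__ == "__main__":
    rng = random.Random(0)
    for epoch in range(3):
        print(epoch, augment_caption("character1, 1girl, red_blouse, black_skirt, smile",
                                     keep_tokens=1, tag_dropout=0.1, rng=rng))
```

Because every epoch sees the later tags in a different order, no single tag permanently sits right after the trigger, while the trigger always comes first.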

Some people seem to be under the impression that what you caption is not trained. That is completely false; it is just that adding a "red glove" to the concept of a "red glove" nets you a "red glove". Keeping something from being trained is what regularization images are for. Take the "red glove" from before: the model says "I know what a red glove is!", the training images say "I want to change what a red glove is!" and the regularization images say "I agree with the model!" As a result, the sliders representing the red glove don't change very much.

Anyway! Now that you have a basic mental image, just think of captioning as a Venn diagram. You must add, remove and tweak the tags so the sliders obtained from training go where you want them. You can do all kinds of shenanigans, like duplicating datasets and tagging them differently so SD understands you better. Say I have an image of a girl with a red blouse and a black skirt, and I want to train her and her outfit. But! I only have one image, and when I tag it as character1, outfit1, both triggers do exactly the same thing! Of course they do; SD has no idea what you are talking about, other than some interference from the model via word similarity.

What to do? Easy! Duplicate your image and tag both copies with character1, then tag one with outfit1 and the other with the individual parts of the outfit. That way SD should understand that the part that is not the outfit is your character, and that the outfit equals the individual parts. Just like a Venn diagram!
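The duplicate-and-retag trick can be scripted with a minimal Python sketch using only the standard library. duplicate_with_captions is a hypothetical helper, and the name_1.png/name_1.txt naming is an assumption; rename to your own convention.

```python
import shutil
from pathlib import Path


def duplicate_with_captions(image, captions, out_dir):
    """Copy one image once per caption and write a matching .txt next to each copy.

    image:    path to the source image (e.g. girl.png)
    captions: list of caption strings, one per copy
    out_dir:  dataset folder to write the image/caption pairs into
    Returns the list of written image paths.
    """
    image, out_dir = Path(image), Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for i, caption in enumerate(captions, start=1):
        target = out_dir / f"{image.stem}_{i}{image.suffix}"
        shutil.copyfile(image, target)
        target.with_suffix(".txt").write_text(caption, encoding="utf-8")
        written.append(target)
    return written


# Example:
# duplicate_with_captions("girl.png", ["character1, outfit1",
#                                      "character1, red_blouse, black_skirt"], "dataset")
```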

Of course, this starts getting complex the bigger the dataset and the more stuff you wish to train, but after some practice you should get the hang of it, so carry on and continue baking. If you screw up, your LORA will tell you!

On the Art-ness of AI art (musing)

If I were to agree with something said by some of the anti-AI, frothing-at-the-mouth crowd, it would be that AI image creation is unlike drawing or painting. If anything, it is much more like cooking.

There are a lot of parallels: from how a cook has to taste what they are making, to how they have to tweak the recipe on the fly depending on the quality of the available ingredients, to how it can be as technical as any science, measuring quantities to the microgram. Some people stick to a recipe, others add seasonings on the fly. Some try to make the final result as close as possible to an ideal, some try to make something new and some just try to get an edible meal. Even the coldest, most methodical way of making a model, using automated scraping, says something about the creator, even if they never took a peek at the dataset (those models mostly look average).

I must admit to some pride, misplaced or not, as I select each image in my dataset to try to get to an ideal. Maybe it won't work out the first time, but with poking and prodding you can get ever closer to your goal. Even if your goal is getting pretty pictures of busty women!

So take pride in your creations, even the failures! If you are grinding at your dataset and settings to reach your ideal, is that not art?

===============================================================

Preparations

First you need some indispensable things:

An NVIDIA video card with at least 6 GB, but realistically 8 GB, of VRAM. Solutions for AMD (ATI) cards exist but are not mature yet.

Enough disk space to hold the installations.

A working installation of Automatic1111 https://github.com/AUTOMATIC1111/stable-diffusion-webui or another UI.

Some models (for anime, the NAI family is recommended: NAI, AnythingV3.0, AnythingV5.0, AnythingV4.5). I normally use AnythingV4.5.

https://huggingface.co/andite/anything-v4.0/blob/main/anything-v4.5-pruned.safetensors (it seems to have been taken down). It can be found here: AnythingV4.5 (no relationship with Anything V3 or V5). Use the pruned safetensors version with the SHA256 checksum: 6E430EB51421CE5BF18F04E2DBE90B2CAD437311948BE4EF8C33658A73C86B2AA

A tagger/caption editor like stable-diffusion-webui-dataset-tag-editor.

An upscaler for the inevitable image that is too small yet too precious to leave out of the dataset. I recommend RealESRGAN_x4plus for photorealism, RealESRGAN_x4plusAnime for anime and 2x_MangaScaleV3 for manga.

A collection of images of your character. More is always better, and the more varied the poses and zoom levels, the better. If you are training outfits, you'll get better results if you have some back and side shots, even if the character's face is not clearly visible. In the worst case, some pics of only the outfit might do; just remember not to tag your character in those images if she is not visible.

Kohya's scripts. A good version for Windows can be found at https://github.com/derrian-distro/LoRA_Easy_Training_Scripts. The install method has changed: now you must clone the repository and run the install.bat file. I still prefer this distribution to the original command-line one or the full web UI ones, as it is a fine mix of lightweight and easy to use.
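To check a downloaded model against a published SHA256 checksum, here is a short Python sketch using the standard hashlib module (PowerShell's Get-FileHash does the same job). A SHA-256 digest is always 64 hexadecimal characters, so a value of any other length was mangled somewhere when copied. sha256_of and matches are hypothetical helper names.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in 1 MiB chunks so multi-GB checkpoints don't fill RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches(path, expected):
    """Compare against a published checksum, ignoring case and surrounding whitespace."""
    return sha256_of(path) == expected.strip().lower()


# Example: print(matches("anything-v4.5-pruned.safetensors", "<checksum from the model page>"))
```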

===============================================================

Dataset gathering

That's enough for a start. Next begins the tedious part: dataset gathering and cleanup.

First of all, gather a dataset. You may borrow, steal or beg for images. More than likely you'll have to scrape a booru, either manually or using a script. For rarer things, you might end up re-watching that old anime you loved as a kid and going frame by frame taking screencaps. MPC-HC has a handy feature to save screencaps to PNG with right click->File->Save Image, and you can move forwards and backwards one frame with Ctrl+Left/Right arrow. For anime, this guide lists a good number of places to dig for images: Useful online tools for Datasets, and where to find data.

An OK scraping program is Grabber. I don't like it that much, but we must make do with what we have. Sadly, it doesn't like Sankaku Complex, and they sometimes have images not found elsewhere.

First, set your save folder and filename convention and click save. In my case I used md5checksum.ext, with ext being whatever original extension the image had, like this: %md5%.%ext%

Then go to Tools->Options->Save->Separate log files->Add a separate log file.

In that window, set the name to %md5%, the folder to the same folder you used in the previous step, the filename to %md5%.txt so it matches your image files, and finally set the text file content to:

%all:includenamespace,excludenamespace=general,unsafe,separator=|%

Now when you download an image, it will also download the booru tags. You will need to process the files, replacing all " " (spaces) with "_" (underscores) and all "|" (the pipe symbol) with "," (commas). You might also want to prune most tags containing ":" preceded by a prefix (the namespaced ones). Other than that, you get human-generated tags, albeit of dubious quality.
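The post-processing of the Grabber tag files can be sketched in Python. The assumption, based on how I read the includenamespace option, is that namespaced tags come out as namespace:tag (e.g. character:ranma saotome) while general tags have no prefix; emoticon tags such as :d have no prefix and are kept. grabber_to_caption and convert_folder are hypothetical names, and joining with ", " is a preference you can change through sep.

```python
import re
from pathlib import Path

# Assumption: namespaced tags look like "character:ranma saotome"; ":d" has no prefix.
NAMESPACED = re.compile(r"^([a-z_]+):(.+)$")


def grabber_to_caption(raw, keep_namespaces=(), sep=", "):
    """Turn "1girl|red hair|artist:someone" into "1girl, red_hair"."""
    tags = []
    for tag in raw.split("|"):
        tag = tag.strip().replace(" ", "_")
        if not tag:
            continue
        match = NAMESPACED.match(tag)
        if match:
            if match.group(1) not in keep_namespaces:
                continue  # prune artist:, copyright:, meta: ... unless asked to keep them
            tag = match.group(2)  # keep the tag itself, without its prefix
        tags.append(tag)
    return sep.join(tags)


def convert_folder(folder, **kwargs):
    """Rewrite every .txt in place; keep a backup of the raw files first."""
    for txt in Path(folder).glob("*.txt"):
        raw = txt.read_text(encoding="utf-8")
        txt.write_text(grabber_to_caption(raw, **kwargs), encoding="utf-8")


# Example: convert_folder(r"D:\dataset", keep_namespaces={"character"})
```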

For danbooru, it doesn't work out of the box, to make it work go to Sources->Danbooru->options->headers there type "User-Agent" and "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:126.0) Gecko/20100101 Firefox/126.0" the click confirm, that should allow you to see danbooru images.

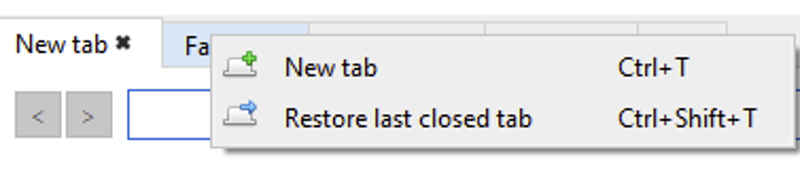

To search for images simply right click the header and select new tab then do as if you were making a caption but without commas absurdres highres characterA

After you have got you images you can either select them and click save one by one or click get all and go to the downloads tab.

On the download page simply do a ctl+a to select all, give a right click and select download. It will start to download whatever you added beware as it might be a lot so check the sources you want carefully. If a source fails simply manually skip it and select the next one do right click and download and so on.

After gathering your dataset, it is good to remove duplicate images. An OK program for this is dupeGuru (https://dupeguru.voltaicideas.net/). It won't catch everything, and it is liable to flag image variations in which only the facial expression or a single clothing item changes.

To use it, first click the + sign and select your image folder.

Click Pictures mode.

Click Scan.

Afterwards, it will give you a results page where you can see the matches and their percentage of similarity.

From there you can enable the Dupes Only filter, use Mark All from the Mark menu and then right-click to decide whether you want to delete them or move them elsewhere.

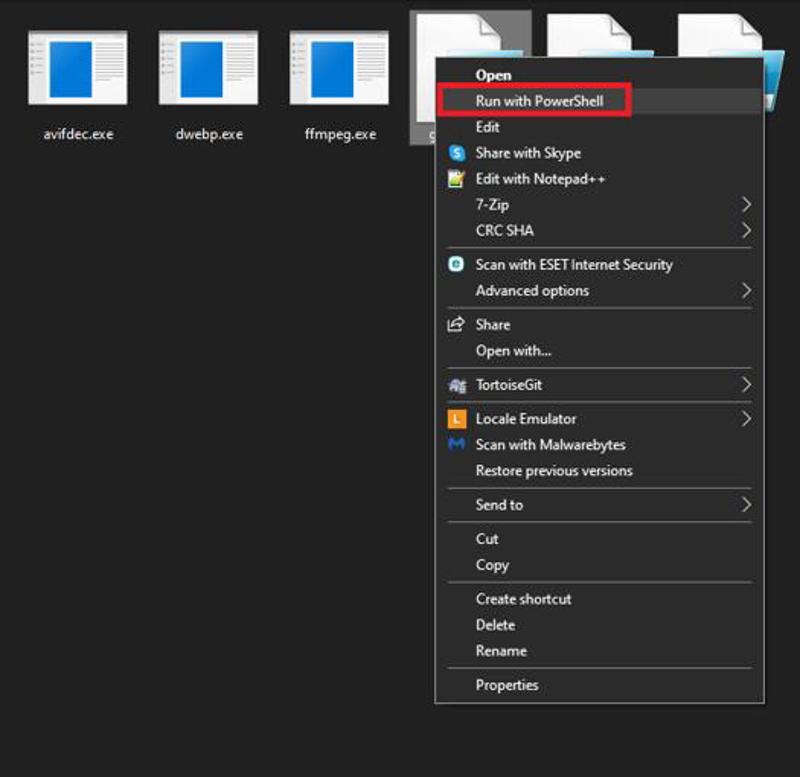

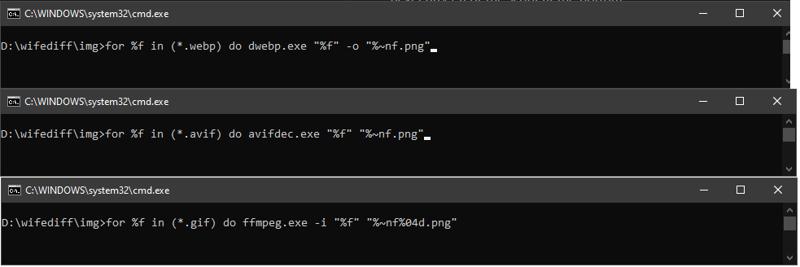
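Once images have captions, another cheap check is comparing tag sets. The Python sketch below is in the spirit of a tag-based duplicate finder, but it is not the author's PowerShell script: it scores every pair of caption files by Jaccard similarity (shared tags divided by all tags) and lists pairs above a threshold. Like dupeGuru, it will also flag variations where only the expression changes, so review the pairs by eye. The 0.9 threshold is an arbitrary starting point.

```python
from itertools import combinations
from pathlib import Path


def load_tags(txt_path):
    """Read a comma-separated caption file into a set of tags."""
    text = Path(txt_path).read_text(encoding="utf-8")
    return frozenset(t.strip() for t in text.split(",") if t.strip())


def jaccard(a, b):
    """Shared tags divided by all tags (1.0 = identical tag sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def likely_duplicates(captions, threshold=0.9):
    """captions: dict of name -> tag set. Returns (name1, name2, score), best first."""
    pairs = []
    for (n1, t1), (n2, t2) in combinations(sorted(captions.items()), 2):
        score = jaccard(t1, t2)
        if score >= threshold:
            pairs.append((n1, n2, score))
    return sorted(pairs, key=lambda p: -p[2])


def scan_folder(folder, threshold=0.9):
    """Compare every caption .txt in a folder against every other one."""
    captions = {p.stem: load_tags(p) for p in Path(folder).glob("*.txt")}
    return likely_duplicates(captions, threshold)


# Example: for a, b, score in scan_folder(r"D:\dataset"): print(f"{score:.2f} {a} {b}")
```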

Convert all your images to a useful format, preferably PNG, though JPG might suffice. You can use the PowerShell scripts I uploaded to Civitai, or do it yourself using the manual steps below or the script at the bottom.

Manual PNG conversion:

For GIFs I use a crappy open source splitter program or ffmpeg. Simply open a cmd window in the images folder and type the following (these loops are meant to be typed directly at the prompt; inside a .bat file every % has to be doubled, e.g. %%f and %%~nf):

for %f in (*.gif) do ffmpeg.exe -i "%f" "%~nf%04d.png"

For WebP I use dwebp straight from Google's libraries. Dump dwebp from the downloaded zip into your images folder, open cmd in there and run:

for %f in (*.webp) do dwebp.exe "%f" -o "%~nf.png"

For AVIF files, get the latest build of libavif (check the last successful build and get the avifdec.exe file from the artifacts tab), then dump it in the folder and run it the same way as for WebP:

for %f in (*.avif) do avifdec.exe "%f" "%~nf.png"
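If you prefer one script for all three formats, the Python sketch below builds the same ffmpeg, dwebp and avifdec command lines and runs them with subprocess. It assumes the three tools are on PATH (or in the working folder); conversion_command and convert_folder are hypothetical names. Since the arguments go straight to the programs, no cmd quoting or % escaping is involved.

```python
import subprocess
from pathlib import Path


def conversion_command(src):
    """Argument list for converting one file to PNG, or None if no conversion is needed."""
    src = Path(src)
    ext = src.suffix.lower()
    if ext == ".gif":
        # one PNG per frame: name0001.png, name0002.png, ...
        return ["ffmpeg", "-i", str(src), str(src.with_name(src.stem + "%04d.png"))]
    if ext == ".webp":
        return ["dwebp", str(src), "-o", str(src.with_suffix(".png"))]
    if ext == ".avif":
        return ["avifdec", str(src), str(src.with_suffix(".png"))]
    return None


def convert_folder(folder, dry_run=True):
    """Print (and, with dry_run=False, run) the conversion for every file in a folder."""
    for src in sorted(Path(folder).iterdir()):
        cmd = conversion_command(src)
        if cmd is None:
            continue
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


# Example: convert_folder(r"D:\dataset\raw", dry_run=False)
```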

Dataset Images sources

Not all images are born equal! Other than resolution and blurriness, the source actually affects the end result.

So there are three approaches to which type of images we should prioritize, depending on the type of LORA we are making:

Non-photorealistic 2D characters. For those you should try to collect images in the following order:

High Resolution Colored Fanart/official art: Color fanart is normally higher quality than most stuff. This is simply A grade stuff.

High Resolution monochrome Fanart/official art: This includes doujins, lineart and original manga. While overloading the model with monochrome images might make it more prone to producing monochrome, SD is superb at learning from monochrome and lineart, giving you more detail, less blurriness and in general superior results.

Settei/Concept art: These are incredible, as they often offer rotations and variations of faces and outfits. Search for them on Google as "settei" and you should often find a couple of repositories. They often require some cleaning and/or upscaling, as they usually have annotations for colors and body part shapes.

Low Resolution monochrome Fanart/official art: monochrome art can be more easily upscaled and loses less detail when you do so.

High resolution screencaps: Screen captures are the bottom of the barrel of the good sources. Leaving aside budget constraints (animators sometimes skimp on intermediate frames), the homogeneity of the images can be somewhat destructive, which is strange when compared with manga, which seems to elevate the results instead. Beware: 80s and 90s anime seems to train much faster, so either pad your dataset with fanart or lower steps to roughly 1/3 of the normal value. This also applies to low-res screencaps.

3D art: Beware if you are trying to do a pure 2D model. Adding any 3D art will pull it towards 2.5 or stylized 3D.

Low Resolution Colored Fanart/official art: This stuff will almost always need some cleaning, filtering and maybe upscaling. In the worst cases it will need img2img to fix.

AI generated images: These can go either up or down in this list depending on their quality. Remember to go through them with a fine-toothed comb for hard-to-see deformities and artifacts. AI images are not bad, but they can hide strange things that a human artist wouldn't do, like a sneaky extra hand while distracting you with pretty "eyes". For more complex artifacting and "textures", there are countless ESRGAN filters in https://openmodeldb.info/ that might help. Some I have used are: 1x_JPEGDestroyerV2_96000G, 1x_NoiseToner-Poisson-Detailed_108000_G, 1x_GainRESV3_Aggro and 1x_DitherDeleterV3-Smooth-[32]_115000_G.

Low resolution screencaps: We are approaching the "Oh God why?" territory. These will almost always need some extra preprocessing to be usable and in general will be a drag on the model quality. Don't be ashamed of using them! As the song goes "We do what we must because we can!" A reality of training LORAs is that most of the time you will be dealing with limited datasets in some way or another.

Anime figure photos: These will pull the model towards 2.5D or 3D. They are not bad per se, but beware of that if it is not your objective.

Online cosplay store images: Oh boy, now it starts going downhill. If you need to do an outfit you might end up digging here, and the sample images most often look horrid. Remember to clean them up, filter them and upscale them. Oh, and they always have watermarks, so clean those up too. Make sure to tag them as mannequin and no_humans and remove 1girl or 1boy.

Cosplay images: Almost hitting the very bottom of the barrel. I don't recommend using real people for non-photorealistic stuff. If it is for an outfit, I would recommend lopping off the head and tagging the image as head_out_of_frame.

Superdeformed/chibi: These ones are a coin toss if they will help or screw things. I recommend against them unless used merely for outfits and if you do use them make sure to tag them appropriately.

Non-photorealistic 3D characters. For those you should do the exact same thing as for 2D, but using mostly 3D stuff, as any 2D stuff will pull the model towards a 2.5D style.

Photorealistic: Don't worry, just get the highest resolution images you can that aren't blurry and don't have weird artifacts.

===============================================================

Dataset Regularization

The next fun step is image regularization. Thankfully, bucketing allows LORA training scripts to autosort images into size buckets and automatically downscale them, then uses matrix magic to ignore their shape. But! Don't think yourself free of work. Images still need to be filtered for problematic anatomy, clutter, blurriness, annoying text, watermarks, etc. They need to be cropped to remove empty space and trash, cleaned to remove watermarks and other annoyances, and upscaled if they are smaller than the training resolution. Finally, they need to be sorted by concept/outfit/whatever so you can start actually planning your LORA. So, as mentioned before, the main tasks of this section are filtering, resizing, processing and sorting. I also added mask making to this section; masks are optional and are used for masked loss training or future transparency training.

Filtering

Unless you are making a huge LORA that accounts for style, remove from your dataset any images that might clash with the others, for example chibi or superdeformed versions of the characters. This can be accounted for by specific tagging, but that can hugely inflate the time required to prepare the LORA.

Exclude any images that have too many elements or are cluttered, for example group photos or gangbang scenes where too many people appear.

Exclude images with watermarks or text in awkward places where they can't be photoshopped out or cleaned via Lama or inpainting.

Exclude images with deformed hands, limbs or poses that make no sense at first glance.

Exclude images in which the faces are too blurry, they might be useful for outfits if you crop the head though.

If you are making an anime style LORA, doujins, manga and lineart are great training sources, as SD seems to pick up their characteristics very easily and clearly. You will need to balance them with color images though, or it will always try to generate in monochrome. (Up to 50% shouldn't cause any issues; I have gotten reliable results training with 80%+ black and white images by putting monochrome and grayscale in the negative prompt.)
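To know where your dataset stands on that color/monochrome balance, a Python sketch can flag images whose pixels are all gray, including "pseudo grayscale" images saved as RGB. The pure helpers need nothing extra; the folder scan assumes Pillow is installed (pip install pillow). The tolerance of 8 per channel is an assumption meant to absorb JPEG noise; tune it.

```python
def is_grayscale(pixels, tolerance=8):
    """True if every (r, g, b) pixel has its channels within `tolerance` of each other.

    RGB files that only contain grays ("pseudo grayscale") count as grayscale too.
    """
    return all(max(p[:3]) - min(p[:3]) <= tolerance for p in pixels)


def monochrome_ratio(flags):
    """Fraction of True values in an iterable of is_grayscale results."""
    flags = list(flags)
    return sum(flags) / len(flags) if flags else 0.0


def scan_folder(folder, tolerance=8):
    """Needs Pillow; imported here so the helpers above work without it."""
    from pathlib import Path
    from PIL import Image

    results = {}
    for path in sorted(Path(folder).glob("*.png")):
        with Image.open(path) as im:
            small = im.convert("RGB")
            small.thumbnail((256, 256))  # a reduced copy is plenty for this check
            results[path.name] = is_grayscale(small.getdata(), tolerance)
    return results


# Example:
# flags = scan_folder(r"D:\dataset")
# print(f"{monochrome_ratio(flags.values()):.0%} monochrome")
```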

Resizing

Cropping: If you are using bucketing, cropping other than to remove padding is not necessary! Nonetheless, remember to crop your images to remove any kind of empty space, as every pixel matters. Also remember that with bucketing, your image will be downsized until its width × height ≤ the training resolution squared: 512x512 (262,144) pixels for SD 1.5 or 1024x1024 (1,048,576) for SDXL/Pony. I have created a PowerShell script that does a downscale to the expected bucket size. Use it to check whether any of your images needs to be cropped or removed from the dataset because it loses too much detail during the training script's bucketing downscale.
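The size arithmetic can be sketched in Python. This is a simplified estimate, not kohya's code and not the author's PowerShell script: it shrinks an image (keeping its aspect ratio) until its area fits in resolution², then rounds each side down to a multiple of 64, assuming 64-pixel bucket steps (a common default). kohya's real logic picks from predefined buckets and scales/crops slightly differently, so treat the numbers as estimates.

```python
import math


def bucket_estimate(width, height, resolution=512, step=64):
    """Simplified estimate of what aspect-ratio bucketing does to one image.

    1. If width * height exceeds resolution**2, shrink the image (keeping its aspect
       ratio) until the area fits.
    2. Round each side down to a multiple of `step` to get the bucket size.
    Returns (resized_size, bucket_size, scale); scale < 1 means detail is thrown away.
    """
    max_area = resolution * resolution
    scale = min(1.0, math.sqrt(max_area / (width * height)))
    resized = (int(width * scale), int(height * scale))
    bucket = (resized[0] - resized[0] % step, resized[1] - resized[1] % step)
    return resized, bucket, scale


if __name__ == "__main__":
    for size in [(2048, 2048), (1200, 3000), (400, 300)]:
        resized, bucket, scale = bucket_estimate(*size)
        print(size, "->", resized, "bucket", bucket, f"keeps {scale:.0%} of each side")
```

A 2048x2048 full-body shot keeps only a quarter of its width and height at 512, which is exactly the detail loss to watch for; use resolution=1024 for SDXL/Pony.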

For manual cropping, this is what you are looking for: simply use your preferred image editor and crop as tightly as possible around the subject you wish to train.

For images padded with single-color backgrounds, I have had good success using ImageMagick. It checks the color of the corners and, applying some level of tolerance, eats rows or columns of that color until they fail the check. I have added some images of the before and after of running the command. Just install it, pick a tolerance value (named fuzz; in my case I chose 20%), go to the folder where your images are, open a cmd window and type the command below. The +repage resets the leftover canvas offset that -trim would otherwise store in the cropped PNG.

for %f in (*.png) do magick "%f" -fuzz 20% -trim +repage "Cropped_%~nf.png"
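What the trim does can be sketched in Python: find the bounding box of everything that is not "border colored". This is an approximation for illustration: it takes the border color from the top-left corner only and uses a max-channel difference, whereas ImageMagick measures fuzz as a color distance. trim_box and trim_file are hypothetical names; the file helper assumes Pillow and is slow on big images because it loops in pure Python.

```python
def trim_box(pixels, fuzz=0.20):
    """Bounding box (left, top, right, bottom) of the content inside a uniform border.

    pixels: list of rows, each a list of (r, g, b) tuples.
    The border colour is taken from the top-left corner only (a simplification), and a
    pixel counts as border when every channel is within fuzz * 255 of that colour.
    right/bottom are exclusive, as Pillow's Image.crop expects. Returns None if the
    whole image is border.
    """
    ref = pixels[0][0]
    limit = fuzz * 255

    def is_border(p):
        return all(abs(a - b) <= limit for a, b in zip(p, ref))

    rows = [y for y, row in enumerate(pixels) if not all(is_border(p) for p in row)]
    cols = [x for x in range(len(pixels[0]))
            if not all(is_border(row[x]) for row in pixels)]
    if not rows or not cols:
        return None
    return cols[0], rows[0], cols[-1] + 1, rows[-1] + 1


def trim_file(src, dst, fuzz=0.20):
    """Needs Pillow; crops src to its content box and saves it as dst."""
    from PIL import Image

    with Image.open(src) as im:
        im = im.convert("RGB")
        w, h = im.size
        data = list(im.getdata())
        pixels = [data[y * w:(y + 1) * w] for y in range(h)]
        box = trim_box(pixels, fuzz)
        (im.crop(box) if box else im).save(dst)
```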

Downscaling is mostly superfluous in the age of bucketing, but! Remember the images are downscaled anyway! So be mindful of the loss of detail, especially in full-body high-res images:

If you opt for downscaling, one technique is to pass images through a script that simply resizes them, either by cropping or by resizing and filling the empty space with white. If you have more than enough images (probably more than 250), this is the way to go and not an issue. Simply review the images and dump any that didn't make the cut.

As I mentioned, I have created a PowerShell script that does a downscale to the expected bucket size. It will not change the shape of the image; it only downscales it until it fits in a valid bucket. This is especially useful to check whether an image lost too much detail, as the training scripts do the exact same process. Another use is to decrease processing time. Training scripts first downscale to the bucket size and then further downscale into latents. The first downscale consumes time and resources, especially on training sites, Colab and rented GPUs, so doing the downscale to bucket locally may save you a couple of bucks.

If training solely on square images, this can be accomplished in A1111 in the Extras>autosized and autofocal crop options.

If, on the other hand, you are on a limited image budget, doing some downscaling/cropping can be beneficial, as you can get subimages at different distances from one big high-resolution full-body image. I would recommend doing this manually. Windows Paint 3D is adequate, if not a good option: just go to the Canvas tab and move the limits of your image. For example, suppose you have a high-res full-body image of a character. You can use the original as a 1st image (full_body). Cut at the waist for a 2nd image (upper_body). Then cut at the chest for a 3rd image with only a mugshot (portrait). Optionally, you can also do a 4th crop cut at the thighs for a cowboy shot. Very, very optionally, you can do a crop of only the face (close-up). All 3 (or 5) will be treated as different images, as they are downscaled differently by the bucketing algorithm. I don't recommend always doing this, only for very limited datasets.
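If you already know where you want to cut, a Python sketch can turn the cut heights into crop boxes and save one file per framing. The cut positions are your own choice made by eye (nothing here detects body parts), each crop keeps the top of the image and its full width, and a face-only close-up is not covered. subimage_crops and save_crops are hypothetical names; saving assumes Pillow.

```python
def subimage_crops(width, height, cuts):
    """Crop boxes (left, top, right, bottom) for extra framings of one full-body image.

    cuts maps a framing tag to the y coordinate, in pixels from the top, where you
    decided to cut (waist for upper_body, chest for portrait, thighs for cowboy_shot).
    The untouched original is always returned first as full_body.
    """
    crops = [("full_body", (0, 0, width, height))]
    for tag, bottom in sorted(cuts.items(), key=lambda kv: -kv[1]):
        if not 0 < bottom < height:
            raise ValueError(f"cut for {tag} must be inside the image, got {bottom}")
        crops.append((tag, (0, 0, width, bottom)))
    return crops


def save_crops(src, cuts, out_dir):
    """Needs Pillow; writes name_full_body.png, name_cowboy_shot.png, ..."""
    from pathlib import Path
    from PIL import Image

    src, out_dir = Path(src), Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    with Image.open(src) as im:
        for tag, box in subimage_crops(im.width, im.height, cuts):
            im.crop(box).save(out_dir / f"{src.stem}_{tag}.png")


# Example (cut heights are made up for a 1000x3000 image):
# save_crops("character_full.png", {"upper_body": 1500, "portrait": 900, "cowboy_shot": 2000}, "dataset")
```

Remember to caption each crop with its framing tag (full_body, upper_body, portrait, cowboy_shot).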

If using bucketing:

If using resize to square(mostly obsolete):

To simplify things a bit, I have created a script (it's also included as text at the bottom of the guide) that makes images square by padding them. It is useful to preprocess crappy (sub-512x512 resolution) images before feeding them to an upscaler (for those that require a fixed aspect ratio). Just copy the code into a text file, rename it to something.ps1, then right-click it and click Run with PowerShell. This is mostly outdated; it was useful before kohya implemented bucketing for embeddings.
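For reference, here is a Python sketch of square padding. It is not the author's PowerShell script: it centers the image on a square white canvas as large as its longest side. square_padding and pad_to_square are hypothetical names; the file helper assumes Pillow.

```python
def square_padding(width, height):
    """Side of the square canvas and the (x, y) offset that centres the image on it."""
    side = max(width, height)
    return side, ((side - width) // 2, (side - height) // 2)


def pad_to_square(src, dst, fill=(255, 255, 255)):
    """Needs Pillow; pads with white (or any RGB `fill`) and saves the result."""
    from PIL import Image

    with Image.open(src) as im:
        im = im.convert("RGB")
        side, offset = square_padding(*im.size)
        canvas = Image.new("RGB", (side, side), fill)
        canvas.paste(im, offset)
        canvas.save(dst)


# Example: pad_to_square("tiny.png", "tiny_square.png")
```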

Upscaling: Not all images are born high-res, so this is important enough to have its own section. Upscaling should probably be the last process you run on your images, so that section is further below.

Image processing and cleaning

Ok, so you have some clean-ish images, or not-so-clean ones that you can't bring yourself to scrap. The next "fun" part is manual cleaning. Scrub through image by image, trying to crop, paint over or delete any extra elements. The objective is that only the target character remains in your image (if your character is interacting with another, for example having sex, it is best to crop the other character mostly out of the image). Try to delete or fix watermarks, speech bubbles and SFX text. Resize images, passing low-res images through an upscaler (see the upscaling section) or img2img to upscale them. I have noticed that blurring other characters' faces, in the style of the faceless_male or faceless_female tags, works wonders to reduce contamination. Random anecdote: in my Ranma-chan V1 LORA, if you invoke 1boy you will basically get a perfect male Ranma with reddish hair, as all males in the dataset are faceless.

That is just an example of faceless. Realistically, if you were trying to train Misato, what you want is this (though I don't really like that image too much and I wouldn't add it to my dataset, but for demonstration purposes it works):

Img2Img: Don't be afraid to pass an extremely crappy image through img2img to try to make it less blurry or crappy. Synthetic datasets are a thing, so don't feel any shame in using a partially or fully synthetic one; that's the way some original character LORAs are made. Keep this in mind: if you already made a LORA and it ended up crappy... use it! Using a character's LORA to fix poor images of that character is a thing, and it gives great results. Just make sure the resulting image is what you want, and remember any defects will be trained into your next LORA (that means hands, so use images where they are hidden or you'll have to fix them manually). When you are doing this, make sure you interrogate the image with a good tagger; DeepDanbooru is awful when images are blurry (it always detects them as mosaic censorship and penises). Try to add any missing tags by hand, and remember to add blurry and whatever else you don't want to the negatives. I would recommend keeping denoise low (0.1~0.3) and iterating on the image until you are comfortable with it. The objective is for it to be clear, not for it to become pretty.
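The low-denoise, iterate-until-clear loop can be automated through the A1111 web API. This Python sketch assumes the web UI was started with --api and listens on the default 127.0.0.1:7860; it posts to /sdapi/v1/img2img and saves every pass so you can stop at the one that looks clear. build_payload, img2img and iterate are hypothetical helper names, and the LORA name in the example is made up.

```python
import base64
import json
import urllib.request
from pathlib import Path

# Assumption: the web UI was started with --api and listens on the default address.
API_URL = "http://127.0.0.1:7860/sdapi/v1/img2img"


def build_payload(image_bytes, prompt, negative_prompt="blurry, lowres",
                  denoise=0.2, width=512, height=512, steps=30):
    """JSON body for one img2img pass; keep denoise in the 0.1 to 0.3 range."""
    return {
        "init_images": [base64.b64encode(image_bytes).decode("ascii")],
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "denoising_strength": denoise,
        "width": width,
        "height": height,
        "steps": steps,
    }


def img2img(payload, url=API_URL):
    """POST the payload and return the first generated image as bytes."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        result = json.load(response)
    return base64.b64decode(result["images"][0])


def iterate(path, prompt, rounds=3, **kwargs):
    """Feed each output back in, saving every pass as name_pass1.png, name_pass2.png, ..."""
    src = Path(path)
    data = src.read_bytes()
    for i in range(1, rounds + 1):
        data = img2img(build_payload(data, prompt, **kwargs))
        src.with_name(f"{src.stem}_pass{i}.png").write_bytes(data)
    return data


# Example (hypothetical LORA name and size):
# iterate("blurry_01.png", "character1, 1girl, <lora:character1_v1:0.8>", width=512, height=768)
```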

To remove backgrounds you can use stable-diffusion-webui-rembg: install it from the Extensions tab and it will appear at the bottom of the Extras tab. I don't like it; I haven't had a single good result with it. Instead I recommend transparent-background, which is a lot less user friendly but seems to give me better results. I recommend reusing your A1111 installation, as it already has all the requirements. Just open PowerShell or cmd in stable-diffusion-webui\venv\Scripts and run either Activate.ps1 or activate.bat, depending on whether you used PowerShell or cmd. Then install it using:

pip install transparent-background

After it is installed, run it by typing the following (leave no trailing backslash before the closing quote of a path, or Windows passes the quote through as part of the path):

transparent-background --source "D:\inputDir\sourceimage.png" --dest "D:\outputDir" --type white

This particular command fills the background with white. You can also use an RGB value such as [255, 0, 0] and a couple of extra options; just check the wiki part of the GitHub page: https://github.com/plemeri/transparent-background

Image cleaning 2: Lama & the masochistic art of cleaning manga, fanart and doujins

As I mentioned before, manga, doujins and lineart are just the superior training media; it seems the lack of those pesky colors makes SD gush with delight. SD just seems to have a perverse proclivity for working better on stuff that is hard to obtain or clean.

As always, there are three elephants in the room: SFX, speech bubbles and watermarks. Unless you are reaaaaaaaallly bored, you will need something to semi-automate the task, lest you find yourself copying and pasting screentones to cover up a deleted SFX (been there, done that).

The solution? Lama Cleaner: https://huggingface.co/spaces/Sanster/Lama-Cleaner-lama. It is crappy and slow but serviceable. I will add the steps to reuse your A1111 environment to install it locally, because it is faster and it is less likely that someone is stealing your perverse doujins.

Do remember that for the A1111 version, they recommend a denoise strength of .4. Honestly, to me the standalone local version is faster, gives better results and is a bit friendlier.

A1111 extension install:

Go to the Extensions tab.

Install ControlNet if you haven't already.

Either load the list of available extensions (Available tab) and filter for lama, or go to Install from URL and paste https://github.com/light-and-ray/sd-webui-lama-cleaner-masked-content.git

Install it and restart your UI.

Go to inpaint in img2img, select lama cleaner and Only masked, use the cursor to mask what you want gone and click Generate. They seem to recommend a denoise of .4

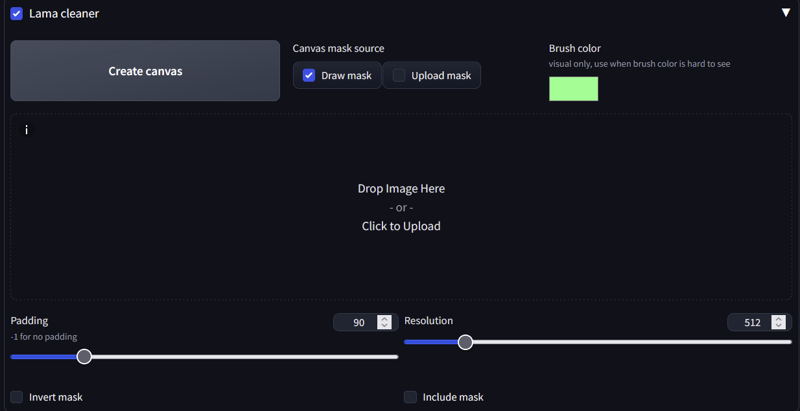

It seems Lama Cleaner is now also available from the Extras tab, with fewer parameters. Make sure to add the image above and then select Create canvas so the image is copied for you to paint the mask.

Local "stand alone" install:

First, activate your A1111 venv: Shift+right-click your stable-diffusion-webui\venv\Scripts folder, select "Open PowerShell window here" and type ".\activate".

Alternatively, create an empty venv by typing python -m venv c:\path\to\myenv in a command window and activate it with c:\path\to\myenv\Scripts\activate. Beware that installing into an empty venv will download torch and everything else.

Install Lama Cleaner by typing: pip install lama-cleaner

If you reused the A1111 venv, this will probably break your A1111 install, but worry not!

Type: pip install -U Werkzeug

Type: pip install -U transformers --pre

Type: pip install -U diffusers --pre

Type: pip install -U tokenizers --pre

Type: pip install -U flask --pre

Done! Everything should be back to normal. Ignore complaints about incompatibilities in lama-cleaner, as I have tested the latest versions of those dependencies and they work fine with each other.

Launch it using: lama-cleaner --model=lama --device=cuda --port=8080 (you can also use --device=cpu; it is slower, but not overly so).

Open it at http://127.0.0.1:8080 (or whatever port you used).

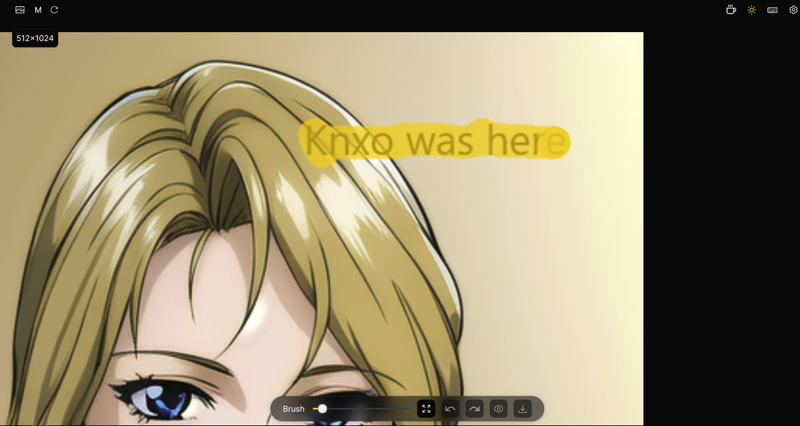

Just drag the cursor to paint a mask, the moment you release it, it will start processing.

Try to keep the mask as skintight as possible to what you want to delete unless it is surrounded by a homogeneous background.

Now the juicy part, recommendations:

Pre-crop your images to only display the expected part. Smaller images will decrease the processing time of lama and help you focus on what sfx, speech bubbles or watermarks you want deleted.

Beware of stuff at the edges of the masked area, as it will be used as part of the filling. So if you have a random black pixel, it may turn into a whole line. I would recommend denoising your image and maybe removing JPEG artifacts first, as that keeps the image from having too many random pixels that can become artifacts.

Forget about big speech bubbles: if you can't tell what should be behind them, Lama won't be able to either. Instead, turn speech bubbles into spoken_heart. SD seems to perfectly know what those are and will perfectly ignore them if they are correctly tagged during captioning. The shape doesn't matter; SD seems OK with classic, square, spiky or thought spoken_heart bubbles. The tag is "spoken_heart", in case you somehow missed it.

Arms, legs and hair are easier to recover, fingers, clothing patterns or complex shadows are not and you may need to either fix them yourself or crop them.

Lama is great at predicting lines: mask the empty space between two unconnected but seemingly continuous lines and it will fill in the rest. You can also use this to restore janky lines.

You can erase stuff near the area you are restoring so Lama won't follow the pattern of that section. For example you might need to delete the background near hair so Lama follows the pattern of the hair instead of the background.

Lama is poor at properly recreating screen tones, if it is close enough just forget about it. From my tests SD doesn't seem to pay too much attention to the pattern of the screentone as long as the average tone is correct.

Semitransparent overlays or big transparent watermarks require to be restored by small steps from the uncovered area and is all in all a pain. You may just want to manually fix them instead.

You poked around and you found a manga specific model in the options? Yeah that one seems to be slightly full of fail.

Transparency/Alpha and you

At this point you might say "Transparency? Great! Now I won't train that useless background!" Wrong! SD hates transparency. In the best case nothing will happen; in the worst cases either Kohya will simply ignore the images or you will get weird distortions in the backgrounds as SD tries to simulate the cut edges.

So what to do? ImageMagick once again comes to the rescue.

Make sure ImageMagick is installed.

Gather your images with transparency (or simply run this on all of them; it will just take longer).

Run the following command from a Command Prompt (cmd.exe, not PowerShell) opened in the image folder. If you put it in a .bat file instead, double the percent signs (%%f and %%~nf):

for %f in (*.png) do magick "%f" -background white -alpha remove -alpha off "RemovedAlpha_%~nf.png"
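If you are curious what "-alpha remove" does to each pixel, it composites the image over the background color. Below is a minimal stdlib-only sketch of that blend, assuming the pixels are already available as (R, G, B, A) tuples; the function names are hypothetical, and for real PNG files you would need an image library (such as Pillow) to read and write the pixels.

```python
def flatten_pixel(rgba, background=(255, 255, 255)):
    """Blend one (R, G, B, A) pixel over an opaque background and return (R, G, B)."""
    r, g, b, a = rgba
    alpha = a / 255.0
    # "over" compositing: result = alpha * foreground + (1 - alpha) * background
    return tuple(
        round(alpha * fg + (1.0 - alpha) * bg)
        for fg, bg in zip((r, g, b), background)
    )


def flatten_pixels(pixels, background=(255, 255, 255)):
    """Flatten a list of RGBA pixels onto the background, dropping the alpha channel."""
    return [flatten_pixel(p, background) for p in pixels]


if __name__ == "__main__":
    sample = [
        (0, 0, 0, 255),    # opaque black stays black
        (0, 0, 0, 0),      # fully transparent turns into the background (white)
        (200, 0, 0, 128),  # half-transparent red gets blended with white
    ]
    print(flatten_pixels(sample))
```

The white background matches the ImageMagick command above; a semi-transparent edge ends up as a lighter tint instead of a hard cut, which is exactly what keeps SD from learning weird edges.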

Resizing 2: Upscaling electric boogaloo

There are 3 common types of upscaling: animated, lineart and photorealistic. As I mostly do anime character LORAs, I have the most experience with the first two, as those cover anime, manga, doujins and fanart.

I also recommend passing your images through this script: it will sort your originals by bucket and create a preview downscaled to the expected bucket resolution. More importantly, it will tell you which images need to be upscaled to properly fill their bucket. I recommend doing this early in the process, as it sorts the images into subfolders by bucket.
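The script itself isn't reproduced here, but the core check can be sketched. The code below is a rough stdlib-only approximation of aspect-ratio bucketing as I understand Kohya's sd-scripts to do it at 512x512: bucket sides are multiples of 64 with an area of at most 512*512 (262,144 pixels), each image goes to the bucket with the closest aspect ratio, and it is scaled to cover that bucket before the excess is cropped. If that scale is above 1, the image needs upscaling. The function names and the 256/1024/64 defaults are assumptions; check them against your trainer's settings.

```python
def make_buckets(max_area=512 * 512, step=64, min_side=256, max_side=1024):
    """Enumerate (width, height) buckets whose area fits within max_area."""
    buckets = set()
    width = min_side
    while width <= max_side:
        # tallest height keeping width * height <= max_area, snapped down to the step
        height = min(max_side, (max_area // width) // step * step)
        if height >= min_side:
            buckets.add((width, height))
            buckets.add((height, width))
        width += step
    return sorted(buckets)


def choose_bucket(width, height, buckets):
    """Pick the bucket whose aspect ratio is closest to the image's."""
    ratio = width / height
    return min(buckets, key=lambda b: abs(b[0] / b[1] - ratio))


def cover_scale(width, height, bucket):
    """Scale factor needed so the image fully covers the bucket (excess gets cropped)."""
    bucket_w, bucket_h = bucket
    return max(bucket_w / width, bucket_h / height)


def report(images):
    """images: dict of filename -> (width, height). Prints bucket and upscale warnings."""
    buckets = make_buckets()
    for name, (w, h) in sorted(images.items()):
        bucket = choose_bucket(w, h, buckets)
        scale = cover_scale(w, h, bucket)
        flag = f"NEEDS UPSCALE x{scale:.2f}" if scale > 1 else "ok"
        print(f"{name}: {w}x{h} -> bucket {bucket[0]}x{bucket[1]} ({flag})")


if __name__ == "__main__":
    report({"screencap.png": (1920, 1080), "fanart.png": (600, 800), "old_scan.jpg": (300, 400)})
```

In this sketch a 300x400 scan lands in the 448x576 bucket and would need roughly a 1.5x upscale, while a 600x800 image in the same bucket is fine.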

Anime:

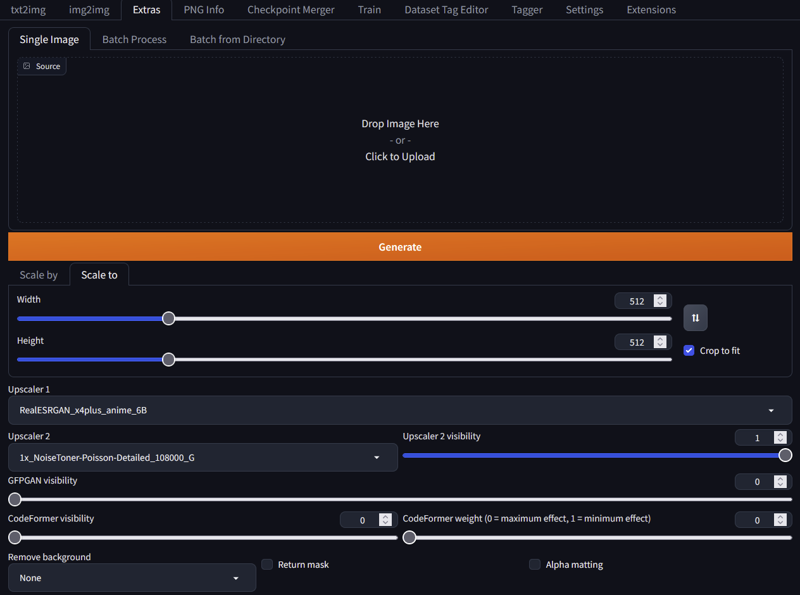

For low res upscaling, my current preferred anime scaler is https://github.com/xinntao/Real-ESRGAN/blob/master/docs/anime_model.md (RealESRGAN_x4plus_anime_6B). I have had good results taking low res shit up to 512. Just drop the model inside the A1111 models\ESRGAN folder and use it from the Extras tab. Alternatively, waifu2x-caffe (https://github.com/lltcggie/waifu2x-caffe/releases) is a good anime scaler that is a Windows-ready application. Just download the zip file and run waifu2x-caffe.exe. Then you can select multiple images and upscale them to 512x512. For low res screencaps or old images I recommend the "Photography, Anime" model. You can apply the denoise before or after, depending on how crappy your original image is.

Some extra scalers and filters can be found in the wiki: https://openmodeldb.info/ 1x_NoiseToner-Poisson-Detailed_108000_G works fine to reduce some graininess and artifacts on low quality images. As the 1x indicates, these are not upscalers; they should probably be used as a secondary filter in the Extras tab, or by themselves without upscaling.

Img2img: for img2img upscaling of anime screenshots, the best you can do is use a failed LORA of the character in question at low weight in the prompt when doing SD upscale. You don't have one? Well then... if there are fewer than 10 images, just pass them through an autotagger, clean any strange tags, add the default positives and negatives and img2img them using SD upscale. I recommend 0.1 denoising strength. Don't worry about the shape of the image when using SD upscale; the aspect ratio is maintained. Be careful with eyes and hands, as you may have to do several gens to get good images without too much distortion.

For bulk img2img, unless you plan to curate the prompts, go to the Batch tab in img2img, set the directories, use the settings above with a generic prompt like 1girl and let it churn. You can set it to do a batch of 16 and pick the best gens.

Manga:

For manga (especially old manga) we have a couple of extra enemies:

JPEG compression: this shows up as distortions near lines.

Screentone/dithering: manga and doujins, being printed media, are often shaded using ink patterns instead of true grayscale. A bad upscaler can either eat all the dithering, turning it into real grayscale, or worse, eat it only partially, leaving you with a mixed mess.

For upscaling, I had the best luck using 2x_MangaScaleV3. Its only downside is that it can change some grayscale into screentones. If your image has almost no screentones, then DAT_X4 (default in A1111) seems to do an ok job. For pre-filtering, in case the images are especially crap, I had some luck with 1x_JPEGDestroyerV2_96000G. It can be used together with 1x_NoiseToner-Poisson-Detailed_108000_G and seems to do its job without eating the dithering pattern too much.

Below is an example going from unusable to a "maybe" using 2x_MangaScaleV3; the small one is the original.

Photorealistic or 3D:

Not much experience with it, but when I need something I normally use RealESRGAN_x4plus, which seems serviceable enough.

Mask making

So all your dataset images have the same annoying background, or a lot of objects that annoy you? Well, the solution to that is masked loss training. Basically, the training will mostly ignore the loss in the areas that the supplied mask blacks out. Tools to automatically create masks boil down to transparent-background or REMBG. I don't have much faith in REMBG, so I will instead add a workflow for transparent-background.

First we must install transparent-background, so either make a new venv or reuse your A1111 environment and install transparent-background using pip:

pip install transparent-background

Next, while still in your venv, run transparent-background as follows:

transparent-background --source "D:\dataset" --dest "D:\dataset_mask" --type map

This will generate the masks of your images with the suffix "_map". Next we must remove the suffix: just open PowerShell in the mask folder and run the following command:

get-childitem *.png | foreach { rename-item $_ $_.Name.Replace("_map", "") }

Now all your mask files should have the same names as their originals. Lastly, before using your masks for masked loss training, check them: grayscale masks in which you can see bits of your character are not good, and neither are masks that are completely black.
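If you are not on PowerShell, the rename step is easy to do in Python with pathlib. The sketch below also includes a crude check for the two bad cases described above (completely black masks, and masks with lots of in-between gray). The mask check works on a flat list of 0-255 grayscale values so it stays stdlib-only; loading the PNG into that list (for example with Pillow) is left out, and the thresholds are arbitrary guesses of mine, not values from transparent-background.

```python
import tempfile
from pathlib import Path


def strip_map_suffix(mask_dir):
    """Rename every foo_map.png in mask_dir to foo.png and return the new names."""
    renamed = []
    for path in sorted(Path(mask_dir).glob("*_map.png")):
        # only strip the trailing "_map", not any "_map" earlier in the name
        target = path.with_name(path.name[: -len("_map.png")] + ".png")
        path.rename(target)
        renamed.append(target.name)
    return renamed


def classify_mask(values, gray_low=32, gray_high=223, max_gray_fraction=0.05):
    """Crude check of a mask given as a non-empty flat list of 0-255 values.

    "black": nothing survived (every value is dark).
    "gray":  too many in-between values, often the character leaking into the mask.
    "ok":    mostly clean black and white.
    The thresholds are hypothetical; tune them on your own masks.
    """
    if max(values) < gray_low:
        return "black"
    gray = sum(1 for v in values if gray_low <= v <= gray_high)
    if gray / len(values) > max_gray_fraction:
        return "gray"
    return "ok"


if __name__ == "__main__":
    # demo on a throwaway folder so nothing real gets renamed
    with tempfile.TemporaryDirectory() as folder:
        Path(folder, "img001_map.png").touch()
        print(strip_map_suffix(folder))
    print(classify_mask([0] * 100), classify_mask([0] * 50 + [255] * 50))
```

Unlike the PowerShell one-liner, this only touches files that actually end in "_map.png", so running it twice is harmless.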

The following masks are bad:

This is what a good mask must look like:

Remember, masked loss training is enabled per folder, so remove from your folder the images whose masks didn't take and put them into another folder with masked loss disabled. (Currently iffy, but should be fixed at some point.)

Sorting

So all your images are pristine, and they are all either 512x512 or have more than 262,144 (512x512) total pixels. Some are smaller? Go back and upscale them! Ready? Well, the next step is sorting: simply make some folders and literally sort your images. I normally sort them by outfit and by quality.

Normally you will want to give fewer repeats to low quality images, and you will need to know how many images you have per outfit to decide whether they are enough to train it, whether to dump them into misc, or whether to go hunt for more images under rocks in the badlands.

Now that you have some semblance of order in your dataset, you can continue to the planning stage.
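Since repeats are assigned per folder, it helps to know how many steps your sorting will produce. Kohya's scripts read the repeat count from the folder name prefix (for example, 10_CharaA means 10 repeats); the sketch below uses that convention to estimate steps per epoch and total steps. The folder names and image counts are made-up examples, and it is only a rough estimate: it ignores gradient accumulation, regularization images and the fact that batches are formed per bucket (which can add a few partial batches).

```python
import math
import re


def parse_repeats(folder_name):
    """Read the repeat count from a Kohya-style folder name like '10_CharaA'."""
    match = re.match(r"^(\d+)_(.+)$", folder_name)
    if not match:
        raise ValueError(f"folder {folder_name!r} has no '<repeats>_' prefix")
    return int(match.group(1))


def estimate_steps(folders, batch_size=1, epochs=1):
    """folders: dict of folder name -> number of images in it.

    Returns (images seen per epoch, steps per epoch, total steps).
    """
    per_epoch = sum(parse_repeats(name) * count for name, count in folders.items())
    steps_per_epoch = math.ceil(per_epoch / batch_size)
    return per_epoch, steps_per_epoch, steps_per_epoch * epochs


if __name__ == "__main__":
    # hypothetical sorting: good images get more repeats than low quality ones
    dataset = {
        "8_CharaA_outfit1_good": 30,
        "4_CharaA_outfit1_lowq": 12,
        "6_CharaA_misc": 20,
    }
    print(estimate_steps(dataset, batch_size=2, epochs=10))
```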

===============================================================

Planning

Some people would say the planning phase should be done before collecting the dataset. Those people would soon crash face first into reality. The reality of LORA making depends entirely on the existence of a dataset. Even if you decide to use a synthetic dataset, to do any real work you have to make the damn thing first. Regardless, you now have a dataset of whatever your heart desires. Most LORAs fall into 2 or 3 archetypes: styles, concepts or characters. A character is a type of concept, but it is so ubiquitous that it can be a category by itself. Apart from those categories we also have a quasi-LORA type: the LECO.

LORAS

The tagging style of a LORA directly determines how its concept is invoked, and it can be separated into 3 main categories. For this explanation, a concept can be a character, a situation, an object or a style:

Fluid: in this route you leave all tags in the captions. Thus everything about the concept is mutable, and the trigger acts as a bridge between the concepts. Using a character as an example, the prompt will look like this: CharaA, long_hair, blue_eyes, long_legs, thick_thighs, red_shirt, etc. The problem with this approach is reproducibility: making the character look as close as possible to the original requires a very large prompt describing it in detail with tags. To me, this style requires access to the training's tag summary to be most useful. Surprisingly, for the end user this is perfect for both beginners and experts: for beginners because they don't really care as long as the character looks similar-ish, and for experts because with time you get a feeling for which tags might be missing to reproduce a character, even without access to the tag summary or the dataset.

Semi static: in this route you partially prune the captions so the trigger will always reproduce the concept you want, and the end user can customize the remaining tags. Once again, this example is a character. The prompt will look like: CharaA, large_breasts, thick_thighs, Outfit1, high_heels. In this case, characteristics like hair style and color as well as eye color are pruned and folded into CharaA, leaving only things like breast size and other body characteristics editable. In this example, parts of the outfit are also pruned and folded into the Outfit1 trigger, leaving only the shoes editable. This style is most useful for intermediate users, as they can focus on the overall composition knowing the character will always be faithful to a degree.

An example of pruning looks like this:

Unpruned: 1girl, ascot, closed_mouth, frilled_ascot, frilled_skirt, frills, green_hair, long_sleeves, looking_at_viewer, one-hour_drawing_challenge, open_mouth, plaid, plaid_skirt, plaid_vest, red_skirt, red_vest, shirt, short_hair, simple_background, skirt, solo, umbrella, vest, white_shirt, yellow_ascot, yellow_background

Pruned: CharaA, Outfit1, closed_mouth, looking_at_viewer, open_mouth, simple_background, solo, umbrella, yellow_background

The rationale is simple: CharaA will absorb concepts like 1girl which represent the character, while Outfit1 will absorb the concepts of the clothing. Be mindful that for the model to learn the difference between CharaA and Outfit1, the triggers must appear in different images, so the model learns that they are in fact different concepts. For example, images of the character dressed in a different outfit teach the model that Outfit1 only represents the outfit, since CharaA also appears in images with other outfits.
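Once you have decided which tags each trigger absorbs, the pruning itself can be automated. This is a minimal sketch, not a tool from this guide: `prune_caption` and the tag groups are hypothetical, and a trigger is only added when at least one of the tags it absorbs is present, so an image without the outfit doesn't get the Outfit1 trigger. With the groups below it reproduces the pruned example caption above (one-hour_drawing_challenge is treated as junk and deleted).

```python
def prune_caption(caption, folds, junk=()):
    """Fold tags into their triggers and drop junk tags from a comma-separated caption.

    folds: dict of trigger -> set of tags that the trigger absorbs (order = trigger order).
    junk:  tags to delete outright, e.g. meta tags.
    """
    tags = [t.strip() for t in caption.split(",") if t.strip()]
    # add a trigger only if the image actually shows something it absorbs
    triggers = [trig for trig, absorbed in folds.items() if any(t in absorbed for t in tags)]
    absorbed_all = set().union(*folds.values())
    kept = [t for t in tags if t not in absorbed_all and t not in junk]
    return ", ".join(triggers + kept)


# hypothetical groups matching the example: character traits vs. outfit pieces
FOLDS = {
    "CharaA": {"1girl", "green_hair", "short_hair"},
    "Outfit1": {"ascot", "frilled_ascot", "frilled_skirt", "frills", "long_sleeves",
                "plaid", "plaid_skirt", "plaid_vest", "red_skirt", "red_vest", "shirt",
                "skirt", "vest", "white_shirt", "yellow_ascot"},
}
JUNK = {"one-hour_drawing_challenge"}

UNPRUNED = (
    "1girl, ascot, closed_mouth, frilled_ascot, frilled_skirt, frills, green_hair, "
    "long_sleeves, looking_at_viewer, one-hour_drawing_challenge, open_mouth, plaid, "
    "plaid_skirt, plaid_vest, red_skirt, red_vest, shirt, short_hair, simple_background, "
    "skirt, solo, umbrella, vest, white_shirt, yellow_ascot, yellow_background"
)

if __name__ == "__main__":
    print(prune_caption(UNPRUNED, FOLDS, JUNK))
```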

Recommendations:

For character outfits, never fold shoe tags (high_heels, boots, footwear) into the outfits. This often causes a bias towards full body shots, as the model tries to display the whole body including the top and the shoes; it can also cause partially cropped heads.

For characters, never prune breast size. People get awfully defensive when they can't change breast size.

For styles, remember to prune things inherent to the style: for example, if it is black and white, prune monochrome, or if the art is very "dark", prune tags like night, shadow, sunset, etc.

Static: if you prune all tags relating to the concept, you get this. It will fight any change you try to make. For a character, someone will accuse you of something for not making X body part editable. I always make the boobs in my LORAs editable and I still get complaints of this type. The prompt will look like: CharaA. Well, it's not quite that extreme; you can still force some changes. What you are doing is essentially turning the whole character, outfit and all, into a single concept, and it will be reproduced as such.

Characters

So you decided to make a character. Hopefully you already decided which approach to take and hopefully it was the semi static one, or if lazy the fluid one will do.

As can be easily surmised, only the first and second routes are really useful. The semi static path requires much more experience about what to prune and what to keep in the captions. Check the Advanced triggers part of the captioning section for more details on this. Surprisingly, most creators seem to stick to the fluid style; I am not sure whether end users prefer it, whether it is due to laziness, or whether creators never felt the need to experiment with captioning to do more complex things.

Anyway, you have picked your poison; now come the outfits.

If you are using a fluid approach, just make sure you have a good enough representation of the outfit in the dataset (anything from 8 to 50 images will do) and leave them in there. After you finish training, try to recreate the outfit via prompting and attach the prompt to the notes when you publish...

If you are using a semi static approach, you may want or need to train individual outfits as sub-concepts. I would say I try to add all representative outfits, but in reality I mostly add sexy ones. Here is where you start compromising, as you will inevitably end up short on images. So... add the ugly ones. You know which ones. Those that didn't make the cut. Just touch them up with Photoshop or img2img. I have honestly ended up digging through cosplay outfit auctions for that one low res image of an outfit on a mannequin. As I mentioned in the beginning, the dataset is everything and any planning depends on the dataset.

Anyway, as I mentioned, when selecting an outfit to train you need at the very least 8 images with angles as varied as possible. Don't expect anything good for a complex outfit unless you have 20+ images. 8 is the bare minimum and you will probably need some help from the model, so in this case it is best to construct the outfit triggers in the following way: Color1_Item1_Color2_Item2..._ColorN_ItemN. It is possible to add other descriptors to the trigger, like Yellow_short_Kimono, in this case made of two tokens, yellow and short_kimono. If it were, for example, sleeveless and with an obi, I would likely write it as Yellow_short_Kimono_Obi_Sleeveless. I am not sure how much the order affects the efficacy of the model contamination (contribution in this case), but I try to sort the parts in decreasing order of visibility, with modifiers that cannot be directly attached to the outfit at the very end. So I would put sleeveless, halterneck, highleg, detached_sleeves, etc. at the end.
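The naming rule can be written down as a small helper, mostly to keep triggers consistent across a dataset. This is a hypothetical sketch: you still list the parts in decreasing visibility order yourself, and the helper only moves the modifiers mentioned above (sleeveless, halterneck, highleg, detached_sleeves) to the end while keeping everything else in the order given.

```python
# Modifiers that can't be directly attached to a single item, per the rule above.
MODIFIERS = ("sleeveless", "halterneck", "highleg", "detached_sleeves")


def outfit_trigger(parts, modifiers=MODIFIERS):
    """Join outfit parts into one trigger, pushing modifiers to the end.

    parts: tokens already sorted by decreasing visibility, e.g. ["Yellow", "short_Kimono"].
    sorted() is stable, so non-modifiers keep their order and so do the modifiers.
    """
    lowered = {m.lower() for m in modifiers}
    ordered = sorted(parts, key=lambda p: p.lower() in lowered)
    return "_".join(ordered)


if __name__ == "__main__":
    print(outfit_trigger(["Yellow", "Sleeveless", "short_Kimono", "Obi"]))
```

Fed the kimono example in a sloppy order, it still produces Yellow_short_Kimono_Obi_Sleeveless.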

Character Packs

So you decided you want to make a character pack? If you are using a fluid captioning style, then you had better pray you don't have two blonde characters. The simple truth is that doing this increases bleedover. If your characters' faces are similar and their hair colors and styles are different enough, this is doable, but I have never seen an outstanding character pack done this way. Don't misunderstand me, they don't look bad or wrong (well, when they are done correctly). They look bland, as the extra characters pull the LORA a bit towards a happy medium due to the shared tags. But if you like a "bland" style, that is perfectly fine! At this point there are probably hundreds of "Genshin Impact style" lookalike LORAs and they are thriving! How is that possible? I haven't the foggiest. If I may borrow the words of some of the artists who like to criticize us people who use AI, I would say they are "pretty but a bit soulless". Then again, I am old and I have seen hand-drawn anime from the 80s.

Moving along... If you are using a semi static captioning style, basically just make one dataset for each character, mash them together and train. Due to the pruning there should be no concept overlap, which minimizes bleedover. Sounds easy? It is! The only issue is that it is the same work as doing two different LORAs and will take twice the training time.

So now you are wondering why bother? Well, there are two main reasons:

To decrease the number of LORAs. Why have dozens of LORAs if you can have one per series? Honestly this makes sense from an end user's perspective, but from a creator's perspective it only adds more failure points, because you may have gotten 5 characters right but the sixth may look like ass. Remember! Unless you are being paid for it, making the end user's life easier at your expense is completely optional, and if you are being paid, you should only make their life "a bit" easier so they keep requiring your services in the future. :P On the other hand, if you are a bit OCD and want to have your LORAs organized like that, then I guess it is time well spent.

The real reason: so you can have two or more characters in the same image at the same time! This can be done with multiple LORAs, but they will inevitably have style and weight conflicts. This can only be mitigated by using a Dreambooth model or a LORA with both characters. If you are thinking NSFW, you are completely correct! But it also covers a simple couple walking hand in hand (which some people consider the most perverse form of NSFW).

For this you necessarily need the two characters to be captioned in a semi static way; otherwise, when you prompt the characters, SD will not know which attributes to assign to each one. For example, if you have "1boy, red_hair, muscles" and "1girl, black_hair, large_breasts", SD is liable to do whatever it wants (either making a single person or distributing the traits randomly). If on the other hand you have "CharacterA, CharacterB", it becomes much harder for SD to fuck up. One consideration is that SD doesn't handle multiple characters very well, so it is essential to add a 3rd group of images to the dataset with both characters in the same image.

In other words, for best results the workflow should be as follows. First, you need 3 distinct groups of images, Character A, Character B and Character A+B, each one with their respective trigger. I would recommend at least 50+ images of each. Second, you will need to do extensive tag pruning for groups A and B in the semi static style, as if you were making 2 different character LORAs, making sure SD clearly knows which attributes belong to whom. Afterwards, the A+B group must be tagged with the 3 triggers, making sure to prune any tags alluding to the number of people. Basically what you will be doing is stuffing two character LORAs and a concept LORA (the concept being two people) into one single LORA. So at the end just merge the 3 datasets, add some tweaks for extra outfits (if any) and that's it. Even after all that, the LORA may require you to use 2girls, 2boys or "1boy, 1girl" for it to make the correct number of people. SD is annoying like that.
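For the A+B group, the people-count part of the caption edit is mechanical enough to script. A minimal sketch, assuming hypothetical trigger names and that your tagger emits the usual Danbooru count tags listed in `COUNT_TAGS` (extend the set if yours emits others). It takes the triggers as a list so you can pass whichever ones you use for the group, and it only handles the count tags; character attributes still need pruning the same way as in groups A and B.

```python
# Danbooru-style tags that describe how many people are in the image.
COUNT_TAGS = {"solo", "1girl", "2girls", "1boy", "2boys",
              "multiple_girls", "multiple_boys"}


def caption_pair_image(caption, triggers, count_tags=COUNT_TAGS):
    """Strip people-count tags and prepend the group's triggers to a caption."""
    tags = [t.strip() for t in caption.split(",") if t.strip()]
    kept = [t for t in tags if t not in count_tags and t not in triggers]
    return ", ".join(list(triggers) + kept)


if __name__ == "__main__":
    print(caption_pair_image("1boy, 1girl, holding_hands, outdoors, smile",
                             ["CharacterA", "CharacterB"]))
```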

Styles

Styles are pretty much what they sound like: an artist's style. What you try to capture is the overall ambience, body proportions of characters, architecture and drawing style (if not photorealistic) of an artist. For example, if you check my LORAs, or at least their sample images, you will notice I favor a more somber style, a bit more mature features and big tits (no way around it, I am jaded enough to know I am an irredeemable pervert). If you collected all my datasets and sample images and merged them into a LORA, you would get a KNXO style, I guess.

I don't really like training styles, as I find it a bit disrespectful, especially if the artist is still alive. I have only done one style/character combo LORA, as that particular doujin artist vowed to stop drawing said character after being pressured by the studio that now owns the rights to the franchise. Regardless, if you like an artist's style so much you can't help yourself, just send kudos to the artist and do whatever you want. I am not your mother.

Theoretically, style is the simplest LORA type to train: just use a big, clean and properly tagged dataset with as many varied pictures as possible. For this type of training, captions are treated differently.

There are 2 Common approaches to tagging styles:

Contemporary approach: try to be as specific as possible in your tagging. Add the trigger and eliminate the tags that are associated with the style, for example retro style, a specific background always used by the artist, a perspective you wish to keep and tags like that. The rationale is to distribute all concepts to their correct tags, leaving the nebulous style as the odd concept out and assigning it to the trigger.

Old approach: delete all tags from the caption files, leaving only the trigger, and let the LORA take over when it is invoked. The rationale is to simply dump everything into the trigger, creating a bias in the UNet towards the style.

Essentially the approaches are distilling the style vs overloading other concepts. AFAIK both techniques work. I haven't done enough experiments to do a 1 to 1 comparison. But in pure darwinistic terms, the first solution is more widely used today so I guess it is superior(?).
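To make the difference between the two approaches concrete, here is how each would rewrite the same caption. A sketch only: `StyleX` and the set of style-associated tags are made-up examples, and in practice deciding which tags belong to the style is the hard part.

```python
def contemporary_caption(caption, trigger, style_tags):
    """Keep the specific tags, drop the ones that belong to the style, add the trigger."""
    tags = [t.strip() for t in caption.split(",") if t.strip()]
    return ", ".join([trigger] + [t for t in tags if t not in style_tags])


def old_caption(caption, trigger):
    """Drop every tag (caption is ignored on purpose) so the trigger soaks up everything."""
    return trigger


if __name__ == "__main__":
    caption = "1girl, retro_artstyle, night, city, neon_lights, smile"
    style = {"retro_artstyle", "night"}
    print(contemporary_caption(caption, "StyleX", style))  # StyleX, 1girl, city, neon_lights, smile
    print(old_caption(caption, "StyleX"))                  # StyleX
```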

Remember, and I can't emphasize this enough: clean your dataset as well as possible. For example, if you use manga or doujins, clean the sfx and other annoyances, as they will affect the final product (unless they are part of the aesthetic you want, of course).

Concepts

Concepts... First of all, you are shit out of luck. Training a concept is 50% art and 50% luck. First, make sure to clean up your images as well as possible to remove most extraneous elements. Try to pick images that are simple and obvious about what is happening. Try to pick images that are as different as possible and only share the concept you want. For tagging, you need to add your trigger and eliminate all tags that touch your concept, leaving the others alone.

Some people will say adding a concept is just like training a character; that is true to an extent for physical object concepts. In any other instance... oh boy, are they wrong! SD was mainly trained to draw people and it really knows its stuff, fingers and hands aside. It also knows common objects, common being the keyword. If SD has no idea what you are training, you will have to basically sear it into the model using unholy fire. At the other extreme, if it is a variation of something it can already do, it will likely burn like gunpowder. Here are some examples:

A good example of the first case would be an anthill, a bacterium or a randomly repeating pattern. One would think, "well, I just need a bunch of example images as varied as possible". Nope. If SD doesn't understand it, you will need to increase the LR, repeats and epochs. In my particular case I ended up needing 14 epochs using Prodigy, so the LR was probably up the wazoo. I had to use a huge dataset with a bunch of repeats; I think it was 186 images at 15 repeats for 14 epochs, also using keep token on my trigger and caption shuffle. As I said, I had to basically sear it into the LORA. This particular case would be a good candidate for regularization images to decrease the contamination of the model; I avoided using them by making the dataset more varied. The result was mediocre in my opinion, though people seem to like it as it does what it is supposed to.

For the second case I did a variation of a pose/clothing combo (don't look for it if you have virginal eyes). The problem is that it is a variation of a common concept. It is actually possible to do (with extremely little reliability) just by prompting, and I also wanted it to be as style neutral as possible so it would mix easily. Long story short, any amount of repeats caused it to either overbake or be extremely style affecting. The only solution I found was these settings: dim 1, alpha 1, 8 epochs, 284 unique images with 1 repeat. The low dim and alpha made it less likely to affect the style, the unique images acted like reg images, pulling the style in many directions and cancelling it out a bit, and the low repeats kept overcooking under control. I ended up doing two variations, one with AdamW and one with Prodigy. The Prodigy one was more consistent but more style heavy despite my best efforts; the AdamW one was much more neutral but a bit flaky.

TLDR: Use low alpha and dim for generalized concepts and poses you wish to be flexible. Use a dataset as big and varied as possible. If the concept is alien to SD sear it with fire, and if it is already seared with fire touch it with a feather.

LECO or Sliders

A LECO can be considered a type of demi-LORA; in fact they share the same file structure and can be invoked like a normal LORA. Despite being identical to a LORA in most ways, LECOs are trained in a completely different way, without using images. To train a LECO you need at least 8GB of VRAM. Currently there are two scripts able to train LECOs:

https://github.com/p1atdev/LECO: Older implementation mostly focused on adding or removing concepts.

https://github.com/ostris/ai-toolkit: More modern implementation focused on creating sliders.

For training I used AI Toolkit. Beware, the project is not very mature, so it is constantly changing. I will add instructions using a specific version of it that I know works, which will need some tweaks.

The following instructions are for a Windows install using CUDA 12.1. Make sure you have Git for Windows (https://gitforwindows.org/) installed.

First open powershell in an empty folder and do the following steps:

git clone https://github.com/ostris/ai-toolkit.git

cd ai-toolkit

git checkout 561914d8e62c5f2502475ff36c064d0e0ec5a614

git submodule update --init --recursive

python -m venv venv

.\venv\Scripts\activate

Afterwards, download the attached ai toolkit zip file on the right; it has a clone of my currently working environment using CUDA 12.1 and torch 2.2.1. Overwrite the requirements file as well as the file within the folder (it has a cast to integer fix).

Then run:

pip install -r requirements.txt

It should take a while as it downloads everything. In the meantime, download train_slider.example.yml on the right; it is time to edit it.

Realistically the only values you need to edit are:

Name: The name of the LECO

LR: the recommended learning rate was 2e-4; that was too high, better set it to 1e-4 or a bit lower.

Hyperparameters: optimizer, denoising and scheduler all work fine; the LECO burnt well before 500 steps, so that's ok too.

steps: I had some weirdness rounding to 500 where the last epoch wasn't generated, so add 1 to the max just in case. I defaulted to 501.

dtype (train): if your video card supports it use bf16, if not use fp32; fp16 causes NaN loss errors, making the LECO unusable.

dtype (save): always leave it as float16 for compatibility.

name_or_path: the path to your model. Use "/" as the folder separator; if you use "\" it will crash.

model types: set V2 and Vpred if SD2.0, xl if SDXL, or leave them false if on SD1.5.

max_step_saves_to_keep: the number of saved epochs (checkpoints) you wish to keep; if you set it lower than the total, the older ones will be deleted.

prompts: the --m part is the strength of the LECO. I recommend the following values so you can see the progress: -2, -1, -.5, -.1, 0, .1, .5, 1, 2. They should look like this:

- "1girl, skirt, standing --m -2"

resolutions: 512 for SD1.5, 768 for SD2 and 1024 for XL.

target_class: The concept you wish to modify for example skirt or 1girl

positive: The maximum extent of the concept, for example for a skirt it would be long_skirt

negative: the minimum extent of the concept. For a skirt it would be microskirt, but in my case that was not enough, as that concept is not very well trained in my model, so I had to help it by including other related concepts. It ended up being: "microskirt,lowleg_skirt,underbutt,thighs,panties,pantyshot,underwear"

metadata: Just put your name and web address, you don't want them to say they belong to KNXO
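Typing the sample prompts for every strength gets tedious, so here is a small sketch that prints them as YAML list items in the same shape as the example prompt line above, one per --m value. The base prompt and strengths are the ones suggested above; the helper name and the 8-space indent are my assumptions, so match the indentation to where the prompts key sits in your own config.

```python
WEIGHTS = (-2, -1, -0.5, -0.1, 0, 0.1, 0.5, 1, 2)


def slider_prompts(base_prompt, weights=WEIGHTS, indent=8):
    """Return one YAML list item per slider strength, e.g. - "1girl, skirt --m -2"."""
    pad = " " * indent
    # :g drops trailing zeros, so 1.0 prints as 1 and -0.5 stays -0.5
    return [f'{pad}- "{base_prompt} --m {w:g}"' for w in weights]


if __name__ == "__main__":
    print("\n".join(slider_prompts("1girl, skirt, standing")))
```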

So you finished filling in your config file and installing all the requirements? Then in PowerShell, with the venv activated and in the ai-toolkit folder, type:

python run.py path2ConfigFile/train_slider.example.yml

Then, supposing everything went fine, it will start training. LECOs train a lot slower than LORAs and require more VRAM; on the other hand they support resume, so you can stop the training and start it again as needed. AI Toolkit saves the sample images and epochs to the output folder, so check the progress there.

To know when a LECO is done, check the sample images: if the extremes have stopped changing, it is overcooked. For example, the following images are step 50 at weight 2 and step 100 at weight 1. Not only is it obvious both are the same, but the second one has a blue artifact on her left shoulder. So in this case the epoch at step 50 is the one I want (the recommended LR of 2e-4 was really stupidly high).

So you decided which epoch looks best; then simply test it like any other LORA. Congratulations, you have made your first pseudo-LORA. LECO difficulty mostly comes from setting up the environment and finding good tags for both extremes of the concept.

Masked Training

Is your dataset full of images with the same or problematic backgrounds? Maybe too much clutter? Well, masked training may or may not be a solution! The pro of this approach is that the training is simply more concrete, reducing extraneous factors. The con? You have to make masks, hopefully good quality masks. Unless you are a masochist who wants to manually create the mask for each of your images, you will need something to generate them, and it will likely be either transparent-background or REMBG. Go check the mask making section for how to do it with transparent-background.

As far as I know, masked loss training works the same as normal training, except that when the loss is computed, each pixel's contribution is weighted by the mask, so the masked zones are dampened or ignored depending on the mask's opacity. In simple words, the black part of the mask is ignored.

From my tests, masked training works slightly better than, for example, training with a white background. It is not a massive improvement by any means, but it works well enough. For it to be truly valuable, it needs a couple of conditions:
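A toy version of the idea, to make "the black part is ignored" concrete: each pixel's squared error is multiplied by its mask value (scaled to 0-1) before averaging, so fully black areas contribute nothing and gray areas contribute partially. This is a stdlib-only illustration on flat lists, not Kohya's code; as far as I know the real implementation does the equivalent on latent-sized tensors, with the mask downscaled to match.

```python
def masked_mse(prediction, target, mask):
    """Mean squared error where each pixel's error is weighted by its mask value.

    prediction, target: flat lists of floats of equal length.
    mask: flat list of 0-255 values (white = train on it, black = ignore it).
    """
    if not (len(prediction) == len(target) == len(mask)):
        raise ValueError("prediction, target and mask must be the same length")
    weighted = [
        (p - t) ** 2 * (m / 255.0)  # black (0) zeroes the error, white (255) keeps it
        for p, t, m in zip(prediction, target, mask)
    ]
    return sum(weighted) / len(weighted)


if __name__ == "__main__":
    # the second pixel is way off, but it sits under a black mask, so it costs nothing
    print(masked_mse([1.0, 5.0], [1.0, 0.0], [255, 0]))
```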

The dataset is mask making program friendly:

Images must be as solid as possible; blurry images, monochrome, lineart and anything too fuzzy will likely fail to produce a good mask.

The dataset has something you "really" don't wish to train and it can't be cropped out:

This applies, for example, if the dataset has repetitive or problematic backgrounds.

Problematic items or backgrounds aren't properly tagged:

If they are ignored, it doesn't matter too much that they are poorly tagged.

So if your dataset is properly cropped and tagged, the gains will be minimal.

Anyway, to do masked training, simply create the masks for your images and make sure they have the same names as your originals, then enable the option to use them and set the masks folder. Then proceed to train as usual.

I saw no particular dampening in the learning rate nor performance overhead. They might exist but they are manageable. All in all Masked training is just another situational tool for training.

Block Training

First of all, I am not an expert at block training, as I find it to be a step too far. Anyway, let's begin with the obvious. SD is made of a text encoder (or several) and a UNet. For our purposes let's focus on the UNet and just establish that an SD1.5 LORA has 17 blocks and a LOCON has 26. The difference in blocks only means that the remaining 9 blocks are zeros in a LORA. So a LORA is 17 blocks plus 9 zero blocks, and a LOCON is 26 blocks with values. For SDXL it is 12 and 20 blocks for its UNet. We will use the full LOCON notation, as it is what most programs use. I will also use examples for SD1.5 using the 26 values.

Available LOCON blocks:

SD1.5:BASE,IN00,IN01,IN02,IN03,IN04,IN05,IN06,IN07,IN08,IN09,IN10,IN11,M00,OUT00,OUT01,OUT02,OUT03,OUT04,OUT05,OUT06,OUT07,OUT08,OUT09,OUT10,OUT11

SDXL:BASE,IN00,IN01,IN02,IN03,IN04,IN05,IN06,IN07,IN08,M00,OUT00,OUT01,OUT02,OUT03,OUT04,OUT05,OUT06,OUT07,OUT08
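To make the "17 blocks plus 9 zero blocks" idea concrete, here is a small sketch that pads a LORA's 17 block weights into the 26-value LOCON notation. Which 17 blocks a plain SD1.5 LORA uses is an assumption on my part (the layout the lora-block-weight extension documents: BASE, IN01, IN02, IN04, IN05, IN07, IN08, M00 and OUT03 to OUT11), so double-check it against the extension's README before relying on it.

```python
# Full SD1.5 LOCON block order (26 values), as listed above.
SD15_BLOCKS = (
    ["BASE"]
    + [f"IN{i:02d}" for i in range(12)]
    + ["M00"]
    + [f"OUT{i:02d}" for i in range(12)]
)
# Full SDXL order (20 values), as listed above.
SDXL_BLOCKS = (
    ["BASE"]
    + [f"IN{i:02d}" for i in range(9)]
    + ["M00"]
    + [f"OUT{i:02d}" for i in range(9)]
)

# Assumption: the 17 SD1.5 blocks a plain LORA actually has weights in.
SD15_LORA17 = [
    "BASE", "IN01", "IN02", "IN04", "IN05", "IN07", "IN08", "M00",
    "OUT03", "OUT04", "OUT05", "OUT06", "OUT07", "OUT08", "OUT09", "OUT10", "OUT11",
]


def lora17_to_locon26(weights17):
    """Pad 17 LORA block weights to the 26-value LOCON notation with zeros."""
    if len(weights17) != len(SD15_LORA17):
        raise ValueError("expected 17 block weights")
    by_block = dict(zip(SD15_LORA17, weights17))
    return [by_block.get(block, 0) for block in SD15_BLOCKS]


print(len(SD15_BLOCKS), len(SDXL_BLOCKS))  # 26 20
print(",".join(str(w) for w in lora17_to_locon26([1] * 17)))
# prints 1,0,1,1,0,1,1,0,1,1,0,0,0,1,0,0,0,1,1,1,1,1,1,1,1,1
```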

Block training is a bit more experimental and an iterative process. I don't normally use it, as at some point you have to say "good enough" and move on. Nonetheless, if you are a perfectionist this might help you. Beware: you can spend the rest of your life tweaking it to perfection. You will also need to know how to analyze your LORA to see which blocks to increase or decrease, which is a bit out of the scope of this training guide.

Now let's dispel the myth. As far as I know, any table claiming that block X equates to body part Y is lying. While some relations exist, they are too variable to make a general assessment. The equivalences are dataset dependent, and using empirical tables may or may not give you good results. So you might think "Then block training is useless!" Well, yes and no. Uninformed block training using one of those equivalence tables is useless; when you have an idea of what needs to be changed, then we have some tangible progress.

Also, you must consider that while Kohya supports block training, Lycoris seemingly does not. So it is limited to LORA and OG Kohya's LOCON (not the more modern Lycoris implementation).

There are two ways to do "proper" block training:

The first method is an iterative one:

First train a lora as normal.

Use an extension like https://github.com/hako-mikan/sd-webui-lora-block-weight to see which blocks can be lowered or increased to produce better images or reduce overfitting.

After installing it, you can prompt like this: <lora:Lora1:0.7:0.7:lbw=1,1,1,1,1,1,1,1,1,1,1,1,0,0,0,0,0,0,0,0,0,0,0,0,0,0> or <lora:Lora1:0.7:0.7:lbw=XYZ>. The first lets you manually input the block values; the XYZ one makes a plot for comparison. Take note that the two 0.7 values are the weights of the Unet and text encoder, which the extension takes as separate values.
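Typing 26 comma-separated values by hand is error prone, so a tiny helper that builds the tag from a few named overrides can help. This is a hypothetical convenience function, not part of the extension; it only reproduces the tag format shown above and assumes the SD1.5 26-block order listed earlier. The two leading weights are passed through in the order you give them, so check the extension's README for which one is the Unet and which is the text encoder.

```python
# SD1.5 block order used by the 26-value notation.
SD15_BLOCKS = (
    ["BASE"]
    + [f"IN{i:02d}" for i in range(12)]
    + ["M00"]
    + [f"OUT{i:02d}" for i in range(12)]
)


def lbw_tag(lora_name, overrides, weights=(0.7, 0.7), default=1):
    """Build a <lora:name:w1:w2:lbw=...> tag; unlisted blocks get `default`."""
    unknown = set(overrides) - set(SD15_BLOCKS)
    if unknown:
        raise ValueError(f"unknown blocks: {sorted(unknown)}")
    values = ",".join(f"{overrides.get(b, default):g}" for b in SD15_BLOCKS)
    w1, w2 = weights
    return f"<lora:{lora_name}:{w1:g}:{w2:g}:lbw={values}>"


# Keep everything at 1 but switch off the last four output blocks.
print(lbw_tag("Lora1", {"OUT08": 0, "OUT09": 0, "OUT10": 0, "OUT11": 0}))
```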

Retrain using the same dataset and parameters but using what you learnt to tweak the block weights.

In this case, the block weight is just a multiplier and the easiest to edit. Dim and alpha affect detail and learning rate respectively. So if you want a block reinforced, you can increase its weight multiplier or alpha; if you want some more detail, increase the dim slightly. I would recommend sticking to the multiplier, or alpha in special cases, but I am not your mother.
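The reason alpha behaves like a learning-rate knob is that, in kohya-style LORAs, each module's learned update is scaled by alpha divided by dim before being added to the original output. The sketch below just does that arithmetic, assuming that scaling rule and treating the block weight as one more multiplier on top; it is an illustration, not code from any training script.

```python
def effective_scale(dim, alpha, multiplier=1.0):
    """Factor applied to a LORA module's learned update.

    Assumption: the update is scaled by multiplier * (alpha / dim), so
    halving alpha halves the update's strength for the same learned weights.
    """
    if dim <= 0:
        raise ValueError("dim must be positive")
    return multiplier * alpha / dim


print(effective_scale(32, 16))       # 0.5: the dim 32 / alpha 16 recipe
print(effective_scale(32, 32))       # 1.0: raising alpha strengthens the block
print(effective_scale(32, 16, 0.7))  # 0.35: same LORA generated at .7 weight
```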

Rinse and repeat adjusting things.

The second approach doesn't involve training but a post training modification:

First follow the first three steps as before.

Install the following extension: https://github.com/hako-mikan/sd-webui-supermerger.git

Go to the supermerger LORA tab and merge the LORA against itself. As far as I know, at alpha .5 it should average the weights, so leave it at the default and merge the LORA against itself with the reduced or increased block weights. I get the feeling a standalone tool should exist for this; alas, I don't know of one.

It should look like this:

Lora_1:1:.5,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,Lora_1:1:.5,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0

The example above should merge Lora_1 against itself, setting all blocks to 0 except the Base block, which should now be set at .5 weight. If instead one had the Base block set to 1 and the second set to .5, the result would have the block set at .75 (I might be wrong, so don't quote me on it).
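If you want to sanity-check what a self-merge should produce, the arithmetic behind the guess above is an element-wise average of the two block lists. The sketch below assumes exactly that averaging (which, as said, might be wrong) and also builds a merge line in the same name:ratio:blocks layout as the example; it does not call supermerger itself.

```python
def merge_line(name, blocks_a, blocks_b, ratio=1):
    """Build a 'name:ratio:blocks,name:ratio:blocks' line like the example."""
    a = ",".join(f"{w:g}" for w in blocks_a)
    b = ",".join(f"{w:g}" for w in blocks_b)
    return f"{name}:{ratio:g}:{a},{name}:{ratio:g}:{b}"


def expected_blocks(blocks_a, blocks_b):
    """Assumed result of the self-merge: the average of each block pair."""
    if len(blocks_a) != len(blocks_b):
        raise ValueError("both block lists need the same length (26 for SD1.5)")
    return [(x + y) / 2 for x, y in zip(blocks_a, blocks_b)]


first = [1] + [0] * 25     # Base at 1, every other block at 0
second = [0.5] + [0] * 25  # Base at .5
print(expected_blocks(first, second)[0])  # 0.75, matching the guess above
print(merge_line("Lora_1", [0.5, 0, 0], [0.5, 0, 0]))
# prints Lora_1:1:0.5,0,0,Lora_1:1:0.5,0,0 (shortened to 3 blocks for display)
```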

Planning Conclusion

Finally, manage your expectations. A character LORA is good when you can make it produce the likeness of the character you desire. An object concept LORA is the same. But how do you measure the success of a pose? One could say that as long as the pose is explicitly the same (basically overbaking it on purpose), then mission accomplished! But you could also require that the pose LORA plays well with other LORAs, that it is style neutral, or that it has enough view angle variations while still being the same pose. For me, the concept LORAs I made were multi-week projects that left me slightly burnt out and a bit distraught, as every improvement only highlighted the shortcomings of the LORA. So remember this: if you are getting tired of it and the LORA more or less works, say "fuck it!", dump it into the wild and let the end users use it and suffer. Forget about it. The users will whine and complain about the shortcomings, but that will give you an idea of what really needs to be fixed. So just forget about it and come back to it when the feeling of failure has faded and you can and want to sink more time into it, now with some real feedback on what to fix.

===============================================================

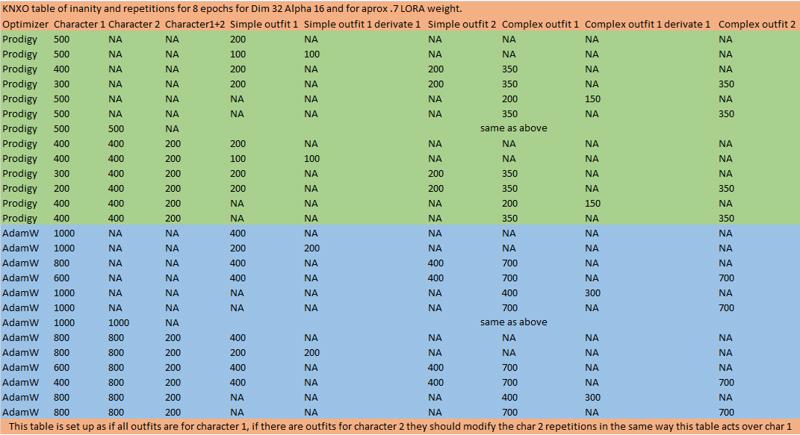

Folders

So all your images are neat, 512x512 or a couple of buckets. The next step is the folder structure. Images must be inside a folder with the format X_Name, where X is the number of times each image will be processed. You'll end up with a path like train\10_DBZStart, where the train folder contains the folder with the images. Regularization images use the same structure. You can have many folders; all are treated the same, and they let you keep things tidy if you are training multiple concepts, like different outfits for a character. They also allow you to give more repetitions to high quality images or to a tagged outfit with very few images. For now, just set everything to 10 repetitions; you will need to tweak these numbers after you finish sorting your images into the folders.
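As far as I know, kohya-style scripts read the repeat count from the number before the first underscore of each folder name. Here is a sketch that parses that convention and counts images per folder, handy for checking the structure before training; the helper names and the image extension list are my own assumptions, not part of any trainer.

```python
import re
from pathlib import Path

# "<repeats>_<name>", e.g. 10_DBZStart; the name may itself contain underscores.
FOLDER_PATTERN = re.compile(r"^(\d+)_(.+)$")
IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp"}  # assumption: adjust to taste


def parse_concept_folder(folder_name):
    """Split an X_Name folder name into (repeats, name)."""
    match = FOLDER_PATTERN.match(folder_name)
    if match is None:
        raise ValueError(f"{folder_name!r} does not follow the X_Name format")
    return int(match.group(1)), match.group(2)


def scan_train_dir(train_dir):
    """Map each concept folder inside train_dir to (repeats, image_count)."""
    summary = {}
    for folder in sorted(Path(train_dir).iterdir()):
        if not folder.is_dir():
            continue
        repeats, _ = parse_concept_folder(folder.name)
        images = [p for p in folder.iterdir() if p.suffix.lower() in IMAGE_EXTS]
        summary[folder.name] = (repeats, len(images))
    return summary


print(parse_concept_folder("10_DBZStart"))  # (10, 'DBZStart')
```

Pointing scan_train_dir at your train folder gives one (repeats, image count) pair per concept folder, which is exactly what the Repetitions and Epochs section needs.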

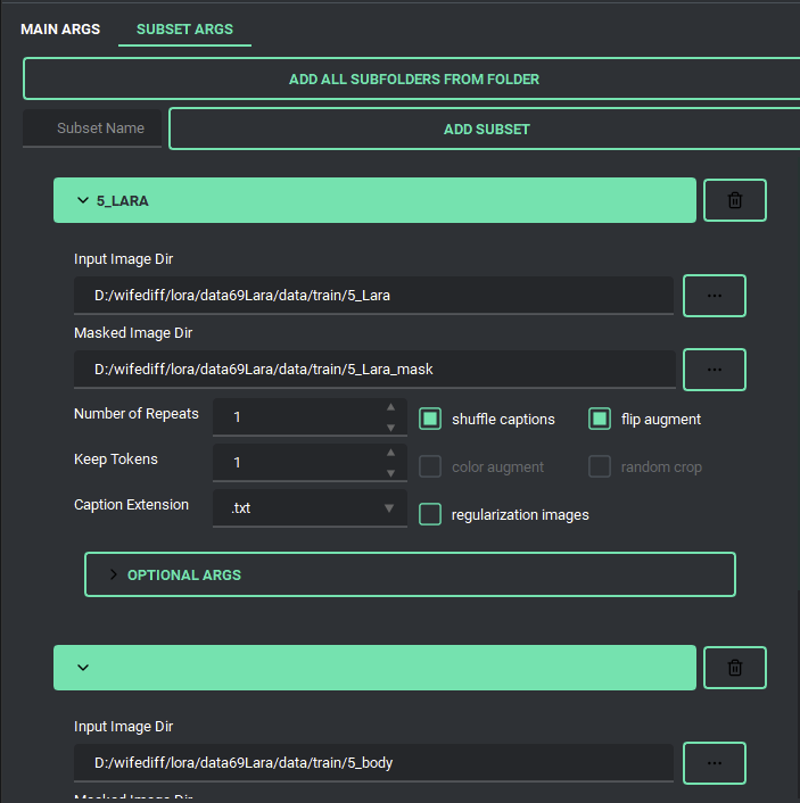

In the example below I tagged 6 outfits; the misc folder has, well, misc outfits without enough images to be viable. I adjusted the repetitions depending on the number of images inside each folder to try to keep them balanced. Check the Repetitions and Epochs section to adjust them.

So after you finish the structure, it is time to sort your images into their corresponding folders. If the shot is from above the heart, I recommend dumping it into misc, as it won't provide much info about the outfit. Those partial outfits in misc should be tagged with their visible parts rather than the outfit they belong to. That is, unless they show a special part not visible in the lower part of the outfit; in this case, leave them as is, treat them as a normal part of the outfit, and just be careful not to overload the outfit with mugshots. Random suggestion: for outfits, adding a couple of full body headless shots (to make the character unrecognizable) tagged with "1girl, outfitname, etc" does wonders to separate the concept of the outfit from the character.

===============================================================

Repetitions and Epochs

Setting repetitions and epochs can be a case of which came first, the chicken or the egg. The most important factors are the dim, alpha, optimizer, learning rate, number of epochs and repetitions. Everyone has their own recipe for fine tuning; some are better, some are worse.

Mine is as generic as it can be, and it normally gives good results when generating at around .7 weight. I have updated this part, as the ip noise gamma and min snr gamma options seem to considerably decrease the number of steps needed. For Prodigy, that means 2000 to 3000 total steps for SD1.5 and 1500 to 2500 for SDXL.

Remember that kohya-based training scripts calculate the number of steps by dividing them by the batch size. When I talk about steps, I mean the total ones, without dividing by the batch size.

Now, what does steps per epoch actually mean? It is just the number of repeats times the number of images in a folder. Suppose I am making a character LORA with 3 outfits. I have 100 outfit1 images, 50 outfit2 images and 10 outfit3 images.

I would set the folders repetitions to be:

3_outfit1 = 3 reps * 100 img =300 steps per epoch

6_outfit2 = 6 reps * 50 img =300 steps per epoch

30_outfit3 = 30 reps * 10 img =300 steps per epoch

Remember you are also training the main concept when doing this; in the case above, this results in the character being trained for 900 steps per epoch. So be careful not to overcook (or overfit) it. The more overlapping concepts you add, the higher the risk of overcooking the LORA.
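The arithmetic above as a sketch: steps per epoch per folder is repeats times images, the character gets the sum because it appears in every folder, and the count kohya-based scripts display is roughly that total divided by the batch size. Rounding up in that last step is an approximation on my part, since bucketing can shift the real number a little.

```python
import math

# (repeats, image_count) per folder, taken from the 3-outfit example above.
folders = {
    "3_outfit1": (3, 100),
    "6_outfit2": (6, 50),
    "30_outfit3": (30, 10),
}


def steps_per_epoch(folder_specs):
    """Steps each folder contributes per epoch: repeats * images."""
    return {name: reps * images for name, (reps, images) in folder_specs.items()}


def displayed_steps(total_steps, batch_size):
    """Approximate step count a kohya-based script shows for a batch size."""
    return math.ceil(total_steps / batch_size)


per_folder = steps_per_epoch(folders)
character_steps = sum(per_folder.values())  # the character is in every folder
print(per_folder)       # {'3_outfit1': 300, '6_outfit2': 300, '30_outfit3': 300}
print(character_steps)  # 900 steps per epoch for the character
print(displayed_steps(character_steps, 2))  # 450 with batch size 2
```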

This can be mitigated by removing the relation between the character and the outfit. Take outfit1 from above: I could take 50 of the images, remove the character tag and replace it with the original description tags (hair color, eye color, etc.), so that when outfit1 is being trained, the character is not. Another alternative that somewhat works is using scale normalization, which "flattens" values that shoot too far above the rest, limiting overcooking a bit. Using the Prodigy optimizer also makes things less prone to overcooking. Finally, when everything else fails, regularization images can help mitigate this issue. Take the example above: you split the outfit images, tagging half with the character trigger and the other half with the individual tags. Using regularization images with those tags will return them to "a base state" of the model, allowing you to continue training them without overfitting them, thus effectively only training the outfit trigger.
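The first mitigation, dropping the character trigger from part of an outfit's captions, is easy to script. This is a hypothetical helper working on comma-separated caption strings (one per image, as the .txt caption files hold them); the trigger name knxochar and the description tags are made-up examples. It rewrites the first half of the matching captions, so shuffle the list or pick the images yourself if the order matters.

```python
def split_tags(caption):
    """Split a comma-separated caption into stripped, non-empty tags."""
    return [tag.strip() for tag in caption.split(",") if tag.strip()]


def decouple_character(captions, trigger, description_tags, fraction=0.5):
    """Swap the character trigger for description tags in part of the captions.

    Rewrites the first `fraction` of the captions that contain `trigger`;
    the input list is left unchanged and a new list is returned.
    """
    result = list(captions)
    with_trigger = [i for i, c in enumerate(captions) if trigger in split_tags(c)]
    for i in with_trigger[: int(len(with_trigger) * fraction)]:
        tags = []
        for tag in split_tags(captions[i]):
            if tag == trigger:
                # Put the description tags where the trigger was, skipping dupes.
                for desc in description_tags:
                    if desc not in tags:
                        tags.append(desc)
            elif tag not in tags:
                tags.append(tag)
        result[i] = ", ".join(tags)
    return result


captions = ["knxochar, outfit1, smile", "knxochar, outfit1, standing"]
print(decouple_character(captions, "knxochar", ["blonde hair", "blue eyes"]))
# prints ['blonde hair, blue eyes, outfit1, smile', 'knxochar, outfit1, standing']
```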

Warning: don't use scale normalization with Prodigy, as they are not compatible.

For the main concept (the character in the example), I would recommend keeping it below 6000 total steps with AdamW or 3000 with Prodigy; above that, you need to start tweaking the dataset to keep it down and prevent it from burning.

Remember these are all approximations, and if you have 10 more images for one outfit you can leave it be. If your LORA is a bit overcooked, most of the time it can be compensated for by lowering the weight when generating. If your LORA starts deep-frying images at less than .5 weight, I would definitely retrain; it will still be usable, but the usability range becomes too narrow. There's also a rebase script around to change the weight, so you could theoretically set .5 to become 1.1, thus increasing the usability range.

Recipe

I use 8 epochs, dim 32, alpha 16 and Prodigy with a learning rate of 1, with 300 steps per epoch per concept and 100 steps per sub-concept (normally outfits). Alternatively, use dim 32 and AdamW with a learning rate of .0001, and double the step quantities used for Prodigy. (Don't use decimals; always round the repetitions down.) It got overcooked? Lower the repetitions/learning rate or, with AdamW, enable scale normalization.
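The recipe boils down to choosing repetitions so each folder lands near its per-epoch target, rounding down. The sketch below encodes the numbers above (300/100 steps per epoch for Prodigy, doubled for AdamW, 8 epochs); the folder names and image counts in the example are made up, and never going below 1 repetition is my own assumption for folders with more images than the target.

```python
# Per-epoch step targets from the recipe; AdamW doubles the Prodigy numbers.
TARGETS = {
    "prodigy": {"concept": 300, "sub_concept": 100},
    "adamw": {"concept": 600, "sub_concept": 200},
}
EPOCHS = 8


def repeats_for(image_count, target_steps):
    """Repetitions for a folder's per-epoch target, rounded down."""
    if image_count <= 0:
        raise ValueError("folder has no images")
    # Assumption: never go below 1 repetition, even for very large folders.
    return max(1, target_steps // image_count)


def plan_folders(folders, optimizer="prodigy"):
    """Map {name: (image_count, kind)} to {'reps_name': steps_per_epoch}."""
    targets = TARGETS[optimizer]
    result = {}
    for name, (image_count, kind) in folders.items():
        reps = repeats_for(image_count, targets[kind])
        result[f"{reps}_{name}"] = reps * image_count
    return result


# Hypothetical dataset: 70 general character images, 30 of one outfit.
plan = plan_folders({"character": (70, "concept"), "outfit1": (30, "sub_concept")})
print(plan)                         # {'4_character': 280, '3_outfit1': 90}
print(sum(plan.values()) * EPOCHS)  # 2960 total steps over 8 epochs
```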

WARNING: Using screencaps, while not bad per se, is more prone to overbaking due to the homogeneity of such datasets. I have found this to be a problem especially with old anime; when captioning, you will notice it normally gets the retro, 80s style and 90s tags. In this case, with Prodigy, they seem to train absurdly faster and do a good job with 1000~2000 total steps rather than the usual 2000~3000.

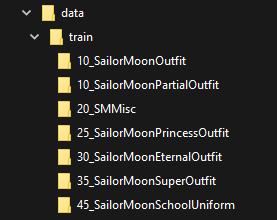

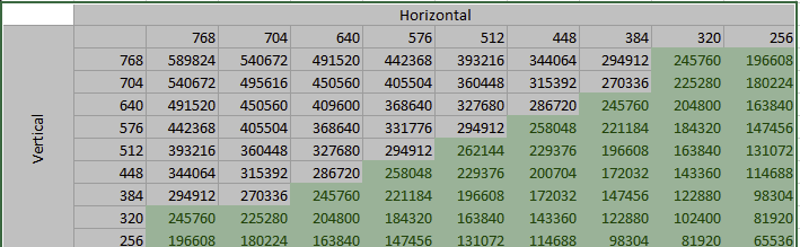

KNXO's shitty repetitions table

Below I add a crappy, hard-to-read table; I'll probably attach a larger version in the downloads to the right as a zip file. Each row is a scenario, and an NA value means that it is unneeded. For example, the first row is for a simple one-character, one-outfit LORA. This is for dim 32, alpha 16.

*By simple outfit I mean just that: shirt, pants, skirts without frills or weird patterns.

*The character column represents a misc folder full of random images of the character in untrainable outfits (too few images of the outfit, or trained outfits missing too many of their parts) and face shots (as well as nudes). It is good for the LORA to have a baseline shape of the character separate from its outfits. In this folder, outfits should be tagged naturally, i.e. in pieces as the autotagger normally does, without pruning the individual parts.

*The outfit columns represent folders with complete and clear view of the outfit and tagged as such. These images may or may not also be tagged with the character trigger if it applies(for example: mannequins wearing the outfit should not be tagged with the character trigger, only with the outfit trigger).

If your desired scenario is not explicitly there, interpolate it. For example, if you have a two-character LORA with 3 outfits per character, just apply scenario 3 to both characters (i.e. reduce the character reps of both to 400). The table roughly gives the required repetitions for a 1 or 2 character LORA with up to 4 outfits (2 complex and 2 simple ones). It also allows for substituting a base outfit plus a derivative outfit, for example an outfit with a variant with a scarf or a jacket or something simple like that.

Beware: this table is completely baseless empirical drivel on my part. That it has worked for me is mere coincidence, and it still needs some tweaking on a per-case basis, but I mostly stick to it. These values gravitate towards the high end, so you may end up choosing epochs earlier than the last one. If I were to guess, your best bet would be epoch 5 or 6.

Beware 2: Older anime seems to need roughly 1/3 of the training steps that contemporary anime does, because "reasons" (I am not exactly sure, but it is likely due to NAI's original dataset).

Beware 3: the ip noise gamma and min snr gamma options seem to considerably decrease the number of steps needed, so try multiplying the values by 3/5, e.g. 500*3/5=300 steps.

Beware 4: for SDXL, try multiplying the values by 1/2, e.g. 500*1/2=250 steps.
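The Beware notes amount to multiplying the table values by a few factors. The sketch below applies them and rounds down; stacking several factors by multiplying them together is my assumption, since the notes only give them one at a time. Fractions keep the arithmetic exact so 500*3/5 really comes out as 300.

```python
from fractions import Fraction

# Empirical factors from the notes above.
OLD_ANIME = Fraction(1, 3)      # Beware 2: older anime needs ~1/3 of the steps
NOISE_OPTIONS = Fraction(3, 5)  # Beware 3: ip noise gamma + min snr gamma
SDXL = Fraction(1, 2)           # Beware 4: SDXL


def adjust_table_value(value, old_anime=False, noise_options=False, sdxl=False):
    """Scale a table value by every factor that applies, rounding down."""
    factor = Fraction(1)
    if old_anime:
        factor *= OLD_ANIME
    if noise_options:
        factor *= NOISE_OPTIONS
    if sdxl:
        factor *= SDXL
    return int(value * factor)  # int() floors positive Fractions


print(adjust_table_value(500, noise_options=True))  # 300
print(adjust_table_value(500, sdxl=True))           # 250
```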

===============================================================

Captioning

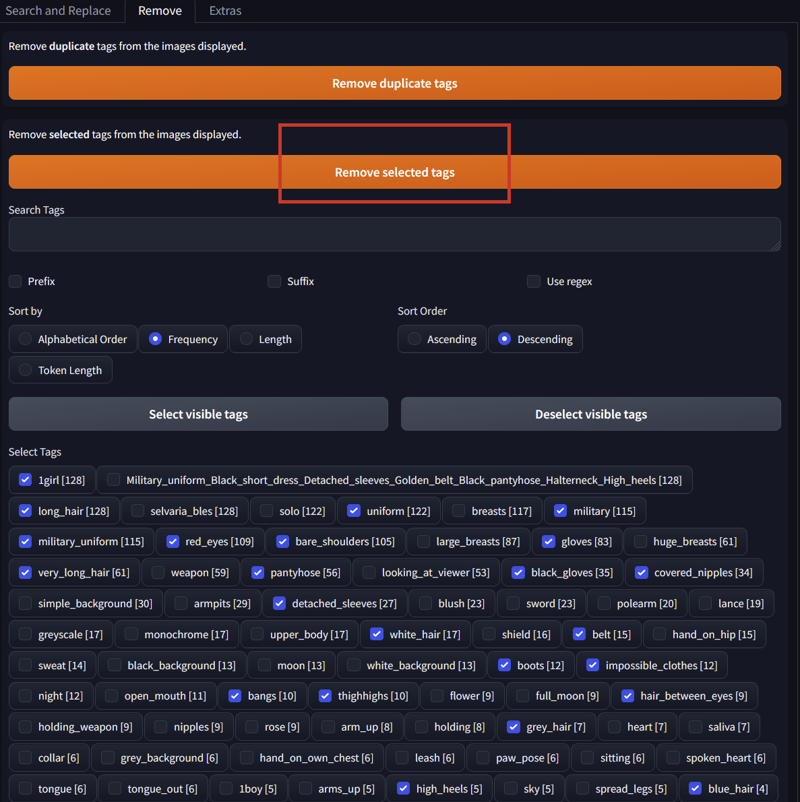

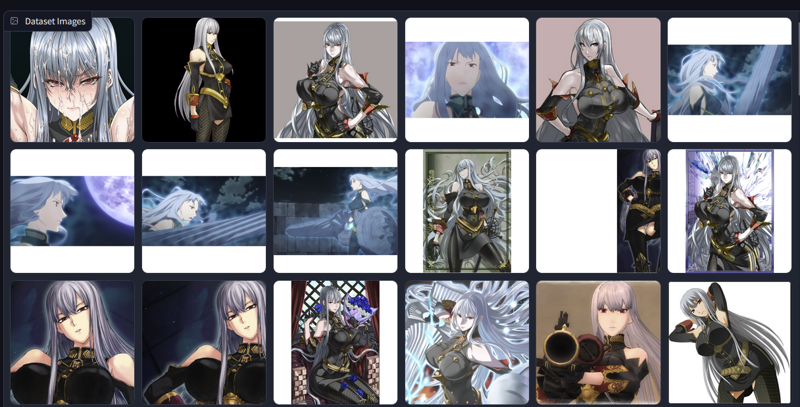

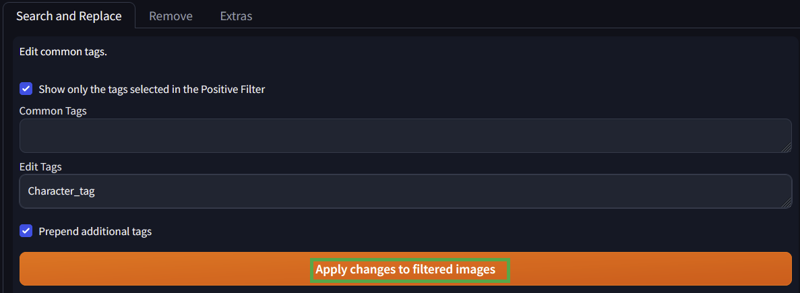

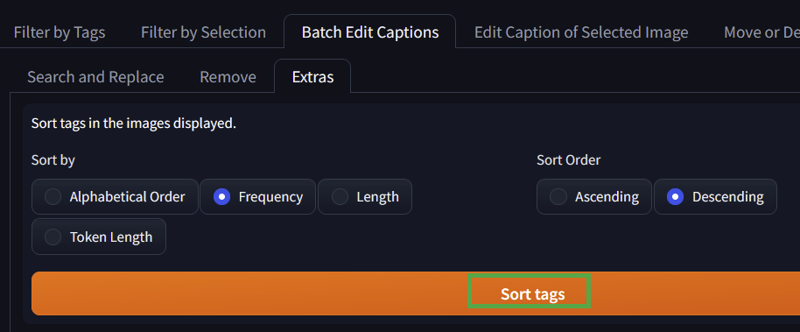

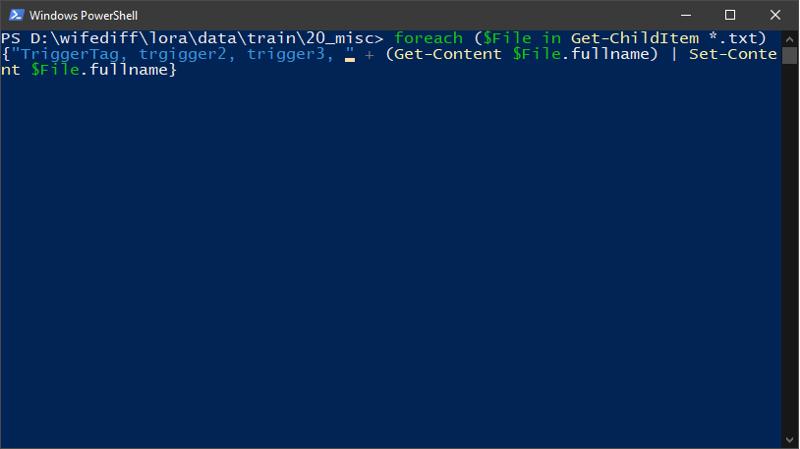

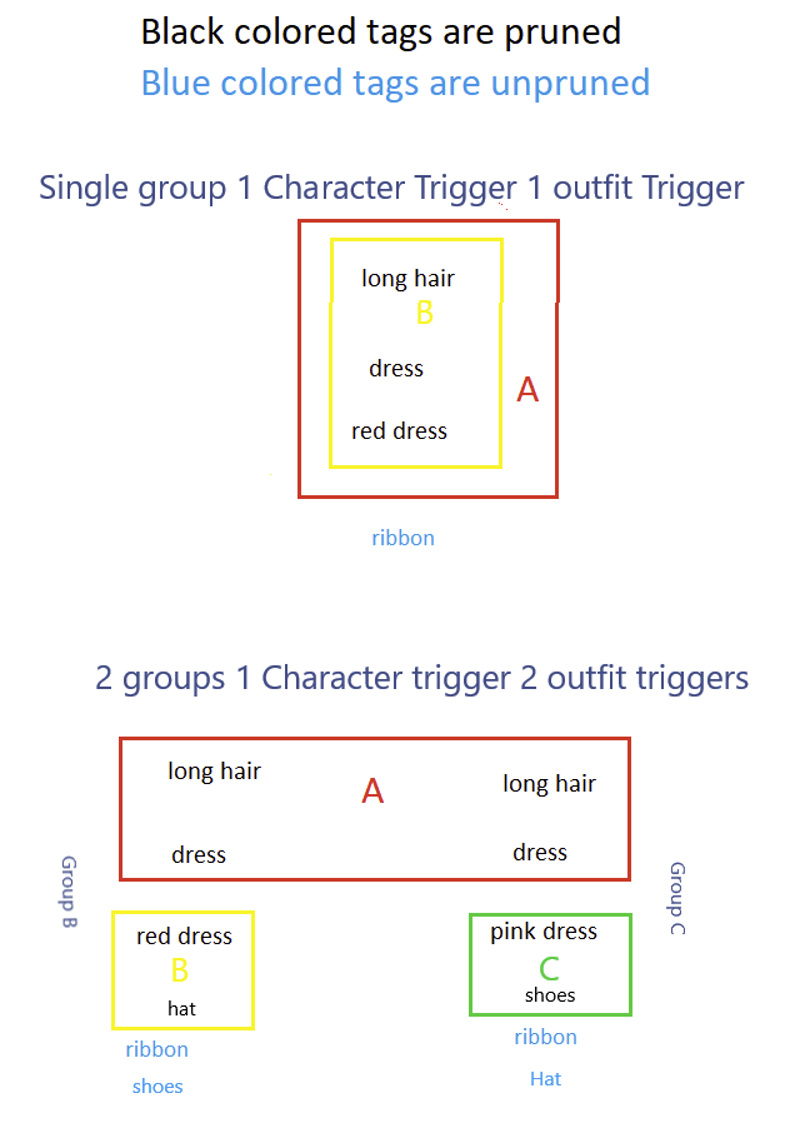

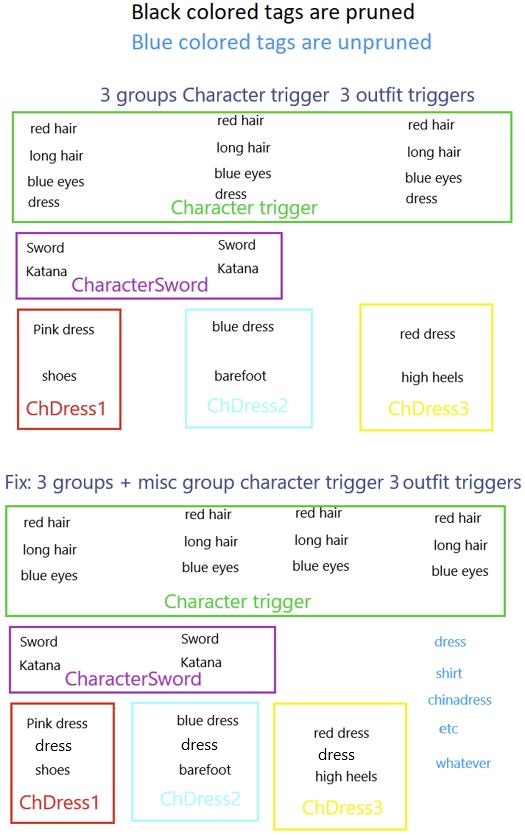

So all your images are clean and at a nice 512x512 size; the next step is captioning. Captioning can be as shallow as a puddle or as deep as the Mariana Trench. Captioning (adding tags) and pruning (deleting tags) are the way we create triggers. A trigger is a custom tag which has absorbed (for lack of a better word) the concepts of the pruned tags. For anime characters, it is recommended to use the WD1.4 vit-v2 tagger, which uses danbooru style tagging. The best way I have found is to use the stable-diffusion-webui-dataset-tag-editor extension for A1111 (look for it in the extensions list), which includes a tag manager and the waifu diffusion tagger.

Go to the stable-diffusion-webui-dataset-tag-editor's A1111 tab, select a tagger in the dataset load settings and select "use tagger if empty". Then simply load the directory of your images, and after everything finishes tagging, click save. I recommend selecting two of the WD1.4 taggers; it will create duplicate tags, but then you can go to batch edit captions->remove->remove duplicates and get maximum tag coverage.
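What "remove duplicates" and pruning do to a caption can be sketched in a few lines. This is only an illustration of the idea, not the tag editor's code: it merges two taggers' outputs keeping the first occurrence of each tag, removes the tags you want the trigger to absorb, and puts the trigger first (placing it first is a common convention I'm assuming here). The trigger knxochar and the tags are made-up examples.

```python
def merge_tagger_outputs(*tag_lists):
    """Combine several taggers' tags, keeping each tag's first occurrence."""
    seen = set()
    merged = []
    for tags in tag_lists:
        for tag in tags:
            tag = tag.strip()
            if tag and tag not in seen:
                seen.add(tag)
                merged.append(tag)
    return merged


def make_trigger_caption(tags, trigger, pruned):
    """Drop the tags the trigger should absorb and put the trigger first."""
    pruned = set(pruned)
    kept = [t for t in tags if t not in pruned and t != trigger]
    return ", ".join([trigger] + kept)


tagger_a = ["1girl", "blonde hair", "smile"]
tagger_b = ["1girl", "blue eyes", "smile", "outdoors"]
tags = merge_tagger_outputs(tagger_a, tagger_b)
print(tags)  # ['1girl', 'blonde hair', 'smile', 'blue eyes', 'outdoors']
print(make_trigger_caption(tags, "knxochar", {"blonde hair", "blue eyes"}))
# prints knxochar, 1girl, smile, outdoors
```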