Objective

Generate videos with SDXL checkpoints and AnimateDiff.

Software

Please check my previous articles for installation:

Stable Diffusion: https://civitai.com/articles/3725/stable-diffusion-with-automatic-a1111-how-to-install-and-run-on-your-computer

AnimateDiff: https://civitai.com/articles/3736/stable-diffusion-extension-animatediff-to-generate-videos

ReActor (improves faces): https://civitai.com/articles/3932/stable-diffusion-testing-reactor-face-swap-plug-in

Checkpoints

DynaVision XL ( https://civitai.com/models/122606/dynavision-xl-all-in-one-stylized-3d-sfw-and-nsfw-output-no-refiner-needed )

Juggernaut XL ( https://civitai.com/models/133005?modelVersionId=357609 )

Pony Diffusion V6 XL ( https://civitai.com/models/257749?modelVersionId=290640 )

AnimateDiff

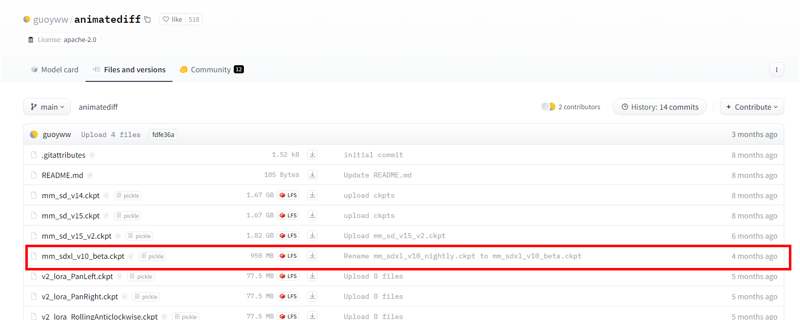

Download the model mm_sdxl_v10_beta.ckpt from https://huggingface.co/guoyww/animatediff/tree/main

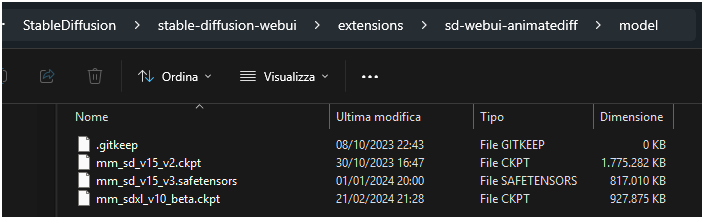

Copy the file into:

<StableDiffusion folder>\stable-diffusion-webui\extensions\sd-webui-animatediff\model

The same folder should also contain the motion modules for SD 1.5 models.
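If you prefer to script this step, here is a minimal Python sketch of the copy. The function names and the example paths are my own (hypothetical), and the folder layout is the one given above, so adjust both to your installation. The download itself could also be scripted with `hf_hub_download` from the `huggingface_hub` package; that part is not shown here.

```python
import shutil
from pathlib import Path

# Folder layout from the article, relative to <StableDiffusion folder>
MODEL_SUBDIR = Path("stable-diffusion-webui") / "extensions" / "sd-webui-animatediff" / "model"


def install_motion_module(checkpoint: Path, sd_root: Path) -> Path:
    """Copy `checkpoint` into the AnimateDiff model folder and return the new path."""
    if not checkpoint.is_file():
        raise FileNotFoundError(f"motion module not found: {checkpoint}")
    target_dir = sd_root / MODEL_SUBDIR
    target_dir.mkdir(parents=True, exist_ok=True)  # create the folder if it is missing
    target = target_dir / checkpoint.name
    shutil.copy2(checkpoint, target)  # copy2 also keeps the file timestamps
    return target


def list_motion_modules(sd_root: Path) -> list[str]:
    """Names of the .ckpt/.safetensors files in the AnimateDiff model folder."""
    folder = sd_root / MODEL_SUBDIR
    return sorted(p.name for p in folder.iterdir() if p.suffix in {".ckpt", ".safetensors"})


# Example (hypothetical paths, replace with your own):
# install_motion_module(Path.home() / "Downloads" / "mm_sdxl_v10_beta.ckpt", Path(r"C:\StableDiffusion"))
# list_motion_modules(Path(r"C:\StableDiffusion"))
```

After the copy, `list_motion_modules` should show `mm_sdxl_v10_beta.ckpt` next to the SD 1.5 modules already in the folder.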

TESTS

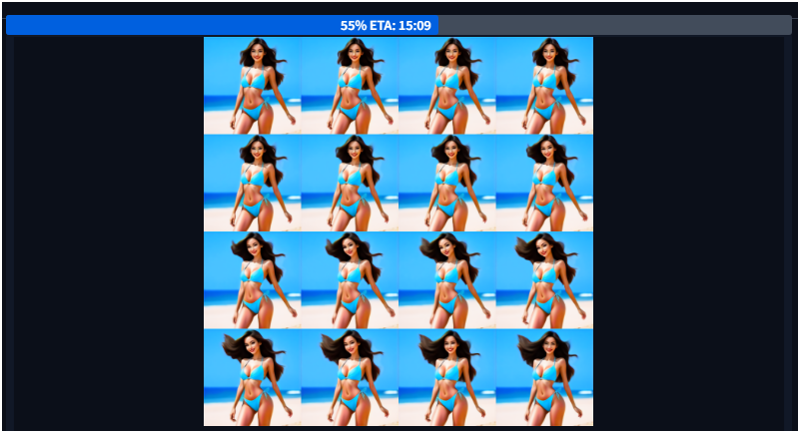

My idea is to generate the video at a resolution of 1024x1024, using the same prompt with 3 different checkpoints.

Prompt

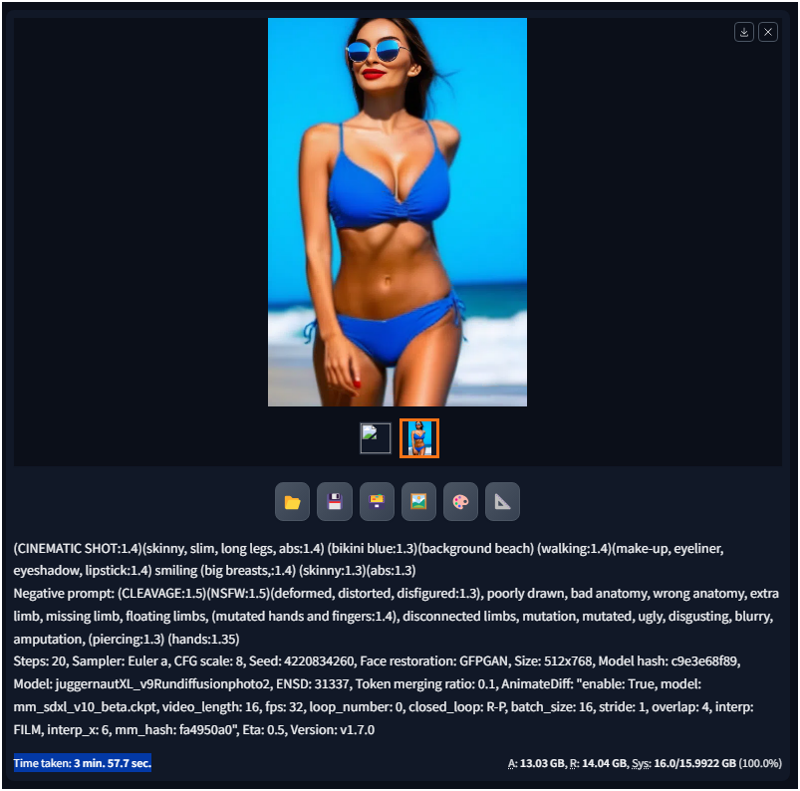

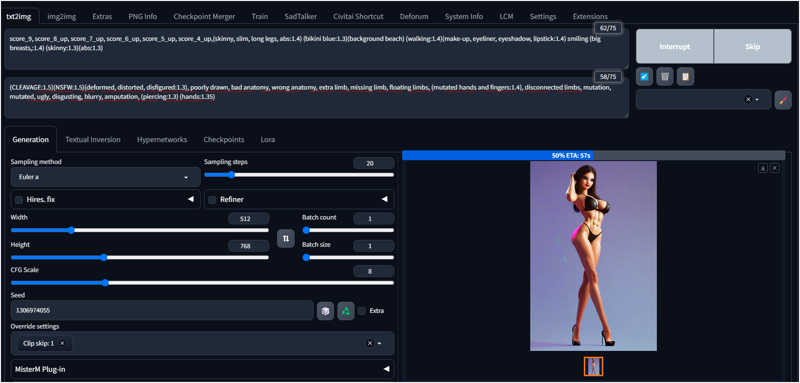

(CINEMATIC SHOT:1.4)(skinny, slim, long legs, abs:1.4) (bikini blue:1.3)(background beach) (walking:1.4)(make-up, eyeliner, eyeshadow, lipstick:1.4) smiling (big breasts,:1.4) (skinny:1.3)(abs:1.3)

Negative

(CLEAVAGE:1.5)(NSFW:1.5)(deformed, distorted, disfigured:1.3), poorly drawn, bad anatomy, wrong anatomy, extra limb, missing limb, floating limbs, (mutated hands and fingers:1.4), disconnected limbs, mutation, mutated, ugly, disgusting, blurry, amputation, (piercing:1.3) (hands:1.35)
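Both prompts use the AUTOMATIC1111 attention syntax: `(text:1.4)` multiplies the attention on that text by 1.4, and a plain `(text)` multiplies it by 1.1. As a small illustration, this hypothetical helper lists the weight each group gets. It is a simplified reading of the syntax (no nested parentheses, `[ ]` brackets or escaped parentheses), not the webui's own parser.

```python
import re

# One innermost parenthesised group, e.g. "(walking:1.4)" or "(background beach)"
GROUP = re.compile(r"\(([^()]*)\)")

# A1111 weight for a plain "(text)" group without an explicit number
DEFAULT_PAREN_WEIGHT = 1.1


def weighted_terms(prompt: str) -> list[tuple[str, float]]:
    """Return (text, weight) for each (text) or (text:weight) group in the prompt."""
    terms = []
    for inner in GROUP.findall(prompt):
        text, sep, weight = inner.rpartition(":")
        if sep:
            try:
                terms.append((text.strip(), float(weight)))
                continue
            except ValueError:
                pass  # colon not followed by a number: treat it as a plain group
        terms.append((inner.strip(), DEFAULT_PAREN_WEIGHT))
    return terms
```

For example, `weighted_terms("(CINEMATIC SHOT:1.4)(background beach) smiling")` returns `[('CINEMATIC SHOT', 1.4), ('background beach', 1.1)]`; words outside parentheses, like "smiling", keep the normal weight of 1.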

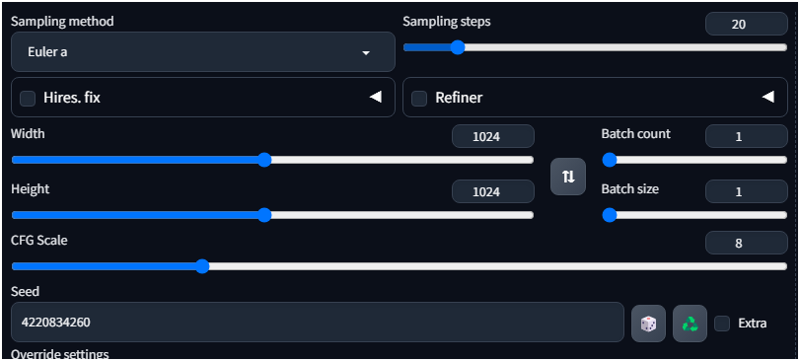

Other settings

Steps: 20

Sampler: Euler a

CFG scale: 8

Seed: 4220834260

Face restoration: GFPGAN

Size: 1024x1024

This is the output image with DynaVision XL.
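The same settings can also be sent through the webui API instead of the UI. This is a sketch, assuming the webui is started with the `--api` flag on the default address `http://127.0.0.1:7860`; `build_payload` and `txt2img` are my own helper names. The face restoration model (GFPGAN) is the one selected in the webui settings, and the AnimateDiff options are not included here: the extension takes them through `alwayson_scripts` (check its README for the exact arguments).

```python
import json
import urllib.request

# Generation settings from the article (Steps, Sampler, CFG scale, Seed, Size)
SETTINGS = {
    "steps": 20,
    "sampler_name": "Euler a",
    "cfg_scale": 8,
    "seed": 4220834260,
    "width": 1024,
    "height": 1024,
    "restore_faces": True,  # uses the face restoration model chosen in Settings (GFPGAN here)
}


def build_payload(prompt: str, negative_prompt: str) -> dict:
    """Combine the prompts with the fixed settings into a txt2img request body."""
    return {"prompt": prompt, "negative_prompt": negative_prompt, **SETTINGS}


def txt2img(payload: dict, base_url: str = "http://127.0.0.1:7860") -> dict:
    """Send the request to a webui started with --api and return the decoded JSON reply."""
    request = urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling `txt2img(build_payload(prompt, negative))` with the two prompts above returns JSON whose `images` field holds the generated images as base64 strings.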

Now, to generate the videos, I need to set up AnimateDiff.

AnimateDiff setup

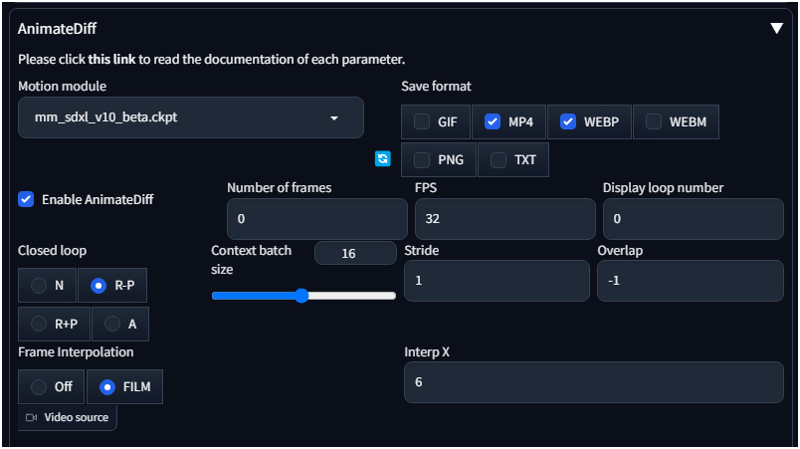

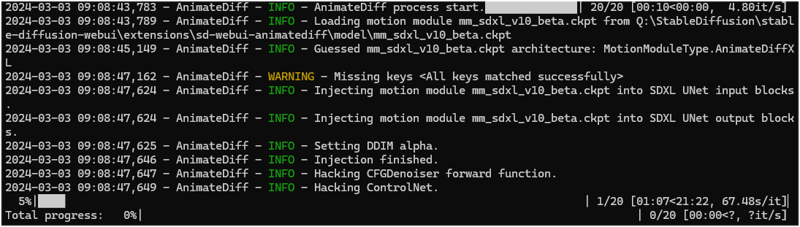

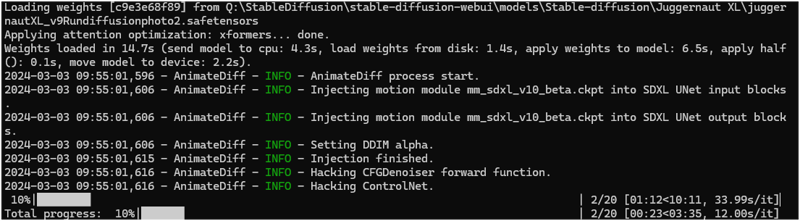

I select the module mm_sdxl_v10_beta.ckpt.

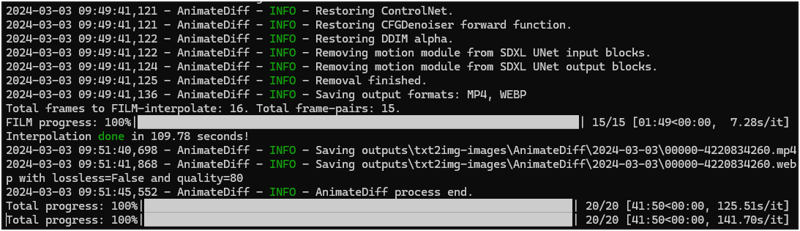

I save the output as mp4 and webp (the webp is for this article).

I like to set 32 fps because I enable the FILM interpolation option with Interp X = 6, which makes the video more fluid (see my article about this: https://civitai.com/articles/4199/stable-diffusion-videos-with-animatediff-and-deforum-interpolation).

I keep the number of frames at 16 (changing this parameter does not always work well).
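A bit of arithmetic shows why a higher fps goes together with interpolation. This sketch assumes that Interp X = 6 inserts 5 new frames between each pair of generated frames; I have not checked the exact counting rule used by the extension, so treat the numbers as an estimate. The function names are my own.

```python
def interpolated_frames(frames: int, interp_x: int) -> int:
    """Frame count after interpolation.

    ASSUMPTION: interp_x - 1 new frames are inserted between each pair of
    consecutive frames, so the first and last frames are kept once.
    """
    if frames < 1 or interp_x < 1:
        raise ValueError("frames and interp_x must be at least 1")
    return (frames - 1) * interp_x + 1


def duration_seconds(frames: int, fps: int) -> float:
    """Playback length of a clip with the given number of frames."""
    return frames / fps


generated = 16  # "Number of frames" in the AnimateDiff panel
fps = 32
total = interpolated_frames(generated, interp_x=6)
print(f"without FILM: {duration_seconds(generated, fps):.2f} s")
print(f"with FILM x6: {total} frames, {duration_seconds(total, fps):.2f} s")
```

Under this assumption, the 16 generated frames alone would last only 0.5 s at 32 fps, while the 91 interpolated frames last about 2.8 s, so the high fps does not make the clip too short.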

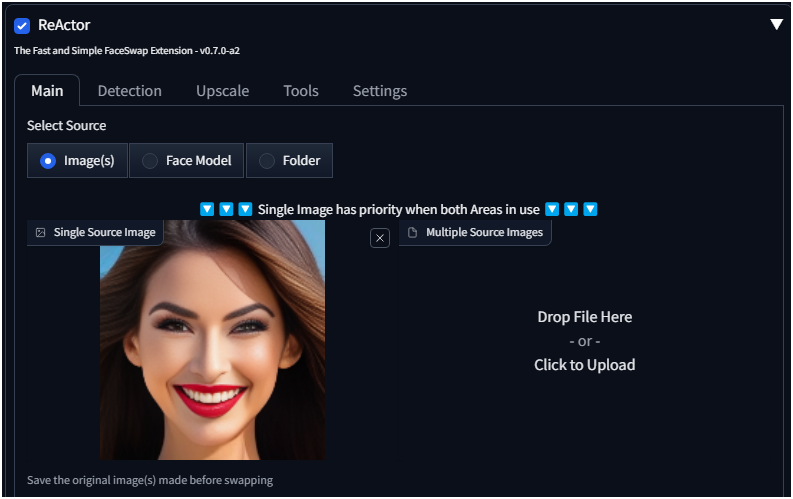

ReActor

In ReActor I keep the default settings and use the face from the generated image as the source.

1st TEST - DynaVision XL 👍

I run the video generation with the previous settings. I have an NVIDIA RTX 4080 graphics card, but the process takes a really long time.

I think it is quite a bit better to generate a video with SD 1.5, split it into images and rescale them (as in my article: https://civitai.com/articles/3501/ai-stable-diffusion-animated-gif-making-video-more-realistic), but what I want is to use the XL checkpoints and LoRAs, because text and graphics are better.

Very, very slow...

It took about 40 minutes 👎.

(the webp quality is poor; the mp4 is much better)

2nd TEST - Juggernaut XL 👍

Because it takes too long, in this test I change the size to 512x768 and disable ReActor.

With the lower resolution the speed is much better: about 4 minutes for my video.
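Here is a rough comparison of the first two tests, using the times I measured. Keep in mind that the runs are not a controlled benchmark: the checkpoint changed and ReActor was removed as well, so the resolution is not the only difference. The helper names are my own.

```python
def pixel_count(width: int, height: int) -> int:
    """Number of pixels per frame."""
    return width * height


def compare_runs(size_a, minutes_a, size_b, minutes_b):
    """Return (pixel ratio, time ratio) of run A relative to run B."""
    pixel_ratio = pixel_count(*size_a) / pixel_count(*size_b)
    time_ratio = minutes_a / minutes_b
    return pixel_ratio, time_ratio


# Approximate figures from the 1st test (1024x1024, ~40 min) and 2nd test (512x768, ~4 min)
px, t = compare_runs((1024, 1024), 40, (512, 768), 4)
print(f"{px:.2f}x the pixels, {t:.1f}x the time")  # prints "2.67x the pixels, 10.0x the time"
```

So about 2.7 times the pixels per frame came with roughly 10 times the generation time in my runs.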

(the webp quality is poor; the mp4 is much better)

3rd TEST - Pony Diffusion V6 XL 👎

I used the same settings for Pony Diffusion V6 XL, but it doesn't work well.

I changed the prompt and the seed to check the image generation for this checkpoint, but it doesn't work with the AnimateDiff model.

Test Result

The AnimateDiff beta model also works with some of the XL checkpoints, but if the resolution is higher than 768 it takes ages to process the images.

I hope you enjoyed my article.